Francesco Maria Donini

Adherence and Constancy in LIME-RS Explanations for Recommendation

Sep 05, 2021

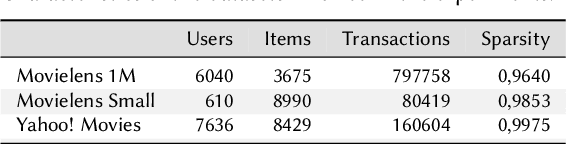

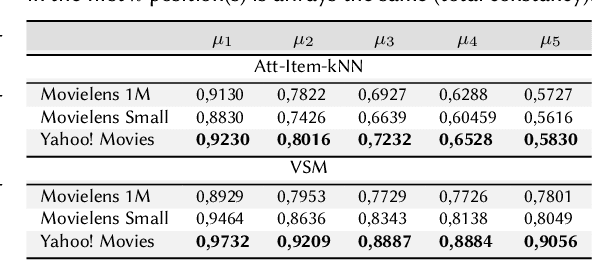

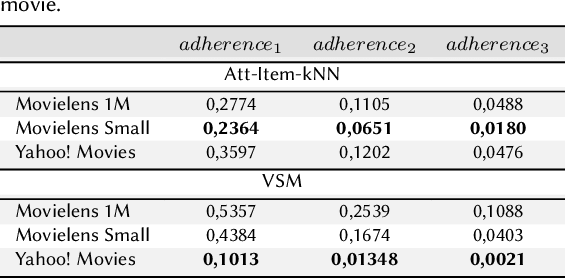

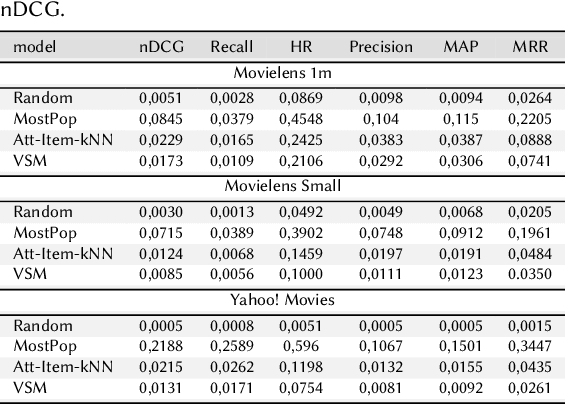

Abstract: Explainable recommendation has attracted a lot of attention due to a renewed interest in explainable artificial intelligence. In particular, post-hoc approaches have proved to be the most easily applicable to increasingly complex recommendation models, which are then treated as black boxes. Recent literature has shown that post-hoc explanations based on local surrogate models suffer from problems related to the robustness of the approach itself. This consideration becomes even more relevant in human-related tasks like recommendation. The explanation also has the arduous task of enhancing increasingly relevant aspects of user experience such as transparency or trustworthiness. This paper aims to show that the characteristics of a classical post-hoc model based on surrogates are strongly model-dependent and that such a model does not prove to be accountable for the explanations generated.

Elliot: a Comprehensive and Rigorous Framework for Reproducible Recommender Systems Evaluation

Mar 03, 2021

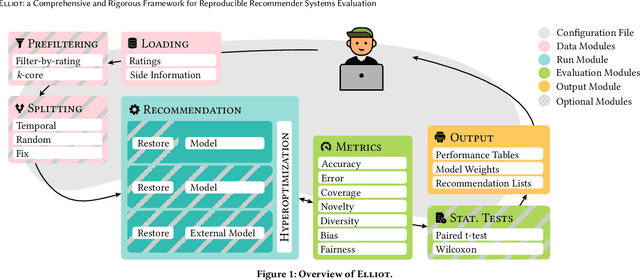

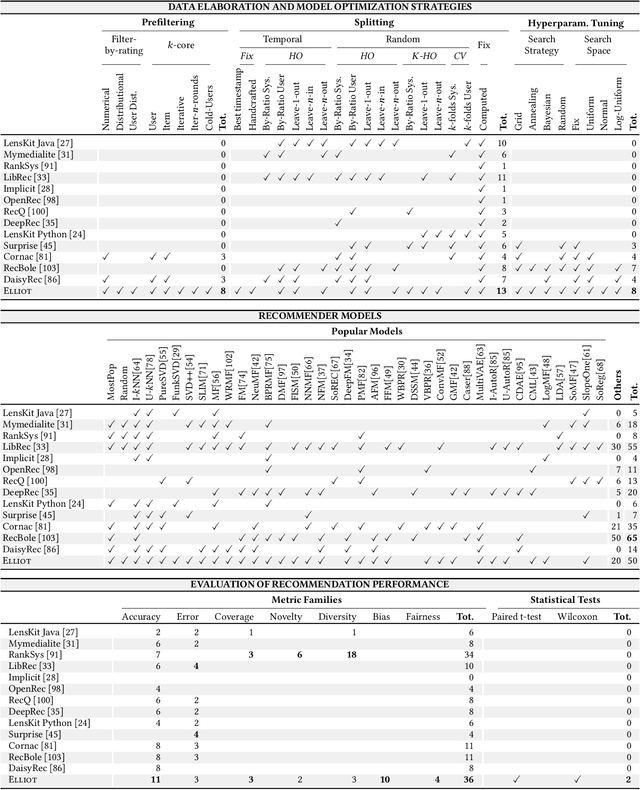

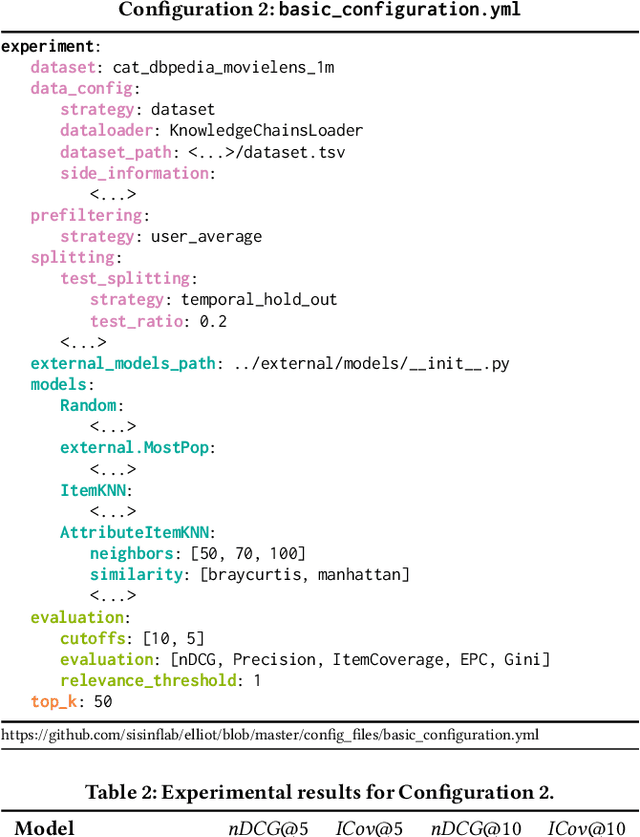

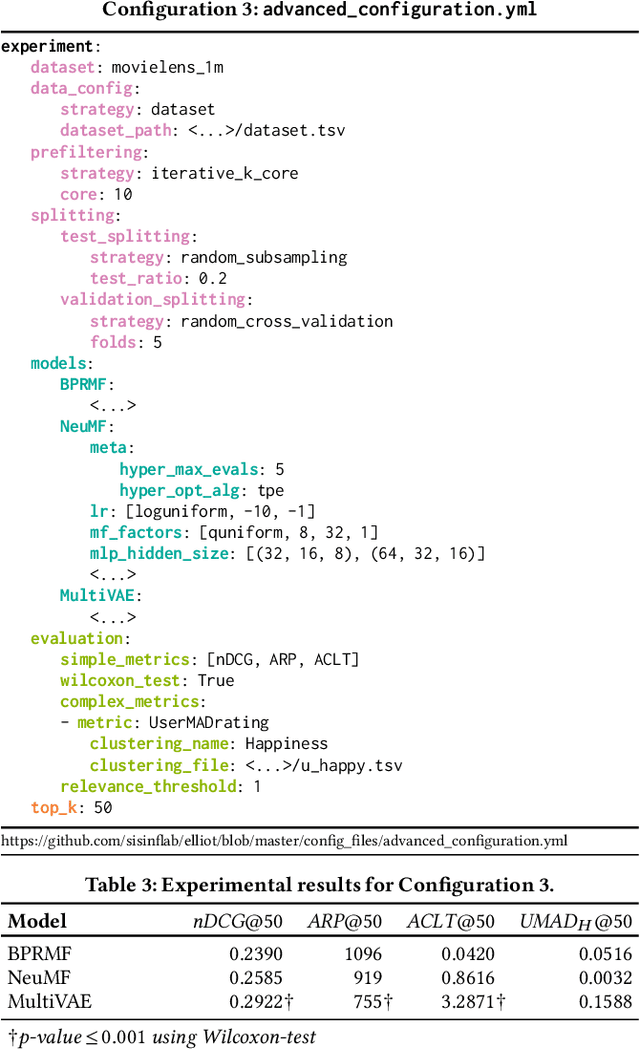

Abstract: Recommender systems have been shown to be an effective way to alleviate the over-choice problem and provide accurate and tailored recommendations. However, the impressive number of proposed recommendation algorithms, splitting strategies, evaluation protocols, metrics, and tasks has made rigorous experimental evaluation particularly challenging. Puzzled and frustrated by the continuous recreation of appropriate evaluation benchmarks, experimental pipelines, hyperparameter optimization, and evaluation procedures, we have developed an exhaustive framework to address such needs. Elliot is a comprehensive recommendation framework that aims to run and reproduce an entire experimental pipeline by processing a simple configuration file. The framework loads, filters, and splits the data considering a vast set of strategies (13 splitting methods and 8 filtering approaches, from temporal training-test splitting to nested K-folds Cross-Validation). Elliot optimizes hyperparameters (51 strategies) for several recommendation algorithms (50), selects the best models, compares them with the baselines providing intra-model statistics, computes metrics (36) spanning from accuracy to beyond-accuracy, bias, and fairness, and conducts statistical analysis (Wilcoxon and paired t-test). The aim is to provide researchers with a tool that eases all the experimental evaluation phases, from data reading to results collection, and makes them reproducible. Elliot is available on GitHub (https://github.com/sisinflab/elliot).
