Francesco Caravelli

How to Train Your Resistive Network: Generalized Equilibrium Propagation and Analytical Learning

Feb 03, 2026

Abstract: Machine learning is a powerful method of extracting meaning from data; unfortunately, current digital hardware is extremely energy-intensive. There is interest in an alternative analog computing implementation that could match the performance of traditional machine learning while being significantly more energy-efficient. However, it remains unclear how to train such analog computing systems while adhering to locality constraints imposed by the physical (as opposed to digital) nature of these systems. Local learning algorithms such as Equilibrium Propagation and Coupled Learning have been proposed to address this issue. In this paper, we develop an algorithm to exactly calculate gradients using a graph theoretic and analytical framework for Kirchhoff's laws. We also introduce Generalized Equilibrium Propagation, a framework encompassing a broad class of Hebbian learning algorithms, including Coupled Learning and Equilibrium Propagation, and show how our algorithm compares. We demonstrate our algorithm using numerical simulations and show that we can train resistor networks without the need for a replica or readout over all resistors, only at the output layer. We also show that under the analytical gradient approach, it is possible to update only a subset of the resistance values without a strong degradation in performance.

Neuromorphic on-chip reservoir computing with spiking neural network architectures

Jul 30, 2024

Abstract: Reservoir computing is a promising approach for harnessing the computational power of recurrent neural networks while dramatically simplifying training. This paper investigates the application of integrate-and-fire neurons within reservoir computing frameworks for two distinct tasks: capturing chaotic dynamics of the Hénon map and forecasting the Mackey-Glass time series. Integrate-and-fire neurons can be implemented in low-power neuromorphic architectures such as Intel Loihi. We explore the impact of network topologies created through random interactions on the reservoir's performance. Our study reveals task-specific variations in network effectiveness, highlighting the importance of tailored architectures for distinct computational tasks. To identify optimal network configurations, we employ a meta-learning approach combined with simulated annealing. This method efficiently explores the space of possible network structures, identifying architectures that excel in different scenarios. The resulting networks demonstrate a range of behaviors, showcasing how inherent architectural features influence task-specific capabilities. We study the reservoir computing performance using a custom integrate-and-fire code, Intel's Lava neuromorphic computing software framework, and via an on-chip implementation in Loihi. We conclude with an analysis of the energy performance of the Loihi architecture.

Direct observation of a dynamical glass transition in a nanomagnetic artificial Hopfield network

Feb 04, 2022

Abstract: Spin glasses, generally defined as disordered systems with randomized competing interactions, are a widely investigated complex system. Theoretical models describing spin glasses are broadly used in other complex systems, such as those describing brain function, error-correcting codes, or stock-market dynamics. This wide interest in spin glasses provides strong motivation to generate an artificial spin glass within the framework of artificial spin ice systems. Here, we present the experimental realization of an artificial spin glass consisting of dipolar coupled single-domain Ising-type nanomagnets arranged onto an interaction network that replicates the aspects of a Hopfield neural network. Using cryogenic x-ray photoemission electron microscopy (XPEEM), we performed temperature-dependent imaging of thermally driven moment fluctuations within these networks and observed characteristic features of a two-dimensional Ising spin glass. Specifically, the temperature dependence of the spin glass correlation function follows a power law trend predicted from theoretical models on two-dimensional spin glasses. Furthermore, we observe clear signatures of the hard-to-observe rugged spin glass free energy in the form of sub-aging, out-of-equilibrium autocorrelations, and a transition from stable to unstable dynamics.

Projective Embedding of Dynamical Systems: uniform mean field equations

Jan 07, 2022

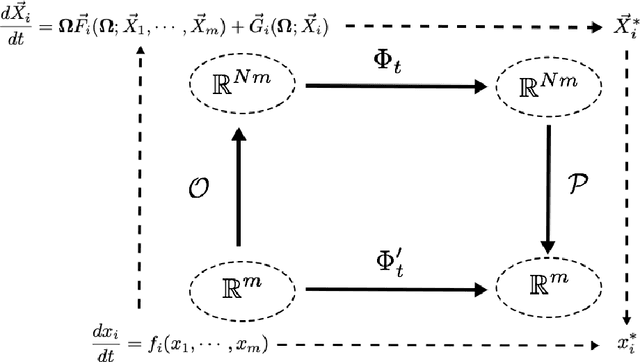

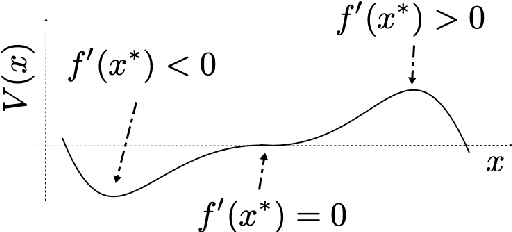

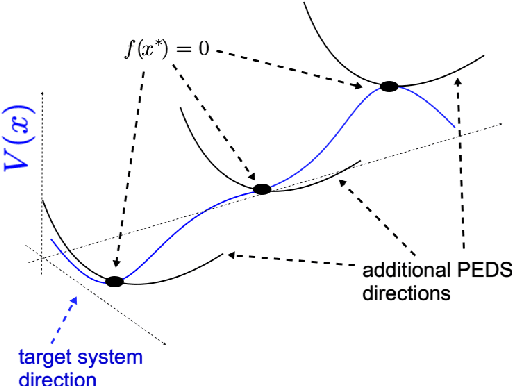

Abstract: We study embeddings of continuous dynamical systems in larger dimensions via projector operators. We call this technique PEDS, projective embedding of dynamical systems, as the stable fixed points of the dynamics are recovered via projection from the higher dimensional space. In this paper we provide a general definition and prove that for a particular type of projector operator of rank-1, the uniform mean field projector, the equations of motion become a mean field approximation of the dynamical system. While in general the embedding depends on a specified variable ordering, the same is not true for the uniform mean field projector. In addition, we prove that the original stable fixed points remain stable fixed points of the dynamics, saddle points remain saddles, but unstable fixed points become saddles.

Global minimization via classical tunneling assisted by collective force field formation

Feb 05, 2021

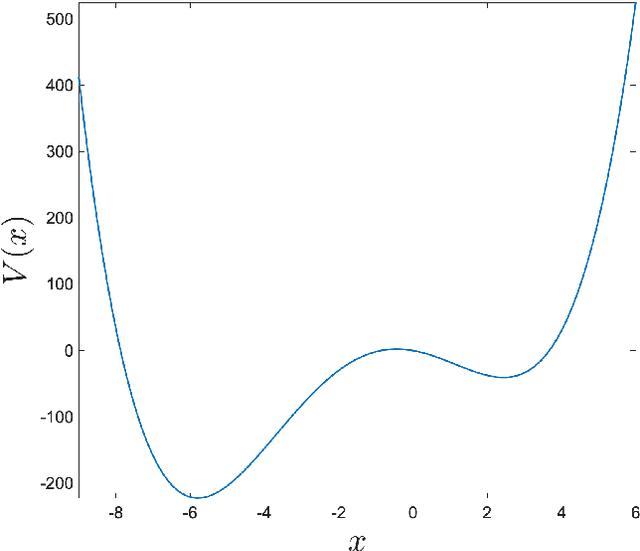

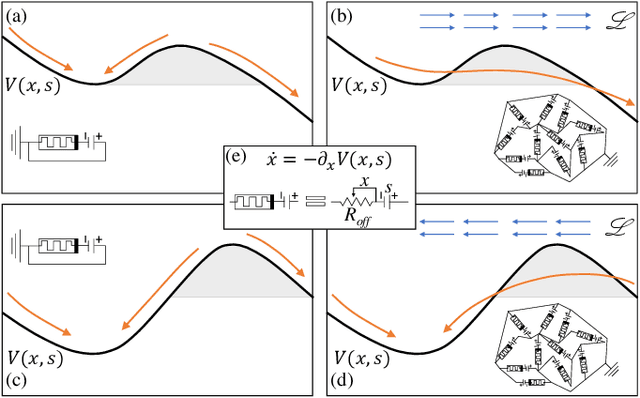

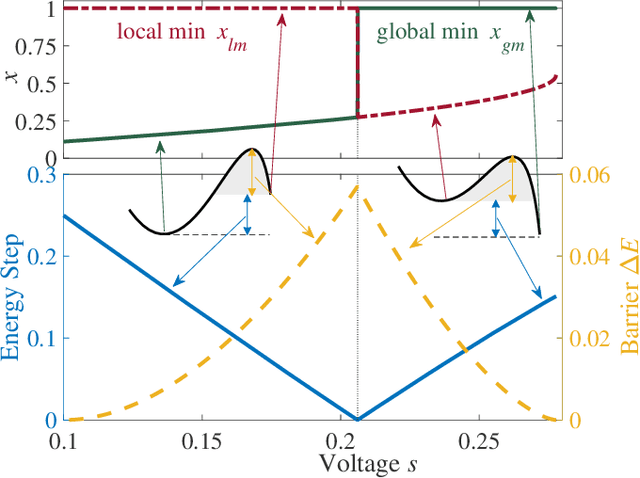

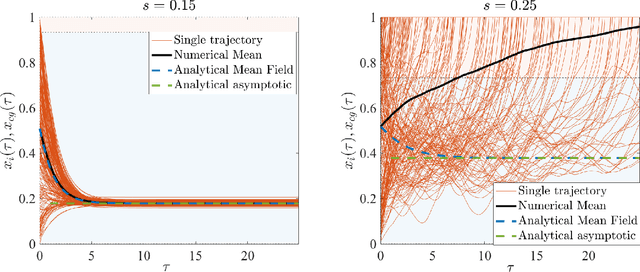

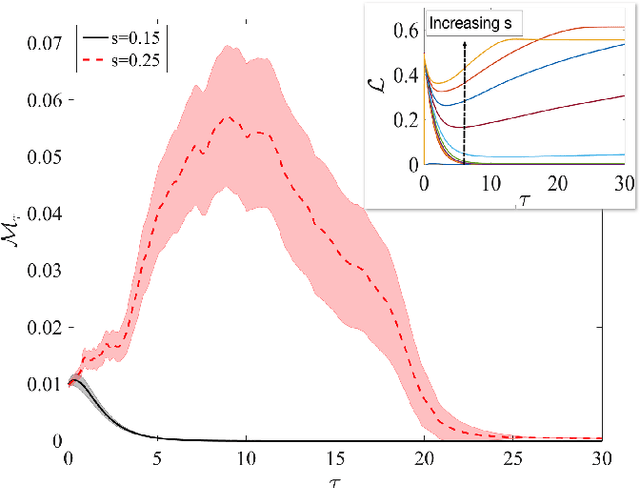

Abstract: Simple dynamical models can produce intricate behaviors in large networks. These behaviors can often be observed in a wide variety of physical systems captured by the network of interactions. Here we describe a phenomenon where the increase of dimensions self-consistently generates a force field due to dynamical instabilities. This can be understood as an unstable ("rumbling") tunneling mechanism between minima in an effective potential. We dub this collective and nonperturbative effect a "Lyapunov force" which steers the system towards the global minimum of the potential function, even if the full system has a constellation of equilibrium points growing exponentially with the system size. The system we study has a simple mapping to a flow network, equivalent to current-driven memristors. The mechanism is appealing for its physical relevance in nanoscale physics, and to possible applications in optimization, novel Monte Carlo schemes and machine learning.

The Computational Capacity of Memristor Reservoirs

Sep 04, 2020

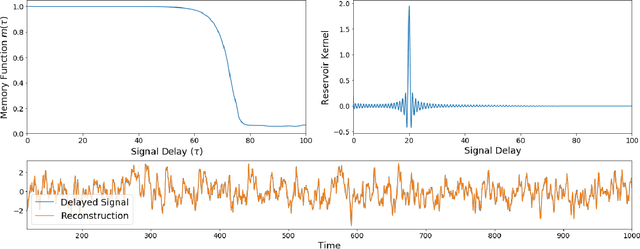

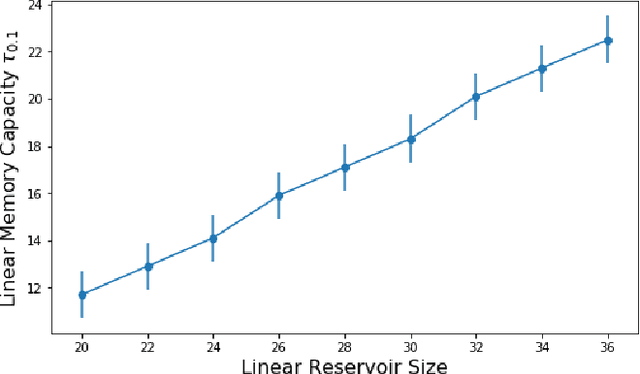

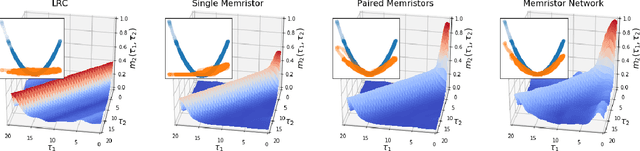

Abstract: Reservoir computing is a machine learning paradigm in which a high-dimensional dynamical system, or *reservoir*, is used to approximate and perform predictions on time series data. Its simple training procedure allows for very large reservoirs that can provide powerful computational capabilities. The scale, speed and power-usage characteristics of reservoir computing could be enhanced by constructing reservoirs out of electronic circuits, but this requires a precise understanding of how such circuits process and store information. We analyze the feasibility and optimal design of such reservoirs by considering the equations of motion of circuits that include both linear elements (resistors, inductors, and capacitors) and nonlinear memory elements (called memristors). This complements previous studies, which have examined such systems through simulation and experiment. We provide analytic results regarding the fundamental feasibility of such reservoirs, and give a systematic characterization of their computational properties, examining the types of input-output relationships that may be approximated. This allows us to design reservoirs with optimal properties in terms of their ability to reconstruct a certain signal (or functions thereof). In particular, by introducing measures of the total linear and nonlinear computational capacities of the reservoir, we are able to design electronic circuits whose total computation capacity scales linearly with the system size. Comparison with conventional echo state reservoirs shows that these electronic reservoirs can match or exceed their performance in a form that may be directly implemented in hardware.
