Florian Vollnhals

DiffRenderGAN: Addressing Training Data Scarcity in Deep Segmentation Networks for Quantitative Nanomaterial Analysis through Differentiable Rendering and Generative Modelling

Feb 13, 2025

Abstract: Nanomaterials exhibit distinctive properties governed by parameters such as size, shape, and surface characteristics, which critically influence their applications and interactions across technological, biological, and environmental contexts. Accurate quantification and understanding of these materials are essential for advancing research and innovation. In this regard, deep learning segmentation networks have emerged as powerful tools that enable automated insights and replace subjective methods with precise quantitative analysis. However, their efficacy depends on representative annotated datasets, which are challenging to obtain due to the costly imaging of nanoparticles and the labor-intensive nature of manual annotations. To overcome these limitations, we introduce DiffRenderGAN, a novel generative model designed to produce annotated synthetic data. By integrating a differentiable renderer into a Generative Adversarial Network (GAN) framework, DiffRenderGAN optimizes textural rendering parameters to generate realistic, annotated nanoparticle images from non-annotated real microscopy images. This approach reduces the need for manual intervention and enhances segmentation performance compared to existing synthetic data methods by generating diverse and realistic data. Tested on multiple ion and electron microscopy cases, including titanium dioxide (TiO$_2$), silicon dioxide (SiO$_2$), and silver nanowires (AgNW), DiffRenderGAN bridges the gap between synthetic and real data, advancing the quantification and understanding of complex nanomaterial systems.

Synthetic Image Rendering Solves Annotation Problem in Deep Learning Nanoparticle Segmentation

Nov 20, 2020

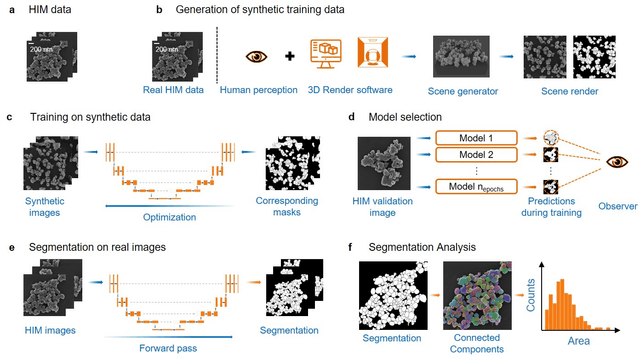

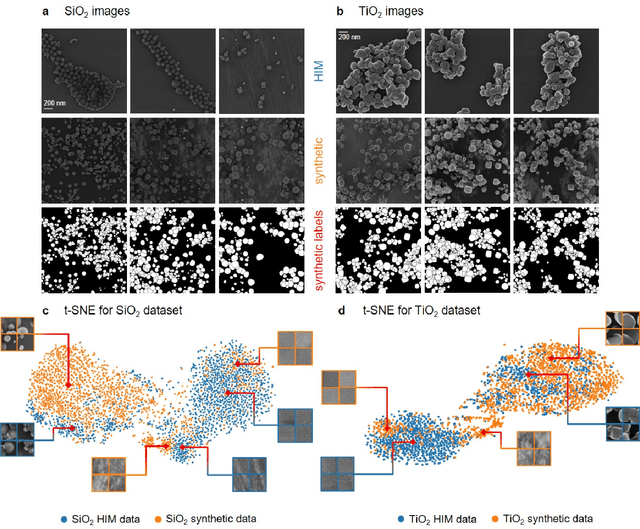

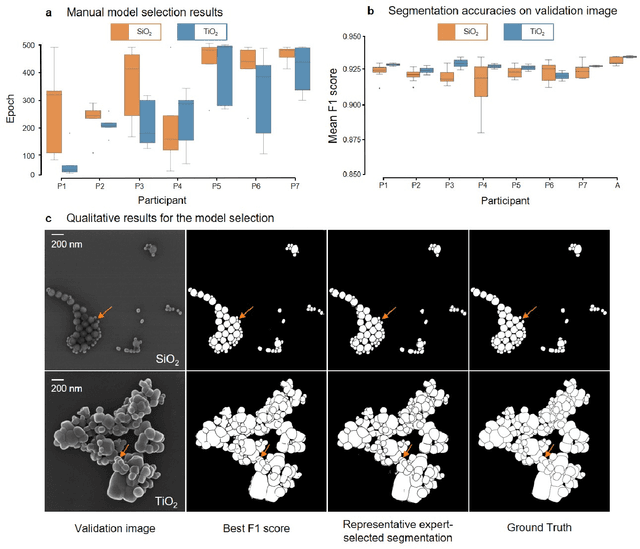

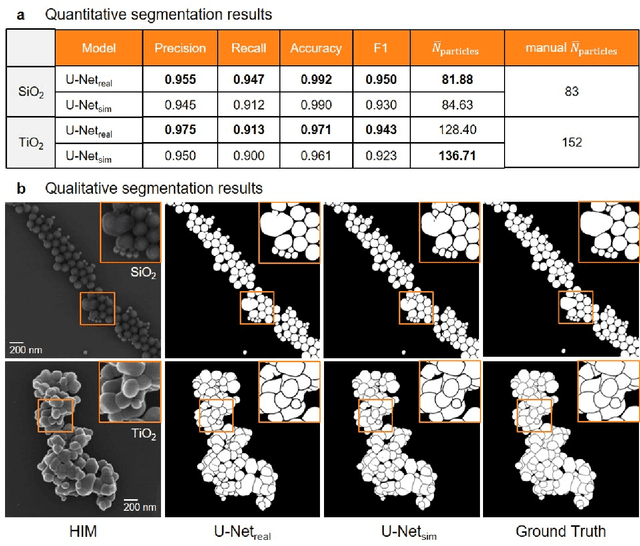

Abstract: Nanoparticles occur in various environments as a consequence of man-made processes, which raises concerns about their impact on the environment and human health. Proper risk assessment requires a precise and statistically relevant analysis of particle characteristics (such as size, shape and composition), which would greatly benefit from automated image analysis procedures. While deep learning shows impressive results in object detection tasks, its applicability is limited by the amount of representative, experimentally collected and manually annotated training data. Here, we present an elegant, flexible and versatile method to bypass this costly and tedious data acquisition process. We show that rendering software can be used to generate realistic, synthetic training data for training a state-of-the-art deep neural network. Using this approach, we achieve a segmentation accuracy comparable to that of manual annotations for toxicologically relevant metal-oxide nanoparticle ensembles, which we chose as examples. Our study paves the way towards the use of deep learning for automated, high-throughput particle detection in a variety of imaging techniques such as microscopies and spectroscopies, for a wide variety of studies and applications, including the detection of plastic micro- and nanoparticles.
