Filipe Assunção

Natural language to SQL in low-code platforms

Aug 29, 2023

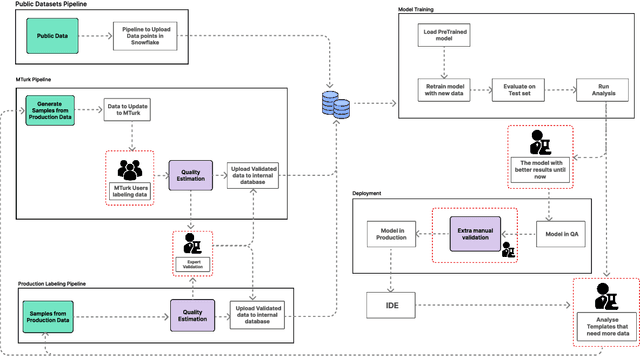

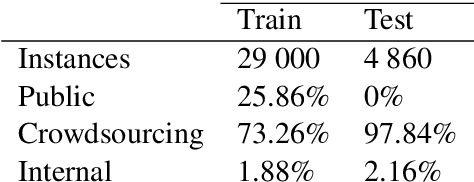

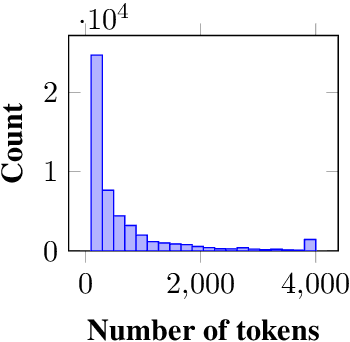

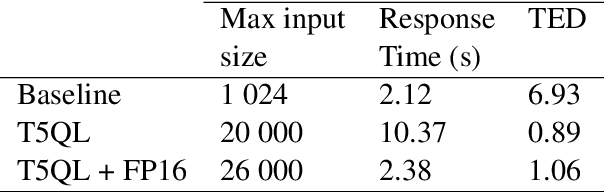

Abstract: One of the biggest challenges developers face in low-code platforms is retrieving data from a database using SQL queries. Here, we propose a pipeline that allows developers to write natural language (NL) to retrieve data. In this study, we collect, label, and validate data covering the SQL queries most often performed by OutSystems users. We use that data to train an NL model that generates SQL. Alongside this, we describe the entire pipeline, which includes a feedback loop that allows us to quickly collect production data and use it to retrain our SQL generation model. Using crowd-sourcing, we collect 26k NL and SQL pairs and obtain an additional 1k pairs from production data. Finally, we develop a UI that allows developers to input an NL query in a prompt and receive a user-friendly representation of the resulting SQL query. We use A/B testing to compare four different models in production and observe a 240% improvement in adoption of the feature, a 220% improvement in engagement rate, and a 90% decrease in failure rate when compared against the first model that we put into production, showcasing the effectiveness of our pipeline in continuously improving our feature.

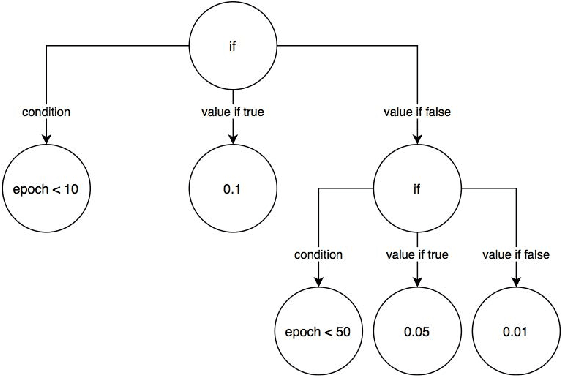

AutoLR: An Evolutionary Approach to Learning Rate Policies

Jul 08, 2020

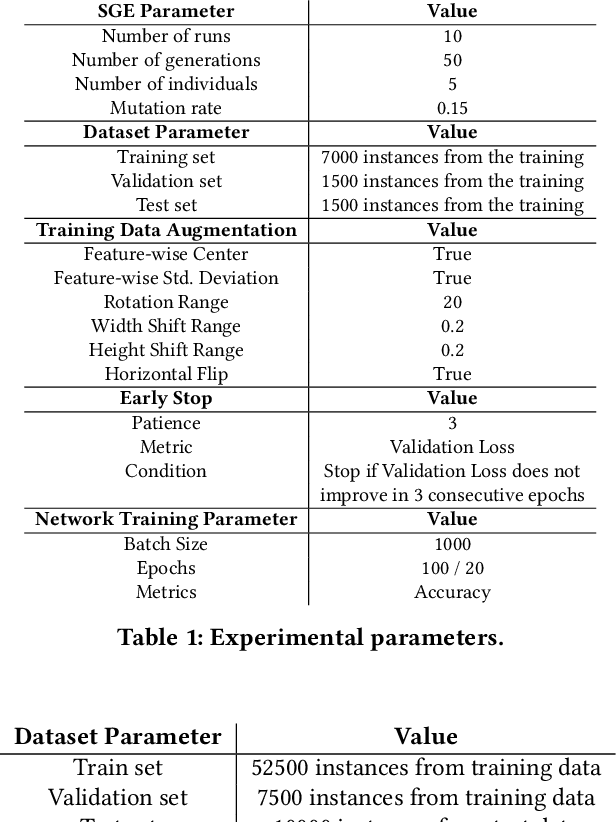

Abstract: The choice of a proper learning rate is paramount for good Artificial Neural Network training and performance. In the past, one had to rely on experience and trial-and-error to find an adequate learning rate. Presently, a plethora of state-of-the-art automatic methods exist that make the search for a good learning rate easier. While these techniques are effective and have yielded good results over the years, they are general solutions. This means the optimization of the learning rate for specific network topologies remains largely unexplored. This work presents AutoLR, a framework that evolves Learning Rate Schedulers for a specific Neural Network Architecture using Structured Grammatical Evolution. The system was used to evolve learning rate policies that were compared with a commonly used baseline value for the learning rate. Results show that training performed using certain evolved policies is more efficient than the established baseline and suggest that this approach is a viable means of improving a neural network's performance.
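To make the notion of a learning rate policy concrete, the following is a minimal sketch and not the AutoLR implementation. It assumes a policy is a function from the epoch and the current learning rate to the next learning rate; `constant_policy`, `step_decay_policy`, and `schedule` are hypothetical names, and the halving rule is only a hand-written stand-in for what an evolved policy might look like.

```python
from typing import Callable, List

# Assumption: a policy maps (epoch, current learning rate) to the next learning rate.
LRPolicy = Callable[[int, float], float]


def constant_policy(epoch: int, lr: float) -> float:
    """Baseline: keep the learning rate fixed for the whole run."""
    return lr


def step_decay_policy(epoch: int, lr: float) -> float:
    """Hypothetical stand-in for an evolved policy: halve the rate every 10 epochs."""
    if epoch > 0 and epoch % 10 == 0:
        return lr * 0.5
    return lr


def schedule(policy: LRPolicy, initial_lr: float, epochs: int) -> List[float]:
    """Apply a policy epoch by epoch and record the learning rate used in each one."""
    rates = []
    lr = initial_lr
    for epoch in range(epochs):
        lr = policy(epoch, lr)
        rates.append(lr)
    return rates
```

Comparing `schedule(constant_policy, 0.1, 21)` with `schedule(step_decay_policy, 0.1, 21)` shows the two kinds of candidate that such a comparison would train a network with: a fixed baseline value and a policy that changes the rate over time.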

Evolution of Scikit-Learn Pipelines with Dynamic Structured Grammatical Evolution

Apr 01, 2020

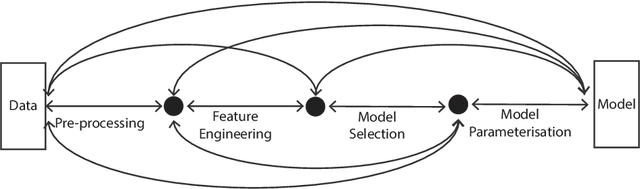

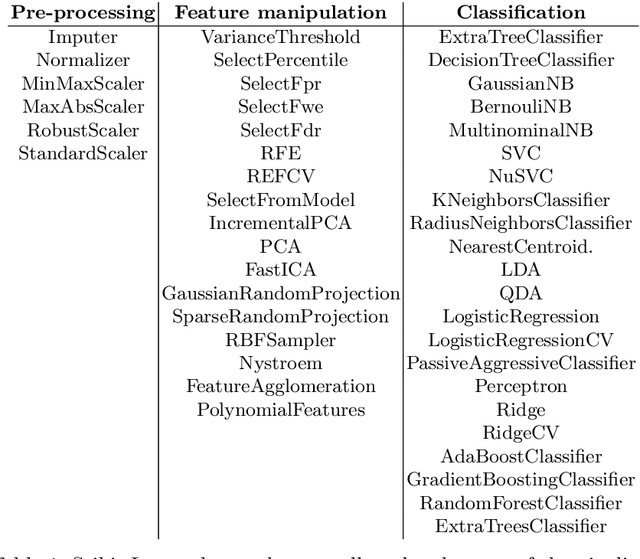

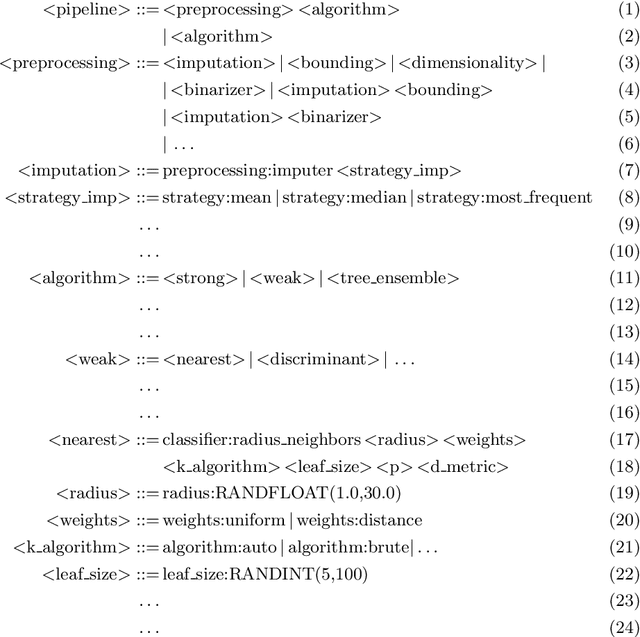

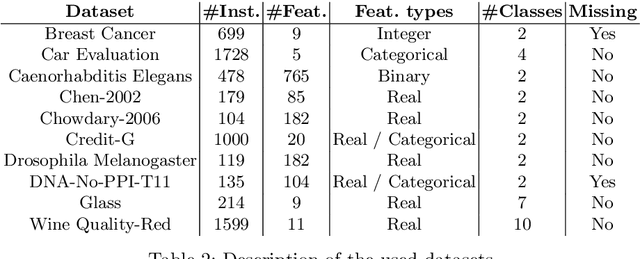

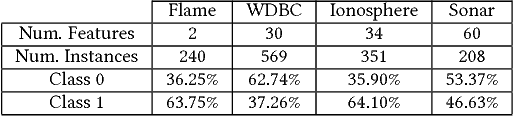

Abstract: The deployment of Machine Learning (ML) models is a difficult and time-consuming job that comprises a series of sequential and correlated tasks, from data pre-processing and the design and extraction of features to the choice of the ML algorithm and its parameterisation. The task is even more challenging considering that the design of features is in many cases problem-specific, and thus requires domain expertise. To overcome these limitations, Automated Machine Learning (AutoML) methods seek to automate, with little or no human intervention, the design of pipelines, i.e., to automate the selection of the sequence of methods that have to be applied to the raw data. These methods have the potential to enable non-expert users to use ML, and to provide expert users with solutions that they would be unlikely to consider. In particular, this paper describes AutoML-DSGE, a novel grammar-based framework that adapts Dynamic Structured Grammatical Evolution (DSGE) to the evolution of Scikit-Learn classification pipelines. The experimental results include a comparison of AutoML-DSGE with another grammar-based AutoML framework, Resilient Classification Pipeline Evolution (RECIPE), and show that the average performance of the classification pipelines generated by AutoML-DSGE is always superior to the average performance of RECIPE; the differences are statistically significant in 3 out of the 10 datasets used.
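The idea of deriving a pipeline from a grammar can be illustrated with a small sketch. This is not the AutoML-DSGE grammar or code: `GRAMMAR` is a toy grammar invented for illustration, the terminal names are only strings naming Scikit-Learn estimators (nothing is instantiated), and `decode` is a hypothetical helper. It assumes a structured genotype that stores one list of integers per non-terminal, each integer selecting an expansion option, and that the genotype already holds enough integers.

```python
from typing import Dict, List

# Toy grammar (assumption): each non-terminal maps to its expansion options.
GRAMMAR: Dict[str, List[List[str]]] = {
    "<pipeline>": [["<preprocessing>", "<classifier>"], ["<classifier>"]],
    "<preprocessing>": [["StandardScaler"], ["MinMaxScaler"], ["PCA"]],
    "<classifier>": [
        ["DecisionTreeClassifier"],
        ["KNeighborsClassifier"],
        ["LogisticRegression"],
    ],
}


def decode(genotype: Dict[str, List[int]], start: str = "<pipeline>") -> List[str]:
    """Expand the start symbol into an ordered list of pipeline step names."""
    # Work on a copy so the caller's genotype is not consumed.
    pending = {symbol: list(choices) for symbol, choices in genotype.items()}

    def expand(symbol: str) -> List[str]:
        if symbol not in GRAMMAR:
            return [symbol]  # terminal: a pipeline step name
        choice = pending[symbol].pop(0)  # next integer stored for this non-terminal
        steps: List[str] = []
        for child in GRAMMAR[symbol][choice]:
            steps.extend(expand(child))
        return steps

    return expand(start)


genotype = {"<pipeline>": [0], "<preprocessing>": [2], "<classifier>": [1]}
print(decode(genotype))  # ['PCA', 'KNeighborsClassifier']
```

In this representation, changing a single integer (for example the `<classifier>` entry) swaps one step of the pipeline while leaving the rest of the derivation untouched.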

Incremental Evolution and Development of Deep Artificial Neural Networks

Apr 01, 2020

Abstract: NeuroEvolution (NE) methods are known for applying Evolutionary Computation to the optimisation of Artificial Neural Networks (ANNs). Despite helping non-expert users to design and train ANNs, the vast majority of NE approaches disregard the knowledge that is gathered when solving other tasks, i.e., evolution starts from scratch for each problem, ultimately delaying the evolutionary process. To overcome this drawback, we extend Fast Deep Evolutionary Network Structured Representation (Fast-DENSER) to incremental development. We hypothesise that by transferring the knowledge gained from previous tasks we can attain superior results and speed up evolution. The results show that the average performance of the models generated by incremental development is statistically superior to the non-incremental average performance. When the number of evaluations performed by incremental development is smaller than that performed by non-incremental development, the attained results are similar in performance, which indicates that incremental development speeds up evolution. Lastly, the models generated using incremental development generalise better, and thus, without further evolution, report a superior performance on unseen problems.

Automatic Design of Artificial Neural Networks for Gamma-Ray Detection

May 09, 2019

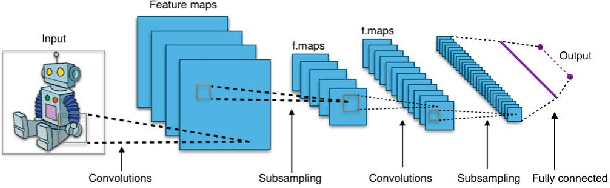

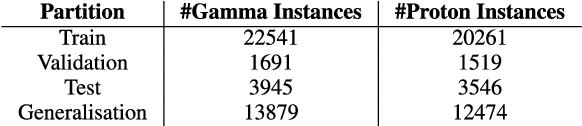

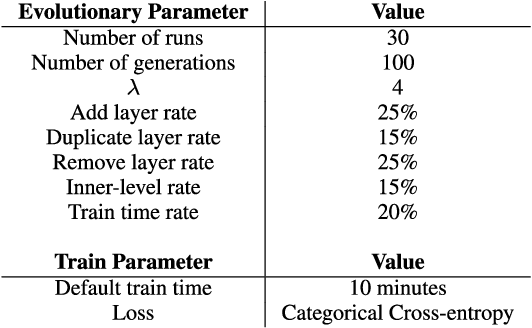

Abstract: The goal of this work is to investigate the possibility of improving current gamma/hadron discrimination based on their shower patterns recorded on the ground. To this end we propose the use of Convolutional Neural Networks (CNNs) for their ability to distinguish patterns based on automatically designed features. In order to promote the creation of CNNs that properly uncover the hidden patterns in the data, and at the same time avoid the burden of hand-crafting the topology and learning hyper-parameters, we resort to NeuroEvolution; in particular, we use Fast-DENSER++, a variant of Deep Evolutionary Network Structured Representation. The results show that the best CNN generated by Fast-DENSER++ improves by a factor of 2 when compared with the results reported by classic statistical approaches. Additionally, we experiment with ensembling the 10 best generated CNNs, one from each of the evolutionary runs; the ensemble leads to an improvement by a factor of 2.3. These results show that it is possible to improve the gamma/hadron discrimination based on CNNs that are automatically generated and are trained with instances of the ground impact patterns.

Fast-DENSER++: Evolving Fully-Trained Deep Artificial Neural Networks

May 08, 2019

Abstract: This paper proposes a new extension to Deep Evolutionary Network Structured Representation (DENSER), called Fast-DENSER++ (F-DENSER++). The vast majority of NeuroEvolution methods that optimise Deep Artificial Neural Networks (DANNs) only evaluate the candidate solutions for a fixed number of epochs; this makes it difficult to effectively assess the learning strategy, and requires the best generated network to be further trained after evolution. F-DENSER++ enables the training time of the candidate solutions to grow continuously as necessary, i.e., in the initial generations the candidate solutions are trained for shorter times, and as generations proceed it is expected that longer training cycles enable better performances. Consequently, the models discovered by F-DENSER++ are fully-trained DANNs, and are ready for deployment after evolution, without the need for further training. The results demonstrate the ability of F-DENSER++ to effectively generate fully-trained DANNs; by the end of evolution, whilst the average performance of the models generated by F-DENSER++ is 88.73%, the performance of the models generated by the previous version of DENSER (Fast-DENSER) is 86.91% (statistically significant), which increases to 87.76% when allowed to train for longer.

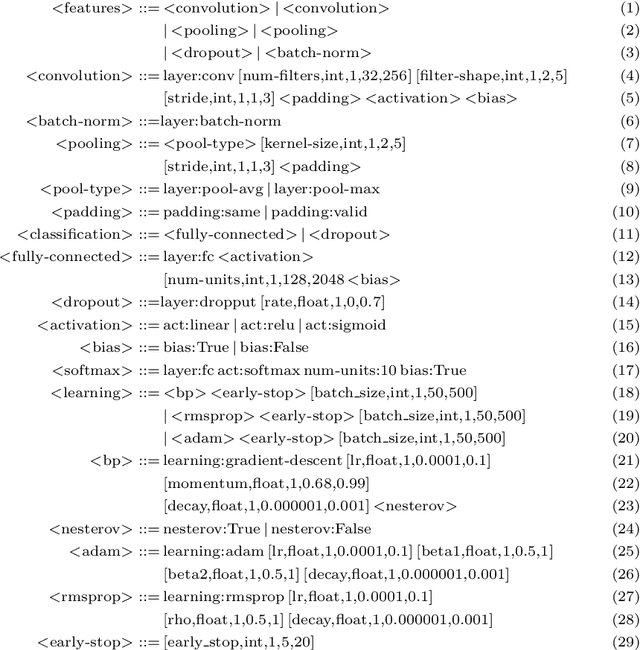

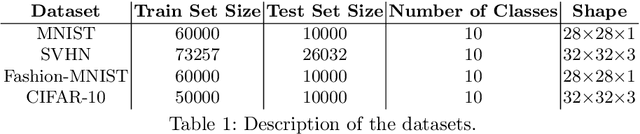

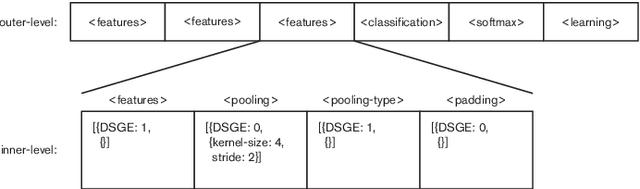

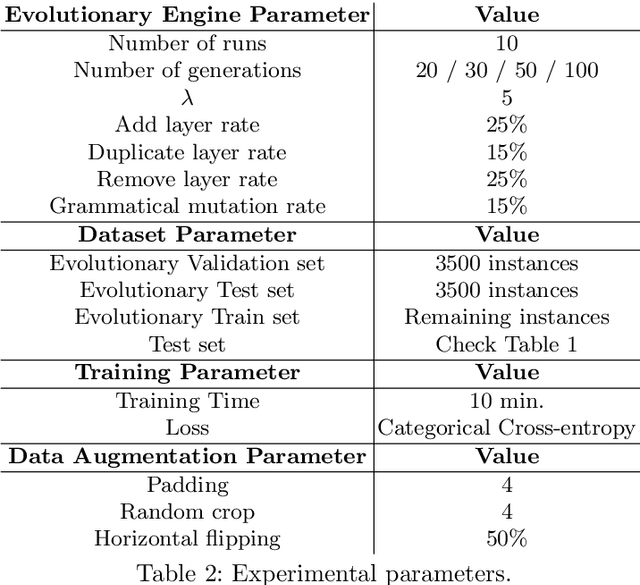

DENSER: Deep Evolutionary Network Structured Representation

Jun 01, 2018

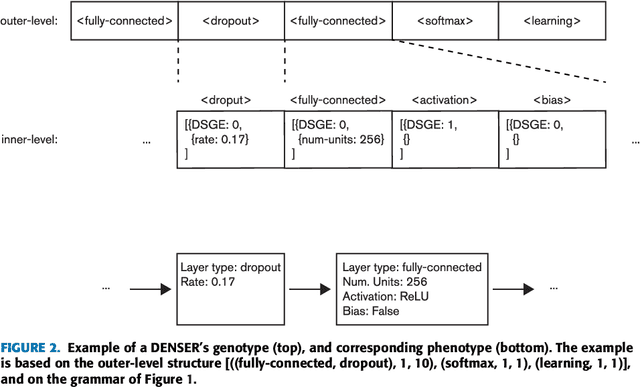

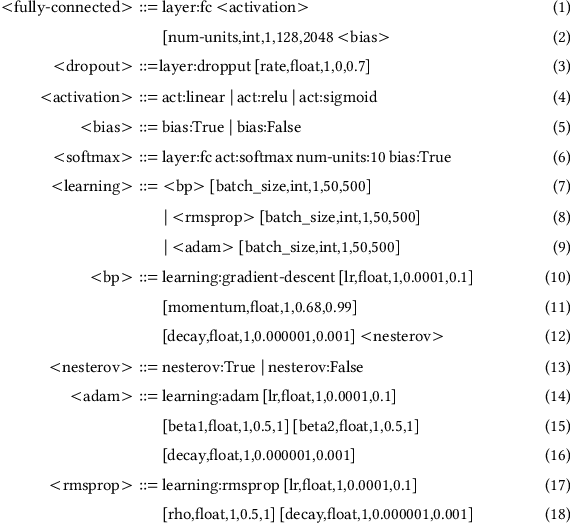

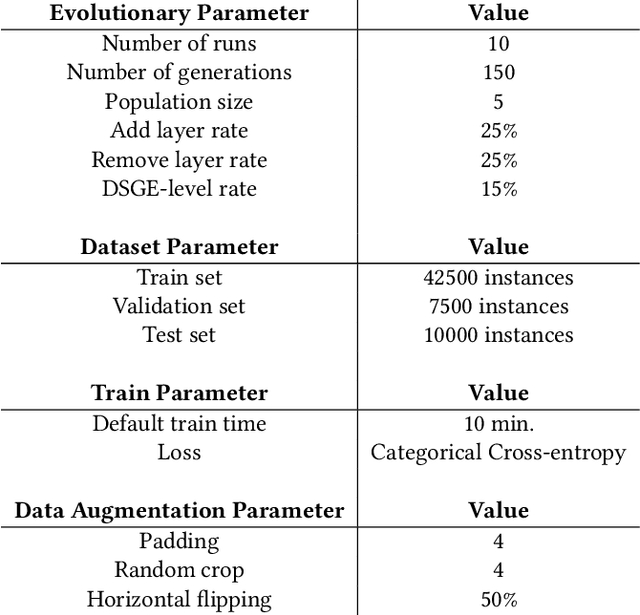

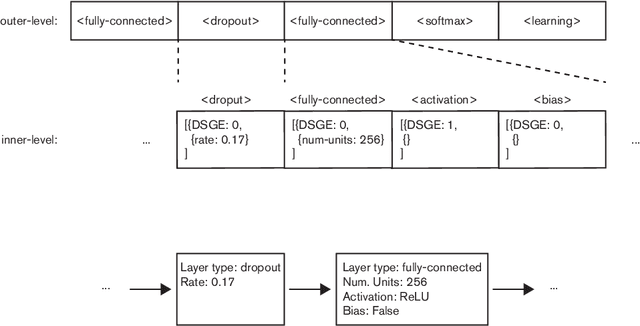

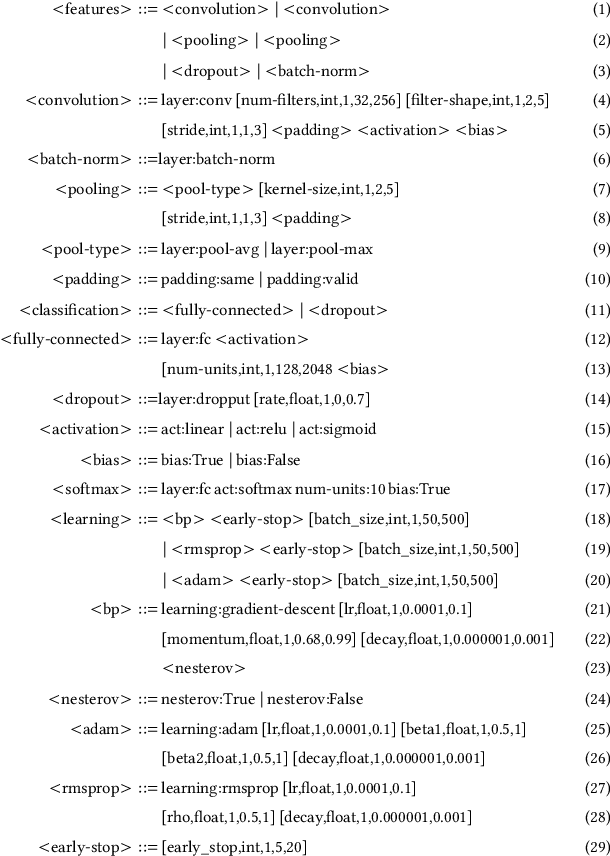

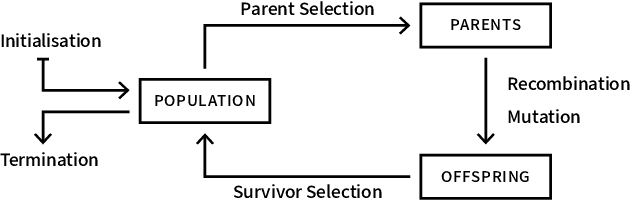

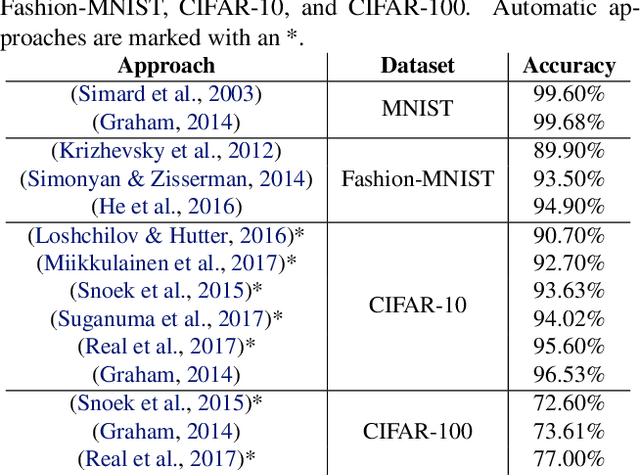

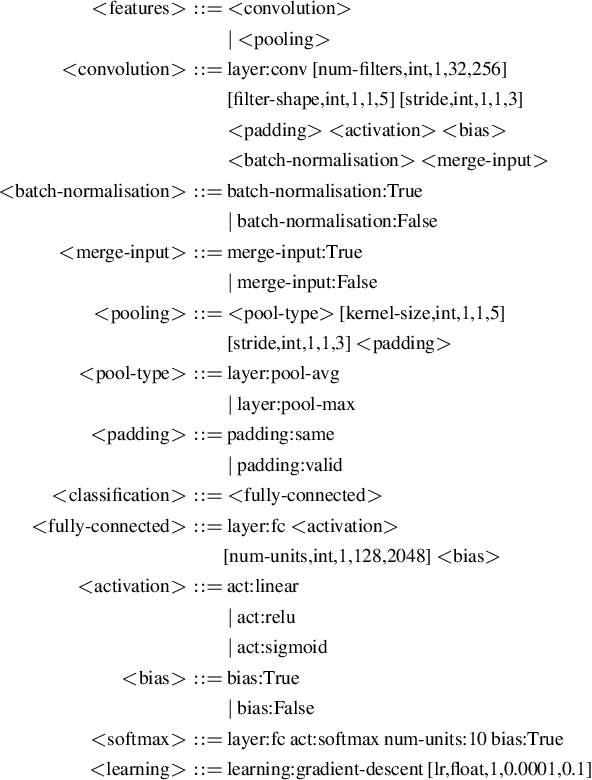

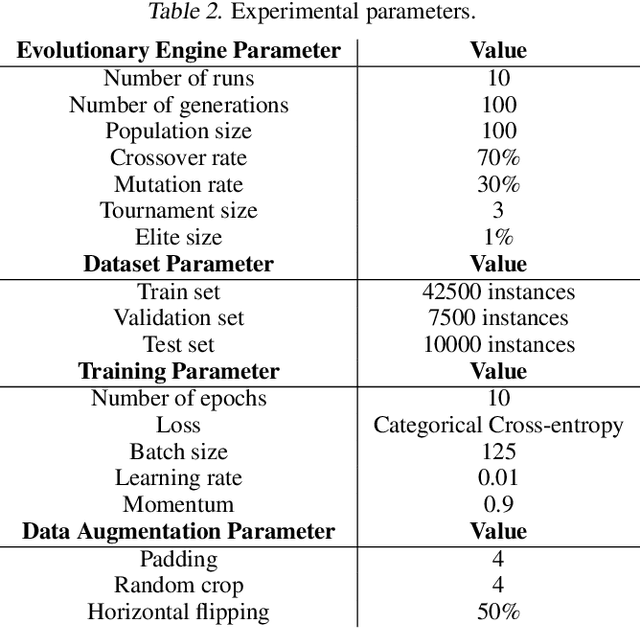

Abstract: Deep Evolutionary Network Structured Representation (DENSER) is a novel approach to automatically design Artificial Neural Networks (ANNs) using Evolutionary Computation. The algorithm not only searches for the best network topology (e.g., number of layers, type of layers), but also tunes hyper-parameters, such as learning parameters or data augmentation parameters. The automatic design is achieved using a representation with two distinct levels, where the outer level encodes the general structure of the network, i.e., the sequence of layers, and the inner level encodes the parameters associated with each layer. The allowed layers and the ranges of the hyper-parameter values are defined by means of a human-readable Context-Free Grammar. DENSER was used to evolve ANNs for CIFAR-10, obtaining an average test accuracy of 94.13%. The networks evolved for CIFAR-10 are tested on MNIST, Fashion-MNIST, and CIFAR-100; the results are highly competitive, and on CIFAR-100 we report a test accuracy of 78.75%. To the best of our knowledge, our CIFAR-100 results are the highest performing models generated by methods that aim at the automatic design of Convolutional Neural Networks (CNNs), and are amongst the best for manually designed and fine-tuned CNNs.
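The two-level representation can be pictured with a short sketch. It is only an illustration under stated assumptions, not the DENSER code: `STRUCTURE` plays the role of the outer level (which layer types may appear, and how many), `PARAM_RANGES` plays the role of the inner level (integer ranges per layer type), and every name and numeric range here is hypothetical rather than taken from the paper. A plain dictionary also stands in for the Context-Free Grammar.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Inner level (assumed values): integer hyper-parameter ranges per layer type.
PARAM_RANGES: Dict[str, Dict[str, Tuple[int, int]]] = {
    "conv": {"filters": (32, 256), "kernel_size": (2, 5)},
    "pool": {"kernel_size": (2, 5)},
    "fc": {"units": (64, 2048)},
}

# Outer level (assumed values): blocks of (allowed layer types, min count, max count).
STRUCTURE: List[Tuple[List[str], int, int]] = [
    (["conv", "pool"], 1, 6),  # feature-extraction block
    (["fc"], 1, 3),            # classification block
]


@dataclass
class Layer:
    kind: str
    params: Dict[str, int]


def random_network(rng: random.Random) -> List[Layer]:
    """Sample a layer sequence (outer level), then parameters per layer (inner level)."""
    layers: List[Layer] = []
    for kinds, low, high in STRUCTURE:
        for _ in range(rng.randint(low, high)):
            kind = rng.choice(kinds)
            params = {
                name: rng.randint(a, b)
                for name, (a, b) in PARAM_RANGES[kind].items()
            }
            layers.append(Layer(kind, params))
    return layers
```

Keeping the levels separate means a variation operator can act on the layer sequence (add, remove, or reorder layers) or on the parameters of a single layer without disturbing the other level.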

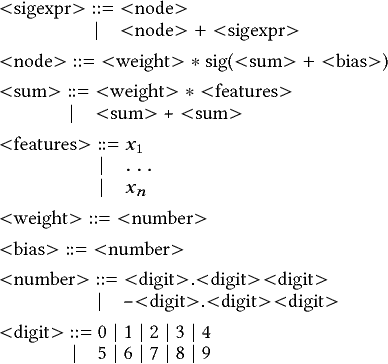

Towards the Evolution of Multi-Layered Neural Networks: A Dynamic Structured Grammatical Evolution Approach

Jun 26, 2017

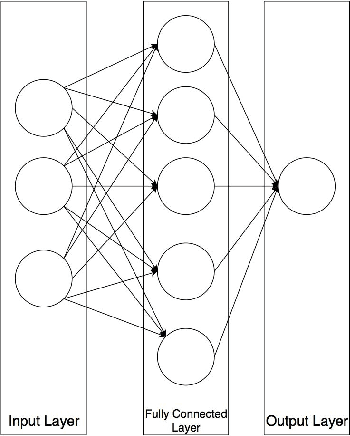

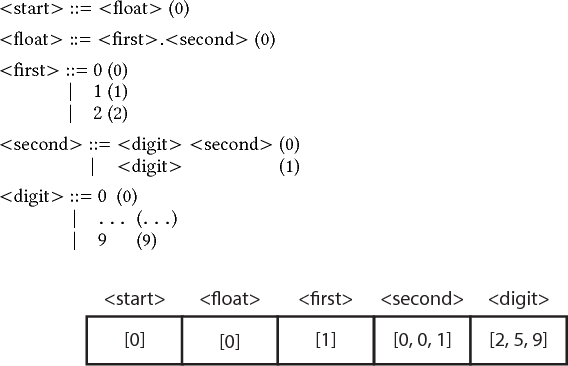

Abstract: Current grammar-based NeuroEvolution approaches have several shortcomings. On the one hand, they do not allow the generation of Artificial Neural Networks (ANNs) composed of more than one hidden layer. On the other, there is no way to evolve networks with more than one output neuron. To properly evolve ANNs with more than one hidden layer and multiple output nodes, the number of neurons available in previous layers must be known. In this paper we introduce Dynamic Structured Grammatical Evolution (DSGE): a new genotypic representation that overcomes the aforementioned limitations. By enabling the creation of dynamic rules that specify the connection possibilities of each neuron, the methodology enables the evolution of multi-layered ANNs with more than one output neuron. Results in different classification problems show that DSGE evolves effective single and multi-layered ANNs, with a varying number of output neurons.
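One way to picture a dynamic rule is as a list of connection options that is rebuilt whenever the size of the preceding layer is known. The sketch below is an assumption-laden illustration, not the DSGE implementation: `connection_rule` is a hypothetical helper, the `x` and `h` naming scheme is invented, and it assumes a neuron may only read from the immediately preceding layer.

```python
from typing import List


def connection_rule(layer_sizes: List[int], layer: int) -> List[str]:
    """Return the connection options for a neuron in hidden layer `layer` (1-based).

    layer_sizes[0] is the number of input features and layer_sizes[k] is the
    number of neurons in hidden layer k (both are assumptions of this sketch).
    """
    if layer < 1 or layer > len(layer_sizes):
        raise ValueError("layer must refer to an existing or next hidden layer")
    if layer == 1:
        # First hidden layer: options are the input features.
        return [f"x{i}" for i in range(layer_sizes[0])]
    # Deeper layers: options are the neurons of the previous hidden layer.
    return [f"h{layer - 1}_{i}" for i in range(layer_sizes[layer - 1])]


print(connection_rule([3, 2], 1))  # ['x0', 'x1', 'x2']
print(connection_rule([3, 2], 2))  # ['h1_0', 'h1_1']
```

Because the options depend on how many neurons the previous layer ended up with, the rule cannot be written down in advance in a static grammar; it has to be generated while the network is being derived.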
