Pedro Carvalho

A transition towards virtual representations of visual scenes

Oct 10, 2024

Abstract: Visual scene understanding is a fundamental task in computer vision that aims to extract meaningful information from visual data. It traditionally involves disjoint and specialized algorithms for different tasks that are tailored for specific application scenarios. This can be cumbersome when designing complex systems that include processing of visual and semantic data extracted from visual scenes, which is even more noticeable nowadays with the influx of applications for virtual or augmented reality. When designing a system that employs automatic visual scene understanding to enable a precise and semantically coherent description of the underlying scene, which can be used to fuel a visualization component with 3D virtual synthesis, the lack of flexibility and of unified frameworks becomes more prominent. To alleviate this issue and its inherent problems, we propose an architecture that addresses the challenges of visual scene understanding and description towards a 3D virtual synthesis that enables an adaptable, unified and coherent solution. Furthermore, we show how our proposal can be of use in multiple application areas. We also present a proof-of-concept system that employs our architecture to further demonstrate its usability in practice.

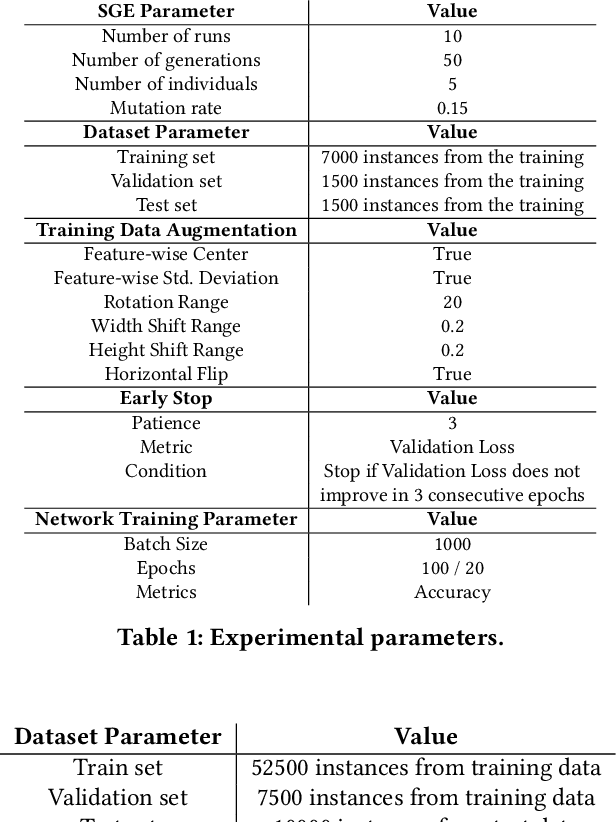

Context Matters: Adaptive Mutation for Grammars

Mar 25, 2023

Abstract: This work proposes Adaptive Facilitated Mutation, a self-adaptive mutation method for Structured Grammatical Evolution (SGE), biologically inspired by the theory of facilitated variation. In SGE, the genotype of individuals contains a list for each non-terminal of the grammar that defines the search space. In our proposed mutation, each individual contains an array with a different, self-adaptive mutation rate for each non-terminal. We also propose Function Grouped Grammars, a grammar design procedure, to enhance the benefits of the proposed mutation. Experiments were conducted on three symbolic regression benchmarks using Probabilistic Structured Grammatical Evolution (PSGE), a variant of SGE. Results show our approach is similar or better when compared with the standard grammar and mutation.
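The genotype layout described in this abstract (one list per non-terminal, plus one mutation rate per non-terminal) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy grammar, the names `GRAMMAR`, `random_individual`, and `mutate`, the fixed list length, and the Gaussian perturbation used to adapt the rates. It is not the authors' implementation.

```python
import random

# Hypothetical toy grammar: each non-terminal maps to its list of productions.
GRAMMAR = {
    "expr": [["expr", "op", "expr"], ["var"]],
    "op": [["+"], ["*"]],
    "var": [["x"], ["1.0"]],
}


def random_individual(rng, genes_per_nt=4, initial_rate=0.1):
    """Build an individual with one gene list and one mutation rate per non-terminal."""
    genotype = {
        nt: [rng.randrange(len(prods)) for _ in range(genes_per_nt)]
        for nt, prods in GRAMMAR.items()
    }
    rates = {nt: initial_rate for nt in GRAMMAR}
    return {"genotype": genotype, "rates": rates}


def mutate(ind, rng, sigma=0.01):
    """Return a mutated copy; the parent is left untouched."""
    child = {
        "genotype": {nt: list(genes) for nt, genes in ind["genotype"].items()},
        "rates": dict(ind["rates"]),
    }
    for nt, prods in GRAMMAR.items():
        # Self-adaptation step: perturb this non-terminal's own rate.
        # The Gaussian perturbation and the clamp to [0, 1] are assumptions.
        rate = min(1.0, max(0.0, child["rates"][nt] + rng.gauss(0.0, sigma)))
        child["rates"][nt] = rate
        # Mutate this non-terminal's gene list using its own rate.
        genes = child["genotype"][nt]
        for i in range(len(genes)):
            if rng.random() < rate:
                genes[i] = rng.randrange(len(prods))
    return child


rng = random.Random(0)
parent = random_individual(rng)
child = mutate(parent, rng)
```

Because each non-terminal carries its own rate, selection can in principle favor individuals whose rates are high for some parts of the grammar and low for others, which is the intuition the abstract attributes to facilitated variation.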

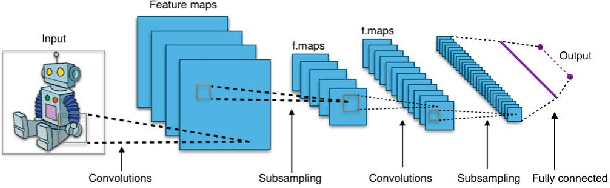

Structured mutation inspired by evolutionary theory enriches population performance and diversity

Feb 01, 2023

Abstract: Grammar-Guided Genetic Programming (GGGP) employs a variety of insights from evolutionary theory to autonomously design solutions for a given task. Recent insights from evolutionary biology can lead to further improvements in GGGP algorithms. In this paper, we apply principles from the theory of Facilitated Variation and knowledge about heterogeneous mutation rates and mutation effects to improve the variation operators. We term this new method of variation Facilitated Mutation (FM). We test FM performance on the evolution of neural network optimizers for image classification, a relevant task in evolutionary computation, with important implications for the field of machine learning. We compare FM and FM combined with crossover (FMX) against a typical mutation regime to assess the benefits of the approach. We find that FMX in particular provides statistical improvements in key metrics, creating a superior optimizer overall (+0.48% average test accuracy), improving the average quality of solutions (+50% average population fitness), and discovering more diverse high-quality behaviors (+400 high-quality solutions discovered per run on average). Additionally, FM and FMX can reduce the number of fitness evaluations in an evolutionary run, reducing computational costs in some scenarios.

Evolving Learning Rate Optimizers for Deep Neural Networks

Mar 23, 2021

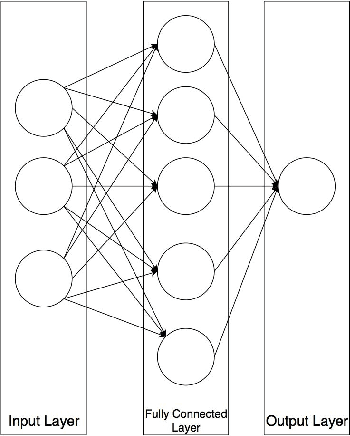

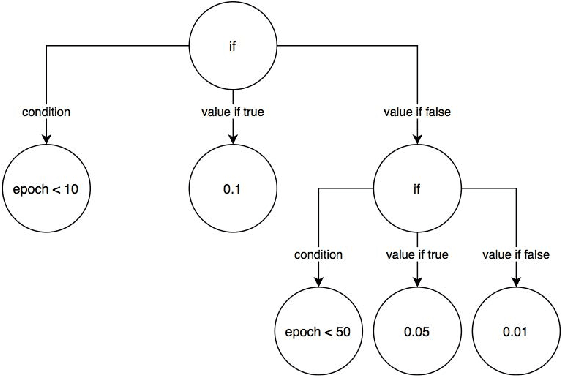

Abstract: Artificial Neural Networks (ANNs) became popular due to their successful application to difficult problems such as image and speech recognition. However, when practitioners want to design an ANN they need to undergo a laborious process of selecting a set of parameters and a topology. Currently, there are several state-of-the-art methods that allow for the automatic selection of some of these aspects. Learning Rate optimizers are a set of such techniques that search for good values of learning rates. Whilst these techniques are effective and have yielded good results over the years, they are general solutions, i.e. they do not consider the characteristics of a specific network. We propose a framework called AutoLR to automatically design Learning Rate Optimizers. Two versions of the system are detailed. The first one, Dynamic AutoLR, evolves static and dynamic learning rate optimizers based on the current epoch and the previous learning rate. The second version, Adaptive AutoLR, evolves adaptive optimizers that can fine-tune the learning rate for each network weight, which makes them generally more effective. The results are competitive with the best state-of-the-art methods, even outperforming them in some scenarios. Furthermore, the system evolved a classifier, ADES, that appears to be novel and innovative since, to the best of our knowledge, it has a structure that differs from state-of-the-art methods.
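To make the "dynamic" case concrete, a learning rate policy that depends only on the current epoch and the previous learning rate can be modeled as a plain function of those two values. The sketch below is a hand-written illustration under that assumption; the names `constant_policy`, `step_decay_policy`, and `run_schedule`, and the decay parameters, are hypothetical and are not policies evolved by AutoLR.

```python
def constant_policy(epoch, previous_lr):
    """Static policy: always keep the learning rate unchanged."""
    return previous_lr


def step_decay_policy(epoch, previous_lr, factor=0.5, every=10):
    """Dynamic policy: scale the rate by `factor` once every `every` epochs."""
    if epoch > 0 and epoch % every == 0:
        return previous_lr * factor
    return previous_lr


def run_schedule(policy, initial_lr, epochs):
    """Apply a policy epoch by epoch and collect the resulting learning rates."""
    learning_rates = []
    lr = initial_lr
    for epoch in range(epochs):
        # The policy sees only the current epoch and the previous learning rate.
        lr = policy(epoch, lr)
        learning_rates.append(lr)
    return learning_rates


baseline = run_schedule(constant_policy, 0.1, 21)
decayed = run_schedule(step_decay_policy, 0.1, 21)
```

An evolutionary system would search over the body of such a function rather than use a fixed one. The adaptive case described in the abstract differs in that the rate is adjusted per network weight instead of once per epoch.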

AutoLR: An Evolutionary Approach to Learning Rate Policies

Jul 08, 2020

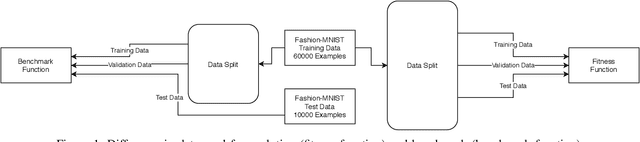

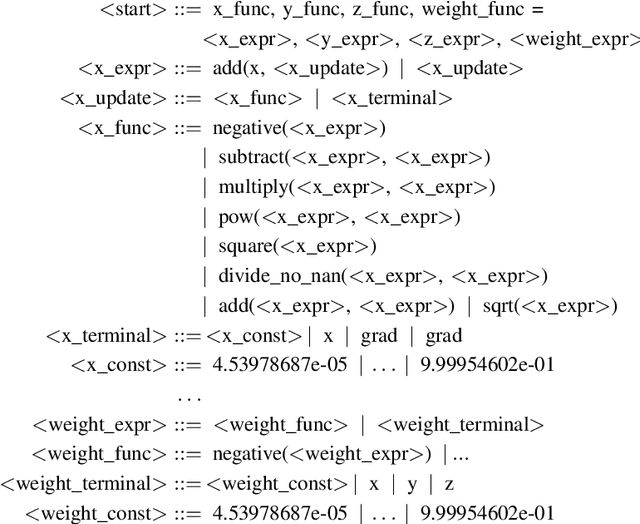

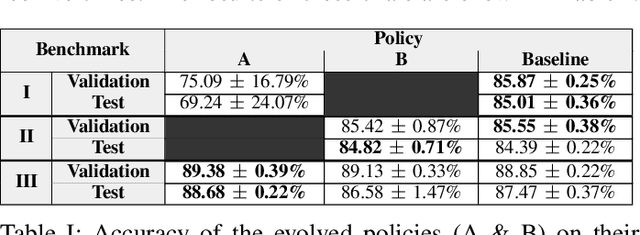

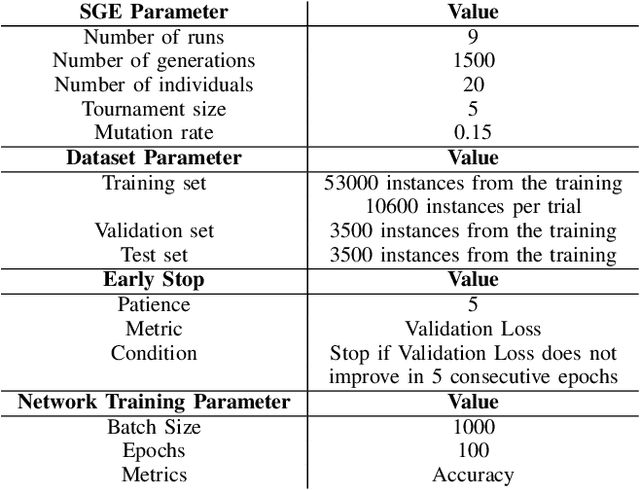

Abstract: The choice of a proper learning rate is paramount for good Artificial Neural Network training and performance. In the past, one had to rely on experience and trial-and-error to find an adequate learning rate. Presently, a plethora of state-of-the-art automatic methods exist that make the search for a good learning rate easier. While these techniques are effective and have yielded good results over the years, they are general solutions. This means the optimization of the learning rate for specific network topologies remains largely unexplored. This work presents AutoLR, a framework that evolves Learning Rate Schedulers for a specific Neural Network Architecture using Structured Grammatical Evolution. The system was used to evolve learning rate policies that were compared with a commonly used baseline value for the learning rate. Results show that training performed using certain evolved policies is more efficient than the established baseline and suggest that this approach is a viable means of improving a neural network's performance.
