Fengyuan Zhang

Adaptive Temporal Motion Guided Graph Convolution Network for Micro-expression Recognition

Jun 13, 2024

Abstract: Micro-expressions serve as essential cues for understanding individuals' genuine emotional states. Recognizing micro-expressions attracts increasing research attention due to its various applications in fields such as business negotiation and psychotherapy. However, the intricate and transient nature of micro-expressions poses a significant challenge to their accurate recognition. Most existing works either neglect temporal dependencies or suffer from redundancy issues in clip-level recognition. In this work, we propose a novel framework for micro-expression recognition, named the Adaptive Temporal Motion Guided Graph Convolution Network (ATM-GCN). Our framework excels at capturing temporal dependencies between frames across the entire clip, thereby enhancing micro-expression recognition at the clip level. Specifically, the integration of Adaptive Temporal Motion layers empowers our method to aggregate global and local motion features inherent in micro-expressions. Experimental results demonstrate that ATM-GCN not only surpasses existing state-of-the-art methods, particularly on the Composite dataset, but also achieves superior performance on the latest micro-expression dataset CAS(ME)$^3$.

Emotion Recognition based on Multi-Task Learning Framework in the ABAW4 Challenge

Jul 25, 2022

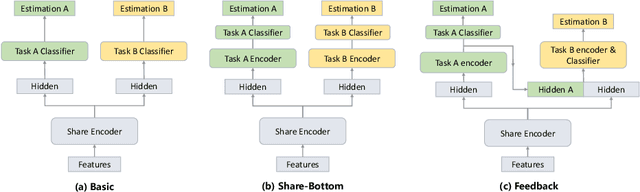

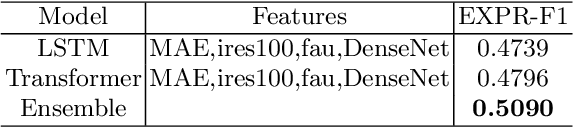

Abstract: This paper presents our submission to the Multi-Task Learning (MTL) Challenge of the 4th Affective Behavior Analysis in-the-wild (ABAW) competition. Based on visual feature representations, we use three types of temporal encoders to capture the temporal context information in the video: a Transformer-based encoder, an LSTM-based encoder, and a GRU-based encoder. With the temporal context-aware representations, we employ a multi-task framework to predict the valence, arousal, expression, and AU values of the images. In addition, smoothing is applied to refine the initial valence and arousal predictions, and a model ensemble strategy is used to combine multiple results from different model setups. Our system achieves a score of $1.742$ on the MTL Challenge validation dataset.
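
The abstract does not say which smoothing method or ensemble rule was used. As a rough illustration only, the sketch below assumes a centered moving average over per-frame valence or arousal predictions and a plain unweighted mean across model runs. The function names `moving_average` and `ensemble_mean` and the `window` parameter are hypothetical and are not taken from the paper.

```python
from statistics import fmean


def moving_average(values, window=5):
    """Centered moving average; the window shrinks at the sequence edges.

    Assumption: the paper's smoothing step is not specified, so this is
    only one plausible choice for per-frame valence/arousal predictions.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        start = max(0, i - half)
        stop = min(len(values), i + half + 1)
        smoothed.append(fmean(values[start:stop]))
    return smoothed


def ensemble_mean(runs):
    """Unweighted per-frame mean over predictions from several models.

    Assumption: the paper's ensemble strategy is not specified; simple
    averaging is used here purely for illustration.
    """
    if not runs:
        raise ValueError("need at least one run")
    if len({len(run) for run in runs}) != 1:
        raise ValueError("all runs must have the same number of frames")
    return [fmean(frame) for frame in zip(*runs)]


if __name__ == "__main__":
    # Made-up per-frame valence predictions from two hypothetical models.
    model_a = [0.10, 0.90, 0.20, 0.25, 0.30]
    model_b = [0.20, 0.30, 0.20, 0.35, 0.30]
    combined = ensemble_mean([model_a, model_b])
    print(moving_average(combined, window=3))
```

Averaging across models first and smoothing afterwards is one possible ordering; smoothing each model's output before combining would be equally consistent with the abstract.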
