Fenglin Ding

Improved Speech Pre-Training with Supervision-Enhanced Acoustic Unit

Dec 07, 2022

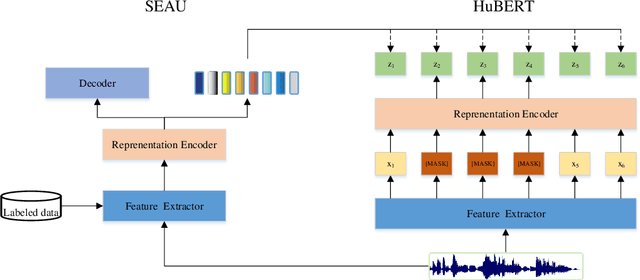

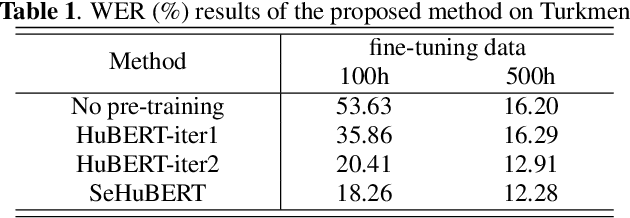

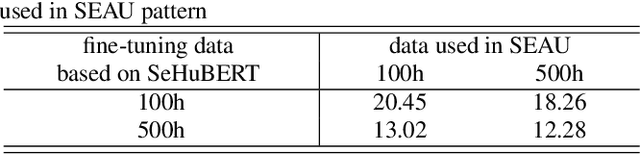

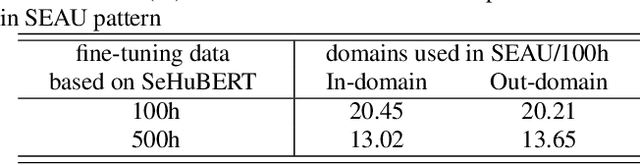

Abstract: Speech pre-training has shown great success in learning useful and general latent representations from large-scale unlabeled data. Built on a well-designed self-supervised learning pattern, pre-trained models can serve many downstream speech tasks such as automatic speech recognition. To take full advantage of the labeled data in low-resource tasks, we present an improved pre-training method that introduces a supervision-enhanced acoustic unit (SEAU) pattern to strengthen the expression of context information and reduce the training cost. Encoder representations extracted from the SEAU pattern are used to generate more representative target units for the HuBERT pre-training process. The proposed method, named SeHuBERT, achieves relative word error rate reductions of 10.5% and 4.9% compared with the standard HuBERT on a Turkmen speech recognition task with 500 hours and 100 hours of fine-tuning data, respectively. Extended to more languages and more data, SeHuBERT can also achieve a relative word error rate reduction of approximately 10% at half of the training cost compared with HuBERT.
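For readers unfamiliar with the metric, a relative word error rate (WER) reduction is conventionally the drop in WER expressed as a fraction of the baseline WER. The sketch below illustrates that arithmetic only; the function name and the WER values are hypothetical and are not taken from the paper, which reports only the relative figures.

```python
def relative_wer_reduction(baseline_wer: float, new_wer: float) -> float:
    """Return the relative WER reduction as a percentage of the baseline WER."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return 100.0 * (baseline_wer - new_wer) / baseline_wer


# Hypothetical absolute WERs (in percent), chosen only to illustrate the formula.
baseline = 20.0  # assumed WER of a baseline system
improved = 18.0  # assumed WER of an improved system

print(f"{relative_wer_reduction(baseline, improved):.1f}% relative reduction")
```

Note that a relative reduction says nothing about the absolute WER on its own: the same percentage can correspond to very different absolute improvements depending on the baseline.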

Improved Self-Supervised Multilingual Speech Representation Learning Combined with Auxiliary Language Information

Dec 07, 2022

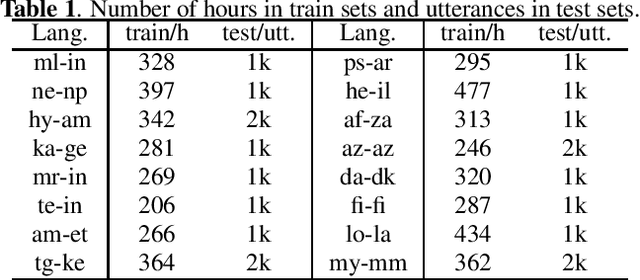

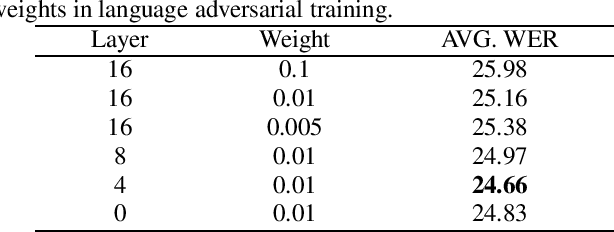

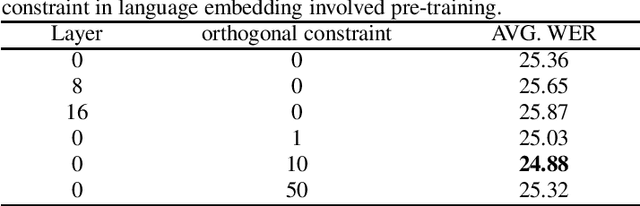

Abstract: Multilingual end-to-end models have shown great improvement over monolingual systems. With the development of pre-training methods for speech, self-supervised multilingual speech representation learning such as XLSR has succeeded in improving the performance of multilingual automatic speech recognition (ASR). However, as in supervised learning, multilingual pre-training may also suffer from language interference, which further affects the application of multilingual systems. In this paper, we introduce several techniques for improving self-supervised multilingual pre-training by leveraging auxiliary language information, including language adversarial training, language embedding, and language adaptive training during the pre-training stage. We conduct experiments on a multilingual ASR task consisting of 16 languages. Our experimental results demonstrate a 14.3% relative gain over the standard XLSR model and a 19.8% relative gain over the multilingual model without pre-training.
