Faraz Faruqi

TactStyle: Generating Tactile Textures with Generative AI for Digital Fabrication

Mar 03, 2025

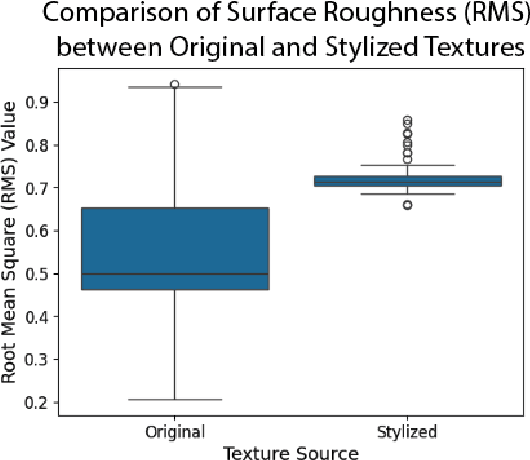

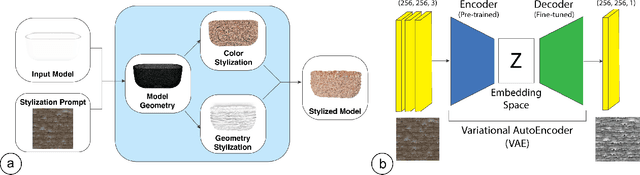

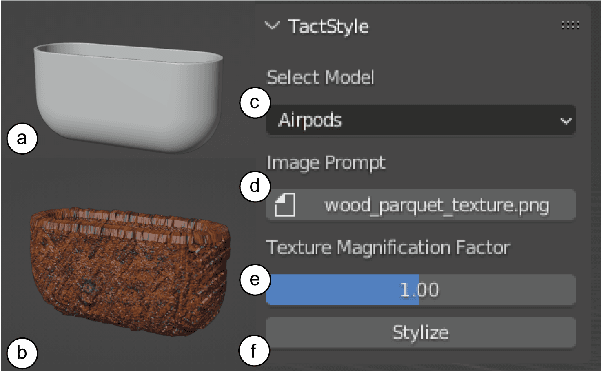

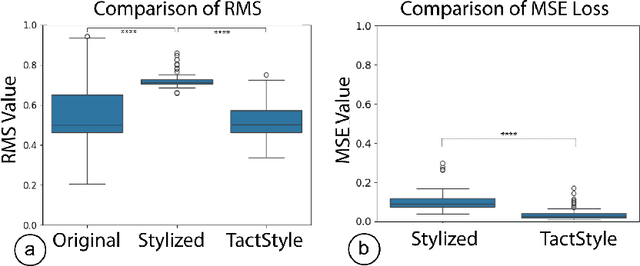

Abstract: Recent work in Generative AI enables the stylization of 3D models based on image prompts. However, these methods do not incorporate tactile information, leading to designs that lack the expected tactile properties. We present TactStyle, a system that allows creators to stylize 3D models with images while incorporating the expected tactile properties. TactStyle accomplishes this using a modified image-generation model fine-tuned to generate heightfields for given surface textures. By optimizing 3D model surfaces to embody a generated texture, TactStyle creates models that match the desired style and replicate the tactile experience. We utilize a large-scale dataset of textures to train our texture generation model. In a psychophysical experiment, we evaluate the tactile qualities of a set of 3D-printed original textures and TactStyle's generated textures. Our results show that TactStyle successfully generates a wide range of tactile features from a single image input, enabling a novel approach to haptic design.
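To make the idea of a surface "embodying" a heightfield concrete, here is a minimal sketch of plain displacement mapping: each vertex is pushed along its normal by the height sampled at its UV coordinate. This is an illustrative stand-in, not TactStyle's optimization, which the abstract does not detail. The function names, the nearest-neighbor sampling, and the `scale` parameter are all assumptions made for the example.

```python
def sample_height(heightfield, u, v):
    """Nearest-neighbor lookup of a height value at UV coordinates in [0, 1]."""
    rows, cols = len(heightfield), len(heightfield[0])
    r = min(int(v * rows), rows - 1)  # clamp so v == 1.0 stays in range
    c = min(int(u * cols), cols - 1)  # clamp so u == 1.0 stays in range
    return heightfield[r][c]


def displace(vertices, normals, uvs, heightfield, scale=1.0):
    """Move each vertex along its unit normal by scale * sampled height.

    Assumed inputs (hypothetical, for illustration only):
      vertices, normals: lists of (x, y, z) tuples, normals unit length
      uvs: list of (u, v) tuples in [0, 1]
      heightfield: 2D list of floats, e.g. a generated grayscale texture
    """
    displaced = []
    for (x, y, z), (nx, ny, nz), (u, v) in zip(vertices, normals, uvs):
        h = scale * sample_height(heightfield, u, v)
        displaced.append((x + h * nx, y + h * ny, z + h * nz))
    return displaced
```

A real pipeline would also need to subdivide the mesh finely enough for the relief to be printable and would likely interpolate heights rather than take the nearest sample.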

Generative AI in Color-Changing Systems: Re-Programmable 3D Object Textures with Material and Design Constraints

Apr 25, 2024

Abstract: Advances in Generative AI tools have allowed designers to manipulate existing 3D models using text or image-based prompts, enabling creators to explore different design goals. Photochromic color-changing systems, on the other hand, allow reprogramming the surface texture of 3D models, enabling easy customization of physical objects and opening up the possibility of using object surfaces for data display. However, existing photochromic systems require the user to manually design the desired texture, inspect the simulation of the pattern on the object, and verify the efficacy of the generated pattern. These manual design, inspection, and verification steps prevent the user from efficiently exploring the design space of possible patterns. Thus, by designing an automated workflow for the end-to-end texture application process, we can allow rapid iteration on different practicable patterns. In this workshop paper, we discuss the possibilities of extending generative AI systems with material and design constraints for reprogrammable surfaces with photochromic materials. By constraining generative AI systems to colors and materials that can be physically realized with photochromic dyes, we can create tools that would allow users to explore different viable patterns with text and image-based prompts. We identify two focus areas in this topic: photochromic material constraints and design constraints for data-encoded textures. We highlight the current limitations of using generative AI tools to create viable textures using photochromic material. Finally, we present possible approaches to augment generative AI methods to take into account the photochromic material constraints, allowing for the creation of viable photochromic textures rapidly and easily.
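One simple way to picture a material constraint on generated textures is as a post-processing step that snaps every pixel to the nearest color the dyes can actually produce. The sketch below does this with squared RGB distance. The palette values, function names, and the choice of RGB distance are assumptions for illustration; the abstract does not specify how the constraint would be enforced, and a real system might instead build it into the generation step.

```python
def nearest_achievable(color, palette):
    """Return the palette color closest to `color` in squared RGB distance."""
    return min(
        palette,
        key=lambda p: sum((a - b) ** 2 for a, b in zip(color, p)),
    )


def constrain_texture(texture, palette):
    """Snap every pixel of a 2D texture (rows of RGB tuples) to the palette."""
    return [[nearest_achievable(px, palette) for px in row] for row in texture]


# Hypothetical gamut: these RGB values are placeholders, not measured dye colors.
EXAMPLE_PALETTE = [(0, 0, 0), (255, 255, 255), (0, 255, 255), (255, 0, 255)]
```

Perceptual color spaces such as CIELAB would usually give better matches than raw RGB distance; RGB is used here only to keep the example dependency-free.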

Shaping Realities: Enhancing 3D Generative AI with Fabrication Constraints

Apr 17, 2024

Abstract: Generative AI tools are becoming more prevalent in 3D modeling, enabling users to manipulate or create new models with text or images as inputs. This makes it easier for users to rapidly customize and iterate on their 3D designs and explore new creative ideas. These methods focus on the aesthetic quality of the 3D models, refining them to look similar to the prompts provided by the user. However, when creating 3D models intended for fabrication, designers need to trade off the aesthetic qualities of a 3D model against its intended physical properties. To be functional post-fabrication, 3D models have to satisfy structural constraints informed by physical principles. Currently, such requirements are not enforced by generative AI tools. This leads to the development of aesthetically appealing but potentially non-functional 3D geometry that would be hard to fabricate and use in the real world. This workshop paper highlights the limitations of generative AI tools in translating digital creations into the physical world and proposes new augmentations to generative AI tools for creating physically viable 3D models. We advocate for the development of tools that manipulate or generate 3D models by considering not only the aesthetic appearance but also using physical properties as constraints. This exploration seeks to bridge the gap between digital creativity and real-world applicability, extending the creative potential of generative AI into the tangible domain.

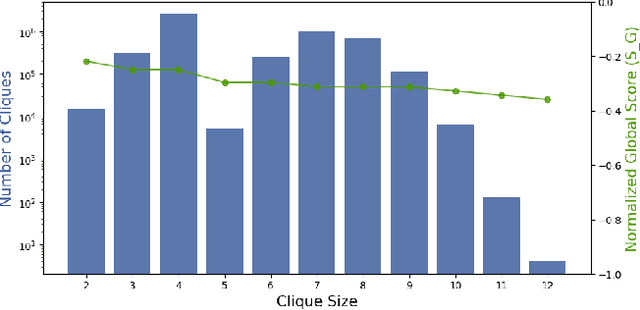

Style2Fab: Functionality-Aware Segmentation for Fabricating Personalized 3D Models with Generative AI

Sep 12, 2023

Abstract: With recent advances in Generative AI, it is becoming easier to automatically manipulate 3D models. However, current methods tend to apply edits to models globally, which risks compromising the intended functionality of the 3D model when fabricated in the physical world. For example, modifying functional segments in 3D models, such as the base of a vase, could break the original functionality of the model, thus causing the vase to fall over. We introduce a method for automatically segmenting 3D models into functional and aesthetic elements. This method allows users to selectively modify aesthetic segments of 3D models, without affecting the functional segments. To develop this method we first create a taxonomy of functionality in 3D models by qualitatively analyzing 1000 models sourced from a popular 3D printing repository, Thingiverse. With this taxonomy, we develop a semi-automatic classification method to decompose 3D models into functional and aesthetic elements. We propose a system called Style2Fab that allows users to selectively stylize 3D models without compromising their functionality. We evaluate the effectiveness of our classification method compared to human-annotated data, and demonstrate the utility of Style2Fab with a user study to show that functionality-aware segmentation helps preserve model functionality.

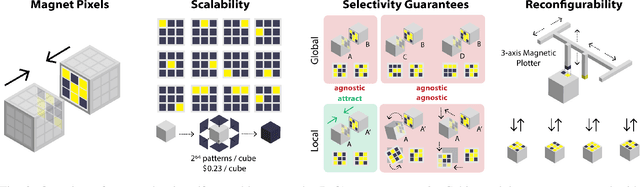

Selective Self-Assembly using Re-Programmable Magnetic Pixels

Aug 07, 2022

Abstract: This paper introduces a method to generate highly selective encodings that can be magnetically "programmed" onto physical modules to enable them to self-assemble in chosen configurations. We generate these encodings based on Hadamard matrices, and show how to design the faces of modules to be maximally attractive to their intended mate, while remaining maximally agnostic to other faces. We derive guarantees on these bounds, and verify their attraction and agnosticism experimentally. Using cubic modules whose faces have been covered in soft magnetic material, we show how inexpensive, passive modules with planar faces can be used to selectively self-assemble into target shapes without geometric guides. We show that these modules can be easily re-programmed for new target shapes using a CNC-based magnetic plotter, and demonstrate self-assembly of 8 cubes in a water tank.
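The selectivity property can be illustrated with the orthogonality of Hadamard rows. The sketch below builds a Hadamard matrix with the Sylvester construction and scores a pair of faces by the dot product of their ±1 pixel encodings. It assumes a deliberately simplified model in which the net interaction between two faces is proportional to that dot product; the paper's actual force model and pixel layout are not given in the abstract, and the function names are hypothetical.

```python
def sylvester_hadamard(n):
    """Build an n x n Hadamard matrix (n a power of two) by Sylvester doubling."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = [[1]]
    while len(h) < n:
        # Doubling step: [[H, H], [H, -H]]
        h = [row + row for row in h] + [row + [-x for x in row] for row in h]
    return h


def interaction_score(face_a, face_b):
    """Simplified score: dot product of two +1/-1 magnetic pixel encodings.

    Under the assumed model, a large magnitude means a strong net interaction
    and zero means the faces are agnostic to each other.
    """
    return sum(a * b for a, b in zip(face_a, face_b))


codes = sylvester_hadamard(8)
# A code scores n against its own pattern and 0 against every other row.
scores = [[interaction_score(a, b) for b in codes] for a in codes]
```

Because distinct rows of a Hadamard matrix are orthogonal, `scores` is 8 on the diagonal and 0 everywhere else, which is the "maximally attractive to the mate, maximally agnostic to others" pattern in this toy model.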
