Donald Degraen

TactStyle: Generating Tactile Textures with Generative AI for Digital Fabrication

Mar 03, 2025

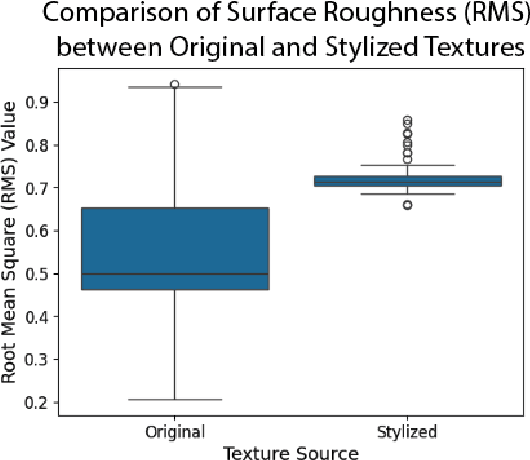

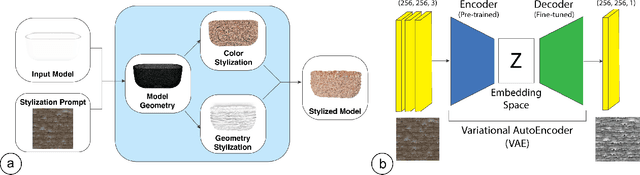

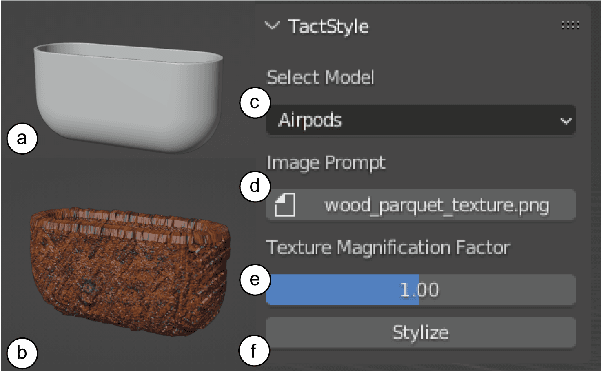

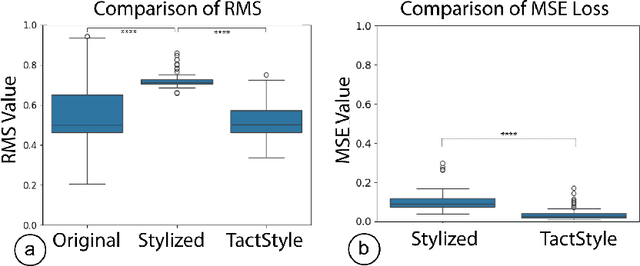

Abstract: Recent work in Generative AI enables the stylization of 3D models based on image prompts. However, these methods do not incorporate tactile information, leading to designs that lack the expected tactile properties. We present TactStyle, a system that allows creators to stylize 3D models with images while incorporating the expected tactile properties. TactStyle accomplishes this using a modified image-generation model fine-tuned to generate heightfields for given surface textures. By optimizing 3D model surfaces to embody a generated texture, TactStyle creates models that match the desired style and replicate the tactile experience. We utilize a large-scale dataset of textures to train our texture generation model. In a psychophysical experiment, we evaluate the tactile qualities of a set of 3D-printed original textures and TactStyle's generated textures. Our results show that TactStyle successfully generates a wide range of tactile features from a single image input, enabling a novel approach to haptic design.

Mixing realities for sketch retrieval in Virtual Reality

Nov 05, 2019

Abstract: Drawing tools for Virtual Reality (VR) enable users to model 3D designs from within the virtual environment itself. These tools employ sketching and sculpting techniques known from desktop-based interfaces and apply them to hand-based controller interaction. While these techniques allow for mid-air sketching of basic shapes, it remains difficult for users to create detailed and comprehensive 3D models. In our work, we focus on supporting the user in designing the virtual environment around them by enhancing sketch-based interfaces with a supporting system for interactive model retrieval. Through sketching, an immersed user can query a database containing detailed 3D models and place them into the virtual environment. To understand supportive sketching within a virtual environment, we compare different methods of sketch interaction, i.e., 3D mid-air sketching, 2D sketching on a virtual tablet, 2D sketching on a fixed virtual whiteboard, and 2D sketching on a real tablet. Our results show that 3D mid-air sketching is considered to be a more intuitive method to search a collection of models, while the addition of physical devices creates confusion due to the complications of their inclusion within a virtual environment. While we pose our work as a retrieval problem for 3D models of chairs, our results can be extrapolated to other sketching tasks for virtual environments.
