Fang Hu

Moore Threads

Advanced Multi-Microscopic Views Cell Semi-supervised Segmentation

Mar 21, 2023

Abstract: Although deep learning (DL) shows strong potential in cell segmentation tasks, it suffers from poor generalization, because DL-based methods originally simplified cell segmentation to detecting the cell membrane boundary and lacked prominent cellular structures for locating and differentiating cells as a whole. Moreover, the scarcity of annotated cell images limits the performance of DL models. Segmentation that is limited to a single category of cells is difficult to apply at scale, let alone across varied modalities. In this paper, we introduce a novel semi-supervised cell segmentation method called Multi-Microscopic-view Cell semi-supervised Segmentation (MMCS), which can train cell segmentation models well using fewer labeled multi-posture cell images acquired with different microscopy. Technically, MMCS consists of Nucleus-assisted global recognition, a Self-adaptive diameter filter, and Temporal-ensembling models. Nucleus-assisted global recognition adds an additional cell nucleus channel to improve the global distinguishing performance on fuzzy cell membrane boundaries, even when cells aggregate. In addition, the self-adaptive cell diameter filter helps properly separate multi-resolution cells with different morphology. MMCS further leverages the temporal-ensembling models to improve the semi-supervised training process, achieving effective training with less labeled data. Optimizing the weight that the unlabeled loss contributes to the total loss also improves model performance. Evaluated on the Tuning Set of the NeurIPS 2022 Cell Segmentation Challenge (NeurIPS CellSeg), MMCS achieves an F1-score of 0.8239, and the running time for all cases is within the time tolerance.
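The abstract does not spell out how the temporal-ensembling models or the unlabeled-loss weight are implemented in MMCS. As a rough illustration only, the sketch below follows the generic temporal-ensembling recipe: an exponential moving average of per-sample predictions with startup bias correction, plus a Gaussian ramp-up for the unlabeled-loss weight. All names and default values (`alpha`, `rampup_epochs`, `max_weight`) are assumptions for illustration, not values taken from the paper.

```python
import math


def rampup_weight(epoch, rampup_epochs=80, max_weight=1.0):
    """Gaussian ramp-up for the unlabeled-loss weight (hypothetical defaults)."""
    if rampup_epochs <= 0:
        return max_weight
    # Clamp training progress to [0, 1].
    t = min(max(epoch / rampup_epochs, 0.0), 1.0)
    return max_weight * math.exp(-5.0 * (1.0 - t) ** 2)


def update_ensemble(ensemble, prediction, epoch, alpha=0.6):
    """Update the moving average of predictions for one sample.

    `epoch` is 1-indexed. Returns the new accumulator and the
    bias-corrected target used by the consistency (unlabeled) loss.
    """
    new_ensemble = [alpha * z + (1.0 - alpha) * p
                    for z, p in zip(ensemble, prediction)]
    # Correct the startup bias caused by the zero-initialised accumulator.
    correction = 1.0 - alpha ** epoch
    target = [z / correction for z in new_ensemble]
    return new_ensemble, target


def total_loss(supervised, unsupervised, epoch,
               rampup_epochs=80, max_weight=1.0):
    """Combine the labeled loss with the weighted unlabeled loss."""
    w = rampup_weight(epoch, rampup_epochs, max_weight)
    return supervised + w * unsupervised
```

In this formulation, `max_weight` is the quantity one would tune when optimizing how much the unlabeled loss contributes to the total loss; the ramp-up keeps that contribution small while the ensemble targets are still unreliable early in training.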

Transferable Active Grasping and Real Embodied Dataset

Apr 28, 2020

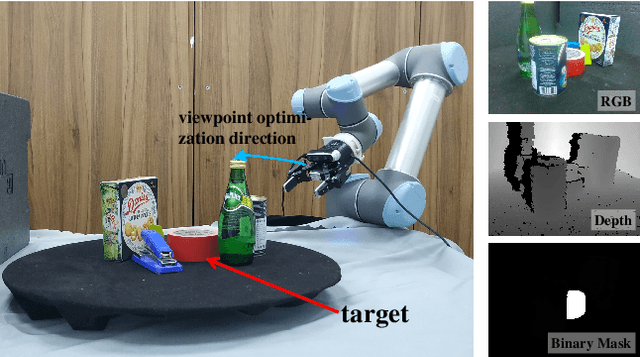

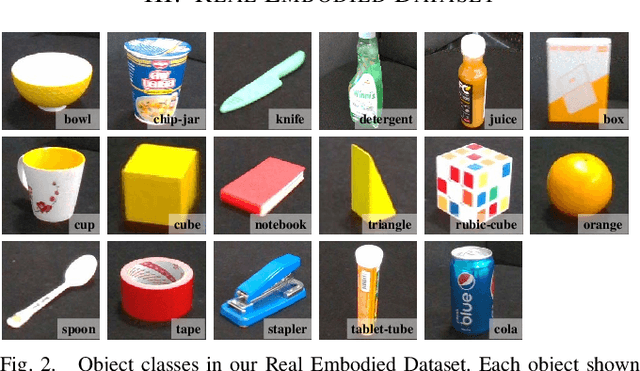

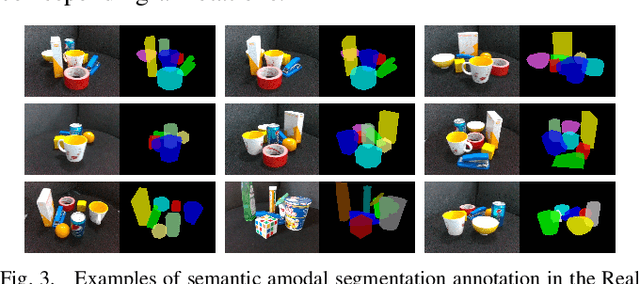

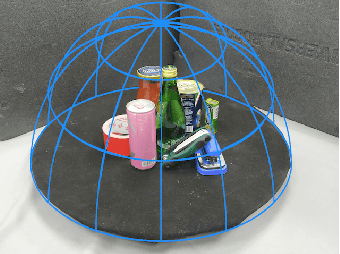

Abstract: Grasping in cluttered scenes is challenging for robot vision systems, as detection accuracy can be hindered by partial occlusion of objects. We adopt a reinforcement learning (RL) framework and 3D vision architectures to search for feasible grasping viewpoints using hand-mounted RGB-D cameras. To overcome the disadvantages of photo-realistic environment simulation, we propose a large-scale dataset called Real Embodied Dataset (RED), which includes full-viewpoint real samples on the upper hemisphere with amodal annotation and enables a simulator that has real visual feedback. Based on this dataset, we develop a practical 3-stage transferable active grasping pipeline that adapts to unseen cluttered scenes. In our pipeline, we propose a novel mask-guided reward to overcome the sparse reward issue in grasping and ensure category-irrelevant behavior. The grasping pipeline and its possible variants are evaluated with extensive experiments both in simulation and on a real-world UR-5 robotic arm.
