Fanfei Meng

Inaccuracy of an E-Dictionary and Its Influence on Chinese Language Users

Apr 01, 2025

Abstract:Electronic dictionaries have largely replaced paper dictionaries and become central tools for L2 learners seeking to expand their vocabulary. Users often assume these resources are reliable and rarely question the validity of the definitions provided. The accuracy of major E-dictionaries is seldom scrutinized, and little attention has been paid to how their corpora are constructed. Research on dictionary use, particularly the limitations of electronic dictionaries, remains scarce. This study adopts a combined method of experimentation, user survey, and dictionary critique to examine Youdao, one of the most widely used E-dictionaries in China. The experiment involved a translation task paired with retrospective reflection. Participants were asked to translate sentences containing words that are insufficiently or inaccurately defined in Youdao. Their consultation behavior was recorded to analyze how faulty definitions influenced comprehension. Results show that incomplete or misleading definitions can cause serious misunderstandings. Additionally, students exhibited problematic consultation habits. The study further explores how such flawed definitions originate, highlighting issues in data processing and the integration of AI and machine learning technologies in dictionary construction. The findings suggest a need for better training in dictionary literacy for users, as well as improvements in the underlying AI models used to build E-dictionaries.

Hybrid FedGraph: An efficient hybrid federated learning algorithm using graph convolutional neural network

Apr 15, 2024

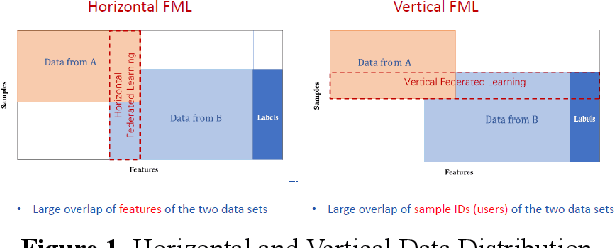

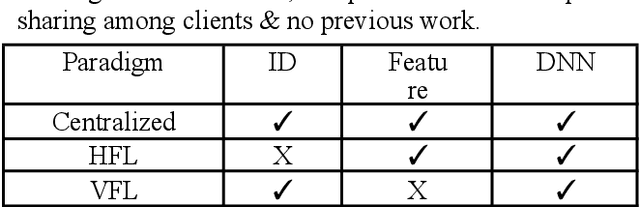

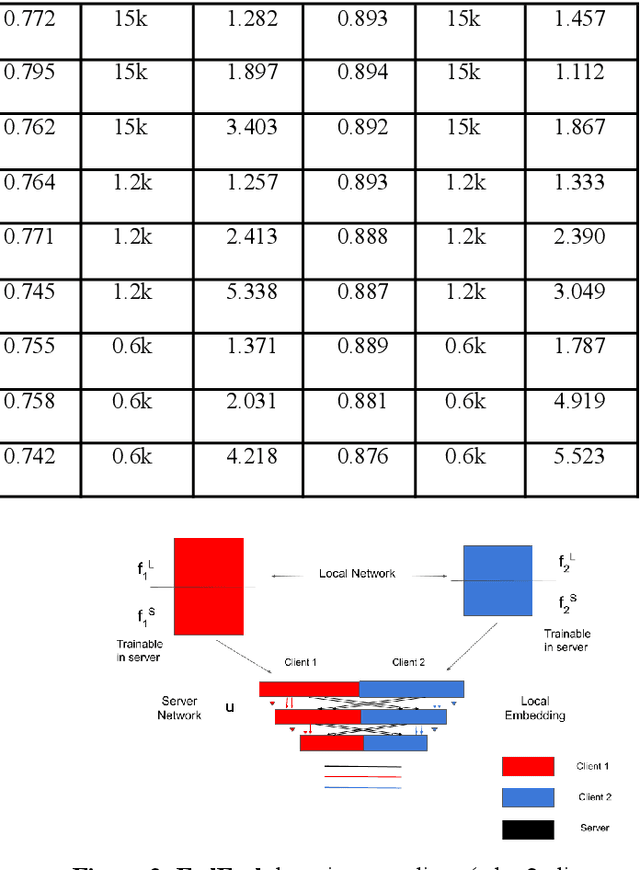

Abstract:Federated learning is an emerging paradigm for decentralized training of machine learning models on distributed clients, without revealing the data to the central server. Most existing works have focused on horizontal or vertical data distributions, where each client possesses different samples with shared features, or each client fully shares only sample indices, respectively. However, the hybrid scheme is much less studied, even though it is much more common in the real world. Therefore, in this paper, we propose a generalized algorithm, FedGraph, that introduces a graph convolutional neural network to capture feature-sharing information while learning features from a subset of clients. We also develop a simple but effective clustering algorithm that aggregates features produced by the deep neural networks of each client while preserving data privacy.

Sample-based Dynamic Hierarchical Transformer with Layer and Head Flexibility via Contextual Bandit

Dec 12, 2023

Abstract:Transformers require a fixed number of layers and heads, which makes them inflexible to the complexity of individual samples and expensive in training and inference. To address this, we propose a sample-based Dynamic Hierarchical Transformer (DHT) model whose layers and heads can be dynamically configured for single data samples by solving contextual bandit problems. To determine the number of layers and heads, we use the Upper Confidence Bound (UCB), while we deploy combinatorial Thompson Sampling to select specific head combinations given their number. Unlike previous work that focuses on compressing trained networks for inference only, DHT not only adaptively optimizes the underlying network architecture during training but also provides a flexible network for efficient inference. To the best of our knowledge, this is the first comprehensive data-driven dynamic transformer that implements the dynamic system without any additional auxiliary neural networks. According to the experimental results, we achieve up to 74% computational savings for both training and inference with a minimal loss of accuracy.
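As a rough illustration of the confidence-bound selection mentioned in the abstract, the sketch below uses plain (non-contextual) UCB1 to choose among candidate layer counts. It is not the paper's contextual formulation: the function name `ucb1_select`, the `exploration` constant, and the reward bookkeeping are all assumptions made for illustration.

```python
import math

def ucb1_select(counts, mean_rewards, exploration=2.0):
    """Pick an arm index with the UCB1 rule (hypothetical helper).

    counts[i]       -- how many times arm i has been tried so far
    mean_rewards[i] -- average reward observed for arm i
    Each arm could stand for one candidate number of layers or heads.
    """
    # Try every arm once before trusting the confidence bounds.
    for arm, n in enumerate(counts):
        if n == 0:
            return arm
    total = sum(counts)
    # Score = observed mean + bonus that shrinks as the arm is tried more.
    scores = [
        mean + math.sqrt(exploration * math.log(total) / n)
        for mean, n in zip(mean_rewards, counts)
    ]
    return max(range(len(scores)), key=scores.__getitem__)

# Example: arms stand for 2, 4, or 6 layers (assumed candidates).
layer_options = [2, 4, 6]
chosen = ucb1_select(counts=[10, 10, 10], mean_rewards=[0.2, 0.8, 0.5])
print(layer_options[chosen])
```

With equal trial counts the exploration bonus is identical for every arm, so the arm with the highest observed mean is chosen; a rarely tried arm receives a larger bonus and can be selected despite a lower mean.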

FedEmb: A Vertical and Hybrid Federated Learning Algorithm using Network And Feature Embedding Aggregation

Dec 04, 2023

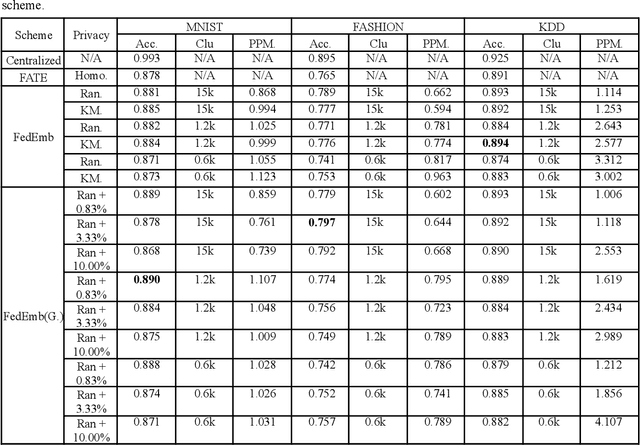

Abstract:Federated learning (FL) is an emerging paradigm for decentralized training of machine learning models on distributed clients, without revealing the data to the central server. The learning scheme may be horizontal, vertical or hybrid (both vertical and horizontal). Most existing research on deep neural network (DNN) modelling focuses on horizontal data distributions, while vertical and hybrid schemes are much less studied. In this paper, we propose a generalized algorithm, FedEmb, for modelling vertical and hybrid DNN-based learning. Our algorithm is characterised by higher inference accuracy, stronger privacy-preserving properties, and lower client-server communication bandwidth demands than existing work. The experimental results show that FedEmb effectively tackles problems decentralized over both split feature and subject spaces: it improves inference accuracy by 0.3% to 4.2% with limited privacy leakage for datasets stored on local clients, and reduces time complexity by 88.9% relative to the vertical baseline method.

* Accepted by Proceedings on Engineering Sciences

Joint Detection Algorithm for Multiple Cognitive Users in Spectrum Sensing

Dec 01, 2023

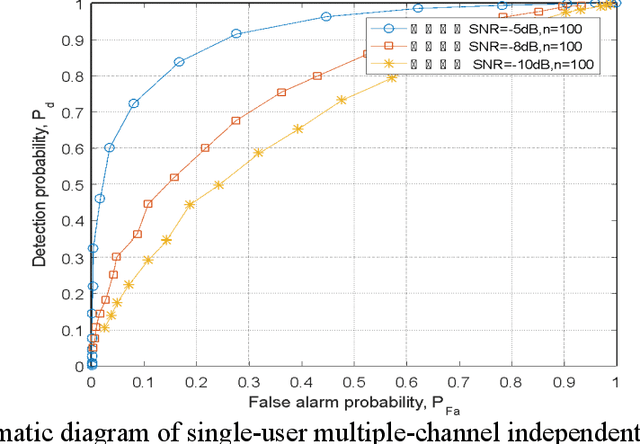

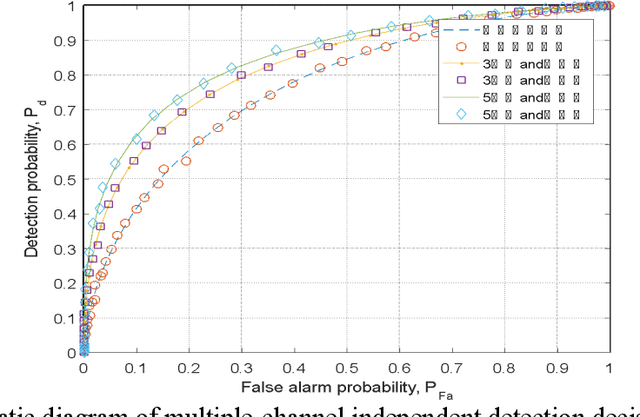

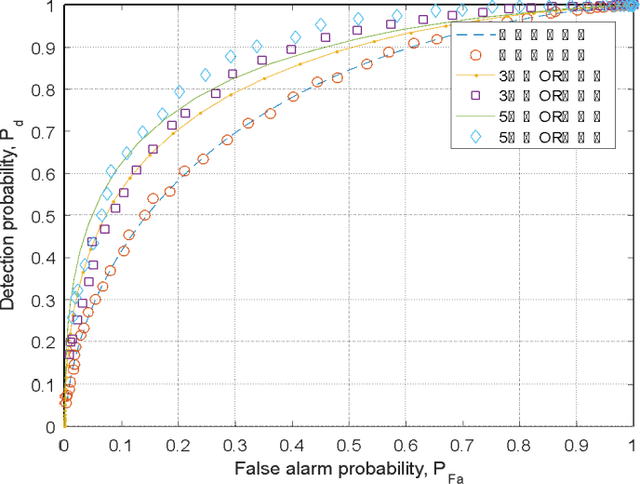

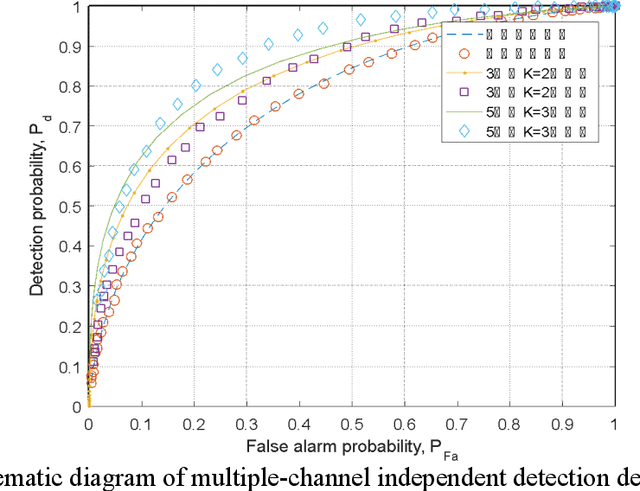

Abstract:Spectrum sensing is a crucial aspect of modern communication technology and one of the essential techniques for efficiently utilizing scarce spectrum resources in crowded frequency bands. This paper first introduces three common logic-circuit decision criteria used in hard decisions and analyzes how strict each criterion is. Building upon hard decisions, the paper then introduces a method for multi-user spectrum sensing based on soft decisions, and simulates the false alarm probability and detection probability curves corresponding to the three criteria. The simulated results indicate that multi-user collaborative sensing significantly reduces false alarm probability and enhances detection probability. This approach effectively detects spectrum resources that are unoccupied during idle periods, leveraging the concept of time-division multiplexing to redistribute information resources rationally. The entire computation relies on the principles of power spectral density in communication theory, applying threshold decision detection to the noise power and to the sum of noise and signal power. It provides a secondary decision detection that reflects, with relative accuracy, the sensing decision performance of the logical detection methods.
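The abstract does not name its three hard-decision criteria. A minimal sketch, assuming they are the AND, OR, and majority rules often used for cooperative sensing, and assuming every user has the same independent per-user probability `p`, computes the fused probability as a k-out-of-N binomial tail. The function names below are hypothetical.

```python
from math import comb

def fused_probability(p, n_users, k):
    """Probability that at least k of n_users independent sensors report
    'occupied', when each does so with probability p (k-out-of-N rule)."""
    return sum(
        comb(n_users, i) * p**i * (1 - p) ** (n_users - i)
        for i in range(k, n_users + 1)
    )

def fusion_rules(p, n_users):
    """Fused probability under three assumed hard-decision rules."""
    return {
        "OR": fused_probability(p, n_users, 1),                   # any user fires
        "AND": fused_probability(p, n_users, n_users),            # all users fire
        "MAJORITY": fused_probability(p, n_users, n_users // 2 + 1),
    }

# Feed in a per-user detection probability to get fused detection
# probabilities, or a per-user false alarm probability to get fused
# false alarm probabilities.
print(fusion_rules(0.5, 3))
```

Under these assumptions the OR rule is the most permissive (highest detection and highest false alarm probability), the AND rule is the strictest, and the majority rule sits between them.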

* https://aei.ewapublishing.org/article.html?pk=e24c40d220434209ae2fe2e984bcf2c2

Sentiment analysis with adaptive multi-head attention in Transformer

Oct 23, 2023

Abstract:We propose a novel framework based on the attention mechanism to identify the sentiment of a movie review document. Previous efforts on deep neural networks with attention mechanisms focus on encoders and decoders with a fixed number of attention heads. We therefore need a mechanism that stops the attention process automatically once no more useful information can be read from the memory. In this paper, we propose an adaptive multi-head attention architecture (AdaptAttn) that varies the number of attention heads based on sentence length. AdaptAttn has a data preprocessing step in which each document is classified into one of three bins (small, medium, or large) based on sentence length. A document classified as small goes through two heads in each layer, the medium group passes through four heads, and the large group is processed by eight heads. We examine the merit of our model on the Stanford large movie review dataset. The experimental results show that the F1 score of our model is on par with the baseline model.
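The binning step described above can be sketched as a simple length-to-heads lookup. The head counts (2, 4, 8) come from the abstract; the length thresholds `small_max` and `medium_max` and the function name are assumptions, since the abstract does not state where the bin boundaries lie.

```python
def heads_for_document(num_tokens, small_max=100, medium_max=300):
    """Map a document length to an attention-head count.

    small_max and medium_max are hypothetical bin boundaries;
    the 2 / 4 / 8 head counts follow the abstract.
    """
    if num_tokens <= small_max:
        return 2   # "small" bin
    if num_tokens <= medium_max:
        return 4   # "medium" bin
    return 8       # "large" bin

# Example: route three reviews of different lengths.
for length in (40, 180, 950):
    print(length, heads_for_document(length))
```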

Model-based Reinforcement Learning for Service Mesh Fault Resiliency in a Web Application-level

Oct 21, 2021

Abstract:Microservice-based architectures enable different aspects of web applications to be created and updated independently, even after deployment. Associated technologies such as service mesh provide application-level fault resilience through attribute configurations that govern the behavior of request-response services, and the interactions among them, in the presence of failures. While this provides tremendous flexibility, the configured values of these attributes, and the relationships among them, can significantly affect the performance and fault resilience of the overall application. Furthermore, it is impossible to determine the best and worst combinations of attribute values with respect to fault resiliency via testing, due to the complexities of the underlying distributed system and the many possible attribute value combinations. In this paper, we present a model-based reinforcement learning workflow for service mesh fault resiliency. Our approach enables the prediction of the most significant fault resilience behaviors at the web application level, scaling from a single service to aggregated multi-service management with efficient agent collaboration.
