Erion Plaku

Combining Machine Learning and Sampling-Based Search for Multi-Goal Motion Planning with Dynamics

Mar 26, 2025

Abstract:This paper considers multi-goal motion planning in unstructured, obstacle-rich environments where a robot is required to reach multiple regions while avoiding collisions. The planned motions must also satisfy the differential constraints imposed by the robot dynamics. To find solutions efficiently, this paper leverages machine learning, a Traveling Salesman Problem (TSP) solver, and sampling-based motion planning. The approach expands a motion tree by adding collision-free and dynamically-feasible trajectories as branches. For each node, a TSP solver uses a cost matrix to compute a tour that determines the order in which to reach the remaining goals. An important aspect of the approach is that it leverages machine learning to construct the cost matrix by combining runtime and distance predictions for single-goal motion-planning problems. During the motion-tree expansion, priority is given to nodes associated with low-cost tours. Experiments with a vehicle model operating in obstacle-rich environments demonstrate the computational efficiency and scalability of the approach.
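As a rough illustration of the cost-matrix idea in this abstract, the sketch below blends predicted runtime and predicted distance into one cost per goal pair and then picks the cheapest visiting order for the remaining goals. It is not the paper's implementation: the function names, the weight `alpha`, the linear blend, and the exhaustive search (standing in for a real TSP solver) are all assumptions made for the example, and the predictions are plain numbers rather than outputs of a learned model.

```python
from itertools import permutations


def build_cost_matrix(pred_runtime, pred_distance, alpha=0.5):
    """Blend predicted runtime and distance into a single cost per pair.

    alpha is a hypothetical weight; the abstract does not say how the
    two predictions are combined.
    """
    n = len(pred_runtime)
    return [
        [alpha * pred_runtime[i][j] + (1 - alpha) * pred_distance[i][j]
         for j in range(n)]
        for i in range(n)
    ]


def best_tour(cost, start, remaining):
    """Exhaustively search visiting orders of the remaining goals.

    Stand-in for a TSP solver; only practical for a handful of goals.
    Returns the cheapest order and its total cost (open tour from start).
    """
    best_order, best_cost = (), float("inf")
    for order in permutations(remaining):
        total, prev = 0.0, start
        for goal in order:
            total += cost[prev][goal]
            prev = goal
        if total < best_cost:
            best_order, best_cost = order, total
    return list(best_order), best_cost


if __name__ == "__main__":
    # Index 0 is the tree node's region; 1 and 2 are remaining goals.
    runtime = [[0, 1, 4], [1, 0, 1], [4, 1, 0]]
    distance = [[0, 2, 8], [2, 0, 2], [8, 2, 0]]
    cost = build_cost_matrix(runtime, distance)
    print(best_tour(cost, start=0, remaining=[1, 2]))
```

In a planner of the kind described, the tour cost computed this way would be the quantity used to prioritize which tree node to expand next.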

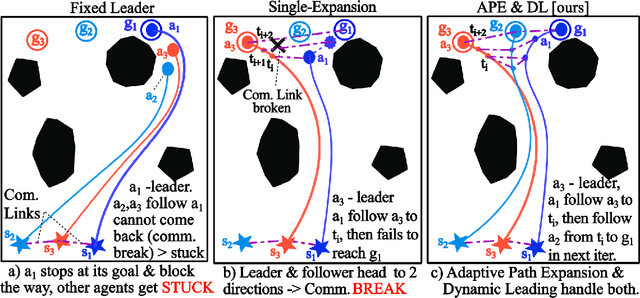

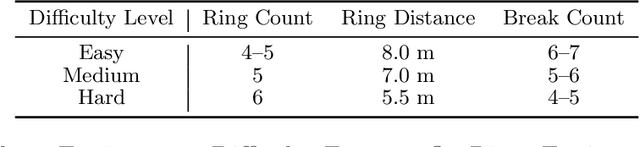

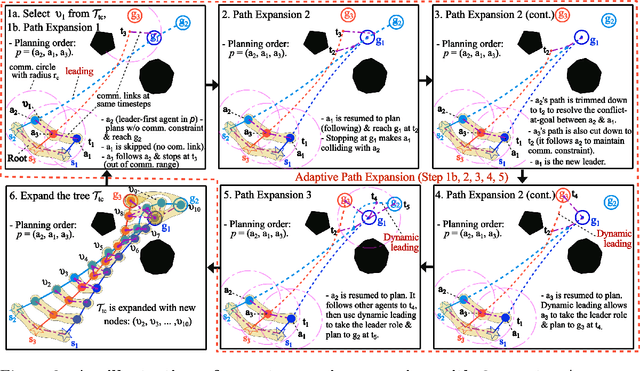

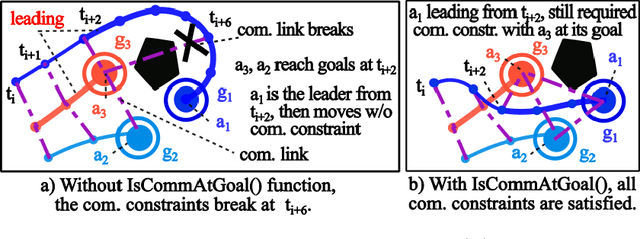

Multi-Agent Path Finding under Limited Communication Range Constraint via Dynamic Leading

Jan 06, 2025

Abstract:This paper proposes a novel framework to handle a multi-agent path finding problem under a limited communication range constraint, where all agents must have a connected communication channel to the rest of the team. Many existing approaches to multi-agent path finding (e.g., leader-follower platooning) overcome computational challenges of planning in this domain by planning one agent at a time in a fixed order. However, fixed leader-follower approaches can become stuck during planning, limiting their practical utility in dense-clutter environments. To overcome this limitation, we develop dynamic leading multi-agent path finding, which allows for dynamic reselection of the leading agent during path planning whenever progress cannot be made. The experiments show the efficiency of our framework, which can handle up to 25 agents with more than 90% success-rate across five environment types where baselines routinely fail.

Evaluating Vision-Language Models as Evaluators in Path Planning

Nov 27, 2024

Abstract:Despite their promise to perform complex reasoning, large language models (LLMs) have been shown to have limited effectiveness in end-to-end planning. This has inspired an intriguing question: if these models cannot plan well, can they still contribute to the planning framework as a helpful plan evaluator? In this work, we generalize this question to consider LLMs augmented with visual understanding, i.e., Vision-Language Models (VLMs). We introduce PathEval, a novel benchmark evaluating VLMs as plan evaluators in complex path-planning scenarios. Succeeding in the benchmark requires a VLM to be able to abstract traits of optimal paths from the scenario description, demonstrate precise low-level perception on each path, and integrate this information to decide the better path. Our analysis of state-of-the-art VLMs reveals that these models face significant challenges on the benchmark. We observe that the VLMs can precisely abstract given scenarios to identify the desired traits and exhibit mixed performance in integrating the provided information. Yet, their vision component presents a critical bottleneck, with models struggling to perceive low-level details about a path. Our experimental results show that this issue cannot be trivially addressed via end-to-end fine-tuning; rather, task-specific discriminative adaptation of these vision encoders is needed for these VLMs to become effective path evaluators.

Multi-Goal Motion Memory

Jul 16, 2024

Abstract:Autonomous mobile robots (e.g., warehouse logistics robots) often need to traverse complex, obstacle-rich, and changing environments to reach multiple fixed goals (e.g., warehouse shelves). Traditional motion planners need to calculate the entire multi-goal path from scratch in response to changes in the environment, which results in a large consumption of computing resources. This process is not only time-consuming but also may not meet real-time requirements in application scenarios that require rapid response to environmental changes. In this paper, we provide a novel Multi-Goal Motion Memory technique that allows robots to use previous planning experiences to accelerate future multi-goal planning in changing environments. Specifically, our technique predicts collision-free and dynamically-feasible trajectories and distances between goal pairs to guide the sampling process to build a roadmap, to inform a Traveling Salesman Problem (TSP) solver to compute a tour, and to efficiently produce motion plans. Experiments conducted with a vehicle and a snake-like robot in obstacle-rich environments show that the proposed Motion Memory technique can accelerate planning by up to 90%. Furthermore, the solution quality is comparable to state-of-the-art algorithms and even better in some environments.

Look Further Ahead: Testing the Limits of GPT-4 in Path Planning

Jun 17, 2024

Abstract:Large Language Models (LLMs) have shown impressive capabilities across a wide variety of tasks. However, they still face challenges with long-horizon planning. To study this, we propose path planning tasks as a platform to evaluate LLMs' ability to navigate long trajectories under geometric constraints. Our proposed benchmark systematically tests path-planning skills in complex settings. Using this, we examined GPT-4's planning abilities using various task representations and prompting approaches. We found that framing prompts as Python code and decomposing long trajectory tasks improve GPT-4's path planning effectiveness. However, while these approaches show some promise toward improving the planning ability of the model, they do not obtain optimal paths and fail at generalizing over extended horizons.

Motion Memory: Leveraging Past Experiences to Accelerate Future Motion Planning

Oct 09, 2023

Abstract:When facing a new motion-planning problem, most motion planners solve it from scratch, e.g., via sampling and exploration or starting optimization from a straight-line path. However, most motion planners have to experience a variety of planning problems throughout their lifetimes, which are yet to be leveraged for future planning. In this paper, we present a simple but efficient method called Motion Memory, which allows different motion planners to accelerate future planning using past experiences. Treating existing motion planners as either a closed or open box, we present a variety of ways that Motion Memory can contribute to reducing the planning time when facing a new planning problem. We provide extensive experimental results with three different motion planners on three classes of planning problems with over 30,000 problem instances and show that planning time can be reduced by up to 89% with the proposed Motion Memory technique and with increasing past planning experiences.

Can Large Language Models be Good Path Planners? A Benchmark and Investigation on Spatial-temporal Reasoning

Oct 05, 2023

Abstract:Large language models (LLMs) have achieved remarkable success across a wide spectrum of tasks; however, they still face limitations in scenarios that demand long-term planning and spatial reasoning. To facilitate this line of research, in this work, we propose a new benchmark, termed Path Planning from Natural Language (PPNL). Our benchmark evaluates LLMs' spatial-temporal reasoning by formulating "path planning" tasks that require an LLM to navigate to target locations while avoiding obstacles and adhering to constraints. Leveraging this benchmark, we systematically investigate LLMs including GPT-4 via different few-shot prompting methodologies and BART and T5 of various sizes via fine-tuning. Our experimental results show the promise of few-shot GPT-4 in spatial reasoning when it is prompted to interleave reasoning and acting, although it still fails at long-term temporal reasoning. In contrast, while fine-tuned LLMs achieved impressive results on in-distribution reasoning tasks, they struggled to generalize to larger environments or environments with more obstacles.

Guided Sampling-Based Motion Planning with Dynamics in Unknown Environments

Jun 15, 2023

Abstract:Despite recent progress improving the efficiency and quality of motion planning, planning collision-free and dynamically-feasible trajectories in partially-mapped environments remains challenging, since constantly replanning as unseen obstacles are revealed during navigation both incurs significant computational expense and can introduce problematic oscillatory behavior. To improve the quality of motion planning in partial maps, this paper develops a framework that augments sampling-based motion planning to leverage a high-level discrete layer and prior solutions to guide motion-tree expansion during replanning, affording both (i) faster planning and (ii) improved solution coherence. Our framework shows significant improvements in runtime and solution distance when compared with other sampling-based motion planners.
