Erik Weber

A Comprehensive Survey on Legal Summarization: Challenges and Future Directions

Jan 29, 2025

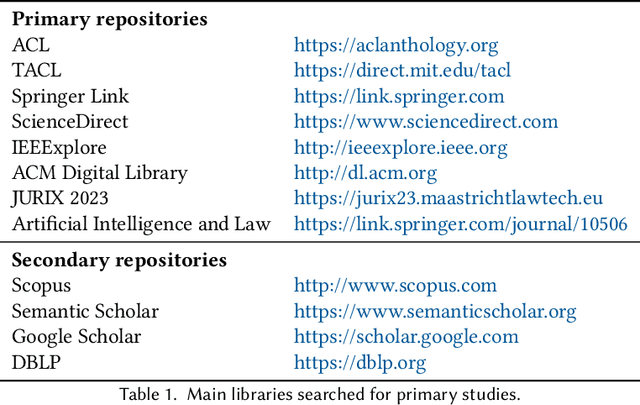

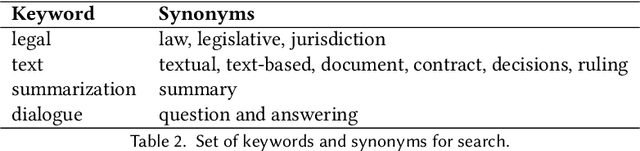

Abstract: This article provides a systematic, up-to-date survey of automatic summarization techniques, datasets, models, and evaluation methods in the legal domain. Through specific source selection criteria, we thoroughly review over 120 papers spanning the modern "transformer" era of natural language processing (NLP), thus filling a gap in existing systematic surveys on the matter. We present existing research along several axes and discuss trends, challenges, and opportunities for future research.

Large Physics Models: Towards a collaborative approach with Large Language Models and Foundation Models

Jan 09, 2025

Abstract: This paper explores ideas and provides a potential roadmap for the development and evaluation of physics-specific large-scale AI models, which we call Large Physics Models (LPMs). These models, based on foundation models such as Large Language Models (LLMs) - trained on broad data - are tailored to address the demands of physics research. LPMs can function independently or as part of an integrated framework. This framework can incorporate specialized tools, including symbolic reasoning modules for mathematical manipulations, frameworks to analyse specific experimental and simulated data, and mechanisms for synthesizing theories and scientific literature. We begin by examining whether the physics community should actively develop and refine dedicated models, rather than relying solely on commercial LLMs. We then outline how LPMs can be realized through interdisciplinary collaboration among experts in physics, computer science, and philosophy of science. To integrate these models effectively, we identify three key pillars: Development, Evaluation, and Philosophical Reflection. Development focuses on constructing models capable of processing physics texts, mathematical formulations, and diverse physical data. Evaluation assesses accuracy and reliability by testing and benchmarking. Finally, Philosophical Reflection encompasses the analysis of broader implications of LLMs in physics, including their potential to generate new scientific understanding and what novel collaboration dynamics might arise in research. Inspired by the organizational structure of experimental collaborations in particle physics, we propose a similarly interdisciplinary and collaborative approach to building and refining Large Physics Models. This roadmap provides specific objectives, defines pathways to achieve them, and identifies challenges that must be addressed to realise physics-specific large-scale AI models.

Is GPT-4 Less Politically Biased than GPT-3.5? A Renewed Investigation of ChatGPT's Political Biases

Oct 28, 2024

Abstract: This work investigates the political biases and personality traits of ChatGPT, specifically comparing GPT-3.5 to GPT-4. In addition, the ability of the models to emulate political viewpoints (e.g., liberal or conservative positions) is analyzed. The Political Compass Test and the Big Five Personality Test were administered 100 times for each scenario, providing statistically significant results and insight into the correlations among them. The responses were analyzed by computing averages and standard deviations and by performing significance tests to investigate differences between GPT-3.5 and GPT-4. Correlations were found for traits that have been shown to be interdependent in human studies. Both models showed a progressive and libertarian political bias, with GPT-4's biases being slightly, but negligibly, less pronounced. Specifically, on the Political Compass, GPT-3.5 scored -6.59 on the economic axis and -6.07 on the social axis, whereas GPT-4 scored -5.40 and -4.73. In contrast to GPT-3.5, GPT-4 showed a remarkable capacity to emulate assigned political viewpoints, accurately reflecting the assigned quadrant (libertarian-left, libertarian-right, authoritarian-left, authoritarian-right) in all four tested instances. On the Big Five Personality Test, GPT-3.5 showed highly pronounced Openness and Agreeableness traits (O: 85.9%, A: 84.6%). Such pronounced traits correlate with libertarian views in human studies. While GPT-4 overall exhibited less pronounced Big Five personality traits, it did show a notably higher Neuroticism score. Assigned political orientations influenced Openness, Agreeableness, and Conscientiousness, again reflecting interdependencies observed in human studies. Finally, we observed that test sequencing affected ChatGPT's responses and the observed correlations, indicating a form of contextual memory.

Behind the Screen: Investigating ChatGPT's Dark Personality Traits and Conspiracy Beliefs

Feb 06, 2024

Abstract: ChatGPT is notorious for its opaque behavior. This paper tries to shed light on this, providing an in-depth analysis of the dark personality traits and conspiracy beliefs of GPT-3.5 and GPT-4. Different psychological tests and questionnaires were employed, including the Dark Factor Test, the Mach-IV Scale, the Generic Conspiracy Belief Scale, and the Conspiracy Mentality Scale. The responses were analyzed by computing average scores and standard deviations and by performing significance tests to investigate differences between GPT-3.5 and GPT-4. For traits that have been shown to be interdependent in human studies, correlations were considered. Additionally, system roles corresponding to groups that have shown distinct answering behavior in the corresponding questionnaires were applied to examine the models' ability to reflect characteristics associated with these roles in their responses. Dark personality traits and conspiracy beliefs were not particularly pronounced in either model, with little difference between GPT-3.5 and GPT-4. However, GPT-4 showed a pronounced tendency to believe in information withholding. This is particularly intriguing given that GPT-4 is trained on a significantly larger dataset than GPT-3.5. Apparently, in this case, increased data exposure correlates with a greater belief in the control of information. Assigning extreme political affiliations increased the belief in conspiracy theories. Test sequencing affected the models' responses and the observed correlations, indicating a form of contextual memory.
