Erick Mas

Streamlining Forest Wildfire Surveillance: AI-Enhanced UAVs Utilizing the FLAME Aerial Video Dataset for Lightweight and Efficient Monitoring

Aug 31, 2024

Abstract: In recent years, unmanned aerial vehicles (UAVs) have played an increasingly crucial role in supporting disaster emergency response efforts by analyzing aerial images. While current deep-learning models focus on improving accuracy, they often overlook the limited computing resources of UAVs. This study recognizes the imperative for real-time data processing in disaster response scenarios and introduces a lightweight and efficient approach for aerial video understanding. Our methodology identifies redundant portions within the video through policy networks and eliminates this excess information using frame compression techniques. Additionally, we introduce the concept of a 'station point,' which leverages future information in the sequential policy network, thereby enhancing accuracy. To validate our method, we employed the wildfire FLAME dataset. Compared to the baseline, our approach reduces computation costs by more than 13 times while boosting accuracy by 3%. Moreover, our method can intelligently select salient frames from the video, refining the dataset. This feature enables sophisticated models to be effectively trained on a smaller dataset, significantly reducing the time spent during the training process.
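To make the idea of dropping redundant frames concrete, here is a minimal sketch. It is not the paper's method: the learned policy network is replaced by a plain frame-difference threshold, and the names `mean_abs_diff`, `select_salient_frames`, and `threshold` are hypothetical, introduced only for illustration. Frames are assumed to be flat lists of grayscale pixel values of equal length.

```python
def mean_abs_diff(frame_a, frame_b):
    """Mean absolute pixel difference between two equally sized frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def select_salient_frames(frames, threshold=10.0):
    """Return indices of frames that differ enough from the last kept frame.

    Assumption: a fixed difference threshold stands in for the learned
    policy network described in the abstract.
    """
    kept = []
    last_kept = None
    for idx, frame in enumerate(frames):
        # Always keep the first frame; afterwards keep only frames that
        # changed noticeably relative to the most recently kept one.
        if last_kept is None or mean_abs_diff(frame, last_kept) > threshold:
            kept.append(idx)
            last_kept = frame
    return kept


frames = [
    [0, 0, 0, 0],
    [1, 0, 0, 1],          # nearly identical to frame 0, so skipped
    [100, 100, 100, 100],  # large change, so kept
    [101, 100, 99, 100],   # nearly identical to frame 2, so skipped
]
kept_indices = select_salient_frames(frames)
```

Only the kept frames would then be passed to the heavier recognition model, which is where the computational saving in such a scheme would come from.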

Towards Efficient Disaster Response via Cost-effective Unbiased Class Rate Estimation through Neyman Allocation Stratified Sampling Active Learning

May 28, 2024

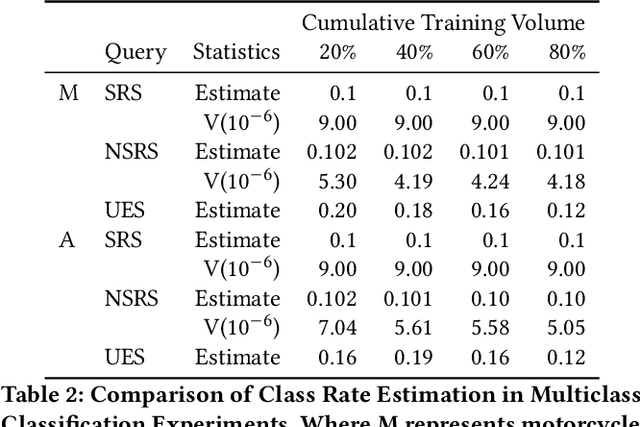

Abstract: With the rapid development of earth observation technology, we have entered an era of massively available satellite remote-sensing data. However, much of this data is unlabeled, or labeling it is too costly, which hinders the potential of AI techniques for mining satellite data. This is especially true in emergency response scenarios that use satellite data to evaluate the degree of disaster damage. Disaster damage assessment has encountered bottlenecks due to an excessive focus on the damage to a certain building in a specific geographical space or to a certain area on a larger scale. In fact, in the early stage of disaster emergency response, government departments are more concerned with the overall damage rate of the disaster area than with single-building damage, because this rate helps the government decide the level of emergency response. We present an innovative algorithm that constructs Neyman stratified random sampling trees for binary classification and extends this approach to multiclass problems. Through extensive experimentation on various datasets and model structures, our findings demonstrate that our method surpasses both passive and conventional active learning techniques in terms of class rate estimation and model enhancement with only 30%-60% of the annotation cost of simple sampling. It effectively addresses the 'sampling bias' challenge in traditional active learning strategies and mitigates the 'cold start' dilemma. The efficacy of our approach is further substantiated through application to disaster evaluation tasks using xView2 satellite imagery, showcasing its practical utility in real-world contexts.
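As background for the sampling scheme named above, the following is a minimal sketch of textbook Neyman allocation, which assigns a labeling budget to each stratum in proportion to its size times its standard deviation. It does not reproduce the paper's sampling trees. The function name `neyman_allocation` and the example numbers are assumptions for illustration, and the per-stratum standard deviation for a binary label is taken as sqrt(p(1 - p)) for an assumed positive rate p.

```python
from math import sqrt


def neyman_allocation(stratum_sizes, stratum_stds, total_samples):
    """Split total_samples across strata proportionally to N_h * S_h.

    Rounding uses the largest-remainder rule so the allocation sums
    exactly to total_samples. Capping at the stratum size is not handled.
    """
    weights = [n * s for n, s in zip(stratum_sizes, stratum_stds)]
    total_weight = sum(weights)
    if total_weight == 0:
        # All strata have zero variance: fall back to proportional allocation.
        weights = list(stratum_sizes)
        total_weight = sum(weights)
    raw = [total_samples * w / total_weight for w in weights]
    allocation = [int(r) for r in raw]
    leftover = total_samples - sum(allocation)
    # Hand the leftover samples to the strata with the largest fractional parts.
    by_fraction = sorted(range(len(raw)), key=lambda i: raw[i] - allocation[i], reverse=True)
    for i in by_fraction[:leftover]:
        allocation[i] += 1
    return allocation


# Hypothetical example: three equally sized strata with assumed damage rates.
sizes = [1000, 1000, 1000]
rates = [0.05, 0.5, 0.95]
stds = [sqrt(p * (1 - p)) for p in rates]
allocation = neyman_allocation(sizes, stds, total_samples=100)
```

In this example the most uncertain stratum (rate near 0.5) receives the largest share of the budget, which is the intuition behind spending annotation effort where the variance is highest.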

Flood Data Analysis on SpaceNet 8 Using Apache Sedona

Apr 28, 2024

Abstract: With the escalating frequency of floods posing persistent threats to human life and property, satellite remote sensing has emerged as an indispensable tool for monitoring flood hazards. SpaceNet8 offers a unique opportunity to leverage cutting-edge artificial intelligence technologies to assess these hazards. A significant contribution of this research is its application of Apache Sedona, an advanced platform specifically designed for the efficient and distributed processing of large-scale geospatial data. This platform aims to enhance the efficiency of error analysis, a critical aspect of improving flood damage detection accuracy. Based on Apache Sedona, we introduce a novel approach that addresses the challenges associated with inaccuracies in flood damage detection. This approach involves the retrieval of cases from historical flood events, the adaptation of these cases to current scenarios, and the revision of the model based on clustering algorithms to refine its performance. After replicating both the SpaceNet8 baseline and its top-performing models, we conduct a comprehensive error analysis, which reveals several main sources of inaccuracies. To address these issues, we employ visual interpretation of the data and histogram equalization techniques. After these enhancements, precision improves by 5%, F1 score by 2.6%, and IoU by 4.5%. This work highlights the importance of advanced geospatial data processing tools, such as Apache Sedona. By improving the accuracy and efficiency of flood detection, this research contributes to safeguarding public safety and strengthening infrastructure resilience in flood-prone areas, making it a valuable addition to the field of remote sensing and disaster management.
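For readers unfamiliar with histogram equalization, here is a minimal sketch of the standard global variant on an 8-bit grayscale image stored as a list of rows. The abstract does not say which variant the authors used, so this is an assumption rather than their pipeline, and the function name `equalize_histogram` is hypothetical.

```python
def equalize_histogram(image, levels=256):
    """Global histogram equalization for a 2D list of integer pixel values."""
    # Count how often each intensity level occurs.
    hist = [0] * levels
    for row in image:
        for value in row:
            hist[value] += 1

    # Build the cumulative distribution of intensities.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = cdf[-1]
    if total == 0:
        return []  # empty image
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Constant image: nothing to stretch, return a copy.
        return [row[:] for row in image]

    # Map each level so the occupied range stretches to [0, levels - 1].
    lookup = [
        max(0, round((c - cdf_min) / (total - cdf_min) * (levels - 1)))
        for c in cdf
    ]
    return [[lookup[value] for value in row] for row in image]


# A low-contrast patch whose values sit in a narrow band.
patch = [[50, 50], [51, 52]]
equalized = equalize_histogram(patch)
```

After equalization the darkest pixels in the patch map to 0 and the brightest to 255, which is the kind of contrast stretch that can make low-contrast satellite tiles easier for a model or a human interpreter to read.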
