Eric Jenn

Certified ML Object Detection for Surveillance Missions

Jun 18, 2024

Abstract: In this paper, we present a development process for a drone detection system involving a machine learning object detection component. The purpose is to reach acceptable performance objectives and to provide sufficient evidence, as required by the (soon to be published) recommendations of the ED 324 / ARP 6983 standard, to gain confidence in the dependability of the designed system.

White Paper Machine Learning in Certified Systems

Mar 18, 2021

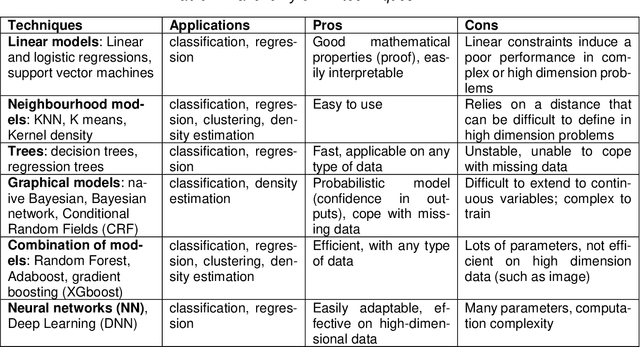

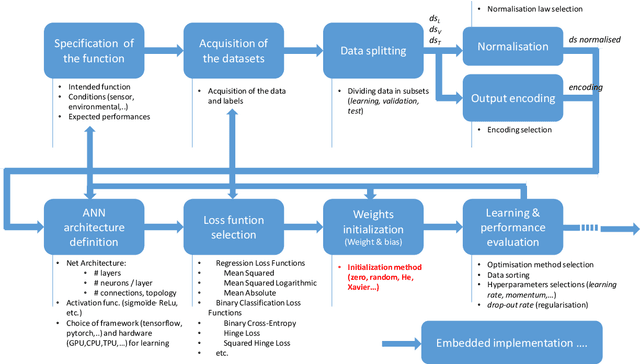

Abstract: Machine Learning (ML) seems to be one of the most promising solutions to automate, partially or completely, some of the complex tasks currently performed by humans, such as driving vehicles, recognizing voice, etc. It is also an opportunity to implement and embed new capabilities out of the reach of classical implementation techniques. However, ML techniques introduce new potential risks. Therefore, they have only been applied in systems where their benefits are considered worth the increase in risk. In practice, ML techniques raise multiple challenges that could prevent their use in systems subject to certification constraints. But what are the actual challenges? Can they be overcome by selecting appropriate ML techniques, or by adopting new engineering or certification practices? These are some of the questions addressed by the ML Certification 3 Workgroup (WG) set up by the Institut de Recherche Technologique Saint Exupéry de Toulouse (IRT), as part of the DEEL Project.

Dataset Definition Standard (DDS)

Jan 07, 2021

Abstract: This document gives a set of recommendations to build and manipulate the datasets used to develop and/or validate machine learning models such as deep neural networks. This document is one of the 3 documents defined in [1] to ensure the quality of datasets. This is a work in progress, as good practices evolve along with our understanding of machine learning. The document is divided into three main parts. Section 2 addresses the data collection activity. Section 3 gives recommendations about the annotation process. Finally, Section 4 gives recommendations concerning the breakdown between train, validation, and test datasets. In each part, we first define the desired properties at stake, then explain the objectives targeted to meet those properties, and finally state the recommendations to reach these objectives.

Ensuring Dataset Quality for Machine Learning Certification

Nov 03, 2020

Abstract: In this paper, we address the problem of dataset quality in the context of Machine Learning (ML)-based critical systems. We briefly analyse the applicability of some existing standards dealing with data and show that the specificities of the ML context are neither properly captured nor taken into account. As a first answer to this concerning situation, we propose a dataset specification and verification process, and apply it to a signal recognition system from the railway domain. In addition, we also give a list of recommendations for the collection and management of datasets. This work is one step towards the dataset engineering process that will be required for ML to be used in safety-critical systems.
