Christophe Gabreau

IRIT-ARGOS

How to design a dataset compliant with an ML-based system ODD?

Jun 20, 2024

Abstract: This paper focuses on a Vision-based Landing task and presents the design and the validation of a dataset that would comply with the Operational Design Domain (ODD) of a Machine-Learning (ML) system. Relying on emerging certification standards, we describe the process for establishing ODDs at both the system and image levels. In the process, we present the translation of high-level system constraints into actionable image-level properties, allowing for the definition of verifiable Data Quality Requirements (DQRs). To illustrate this approach, we use the Landing Approach Runway Detection (LARD) dataset which combines synthetic imagery and real footage, and we focus on the steps required to verify the DQRs. The replicable framework presented in this paper addresses the challenges of designing a dataset compliant with the stringent needs of ML-based systems certification in safety-critical applications.

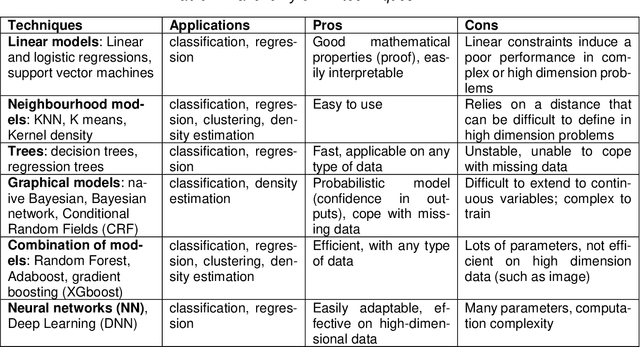

Certification of embedded systems based on Machine Learning: A survey

Jun 14, 2021

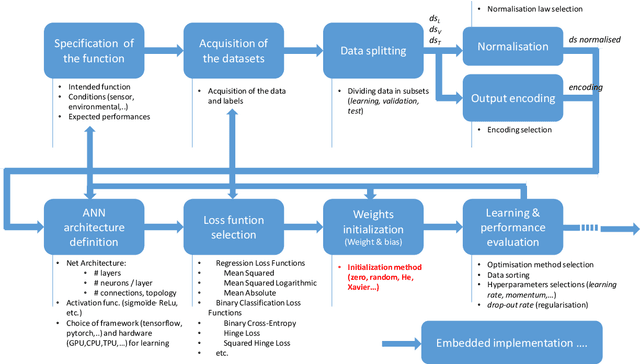

Abstract: Advances in machine learning (ML) open the way to innovative functions in the avionic domain, such as navigation/surveillance assistance (e.g. vision-based navigation, obstacle sensing, virtual sensing), speech-to-text applications, autonomous flight, predictive maintenance or cockpit assistance. However, current certification standards and practices, which were defined and refined over decades with classical programming in mind, do not support this new development paradigm. This article provides an overview of the main challenges raised by the use of ML in the demonstration of compliance with regulation requirements, and a survey of literature relevant to these challenges, with particular focus on the issues of robustness and explainability of ML results.

White Paper Machine Learning in Certified Systems

Mar 18, 2021

Abstract: Machine Learning (ML) seems to be one of the most promising solutions to automate, partially or completely, some of the complex tasks currently performed by humans, such as driving vehicles, recognizing voice, etc. It is also an opportunity to implement and embed new capabilities out of the reach of classical implementation techniques. However, ML techniques introduce new potential risks. Therefore, they have only been applied in systems where their benefits are considered worth the increase of risk. In practice, ML techniques raise multiple challenges that could prevent their use in systems subject to certification constraints. But what are the actual challenges? Can they be overcome by selecting appropriate ML techniques, or by adopting new engineering or certification practices? These are some of the questions addressed by the ML Certification 3 Workgroup (WG) set up by the Institut de Recherche Technologique Saint Exupéry de Toulouse (IRT), as part of the DEEL Project.
