Emmanuel Jean

Physically Interpretable Probabilistic Domain Characterization

Nov 22, 2024

Abstract: Characterizing domains is essential for models analyzing dynamic environments, as it allows them to adapt to evolving conditions or to hand the task over to backup systems when facing conditions outside their operational domain. Existing solutions typically characterize a domain by solving a regression or classification problem, which limits their applicability as they only provide a limited summarized description of the domain. In this paper, we present a novel approach to domain characterization by characterizing domains as probability distributions. Particularly, we develop a method to predict the likelihood of different weather conditions from images captured by vehicle-mounted cameras by estimating distributions of physical parameters using normalizing flows. To validate our proposed approach, we conduct experiments within the context of autonomous vehicles, focusing on predicting the distribution of weather parameters to characterize the operational domain. This domain is characterized by physical parameters (absolute characterization) and arbitrarily predefined domains (relative characterization). Finally, we evaluate whether a system can safely operate in a target domain by comparing it to multiple source domains where safety has already been established. This approach holds significant potential, as accurate weather prediction and effective domain adaptation are crucial for autonomous systems to adjust to dynamic environmental conditions.

User Preferences for Large Language Model versus Template-Based Explanations of Movie Recommendations: A Pilot Study

Sep 10, 2024

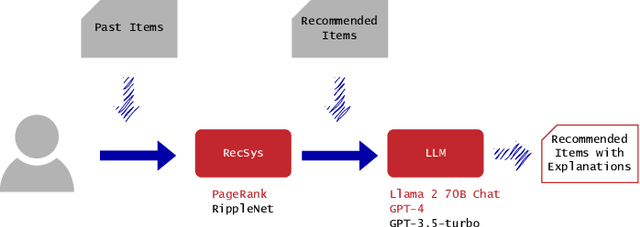

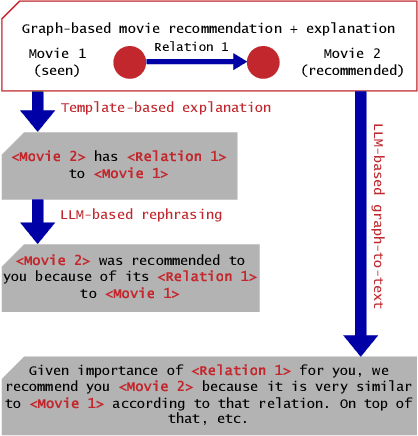

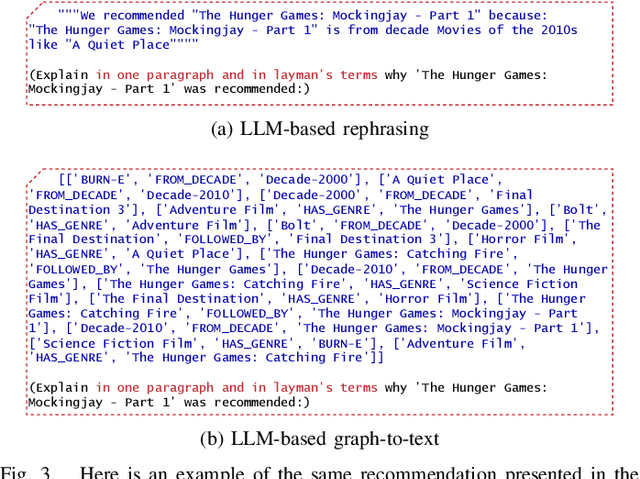

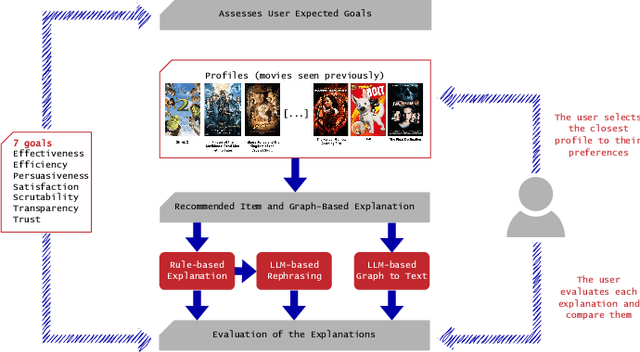

Abstract: Recommender systems have become integral to our digital experiences, from online shopping to streaming platforms. Still, the rationale behind their suggestions often remains opaque to users. While some systems employ a graph-based approach, offering inherent explainability through paths associating recommended items and seed items, non-experts could not easily understand these explanations. A popular alternative is to convert graph-based explanations into textual ones using a template and an algorithm, which we denote here as "template-based" explanations. Yet, these can sometimes come across as impersonal or uninspiring. A novel method would be to employ large language models (LLMs) for this purpose, which we denote as "LLM-based". To assess the effectiveness of LLMs in generating more resonant explanations, we conducted a pilot study with 25 participants. They were presented with three explanations: (1) traditional template-based, (2) LLM-based rephrasing of the template output, and (3) purely LLM-based explanations derived from the graph-based explanations. Although subject to high variance, preliminary findings suggest that LLM-based explanations may provide a richer and more engaging user experience, further aligning with user expectations. This study sheds light on the potential limitations of current explanation methods and offers promising directions for leveraging large language models to improve user satisfaction and trust in recommender systems.
