Emmanuel Baltsavias

Learning a Sensor-invariant Embedding of Satellite Data: A Case Study for Lake Ice Monitoring

Jul 19, 2021

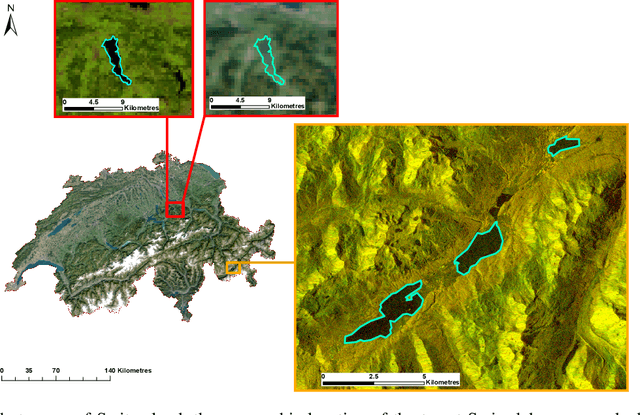

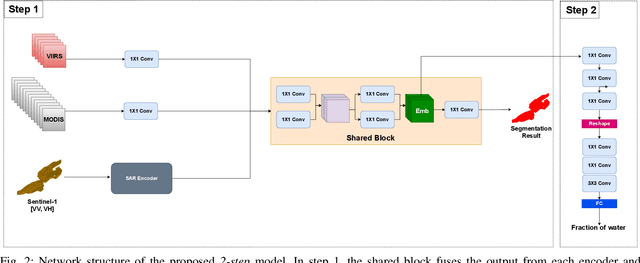

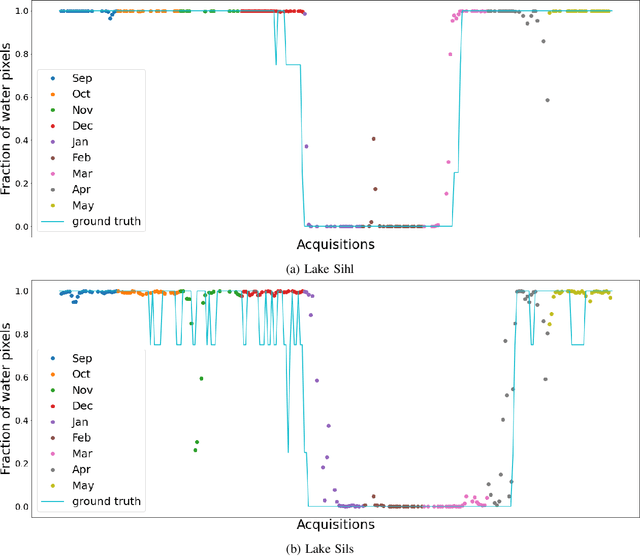

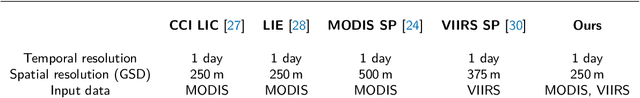

Abstract: Fusing satellite imagery acquired with different sensors has been a long-standing challenge of Earth observation, particularly across different modalities such as optical and Synthetic Aperture Radar (SAR) images. Here, we explore the joint analysis of imagery from different sensors in the light of representation learning: we propose to learn a joint, sensor-invariant embedding (feature representation) within a deep neural network. Our application problem is the monitoring of lake ice on Alpine lakes. To reach the temporal resolution requirement of the Swiss Global Climate Observing System (GCOS) office, we combine three image sources: Sentinel-1 SAR (S1-SAR), Terra MODIS and Suomi-NPP VIIRS. The large gaps between the optical and SAR domains and between the sensor resolutions make this a challenging instance of the sensor fusion problem. Our approach can be classified as a feature-level fusion that is learnt in a data-driven manner. The proposed network architecture has separate encoding branches for each image sensor, which feed into a single latent embedding, i.e., a common feature representation shared by all inputs, such that subsequent processing steps deliver comparable output irrespective of which sort of input image was used. By fusing satellite data, we map lake ice at a temporal resolution of <1.5 days. The network produces spatially explicit lake ice maps with pixel-wise accuracies >91.3% (respectively, mIoU scores >60.7%) and generalises well across different lakes and winters. Moreover, it sets a new state-of-the-art for determining the important ice-on and ice-off dates for the target lakes, in many cases meeting the GCOS requirement.

Recent Ice Trends in Swiss Mountain Lakes: 20-year Analysis of MODIS Imagery

Mar 23, 2021

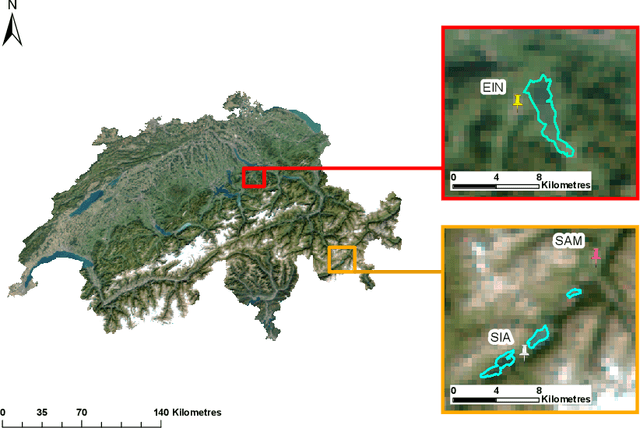

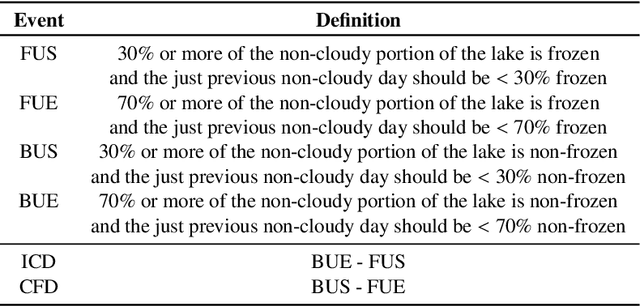

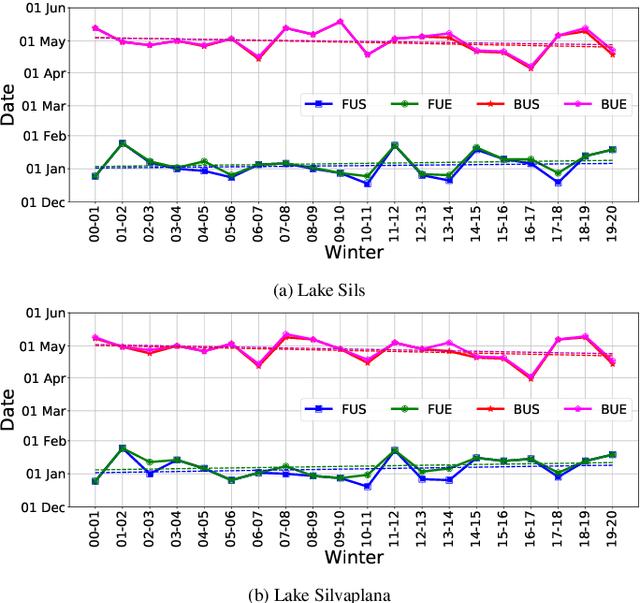

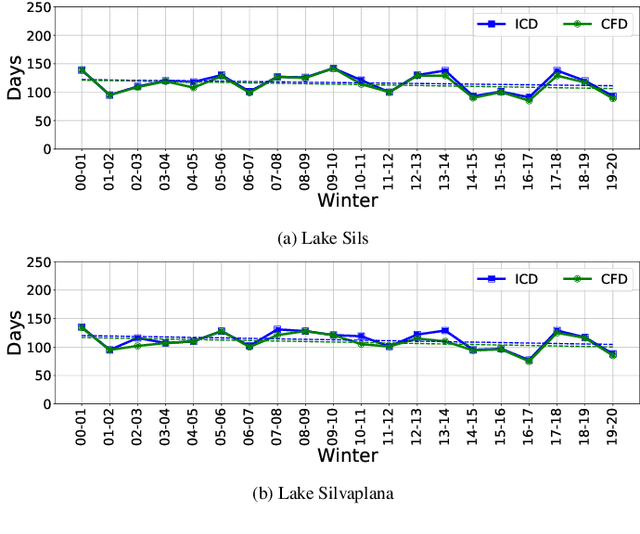

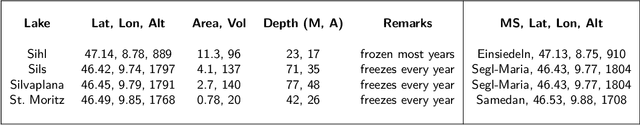

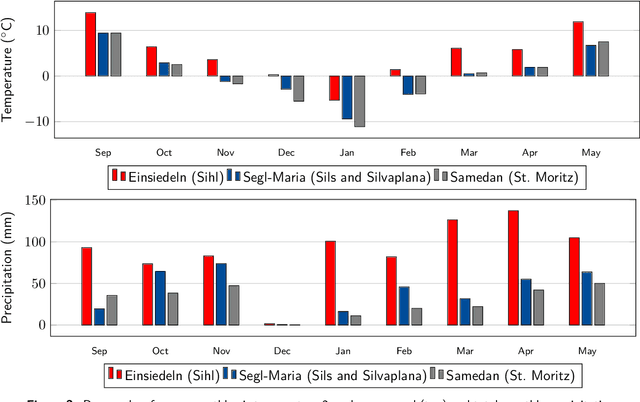

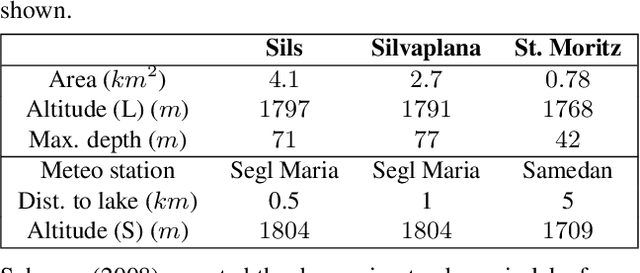

Abstract: Depleting lake ice can serve as an indicator for climate change, just like sea level rise or glacial retreat. Several Lake Ice Phenological (LIP) events serve as sentinels to understand the regional and global climate change. Hence, monitoring the long-term lake freezing and thawing patterns can prove very useful. In this paper, we focus on observing the LIP events such as freeze-up, break-up and temporal freeze extent in the Oberengadin region of Switzerland, where there are several small- and medium-sized mountain lakes, across two decades (2000-2020) from optical satellite images. We analyse time-series of MODIS imagery (and additionally cross-check with VIIRS data when available) by estimating spatially resolved maps of lake ice for these Alpine lakes with supervised machine learning. To train the classifier we rely on reference data annotated manually based on publicly available webcam images. From the ice maps we derive long-term LIP trends. Since the webcam data is only available for two winters, we also validate our results against the operational MODIS and VIIRS snow products. We find a change in Complete Freeze Duration (CFD) of -0.76 and -0.89 days per annum (d/a) for lakes Sils and Silvaplana, respectively. Furthermore, we correlate the lake freezing and thawing trends with climate data such as temperature, sunshine, precipitation and wind measured at nearby meteorological stations.
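A trend in days per annum, like the CFD figures quoted above, can be read as the slope of a line fitted to one value per winter. The sketch below assumes an ordinary least-squares fit, which the abstract does not specify, and uses a synthetic series built to have a known slope; the function name `linear_trend` and all values are hypothetical and are not the paper's data.

```python
def linear_trend(years, values):
    """Ordinary least-squares line fit; returns (slope, intercept).

    The slope is in units of `values` per year, e.g. days per annum (d/a).
    """
    if len(years) != len(values) or len(years) < 2:
        raise ValueError("need at least two (year, value) pairs")
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Synthetic, illustrative series only: a freeze duration that shrinks by
# exactly half a day per winter. These are NOT measurements from the paper.
years = list(range(2000, 2020))
cfd_days = [100.0 - 0.5 * (y - 2000) for y in years]

slope, intercept = linear_trend(years, cfd_days)
print(f"trend: {slope:.2f} d/a")
```

A negative slope means the complete freeze duration shortens over the years. Real series are noisy, so a robust estimator may be preferable to plain least squares.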

Ice Monitoring in Swiss Lakes from Optical Satellites and Webcams using Machine Learning

Oct 27, 2020

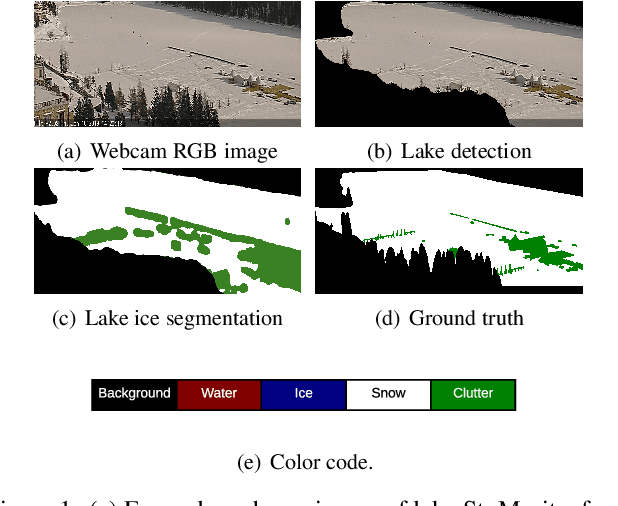

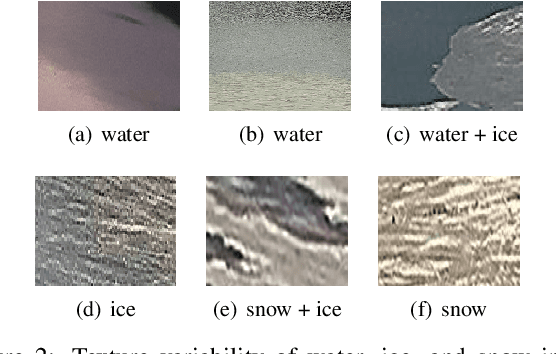

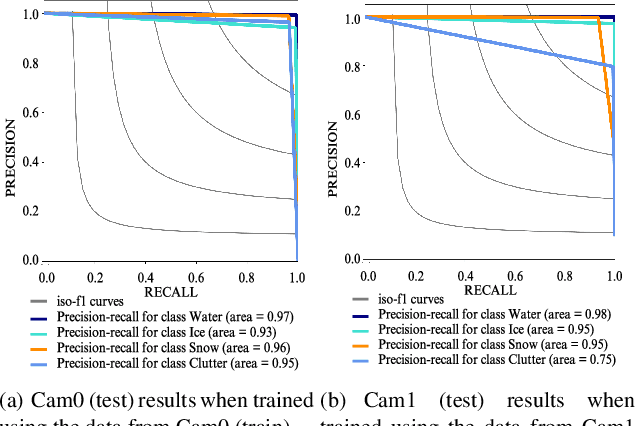

Abstract: Continuous observation of climate indicators, such as trends in lake freezing, is important to understand the dynamics of the local and global climate system. Consequently, lake ice has been included among the Essential Climate Variables (ECVs) of the Global Climate Observing System (GCOS), and there is a need to set up operational monitoring capabilities. Multi-temporal satellite images and publicly available webcam streams are among the viable data sources to monitor lake ice. In this work we investigate machine learning-based image analysis as a tool to determine the spatio-temporal extent of ice on Swiss Alpine lakes as well as the ice-on and ice-off dates, from both multispectral optical satellite images (VIIRS and MODIS) and RGB webcam images. We model lake ice monitoring as a pixel-wise semantic segmentation problem, i.e., each pixel on the lake surface is classified to obtain a spatially explicit map of ice cover. We show experimentally that the proposed system produces consistently good results when tested on data from multiple winters and lakes. Our satellite-based method obtains mean Intersection-over-Union (mIoU) scores >93% for both sensors. It also generalises well across lakes and winters, with mIoU scores >78% and >80%, respectively. On average, our webcam approach achieves mIoU values of approximately 87%, and generalisation scores of approximately 71% and 69% across different cameras and winters, respectively. Additionally, we put forward a new benchmark dataset of webcam images (Photi-LakeIce) which includes data from two winters and three cameras.
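For readers unfamiliar with the metric, the following is a minimal sketch of how a mean Intersection-over-Union score can be computed for a pixel-wise classification. It assumes the predicted and reference label maps are flattened to lists of integer class indices; the function name `mean_iou` and the toy labels are hypothetical, not taken from the paper.

```python
def mean_iou(pred, truth, num_classes):
    """Mean Intersection-over-Union, averaged over classes that occur
    in either the prediction or the reference labels."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    ious = []
    for c in range(num_classes):
        # Pixels where both maps agree on class c.
        inter = sum(1 for p, t in zip(pred, truth) if p == c and t == c)
        # Pixels where at least one map says class c.
        union = sum(1 for p, t in zip(pred, truth) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    if not ious:
        raise ValueError("no class is present in pred or truth")
    return sum(ious) / len(ious)


# Toy example with two classes (0 = non-frozen, 1 = frozen); illustrative only.
truth = [0, 0, 1, 1, 1, 0]
pred = [0, 1, 1, 1, 0, 0]
score = mean_iou(pred, truth, num_classes=2)
print(score)  # 0.5
```

In the toy example each class has two correctly labelled pixels out of a union of four, so both per-class IoU values are 0.5 and so is their mean.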

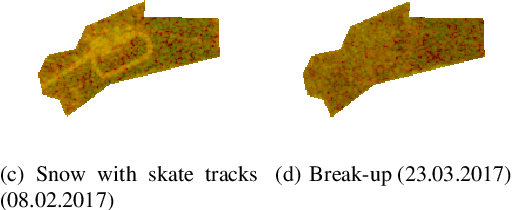

Integrated monitoring of ice in selected Swiss lakes. Final project report

Aug 02, 2020

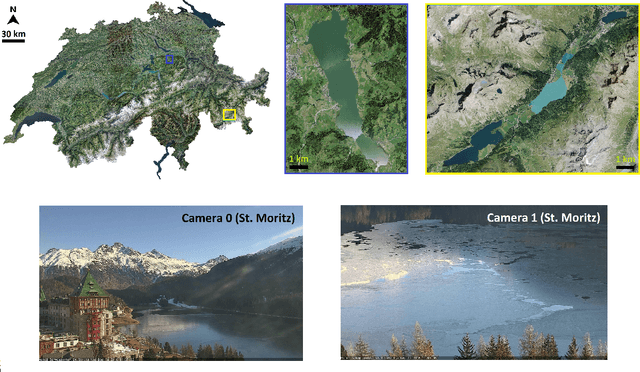

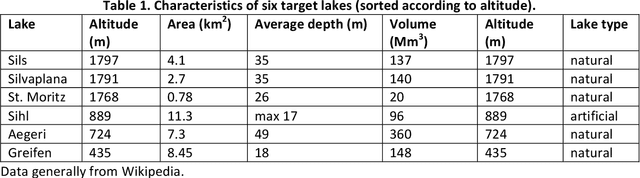

Abstract: Various lake observables, including lake ice, are related to climate and climate change and provide a good opportunity for long-term monitoring. Lakes (and, as part of them, lake ice) are therefore considered an Essential Climate Variable (ECV) of the Global Climate Observing System (GCOS). Following the need for an integrated multi-temporal monitoring of lake ice in Switzerland, MeteoSwiss in the framework of GCOS Switzerland supported this 2-year project to explore not only the use of satellite images but also the possibilities of Webcams and in-situ measurements. The aim of this project is to monitor some target lakes and detect the extent of ice and especially the ice-on/off dates, with focus on the integration of various input data and processing methods. The target lakes are: St. Moritz, Silvaplana, Sils, Sihl, Greifen and Aegeri, whereby only the first four were mainly frozen during the observation period and thus processed. The observation period was mainly the winter 2016-17. During the project, various approaches were developed, implemented, tested and compared. Firstly, low spatial resolution (250 - 1000 m) but high temporal resolution (1 day) satellite images from the optical sensors MODIS and VIIRS were used. Secondly, and as a pilot project, the use of existing public Webcams was investigated for (a) validation of results from satellite data, and (b) independent estimation of lake ice, especially for small lakes like St. Moritz, that could not possibly be monitored in the satellite images. Thirdly, in-situ measurements were made in order to characterize the development of the temperature profiles and partly pressure before freezing and under the ice-cover until melting. This report presents the results of the project work.

Lake Ice Monitoring with Webcams and Crowd-Sourced Images

Feb 18, 2020

Abstract: Lake ice is a strong climate indicator and has been recognised as part of the Essential Climate Variables (ECV) by the Global Climate Observing System (GCOS). The dynamics of freezing and thawing, and possible shifts of freezing patterns over time, can help in understanding the local and global climate systems. One way to acquire the spatio-temporal information about lake ice formation, independent of clouds, is to analyse webcam images. This paper intends to move towards a universal model for monitoring lake ice with freely available webcam data. We demonstrate good performance, including the ability to generalise across different winters and different lakes, with a state-of-the-art Convolutional Neural Network (CNN) model for semantic image segmentation, Deeplab v3+. Moreover, we design a variant of that model, termed Deep-U-Lab, which predicts sharper, more correct segmentation boundaries. We have tested the model's ability to generalise with data from multiple camera views and two different winters. On average, it achieves intersection-over-union (IoU) values of ~71% across different cameras and ~69% across different winters, greatly outperforming prior work. Going further, we show that the model achieves 60% IoU even on arbitrary images scraped from photo-sharing web sites. As part of the work, we introduce a new benchmark dataset of webcam images, Photi-LakeIce, from multiple cameras and two different winters, along with pixel-wise ground truth annotations.

Lake Ice Detection from Sentinel-1 SAR with Deep Learning

Feb 17, 2020

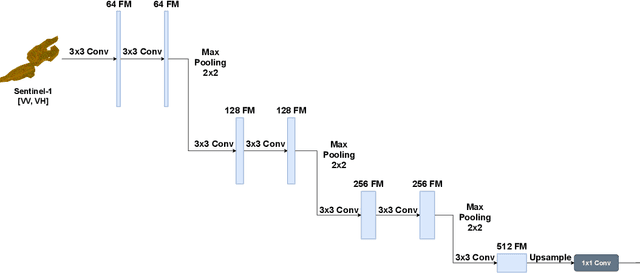

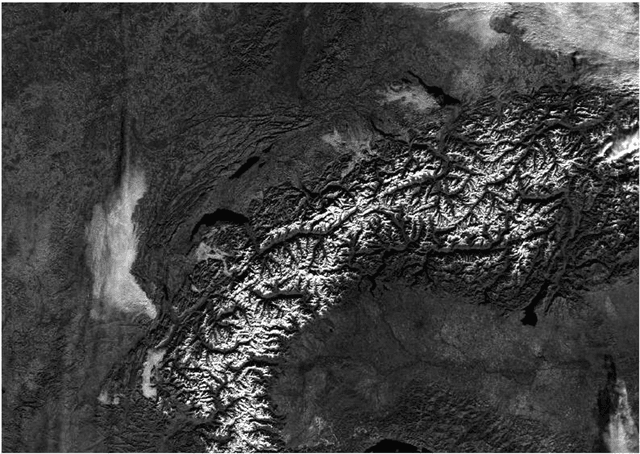

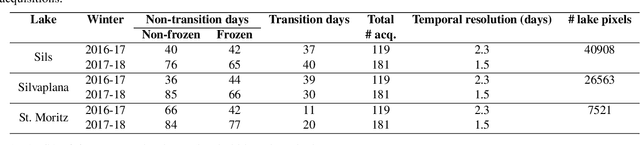

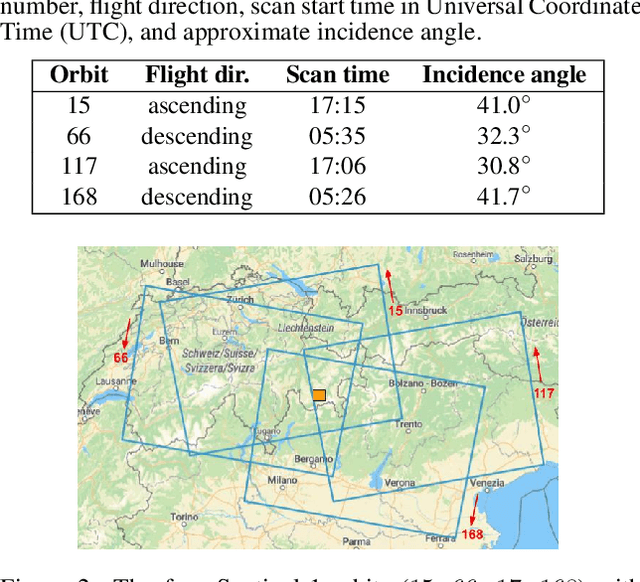

Abstract: Lake ice, as part of the Essential Climate Variable (ECV) lakes, is an important indicator to monitor climate change and global warming. The spatio-temporal extent of lake ice cover, along with the timings of key phenological events such as freeze-up and break-up, provides important cues about the local and global climate. We present a lake ice monitoring system based on the automatic analysis of Sentinel-1 Synthetic Aperture Radar (SAR) data with a deep neural network. In previous studies that used optical satellite imagery for lake ice monitoring, frequent cloud cover was a main limiting factor, which we overcome thanks to the ability of microwave sensors to penetrate clouds and observe the lakes regardless of the weather and illumination conditions. We cast ice detection as a two-class (frozen, non-frozen) semantic segmentation problem and solve it using a state-of-the-art deep convolutional neural network (CNN). We report results on two winters (2016-17 and 2017-18) and three alpine lakes in Switzerland, including cross-validation tests to assess the generalisation to unseen lakes and winters. The proposed model reaches mean Intersection-over-Union (mIoU) scores >90% on average, and >84% even for the most difficult lake.

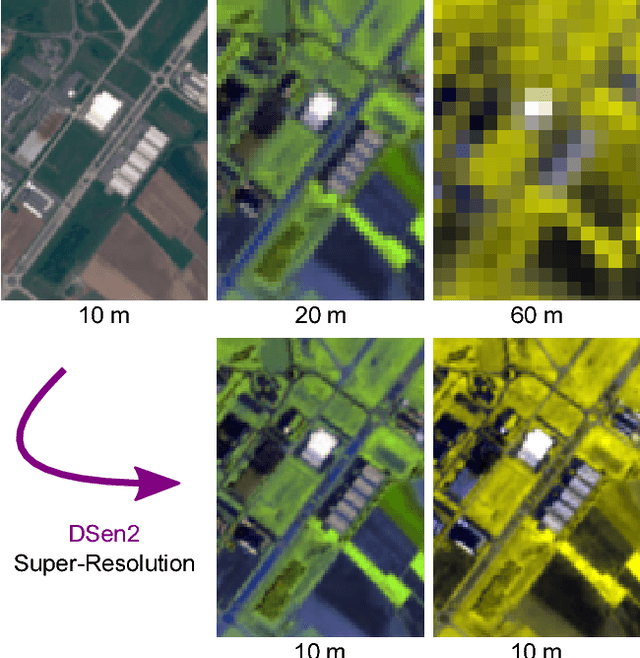

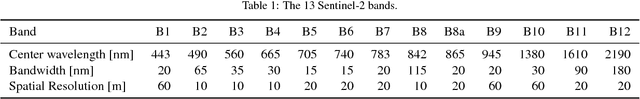

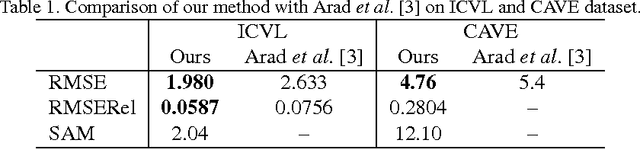

Super-resolution of Sentinel-2 images: Learning a globally applicable deep neural network

Oct 01, 2018

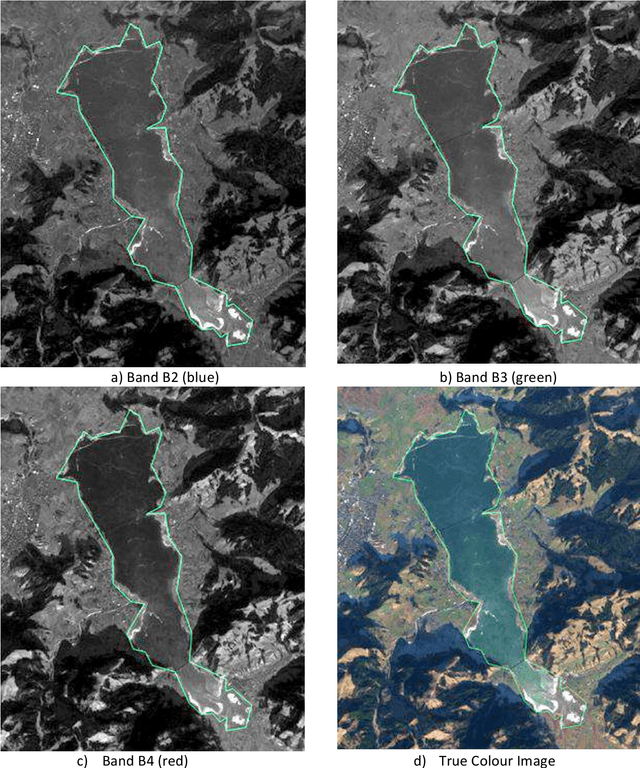

Abstract: The Sentinel-2 satellite mission delivers multi-spectral imagery with 13 spectral bands, acquired at three different spatial resolutions. The aim of this research is to super-resolve the lower-resolution (20 m and 60 m Ground Sampling Distance - GSD) bands to 10 m GSD, so as to obtain a complete data cube at the maximal sensor resolution. We employ a state-of-the-art convolutional neural network (CNN) to perform end-to-end upsampling, which is trained with data at lower resolution, i.e., from 40 m to 20 m and from 360 m to 60 m GSD, respectively. In this way, one has access to a virtually infinite amount of training data, by downsampling real Sentinel-2 images. We use data sampled globally over a wide range of geographical locations, to obtain a network that generalises across different climate zones and land-cover types, and can super-resolve arbitrary Sentinel-2 images without the need for retraining. In quantitative evaluations (at lower scale, where ground truth is available), our network, which we call DSen2, outperforms the best competing approach by almost 50% in RMSE, while better preserving the spectral characteristics. It also delivers visually convincing results at the full 10 m GSD. The code is available at https://github.com/lanha/DSen2
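The idea of training at a lower scale can be illustrated with a small sketch: a real band is downsampled to produce the network input, and the original band serves as the target. The block averaging below is an assumption made for illustration (the abstract does not state the downsampling filter), and the names `downsample` and `make_training_pair` are hypothetical rather than part of the DSen2 code.

```python
def downsample(band, factor):
    """Reduce resolution by averaging non-overlapping factor x factor blocks.

    `band` is a 2D list of pixel values whose height and width are
    multiples of `factor`.
    """
    rows, cols = len(band), len(band[0])
    if rows % factor or cols % factor:
        raise ValueError("band size must be a multiple of factor")
    out = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            block = [band[r + i][c + j]
                     for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


def make_training_pair(band, factor):
    """Return (low-resolution input, original band as target)."""
    return downsample(band, factor), band


# Toy 4x4 "20 m" band; factor 2 simulates a 40 m input with a 20 m target.
band_20m = [[1, 3, 5, 7],
            [1, 3, 5, 7],
            [2, 2, 6, 6],
            [2, 2, 6, 6]]
low_res, target = make_training_pair(band_20m, factor=2)
print(low_res)  # [[2.0, 6.0], [2.0, 6.0]]
```

Because the target is always a real image, any archive scene yields a supervised example without manual annotation, which is what makes the training data effectively unlimited.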

* 19 pages, 11 figures

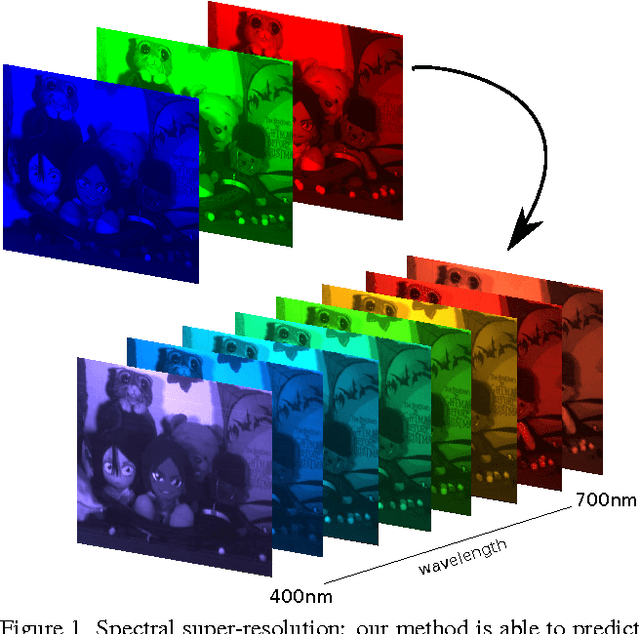

Learned Spectral Super-Resolution

Mar 28, 2017

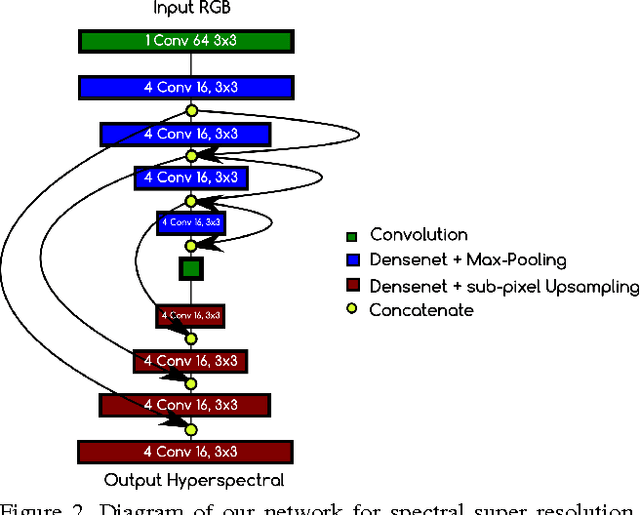

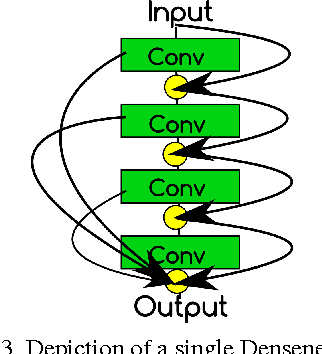

Abstract: We describe a novel method for blind, single-image spectral super-resolution. While conventional super-resolution aims to increase the spatial resolution of an input image, our goal is to spectrally enhance the input, i.e., generate an image with the same spatial resolution, but a greatly increased number of narrow (hyper-spectral) wave-length bands. Just like the spatial statistics of natural images have rich structure, which one can exploit as a prior to predict high-frequency content from a low resolution image, the same is also true in the spectral domain: the materials and lighting conditions of the observed world induce structure in the spectrum of wavelengths observed at a given pixel. Surprisingly, very little work exists that attempts to use this diagnosis and achieve blind spectral super-resolution from single images. We start from the conjecture that, just like in the spatial domain, we can learn the statistics of natural image spectra, and with their help generate finely resolved hyper-spectral images from RGB input. Technically, we follow the current best practice and implement a convolutional neural network (CNN), which is trained to carry out the end-to-end mapping from an entire RGB image to the corresponding hyperspectral image of equal size. We demonstrate spectral super-resolution both for conventional RGB images and for multi-spectral satellite data, outperforming the state-of-the-art.
