Elma Kerz

MANTIS at TSAR-2022 Shared Task: Improved Unsupervised Lexical Simplification with Pretrained Encoders

Dec 19, 2022Abstract:In this paper we present our contribution to the TSAR-2022 Shared Task on Lexical Simplification of the EMNLP 2022 Workshop on Text Simplification, Accessibility, and Readability. Our approach builds on and extends the unsupervised lexical simplification system with pretrained encoders (LSBert) system in the following ways: For the subtask of simplification candidate selection, it utilizes a RoBERTa transformer language model and expands the size of the generated candidate list. For subsequent substitution ranking, it introduces a new feature weighting scheme and adopts a candidate filtering method based on textual entailment to maximize semantic similarity between the target word and its simplification. Our best-performing system improves LSBert by 5.9% accuracy and achieves second place out of 33 ranked solutions.

(Psycho-)Linguistic Features Meet Transformer Models for Improved Explainable and Controllable Text Simplification

Dec 19, 2022Abstract:State-of-the-art text simplification (TS) systems adopt end-to-end neural network models to directly generate the simplified version of the input text, and usually function as a blackbox. Moreover, TS is usually treated as an all-purpose generic task under the assumption of homogeneity, where the same simplification is suitable for all. In recent years, however, there has been increasing recognition of the need to adapt the simplification techniques to the specific needs of different target groups. In this work, we aim to advance current research on explainable and controllable TS in two ways: First, building on recently proposed work to increase the transparency of TS systems, we use a large set of (psycho-)linguistic features in combination with pre-trained language models to improve explainable complexity prediction. Second, based on the results of this preliminary task, we extend a state-of-the-art Seq2Seq TS model, ACCESS, to enable explicit control of ten attributes. The results of experiments show (1) that our approach improves the performance of state-of-the-art models for predicting explainable complexity and (2) that explicitly conditioning the Seq2Seq model on ten attributes leads to a significant improvement in performance in both within-domain and out-of-domain settings.

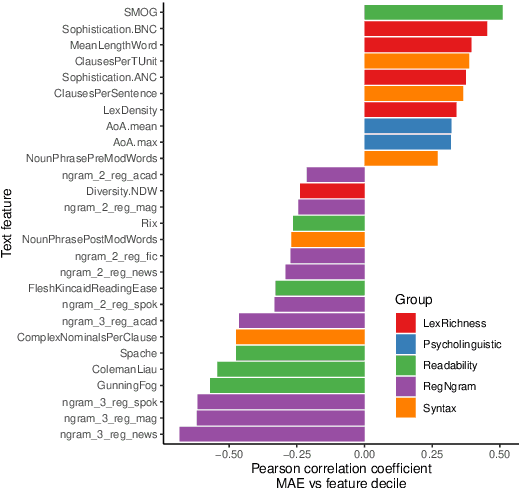

Exploring Hybrid and Ensemble Models for Multiclass Prediction of Mental Health Status on Social Media

Dec 19, 2022Abstract:In recent years, there has been a surge of interest in research on automatic mental health detection (MHD) from social media data leveraging advances in natural language processing and machine learning techniques. While significant progress has been achieved in this interdisciplinary research area, the vast majority of work has treated MHD as a binary classification task. The multiclass classification setup is, however, essential if we are to uncover the subtle differences among the statistical patterns of language use associated with particular mental health conditions. Here, we report on experiments aimed at predicting six conditions (anxiety, attention deficit hyperactivity disorder, bipolar disorder, post-traumatic stress disorder, depression, and psychological stress) from Reddit social media posts. We explore and compare the performance of hybrid and ensemble models leveraging transformer-based architectures (BERT and RoBERTa) and BiLSTM neural networks trained on within-text distributions of a diverse set of linguistic features. This set encompasses measures of syntactic complexity, lexical sophistication and diversity, readability, and register-specific ngram frequencies, as well as sentiment and emotion lexicons. In addition, we conduct feature ablation experiments to investigate which types of features are most indicative of particular mental health conditions.

Improving the Generalizability of Text-Based Emotion Detection by Leveraging Transformers with Psycholinguistic Features

Dec 19, 2022

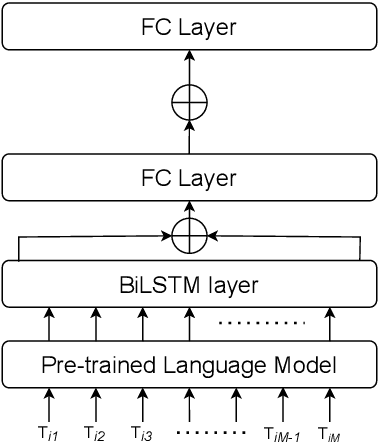

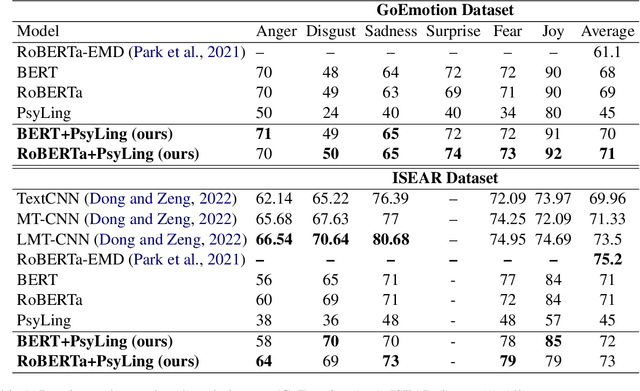

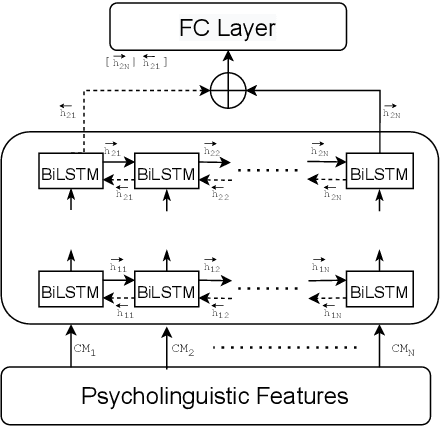

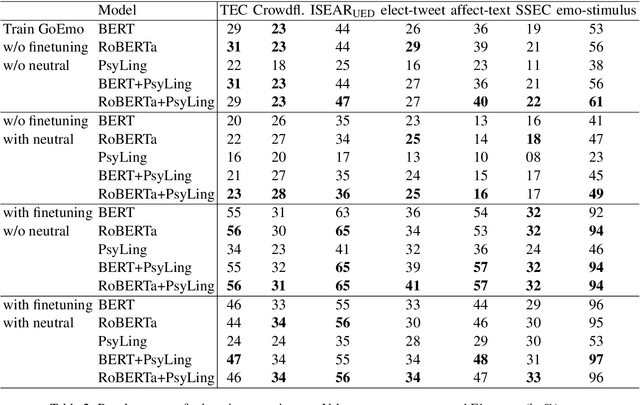

Abstract:In recent years, there has been increased interest in building predictive models that harness natural language processing and machine learning techniques to detect emotions from various text sources, including social media posts, micro-blogs or news articles. Yet, deployment of such models in real-world sentiment and emotion applications faces challenges, in particular poor out-of-domain generalizability. This is likely due to domain-specific differences (e.g., topics, communicative goals, and annotation schemes) that make transfer between different models of emotion recognition difficult. In this work we propose approaches for text-based emotion detection that leverage transformer models (BERT and RoBERTa) in combination with Bidirectional Long Short-Term Memory (BiLSTM) networks trained on a comprehensive set of psycholinguistic features. First, we evaluate the performance of our models within-domain on two benchmark datasets: GoEmotion and ISEAR. Second, we conduct transfer learning experiments on six datasets from the Unified Emotion Dataset to evaluate their out-of-domain robustness. We find that the proposed hybrid models improve the ability to generalize to out-of-distribution data compared to a standard transformer-based approach. Moreover, we observe that these models perform competitively on in-domain data.

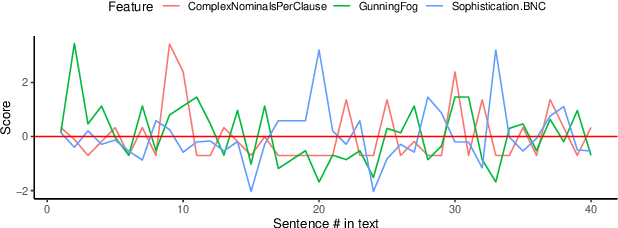

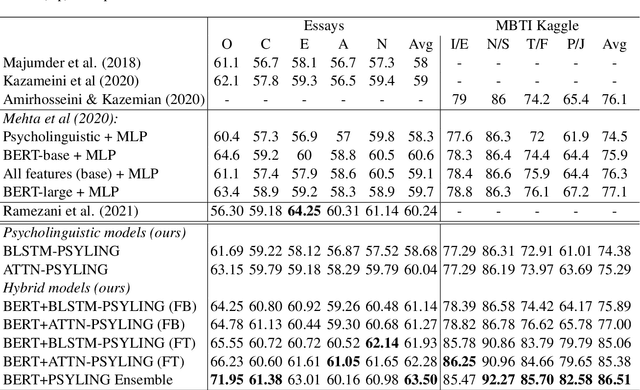

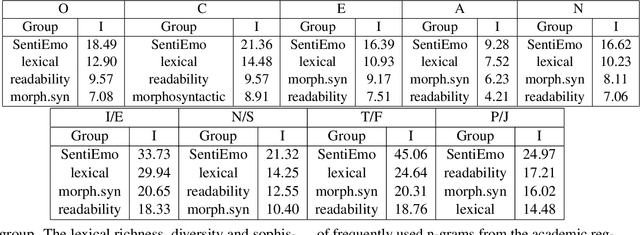

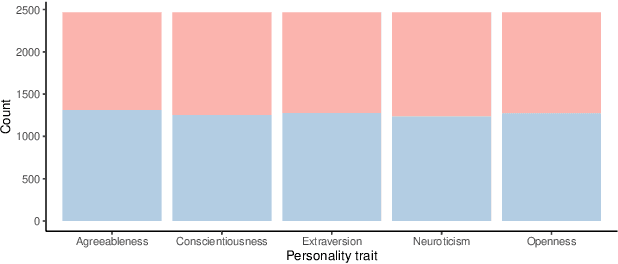

Pushing on Personality Detection from Verbal Behavior: A Transformer Meets Text Contours of Psycholinguistic Features

Apr 10, 2022

Abstract:Research at the intersection of personality psychology, computer science, and linguistics has recently focused increasingly on modeling and predicting personality from language use. We report two major improvements in predicting personality traits from text data: (1) to our knowledge, the most comprehensive set of theory-based psycholinguistic features and (2) hybrid models that integrate a pre-trained Transformer Language Model BERT and Bidirectional Long Short-Term Memory (BLSTM) networks trained on within-text distributions ('text contours') of psycholinguistic features. We experiment with BLSTM models (with and without Attention) and with two techniques for applying pre-trained language representations from the transformer model - 'feature-based' and 'fine-tuning'. We evaluate the performance of the models we built on two benchmark datasets that target the two dominant theoretical models of personality: the Big Five Essay dataset and the MBTI Kaggle dataset. Our results are encouraging as our models outperform existing work on the same datasets. More specifically, our models achieve improvement in classification accuracy by 2.9% on the Essay dataset and 8.28% on the Kaggle MBTI dataset. In addition, we perform ablation experiments to quantify the impact of different categories of psycholinguistic features in the respective personality prediction models.

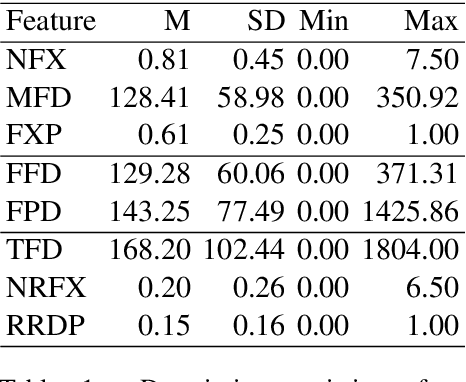

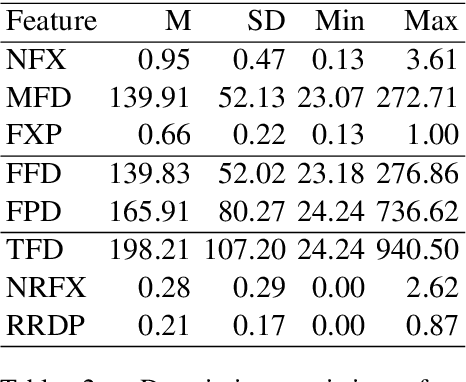

Measuring the Impact of (Psycho-)Linguistic and Readability Features and Their Spill Over Effects on the Prediction of Eye Movement Patterns

Mar 15, 2022

Abstract:There is a growing interest in the combined use of NLP and machine learning methods to predict gaze patterns during naturalistic reading. While promising results have been obtained through the use of transformer-based language models, little work has been undertaken to relate the performance of such models to general text characteristics. In this paper we report on experiments with two eye-tracking corpora of naturalistic reading and two language models (BERT and GPT-2). In all experiments, we test effects of a broad spectrum of features for predicting human reading behavior that fall into five categories (syntactic complexity, lexical richness, register-based multiword combinations, readability and psycholinguistic word properties). Our experiments show that both the features included and the architecture of the transformer-based language models play a role in predicting multiple eye-tracking measures during naturalistic reading. We also report the results of experiments aimed at determining the relative importance of features from different groups using SP-LIME.

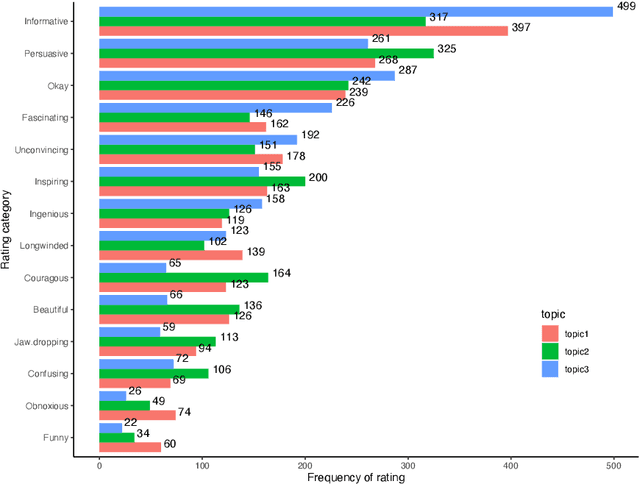

Prediction of Listener Perception of Argumentative Speech in a Crowdsourced Dataset Using (Psycho-)Linguistic and Fluency Features

Nov 30, 2021

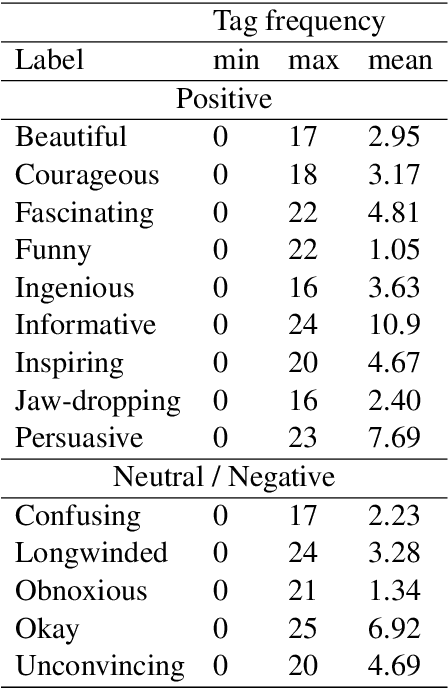

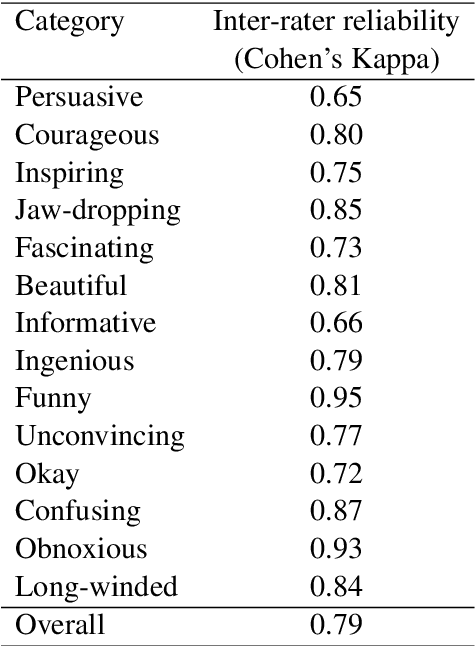

Abstract:One of the key communicative competencies is the ability to maintain fluency in monologic speech and the ability to produce sophisticated language to argue a position convincingly. In this paper we aim to predict TED talk-style affective ratings in a crowdsourced dataset of argumentative speech consisting of 7 hours of speech from 110 individuals. The speech samples were elicited through task prompts relating to three debating topics. The samples received a total of 2211 ratings from 737 human raters pertaining to 14 affective categories. We present an effective approach to the classification task of predicting these categories through fine-tuning a model pre-trained on a large dataset of TED talks public speeches. We use a combination of fluency features derived from a state-of-the-art automatic speech recognition system and a large set of human-interpretable linguistic features obtained from an automatic text analysis system. Classification accuracy was greater than 60% for all 14 rating categories, with a peak performance of 72% for the rating category 'informative'. In a secondary experiment, we determined the relative importance of features from different groups using SP-LIME.

Alzheimer's Disease Detection from Spontaneous Speech through Combining Linguistic Complexity and Fluency Features with Pretrained Language Models

Jun 16, 2021

Abstract:In this paper, we combined linguistic complexity and (dis)fluency features with pretrained language models for the task of Alzheimer's disease detection of the 2021 ADReSSo (Alzheimer's Dementia Recognition through Spontaneous Speech) challenge. An accuracy of 83.1% was achieved on the test set, which amounts to an improvement of 4.23% over the baseline model. Our best-performing model that integrated component models using a stacking ensemble technique performed equally well on cross-validation and test data, indicating that it is robust against overfitting.

The Impact of ASR on the Automatic Analysis of Linguistic Complexity and Sophistication in Spontaneous L2 Speech

Apr 17, 2021

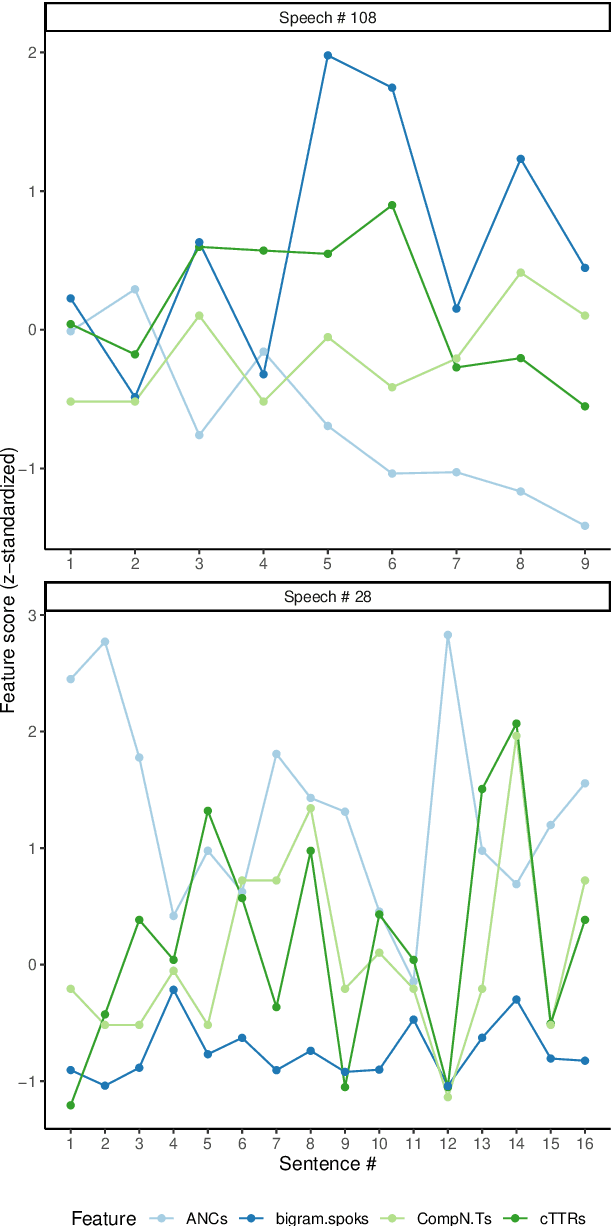

Abstract:In recent years, automated approaches to assessing linguistic complexity in second language (L2) writing have made significant progress in gauging learner performance, predicting human ratings of the quality of learner productions, and benchmarking L2 development. In contrast, there is comparatively little work in the area of speaking, particularly with respect to fully automated approaches to assessing L2 spontaneous speech. While the importance of a well-performing ASR system is widely recognized, little research has been conducted to investigate the impact of its performance on subsequent automatic text analysis. In this paper, we focus on this issue and examine the impact of using a state-of-the-art ASR system for subsequent automatic analysis of linguistic complexity in spontaneously produced L2 speech. A set of 34 selected measures were considered, falling into four categories: syntactic, lexical, n-gram frequency, and information-theoretic measures. The agreement between the scores for these measures obtained on the basis of ASR-generated vs. manual transcriptions was determined through correlation analysis. A more differential effect of ASR performance on specific types of complexity measures when controlling for task type effects is also presented.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge