Ekagra Ranjan

Command A: An Enterprise-Ready Large Language Model

Apr 01, 2025

Abstract: In this report we describe the development of Command A, a powerful large language model purpose-built to excel at real-world enterprise use cases. Command A is an agent-optimised and multilingual-capable model, with support for 23 languages of global business, and a novel hybrid architecture balancing efficiency with top-of-the-range performance. It offers best-in-class Retrieval Augmented Generation (RAG) capabilities with grounding and tool use to automate sophisticated business processes. These abilities are achieved through a decentralised training approach, including self-refinement algorithms and model merging techniques. We also include results for Command R7B, which shares capability and architectural similarities with Command A. Weights for both models have been released for research purposes. This technical report details our original training pipeline and presents an extensive evaluation of our models across a suite of enterprise-relevant tasks and public benchmarks, demonstrating excellent performance and efficiency.

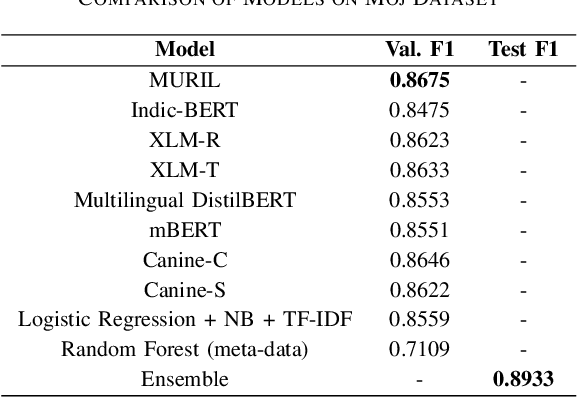

Multilingual Abusiveness Identification on Code-Mixed Social Media Text

Mar 01, 2022

Abstract: Social media platforms have seen steady adoption and growth in usage over time. This growth was further accelerated by the lockdown in the past year, when people's interaction, conversation, and expression were limited physically. It is becoming increasingly important to keep platforms safe from abusive content for a better user experience. Much work has been done on English social media content, but text analysis on non-English social media is relatively underexplored. Non-English social media content has the additional challenges of code-mixing, transliteration, and the use of different scripts in the same sentence. In this work, we propose an approach for abusiveness identification on the multilingual Moj dataset, which comprises Indic languages. Our approach tackles the common challenges of non-English social media content and can be extended to other languages as well.

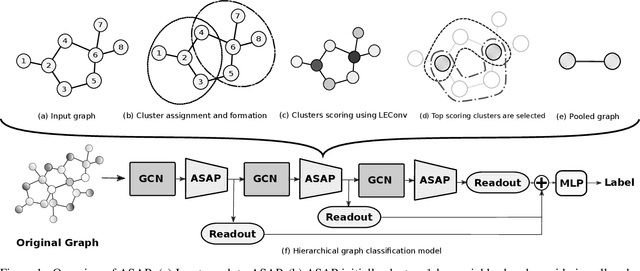

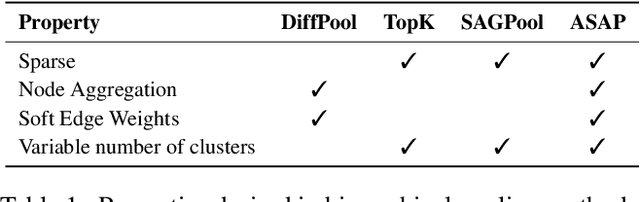

ASAP: Adaptive Structure Aware Pooling for Learning Hierarchical Graph Representations

Nov 18, 2019

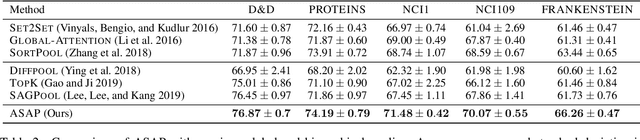

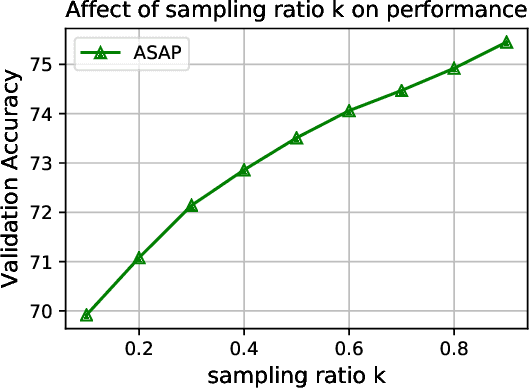

Abstract: Graph Neural Networks (GNNs) have been shown to work effectively for modeling graph-structured data to solve tasks such as node classification, link prediction, and graph classification. There has been some recent progress in defining the notion of pooling in graphs, whereby the model tries to generate a graph-level representation by downsampling and summarizing the information present in the nodes. Existing pooling methods either fail to effectively capture the graph substructure or do not easily scale to large graphs. In this work, we propose ASAP (Adaptive Structure Aware Pooling), a sparse and differentiable pooling method that addresses the limitations of previous graph pooling architectures. ASAP utilizes a novel self-attention network along with a modified GNN formulation to capture the importance of each node in a given graph. It also learns a sparse soft cluster assignment for nodes at each layer to effectively pool the subgraphs to form the pooled graph. Through extensive experiments on multiple datasets and theoretical analysis, we motivate our choice of the components used in ASAP. Our experimental results show that combining existing GNN architectures with ASAP leads to state-of-the-art results on multiple graph classification benchmarks. ASAP has an average improvement of 4% compared to the current sparse hierarchical state-of-the-art method.
