Naman Poddar

Multilingual Abusiveness Identification on Code-Mixed Social Media Text

Mar 01, 2022

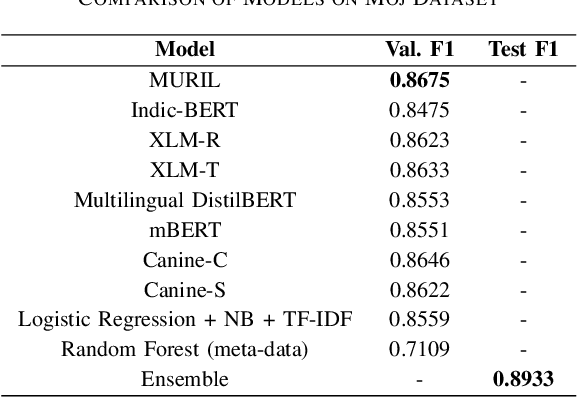

Abstract: Social media platforms have seen steady adoption and growth in usage over time. This growth accelerated during the lockdown of the past year, when people's in-person interaction, conversation, and expression were limited. It is becoming increasingly important to keep these platforms safe from abusive content for a better user experience. Much work has been done on English social media content, but text analysis of non-English social media is relatively underexplored. Non-English social media content poses the additional challenges of code-mixing, transliteration, and the use of different scripts in the same sentence. In this work, we propose an approach for abusiveness identification on the multilingual Moj dataset, which comprises Indic languages. Our approach tackles the common challenges of non-English social media content and can be extended to other languages as well.

Dis-entangling Mixture of Interventions on a Causal Bayesian Network Using Aggregate Observations

Jan 15, 2020

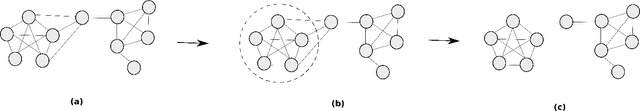

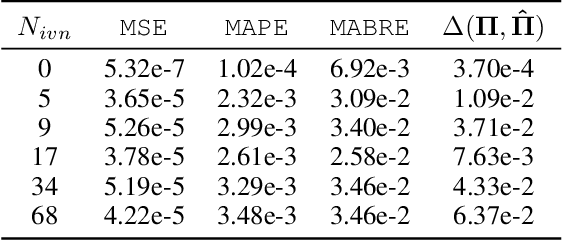

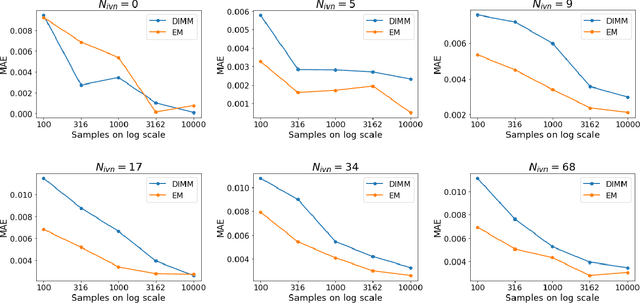

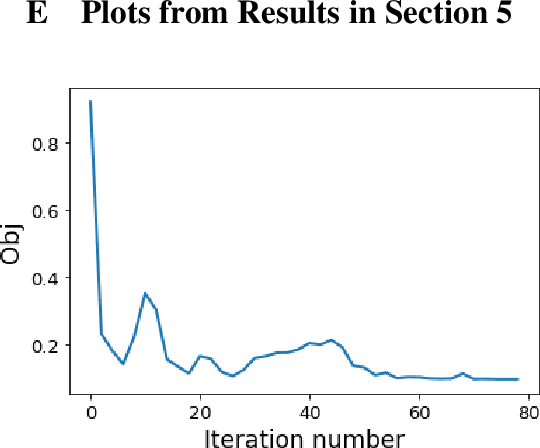

Abstract: We study the problem of separating a mixture of distributions, all of which come from interventions on a known causal Bayesian network. Given oracle access to the marginals of all distributions resulting from interventions on the network, and estimates of the marginals of the mixture distribution, we want to recover the mixing proportions of the different mixture components. We show that, in the worst case, the mixing proportions cannot be identified using marginals only. If the exact marginals of the mixture distribution are known, we show that the mixing proportions become identifiable under a simple assumption that excludes a few distributions from the mixture. Our identifiability proof is constructive and gives an efficient algorithm that recovers the mixing proportions exactly. When exact marginals are not available, we design an optimization framework to estimate the mixing proportions. Our problem is motivated by a real-world scenario in an e-commerce business, where multiple interventions occur at a given time, leading to deviations in expected metrics. We conduct experiments on the well-known, publicly available ALARM network and on a proprietary dataset from a large e-commerce company, validating the performance of our method.
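To make the estimation problem concrete, here is a minimal sketch of recovering mixing proportions from marginals. It is not the paper's algorithm: the abstract does not specify the optimization framework, so this example assumes a plain least-squares objective over the probability simplex, solved by projected gradient descent. The function names, the fixed iteration count, and the step-size rule are all illustrative assumptions.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto {w : w >= 0, sum(w) == 1}."""
    u = sorted(v, reverse=True)
    cumulative = 0.0
    theta = 0.0
    for i, ui in enumerate(u, start=1):
        cumulative += ui
        t = (cumulative - 1.0) / i
        if ui - t > 0:
            theta = t  # keep the threshold from the last index that qualifies
    return [max(x - theta, 0.0) for x in v]


def estimate_mixing_proportions(component_marginals, mixture_marginals, steps=5000):
    """Least-squares fit of simplex weights (hypothetical helper, not from the paper).

    component_marginals[c][j]: j-th marginal probability under component c.
    mixture_marginals[j]:      j-th (possibly noisy) marginal of the mixture.
    """
    k = len(component_marginals)
    m = len(mixture_marginals)
    # Conservative step size: the squared Frobenius norm bounds the
    # largest eigenvalue of the quadratic objective's Hessian / 2.
    frob_sq = sum(a * a for row in component_marginals for a in row)
    lr = 1.0 / (2.0 * frob_sq)
    w = [1.0 / k] * k  # start from the uniform mixture
    for _ in range(steps):
        residual = [
            sum(w[c] * component_marginals[c][j] for c in range(k)) - mixture_marginals[j]
            for j in range(m)
        ]
        grad = [
            2.0 * sum(residual[j] * component_marginals[c][j] for j in range(m))
            for c in range(k)
        ]
        w = project_to_simplex([w[c] - lr * grad[c] for c in range(k)])
    return w
```

When the component marginals are linearly independent, exact mixture marginals determine the weights uniquely and this fit recovers them. When they are not, several weight vectors explain the same marginals, which is the non-identifiability the abstract describes for the worst case.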
