Eduardo Fidalgo

Overcoming Occlusions in the Wild: A Multi-Task Age Head Approach to Age Estimation

Jun 16, 2025

Abstract:Facial age estimation has achieved considerable success under controlled conditions. However, in unconstrained real-world scenarios, often referred to as 'in the wild', age estimation remains challenging, especially when faces are partially occluded. To address this limitation, we propose a new approach integrating generative adversarial networks (GANs) and transformer architectures to enable robust age estimation from occluded faces. We employ an SN-Patch GAN to remove occlusions, while an Attentive Residual Convolution Module (ARCM), paired with a Swin Transformer, enhances feature representation. Additionally, we introduce a Multi-Task Age Head (MTAH) that combines regression and distribution learning, further improving age estimation under occlusion. Experimental results on the FG-NET, UTKFace, and MORPH datasets demonstrate that our proposed approach surpasses existing state-of-the-art techniques for occluded facial age estimation, achieving an MAE of $3.00$, $4.54$, and $2.53$ years, respectively.
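
A head that combines regression with distribution learning can be illustrated with a small sketch. The abstract does not give the MTAH loss, so everything below is an assumption for illustration: the Gaussian soft label, the KL term, the absolute-error regression term, the weight `lam`, and all function names are hypothetical and not taken from the paper.

```python
import math

def gaussian_label_distribution(true_age, ages, sigma=2.0):
    """Soft target: a discretized Gaussian centred on the true age (assumed form)."""
    weights = [math.exp(-((a - true_age) ** 2) / (2 * sigma ** 2)) for a in ages]
    total = sum(weights)
    return [w / total for w in weights]

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def kl_divergence(target, predicted, eps=1e-12):
    """KL(target || predicted) for two discrete distributions."""
    return sum(
        t * math.log((t + eps) / (p + eps))
        for t, p in zip(target, predicted)
        if t > 0
    )

def multi_task_age_loss(logits, regressed_age, true_age, ages, lam=1.0, sigma=2.0):
    """Hypothetical joint loss: absolute regression error plus a weighted KL term."""
    target = gaussian_label_distribution(true_age, ages, sigma)
    predicted = softmax(logits)
    regression_loss = abs(regressed_age - true_age)
    distribution_loss = kl_divergence(target, predicted)
    return regression_loss + lam * distribution_loss

ages = list(range(0, 101))
target = gaussian_label_distribution(30, ages)
perfect_logits = [math.log(p) for p in target]  # softmax of these recovers the target
print(multi_task_age_loss(perfect_logits, 33.0, 30, ages))
```

With logits that reproduce the soft label exactly, the distribution term vanishes and the loss reduces to the absolute error of the regressed age.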

Underage Detection through a Multi-Task and MultiAge Approach for Screening Minors in Unconstrained Imagery

Jun 12, 2025

Abstract:Accurate automatic screening of minors in unconstrained images demands models that are robust to distribution shift and resilient to the under-representation of children in publicly available data. To overcome these issues, we propose a multi-task architecture with dedicated under/over-age discrimination tasks based on a frozen FaRL vision-language backbone joined with a compact two-layer MLP that shares features across one age-regression head and four binary under-age heads for age thresholds of 12, 15, 18, and 21 years, focusing on the legally critical age range. To address the severe class imbalance, we introduce an $\alpha$-reweighted focal-style loss and age-balanced mini-batch sampling, which equalizes twelve age bins during stochastic optimization. Further improvement is achieved with an age gap that removes edge cases from the loss. Moreover, we establish a rigorous evaluation by proposing the Overall Under-Age Benchmark, with 303k cleaned training images and 110k test images, defining both the "ASORES-39k" restricted overall test, which removes the noisiest domains, and the age estimation wild shifts test "ASWIFT-20k" of 20k images, stressing extreme pose ($>45^\circ$), expression, and low image quality to emulate real-world shifts. Trained on the cleaned overall set with resampling and age gap, our multiage model "F" lowers the root-mean-square error on the ASORES-39k restricted test from 5.733 (age-only baseline) to 5.656 years and lifts the under-18 detection F2 score from 0.801 to 0.857 at a 1% false-adult rate. Under the domain shift to the wild data of ASWIFT-20k, the same configuration nearly sustains 0.99 recall while boosting F2 from 0.742 to 0.833 with respect to the age-only baseline, demonstrating strong generalization under distribution shift. For the under-12 and under-15 tasks, F2 rises from 0.666 to 0.955 and from 0.689 to 0.916, respectively.
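
The F2 scores reported above weight recall more heavily than precision. A minimal sketch of the standard F-beta formula and of binary under-age targets for the four thresholds follows. The helper names are hypothetical, and the labeling rule `age < threshold` is an assumption about how the binary heads are defined, not something the abstract states.

```python
def fbeta_score(y_true, y_pred, beta=2.0):
    """F-beta from binary labels; beta=2 weights recall four times as much as precision."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    b2 = beta ** 2
    denom = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denom if denom else 0.0

def under_age_labels(age, thresholds=(12, 15, 18, 21)):
    """One binary target per threshold (assumed rule: positive when age < threshold)."""
    return {t: age < t for t in thresholds}

# Positive class = minor. One missed minor and one false alarm.
y_true = [1, 1, 1, 0]
y_pred = [1, 1, 0, 1]
print(round(fbeta_score(y_true, y_pred, beta=2.0), 4))
print(under_age_labels(16))
```

In a screening setting a missed minor (false negative) is costlier than a false alarm, which is why a recall-weighted score such as F2 is a natural choice.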

MeWEHV: Mel and Wave Embeddings for Human Voice Tasks

Sep 28, 2022

Abstract:A recent trend in speech processing is the use of embeddings created through machine learning models trained on a specific task with large datasets. By leveraging the knowledge already acquired, these models can be reused in new tasks where the amount of available data is small. This paper proposes a pipeline to create a new model, called Mel and Wave Embeddings for Human Voice Tasks (MeWEHV), capable of generating robust embeddings for speech processing. MeWEHV combines the embeddings generated by a pre-trained raw audio waveform encoder model, and deep features extracted from Mel Frequency Cepstral Coefficients (MFCCs) using Convolutional Neural Networks (CNNs). We evaluate the performance of MeWEHV on three tasks: speaker, language, and accent identification. For the first one, we use the VoxCeleb1 dataset and present YouSpeakers204, a new and publicly available dataset for English speaker identification that contains 19607 audio clips from 204 persons speaking in six different accents, allowing other researchers to work with a very balanced dataset, and to create new models that are robust to multiple accents. For evaluating the language identification task, we use the VoxForge and Common Language datasets. Finally, for accent identification, we use the Latin American Spanish Corpora (LASC) and Common Voice datasets. Our approach allows a significant increase in the performance of state-of-the-art models on all the tested datasets, with a low additional computational cost.

Efficient Detection of Botnet Traffic by features selection and Decision Trees

Jun 30, 2021

Abstract:Botnets are among the most prevalent online threats, causing losses in the billions to global economies. Nowadays, the increasing number of devices connected to the Internet makes it necessary to analyze large amounts of network traffic data. In this work, we focus on increasing the performance of botnet traffic classification by selecting those features that further increase the detection rate. For this purpose, we use two feature selection techniques, Information Gain and Gini Importance, which led to three pre-selected subsets of five, six and seven features. Then, we evaluate the three feature subsets along with three models: Decision Tree, Random Forest and k-Nearest Neighbors. To test the performance of the three feature vectors and the three models, we generate two datasets based on the CTU-13 dataset, namely QB-CTU13 and EQB-CTU13. We measure the performance as the macro-averaged F1 score over the computational time required to classify a sample. The results show that the highest performance is achieved by Decision Trees using a five-feature set, which obtained a mean F1 score of 85% while classifying each sample in an average time of 0.78 microseconds.
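
Information Gain, one of the two selection criteria named above, can be sketched for a discrete feature as the drop in label entropy after splitting on that feature. This is the textbook definition, not the authors' pipeline: the toy feature values and labels are invented for illustration, and continuous traffic features would first need to be discretized.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(feature_values, labels):
    """Entropy of the labels minus the weighted entropy after grouping by feature value."""
    n = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - conditional

# Toy data (hypothetical): one feature separates the classes, the other does not.
labels = ["bot", "bot", "normal", "normal"]
separating = [1, 1, 0, 0]
uninformative = [1, 0, 1, 0]
print(information_gain(separating, labels))
print(information_gain(uninformative, labels))
```

Ranking features by this score and keeping the top few is one simple way to arrive at small subsets such as the five-, six-, and seven-feature sets mentioned in the abstract.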

Short Text Classification Approach to Identify Child Sexual Exploitation Material

Nov 13, 2020

Abstract:Producing or sharing Child Sexual Exploitation Material (CSEM) is a serious crime fought vigorously by Law Enforcement Agencies (LEAs). When an LEA seizes a computer from a potential producer or consumer of CSEM, it needs to analyze the files on the suspect's hard disk for evidence. However, manually inspecting file content for CSEM is time-consuming and, in most cases, infeasible within the time available to the Spanish police under a search warrant. Instead of analyzing file content, another approach that can speed up the process is to identify CSEM by analyzing the file names and their absolute paths. The main challenge for this task lies in dealing with short text deliberately distorted by the owners of this material using obfuscated words and user-defined naming patterns. This paper presents and compares two approaches based on short text classification to identify CSEM files. The first one employs two independent supervised classifiers, one for the file name and the other for the path, and their outputs are later fused into a single score. Conversely, the second approach uses only the file name classifier to iterate over the file's absolute path. Both approaches operate at the character n-gram level, while binary and orthographic features enrich the file name representation, and a binary Logistic Regression model is used for classification. The presented file classifier achieved an average class recall of 0.98. This solution could be integrated into forensic tools and services to support Law Enforcement Agencies in identifying CSEM without processing every file's visual content, which is computationally much more demanding.
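
Character n-grams are robust to deliberate misspellings because an obfuscated word still shares most of its short substrings with the original. The sketch below shows the feature extraction step only, on a benign path. The n-gram range, the lowercasing, and both helper names are assumptions, since the abstract does not specify them.

```python
def char_ngrams(text, n_min=2, n_max=3):
    """All character n-grams of the lowercased text for n in [n_min, n_max]."""
    text = text.lower()
    return [
        text[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(text) - n + 1)
    ]

def split_path(absolute_path):
    """Split an absolute path into (directory components, file name)."""
    parts = [p for p in absolute_path.replace("\\", "/").split("/") if p]
    return parts[:-1], parts[-1]

directories, file_name = split_path("/home/user/Holiday_2019.jpg")
print(directories, file_name)
print(char_ngrams("abc"))
# Overlap between a word and an obfuscated spelling of it:
shared = set(char_ngrams("holiday")) & set(char_ngrams("h0liday"))
print(sorted(shared))
```

In the first approach described above, the file name and the directory part would each feed their own classifier. In the second, a single file-name classifier would be applied to each path component in turn.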

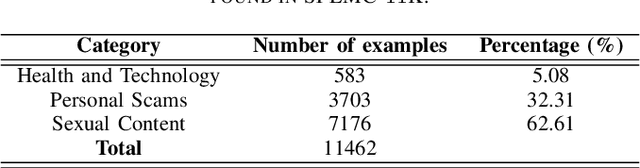

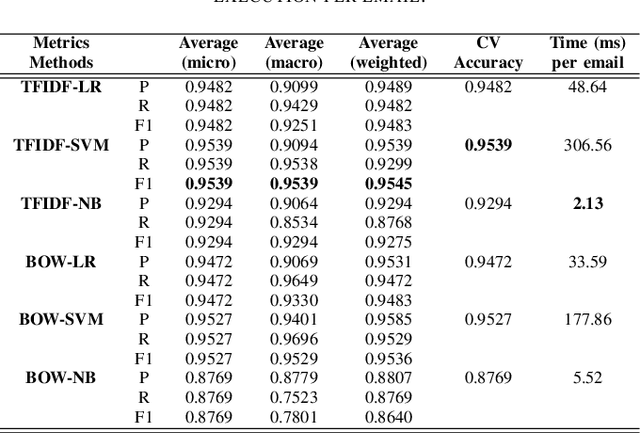

Classification of Spam Emails through Hierarchical Clustering and Supervised Learning

May 28, 2020

Abstract:Spammers take advantage of email popularity to indiscriminately send unsolicited emails. Although researchers and organizations continuously develop anti-spam filters based on binary classification, spammers bypass them through new strategies, like word obfuscation or image-based spam. For the first time in the literature, we propose to classify spam email into categories to improve the handling of already detected spam emails, instead of just using a binary model. First, we applied a hierarchical clustering algorithm to create SPEMC-11K (SPam EMail Classification), the first multi-class dataset, which contains three types of spam emails: Health and Technology, Personal Scams, and Sexual Content. Then, we used SPEMC-11K to evaluate the combination of TF-IDF and BOW encodings with Naïve Bayes, Decision Trees and SVM classifiers. Finally, for the task of multi-class spam classification, we recommend the use of (i) TF-IDF combined with SVM for the best micro F1 score, $95.39\%$, and (ii) TF-IDF along with NB for the fastest spam classification, analyzing an email in $2.13$ ms.
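
The difference between the BOW and TF-IDF encodings compared above can be shown in a few lines. This sketch uses one common TF-IDF variant (relative term frequency times `log(N / df)`); the abstract does not say which variant or tokenizer the authors used, and the two toy messages are invented.

```python
import math
from collections import Counter

def bag_of_words(document):
    """BOW encoding: raw term counts."""
    return Counter(document.lower().split())

def tf_idf(documents):
    """TF-IDF per document, with tf = count / length and idf = log(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        vectors.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return vectors

docs = ["cheap pills online", "win money online"]
print(bag_of_words(docs[0]))
for vector in tf_idf(docs):
    print(vector)
```

A term that appears in every message, such as "online" here, gets a TF-IDF weight of zero, while BOW counts it like any other word. The resulting sparse vectors would then be passed to a classifier such as an SVM or Naïve Bayes.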

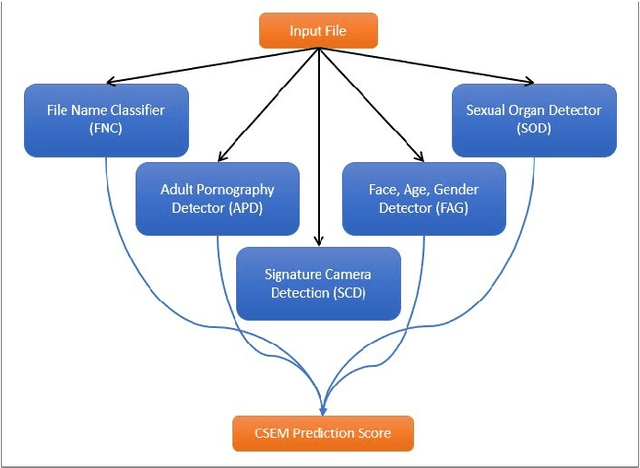

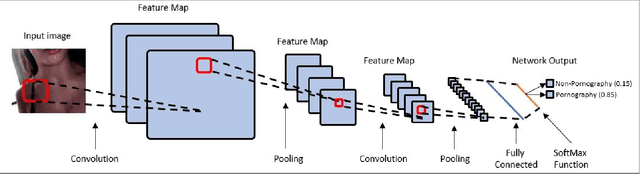

Evaluating Performance of an Adult Pornography Classifier for Child Sexual Abuse Detection

May 18, 2020

Abstract:The information technology revolution has made pornographic material accessible to everyone, including minors, who are the most vulnerable in cases of abuse. Accuracy and time performance are desirable features of forensic tools oriented to child sexual abuse detection, whose main components may rely on image or video classifiers. In this paper, we identify the hardware and software requirements that may affect the performance of a forensic tool. We evaluated the adult porn classifier proposed by Yahoo, based on Deep Learning, on two different operating systems and four hardware configurations, with two different CPUs and four different GPUs. The classification speed on the Ubuntu operating system is $\sim 5$ and $\sim 2$ times faster than on Windows 10 when a CPU and a GPU are used, respectively. We demonstrate the superiority of a GPU-based machine over a CPU-based one, the former being $7$ to $8$ times faster. Finally, we show that the upward and downward interpolation process conducted while resizing the input images does not influence the performance of the selected prediction model.

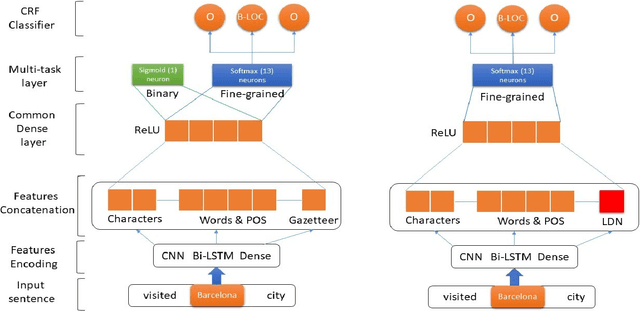

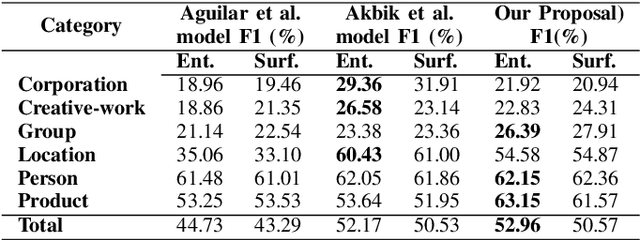

Improving Named Entity Recognition in Tor Darknet with Local Distance Neighbor Feature

May 18, 2020

Abstract:Named entity recognition in noisy user-generated texts is a difficult task usually enhanced by incorporating an external resource of information, such as gazetteers. However, gazetteers are task-specific, and they are expensive to build and maintain. This paper adopts and improves the approach of Aguilar et al. by presenting a novel feature, called Local Distance Neighbor, which substitutes gazetteers. We tested the new approach on the W-NUT-2017 dataset, obtaining state-of-the-art results for the Group, Person and Product categories of Named Entities. Next, we added 851 manually labeled samples to the W-NUT-2017 dataset to account for named entities in the Tor Darknet related to weapons and drug selling. Finally, our proposal achieved entity and surface F1 scores of 52.96% and 50.57%, respectively, on this extended dataset, demonstrating its usefulness for Law Enforcement Agencies to detect named entities in Tor hidden services.

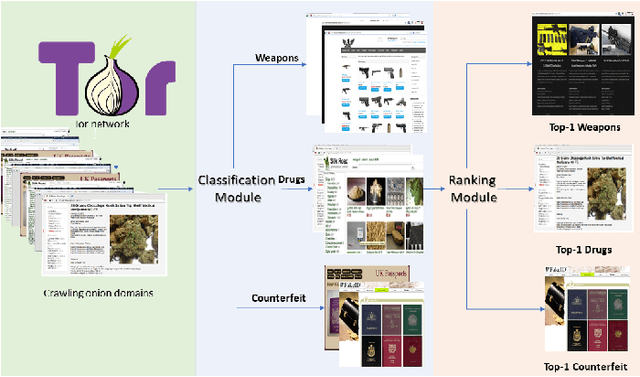

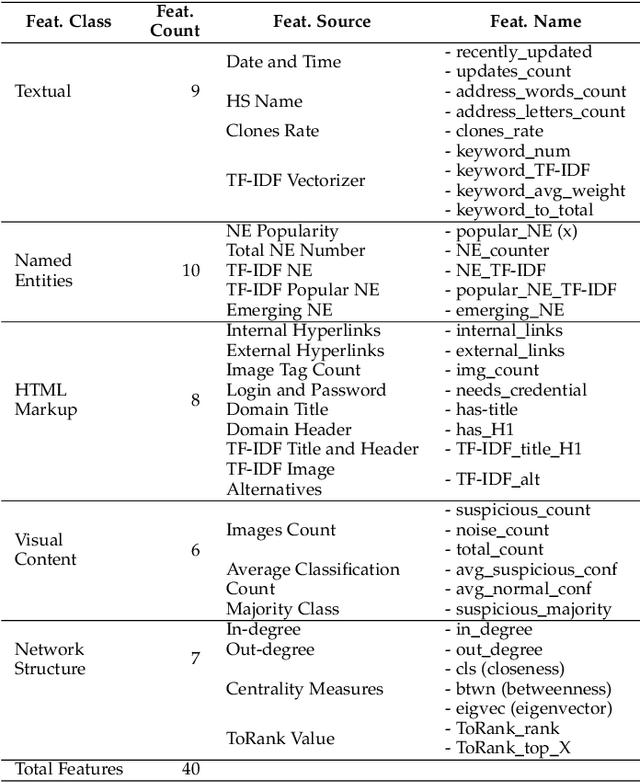

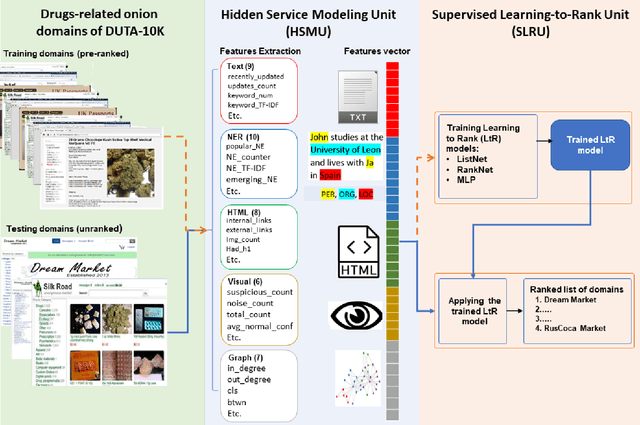

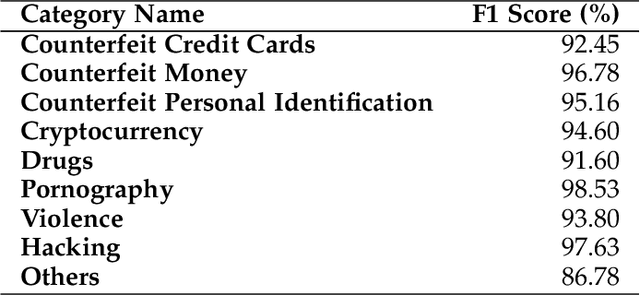

Content-Based Features to Rank Influential Hidden Services of the Tor Darknet

Oct 05, 2019

Abstract:The uneven importance of criminal activities in the onion domains of the Tor Darknet and the different levels of their appeal to the end-user make it difficult to measure their influence. To this end, this paper presents a novel content-based ranking framework to detect the most influential onion domains. Our approach comprises a modeling unit that represents an onion domain using forty features extracted from five different resources (user-visible text, HTML markup, Named Entities, network topology, and visual content) and a ranking unit that, using the Learning-to-Rank (LtR) approach, automatically learns a ranking function by integrating the previously obtained features. Using a case study based on drug-related onion domains, we obtained the following results. (1) Among the explored LtR schemes, the listwise approach outperforms the benchmarked methods with an NDCG of 0.95 for the top-10 ranked domains. (2) We showed quantitatively that our framework surpasses the link-based ranking techniques. (3) With the selected features, we observed that the textual content, composed of text, NER, and HTML features, is the most balanced approach in terms of efficiency and score obtained. The proposed framework might support Law Enforcement Agencies in detecting the most influential domains related to possible suspicious activities.
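
NDCG, the metric behind the 0.95 top-10 figure above, compares the discounted gain of a predicted ranking with that of the ideal ranking. The sketch below uses the linear-gain form with a `log2(rank + 1)` discount. Whether the paper uses linear or exponential gain is not stated, and the relevance grades here are invented.

```python
import math

def dcg_at_k(relevances, k):
    """Discounted cumulative gain of the first k items (linear gain, log2 discount)."""
    return sum(
        rel / math.log2(rank + 1)
        for rank, rel in enumerate(relevances[:k], start=1)
    )

def ndcg_at_k(relevances, k):
    """DCG normalized by the DCG of the ideal (descending) ordering."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

# Relevance grades of domains in the order a ranker returned them (hypothetical).
perfect_order = [3, 2, 1, 0]
reversed_order = [0, 1, 2, 3]
print(ndcg_at_k(perfect_order, k=10))
print(round(ndcg_at_k(reversed_order, k=10), 4))
```

A ranking that already lists domains in descending relevance scores exactly 1, and any misordering near the top of the list lowers the score more than the same misordering further down.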
