Duc Vu

Learning Graph Filters for Structure-Function Coupling based Hub Node Identification

Oct 22, 2024

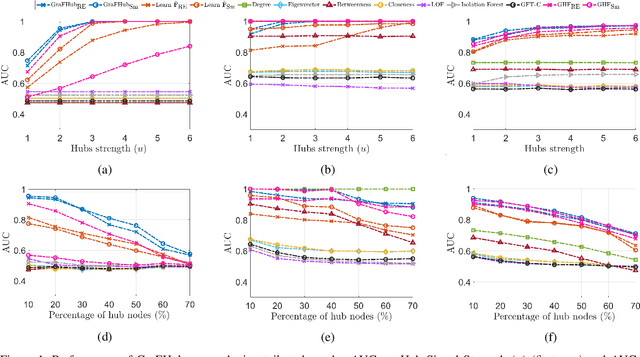

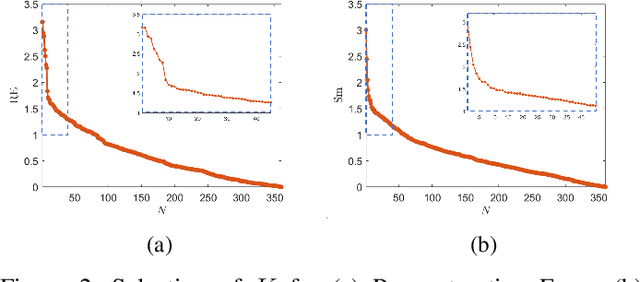

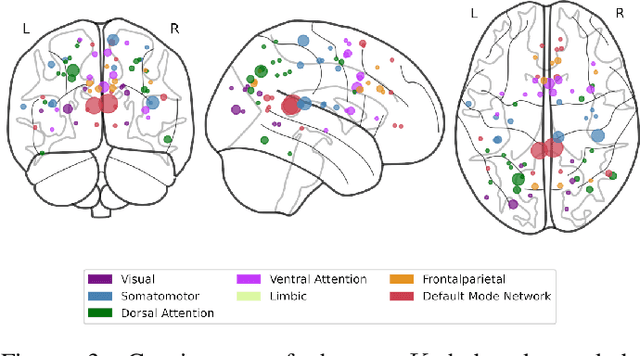

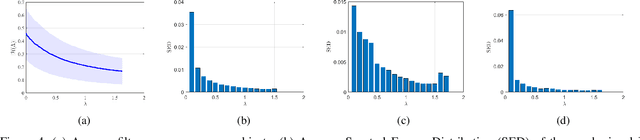

Abstract: Over the past two decades, tools from network science have been leveraged to characterize the organization of both structural and functional networks of the brain. One such measure of network organization is hub node identification. Hubs are specialized nodes within a network that link distinct brain units corresponding to specialized functional processes. Conventional methods for identifying hub nodes utilize different types of centrality measures and the participation coefficient to profile various aspects of nodal importance. These methods rely solely on the functional connectivity networks constructed from functional magnetic resonance imaging (fMRI), ignoring the structure-function coupling in the brain. In this paper, we introduce a graph signal processing (GSP) based hub detection framework that utilizes both the structural connectivity and the functional activation to identify hub nodes. The proposed framework models functional activity as graph signals on the structural connectivity. Hub nodes are then detected based on the premise that hub nodes are sparse and have a higher level of activity than their neighbors, and that the non-hub nodes' activity can be modeled as the output of a graph-based filter. Based on these assumptions, an optimization framework, GraFHub, is formulated to learn the coefficients of the optimal polynomial graph filter and detect the hub nodes. The proposed framework is evaluated on both simulated data and resting state fMRI (rs-fMRI) data from the Human Connectome Project (HCP).
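As a rough illustration of the "polynomial graph filter" mentioned in the abstract, the sketch below applies a filter of the form y = sum_k h_k S^k x to a graph signal. It is not the GraFHub optimization itself: the function name `polynomial_graph_filter`, the toy 4-node path graph, the choice of the combinatorial Laplacian as the shift operator S, and the coefficient values are all assumptions made for illustration. The abstract does not specify any of them.

```python
import numpy as np


def polynomial_graph_filter(shift, signal, coeffs):
    """Return sum_k coeffs[k] * (shift^k @ signal).

    Matrix powers are never formed explicitly; the shifted signal is
    updated one multiplication at a time.
    """
    shift = np.asarray(shift, dtype=float)
    term = np.asarray(signal, dtype=float)  # shift^0 @ signal
    out = np.zeros_like(term)
    for h in coeffs:
        out = out + h * term
        term = shift @ term  # advance to the next power of the shift
    return out


# Hypothetical 4-node path graph standing in for structural connectivity.
A = np.array(
    [
        [0, 1, 0, 0],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [0, 0, 1, 0],
    ],
    dtype=float,
)

# Assumed shift operator: combinatorial Laplacian L = D - A.
L = np.diag(A.sum(axis=1)) - A

# Hypothetical functional activity, one value per node.
x = np.array([1.0, 2.0, 3.0, 4.0])

# Hypothetical filter coefficients h_0, h_1 (a mild low-pass filter).
coeffs = [1.0, -0.25]

y = polynomial_graph_filter(L, x, coeffs)
print(y)
```

In a learning setting such as the one the abstract describes, the coefficients would be fitted to data rather than fixed by hand; here they are fixed only to show the forward filtering step.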

SwiftBrush v2: Make Your One-step Diffusion Model Better Than Its Teacher

Aug 27, 2024

Abstract: In this paper, we aim to enhance the performance of SwiftBrush, a prominent one-step text-to-image diffusion model, to be competitive with its multi-step Stable Diffusion counterpart. Initially, we explore the quality-diversity trade-off between SwiftBrush and SD Turbo: the former excels in image diversity, while the latter excels in image quality. This observation motivates our proposed modifications in the training methodology, including better weight initialization and efficient LoRA training. Moreover, our introduction of a novel clamped CLIP loss enhances image-text alignment and results in improved image quality. Remarkably, by combining the weights of models trained with efficient LoRA and full training, we obtain a new state-of-the-art one-step diffusion model that achieves an FID of 8.14 and surpasses all GAN-based and multi-step Stable Diffusion models. The project page is available at https://swiftbrushv2.github.io.
