Dongwon Son

DEF-oriCORN: efficient 3D scene understanding for robust language-directed manipulation without demonstrations

Jul 31, 2024

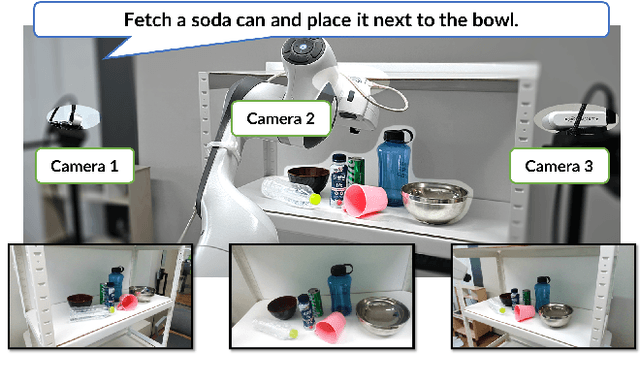

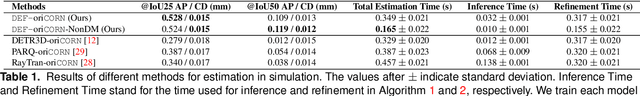

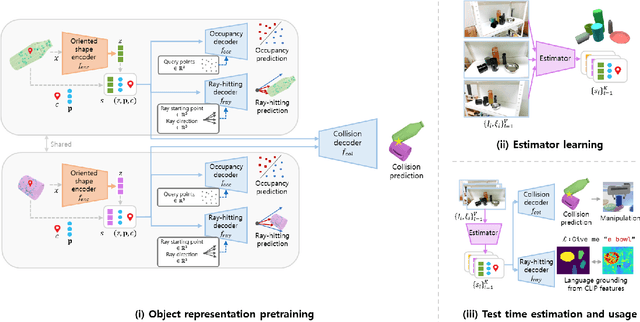

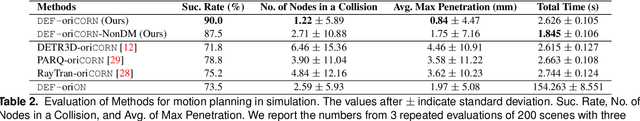

Abstract: We present DEF-oriCORN, a framework for language-directed manipulation tasks. By leveraging a novel object-based scene representation and a diffusion-model-based state estimation algorithm, our framework enables efficient and robust manipulation planning in response to verbal commands, without any demonstrations, even in tightly packed environments observed from sparse camera views. Unlike traditional representations, our representation affords efficient collision checking and language grounding. Compared to state-of-the-art baselines, our framework achieves superior estimation and motion planning performance from sparse RGB images and generalizes zero-shot to real-world scenarios with diverse materials, including transparent and reflective objects, despite being trained exclusively in simulation. Our code for data generation, training, and inference, along with pre-trained weights, is publicly available at: https://sites.google.com/view/def-oricorn/home.

Local object crop collision network for efficient simulation of non-convex objects in GPU-based simulators

Apr 19, 2023

Abstract: Our goal is to develop an efficient contact detection algorithm for large-scale GPU-based simulation of non-convex objects. Current GPU-based simulators such as IsaacGym and Brax must trade off speed against fidelity, generality, or both when simulating non-convex objects. Their main issue lies in contact detection (CD): existing CD algorithms, such as Gilbert-Johnson-Keerthi (GJK), must trade off computational speed against accuracy, which becomes expensive as the number of collisions among non-convex objects increases. We propose a data-driven approach to CD whose accuracy depends only on the quality and quantity of the offline dataset rather than on online computation time. Unlike GJK, our method inherently has a uniform computational flow, which facilitates efficient GPU usage with advanced compilers such as XLA (Accelerated Linear Algebra). Further, we offer a data-efficient solution by learning the patterns of colliding local object-shape crops rather than global object shapes, which are harder to learn. We demonstrate that our approach improves the efficiency of existing CD methods by a factor of 5-10 for non-convex objects with comparable accuracy. Using previous work on contact resolution for a neural-network-based contact detector, we integrate our CD algorithm into the open-source GPU-based simulator Brax, and show that we can improve efficiency over IsaacGym and generality over standard Brax. We highly recommend the videos of our simulator included in the supplementary materials.

Reinforcement Learning for Vision-based Object Manipulation with Non-parametric Policy and Action Primitives

Jun 12, 2022

Abstract: Object manipulation is a crucial ability for a service robot, but it is hard to solve with reinforcement learning for reasons such as poor sample efficiency. To tackle this problem, we propose a novel framework, AP-NPQL (Non-Parametric Q Learning with Action Primitives), that efficiently solves object manipulation from visual input and sparse rewards by combining a non-parametric policy for reinforcement learning with an appropriate behavior prior for object manipulation. We evaluate the efficiency and performance of AP-NPQL on four object manipulation tasks in simulation (pushing a plate, stacking a box, flipping a cup, and picking and placing a plate), where it outperforms state-of-the-art algorithms based on parametric policies and behavior priors in terms of learning time and task success rate. We also successfully transfer the learned policy for the plate pick-and-place task to a real robot in a sim-to-real manner and validate it there.

Grasping as Inference: Reactive Grasping in Heavily Cluttered Environment

Jun 03, 2022

Abstract: In data-driven grasping, closed-loop feedback and predicting 6 degrees of freedom (DoF) grasps, rather than the conventionally used 4DoF top-down grasps, have each been shown to improve performance individually, yet few systems have both. Moreover, the sequential nature of the task is rarely addressed, even though the approaching motion necessarily generates a series of observations. This paper therefore synthesizes these three approaches and proposes a closed-loop framework that predicts 6DoF grasps in a heavily cluttered environment from continuously received visual observations. This is realized by formulating the grasping problem as a Hidden Markov Model and applying a particle filter to infer the grasp. Additionally, we introduce a novel lightweight Convolutional Neural Network (CNN) model that evaluates and initializes grasp samples in real time, making the particle filter process feasible. Experiments conducted on a real robot in a heavily cluttered environment show that our framework not only significantly improves the grasping success rate compared to the baseline algorithms, but also reacts to dynamic changes in the environment and cleans up the table.
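
The particle filter idea mentioned in the abstract can be illustrated with a generic predict, weight, resample loop. The sketch below is not the authors' implementation: it assumes grasp candidates are plain Euclidean vectors (real 6DoF poses need proper orientation handling), and `score_grasps` is a hypothetical Gaussian stand-in for the learned CNN grasp evaluator. The function names, noise levels, and particle counts are likewise assumptions chosen for illustration.

```python
import numpy as np

def score_grasps(particles, observation, sigma=0.1):
    """Hypothetical stand-in for a learned grasp evaluator.

    Returns a Gaussian likelihood of each particle given the observation,
    which here is simply a noisy target vector (an assumption).
    """
    d2 = np.sum((particles - observation) ** 2, axis=1)
    return np.exp(-0.5 * d2 / sigma**2)

def particle_filter_step(particles, observation, rng, motion_noise=0.02):
    """One predict / weight / resample cycle of a basic particle filter."""
    # Predict: diffuse the grasp hypotheses with Gaussian noise.
    particles = particles + rng.normal(0.0, motion_noise, size=particles.shape)
    # Weight: score each hypothesis against the newest observation.
    weights = score_grasps(particles, observation) + 1e-12  # avoid all-zero weights
    weights /= weights.sum()
    # Resample: systematic resampling proportional to the weights.
    n = len(particles)
    positions = (rng.random() + np.arange(n)) / n
    cumulative = np.cumsum(weights)
    cumulative[-1] = 1.0  # guard against floating-point round-off
    return particles[np.searchsorted(cumulative, positions)]

def run_filter(observations, n_particles=500, seed=0):
    """Run the filter over a sequence of observations; return the mean particle."""
    rng = np.random.default_rng(seed)
    dim = len(observations[0])
    particles = rng.uniform(-1.0, 1.0, size=(n_particles, dim))
    for obs in observations:
        particles = particle_filter_step(particles, obs, rng)
    return particles.mean(axis=0)
```

For example, `run_filter([np.array([0.3, -0.2])] * 30, n_particles=2000)` feeds the same two-dimensional observation thirty times and returns the mean of the surviving particles, which should settle near that vector. In a closed-loop system each new camera frame would supply a fresh observation, so the estimate can follow changes in the scene.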

Fast and Accurate Data-Driven Simulation Framework for Contact-Intensive Tight-Tolerance Robotic Assembly Tasks

Feb 26, 2022

Abstract: We propose a novel, fast, and accurate simulation framework for contact-intensive tight-tolerance robotic assembly tasks. The key components of our framework are as follows: 1) data-driven contact point clustering with a variable-input network, which is explicitly trained for simulation accuracy (with real experimental data) and is able to accommodate complex/non-convex object shapes; 2) contact force solving, which precisely and robustly enforces the physics of contact (i.e., no penetration, Coulomb friction, maximum energy dissipation) with the contact mechanics of contact nodes augmented with that of their object; 3) contact detection with a neural network, which is parallelized over contact points and thus can be computed very quickly even for objects with complex shapes, with no exhaustive pair-wise test; and 4) time integration with PMI (passive mid-point integration), whose discrete-time passivity improves overall simulation accuracy, stability, and speed. We then implement our proposed framework for two widely encountered and benchmarked contact-intensive tight-tolerance tasks, namely peg-in-hole assembly and bolt-nut assembly, and validate its speed and accuracy against real experimental data. Our proposed simulation framework is also applicable to other general contact-intensive tight-tolerance robotic assembly tasks. We also compare its performance with other physics engines and demonstrate its robustness via haptic rendering of a virtual bolting task.
