Dong Jin

Rethinking Table Pruning in TableQA: From Sequential Revisions to Gold Trajectory-Supervised Parallel Search

Jan 07, 2026

Abstract: Table Question Answering (TableQA) benefits significantly from table pruning, which extracts compact sub-tables by eliminating redundant cells to streamline downstream reasoning. However, existing pruning methods typically rely on sequential revisions driven by unreliable critique signals, often failing to detect the loss of answer-critical data. To address this limitation, we propose TabTrim, a novel table pruning framework that transforms table pruning from sequential revisions to gold trajectory-supervised parallel search. TabTrim derives a gold pruning trajectory from the intermediate sub-tables produced while executing gold SQL queries, and trains a pruner and a verifier so that each step-wise pruning result aligns with the gold pruning trajectory. During inference, TabTrim performs parallel search to explore multiple candidate pruning trajectories and identify the optimal sub-table. Extensive experiments demonstrate that TabTrim achieves state-of-the-art performance across diverse tabular reasoning tasks: TabTrim-8B reaches 73.5% average accuracy, including 79.4% on WikiTQ and 61.2% on TableBench, outperforming the strongest baseline by 3.2%.
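The inference-time idea in this abstract (explore several candidate prunings, then let a verifier pick one) can be illustrated with a toy best-of-N search. This is only a sketch under loud assumptions: `toy_pruner`, `toy_verifier`, and `parallel_search` are hypothetical names, the pruner is random rather than learned, the verifier is a keyword-overlap heuristic, candidates are sampled one after another rather than truly in parallel, and only the final sub-table is scored. None of this is TabTrim's actual implementation.

```python
import random
from typing import Dict, List, Tuple

Row = Dict[str, str]


def toy_pruner(rows: List[Row], rng: random.Random) -> List[Row]:
    """Hypothetical stand-in for a learned pruner.

    Performs one random column-pruning step and one random row-pruning step.
    Falls back to keeping everything if a step would drop all columns or rows.
    """
    columns = list(rows[0].keys())
    kept_cols = [c for c in columns if rng.random() < 0.7] or columns
    kept_rows = [r for r in rows if rng.random() < 0.7] or rows
    return [{c: r[c] for c in kept_cols} for r in kept_rows]


def toy_verifier(question: str, sub_table: List[Row]) -> float:
    """Hypothetical stand-in for a learned verifier.

    Rewards question terms that appear as headers or cell values,
    with a small penalty per retained cell to favor compact sub-tables.
    """
    terms = set(question.lower().replace("?", "").split())
    cells = set()
    for row in sub_table:
        for col, val in row.items():
            cells.add(col.lower())
            cells.add(str(val).lower())
    size = sum(len(row) for row in sub_table)
    return len(terms & cells) - 0.01 * size


def parallel_search(
    question: str, rows: List[Row], num_candidates: int = 16, seed: int = 0
) -> Tuple[float, List[Row]]:
    """Sample several candidate sub-tables and return the best-scoring one."""
    rng = random.Random(seed)
    candidates = [toy_pruner(rows, rng) for _ in range(num_candidates)]
    scored = [(toy_verifier(question, c), c) for c in candidates]
    return max(scored, key=lambda pair: pair[0])
```

Calling `parallel_search("What is the population of Oslo?", rows)` on a small list of row dictionaries returns a `(score, sub_table)` pair. A real system would replace both toy functions with trained models and score intermediate steps as well.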

SQLForge: Synthesizing Reliable and Diverse Data to Enhance Text-to-SQL Reasoning in LLMs

May 19, 2025

Abstract: Large language models (LLMs) have demonstrated significant potential in text-to-SQL reasoning tasks, yet a substantial performance gap persists between existing open-source models and their closed-source counterparts. In this paper, we introduce SQLForge, a novel approach for synthesizing reliable and diverse data to enhance text-to-SQL reasoning in LLMs. We improve data reliability through SQL syntax constraints and SQL-to-question reverse translation, ensuring data logic at both structural and semantic levels. We also propose an SQL template enrichment and iterative data domain exploration mechanism to boost data diversity. Building on the augmented data, we fine-tune a variety of open-source models with different architectures and parameter sizes, resulting in a family of models termed SQLForge-LM. SQLForge-LM achieves state-of-the-art performance among open-source models on the widely recognized Spider and BIRD benchmarks. Specifically, SQLForge-LM achieves EX accuracy of 85.7% on Spider Dev and 59.8% on BIRD Dev, significantly narrowing the performance gap with closed-source methods.
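One simple way to enforce a structural check on synthesized SQL is to compile each candidate against the target schema and discard anything that fails. The sketch below does this with Python's standard `sqlite3` module. The function name `filter_valid_sql` is hypothetical, SQLite's dialect is an assumption, and the abstract does not specify how SQLForge implements its syntax constraints, so this should be read as an illustration of the general idea only.

```python
import sqlite3
from typing import List


def filter_valid_sql(schema_ddl: str, candidates: List[str]) -> List[str]:
    """Keep only the candidate queries that SQLite can compile against the schema.

    EXPLAIN asks SQLite to prepare the statement, so syntax errors and
    references to missing tables or columns surface as exceptions.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(schema_ddl)  # build empty tables from the DDL
        valid = []
        for sql in candidates:
            try:
                conn.execute(f"EXPLAIN {sql}")
                valid.append(sql)
            except (sqlite3.Error, sqlite3.Warning):
                # Rejected: does not parse or does not match the schema.
                pass
        return valid
    finally:
        conn.close()
```

This filter only covers the structural level. A semantic check, such as translating the SQL back into a question and comparing it with the intended one, would need a separate model-based step.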

Deep Medical Image Analysis with Representation Learning and Neuromorphic Computing

May 11, 2020

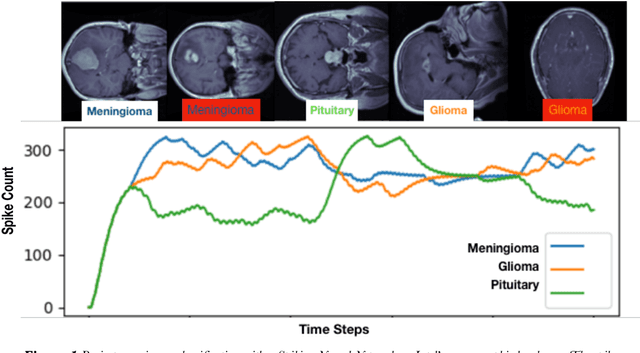

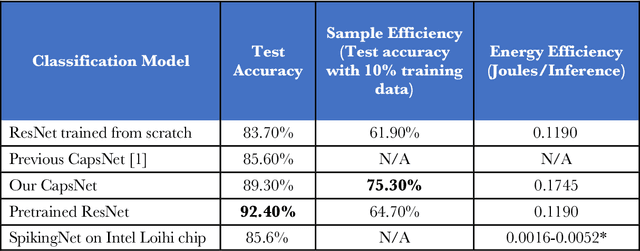

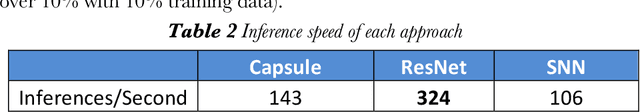

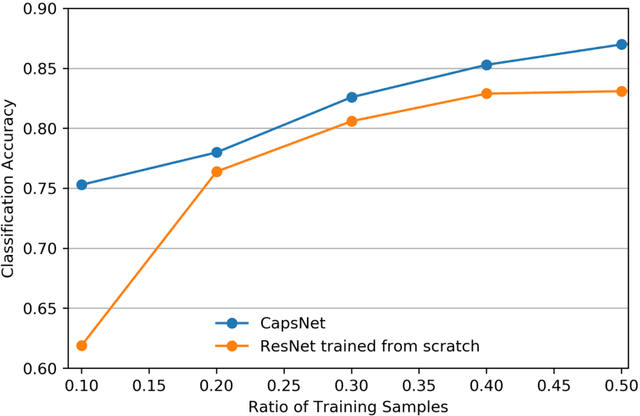

Abstract: We explore three representative lines of research and demonstrate the utility of our methods on a classification benchmark of brain cancer MRI data. First, we present a capsule network that explicitly learns a representation robust to rotation and affine transformation. This model requires less training data and outperforms both the original convolutional baseline and a previous capsule network implementation. Second, we leverage the latest domain adaptation techniques to achieve a new state-of-the-art accuracy. Our experiments show that non-medical images can be used to improve model performance. Finally, we design a spiking neural network trained on the Intel Loihi neuromorphic chip (Fig. 1 shows an inference snapshot). This model consumes much less power while achieving reasonable accuracy given the model reduction. We posit that more research in this direction, combining hardware and learning advancements, will power future medical imaging (on-device AI, few-shot prediction, adaptive scanning).

Real-time Anomaly Detection and Classification in Streaming PMU Data

Nov 14, 2019

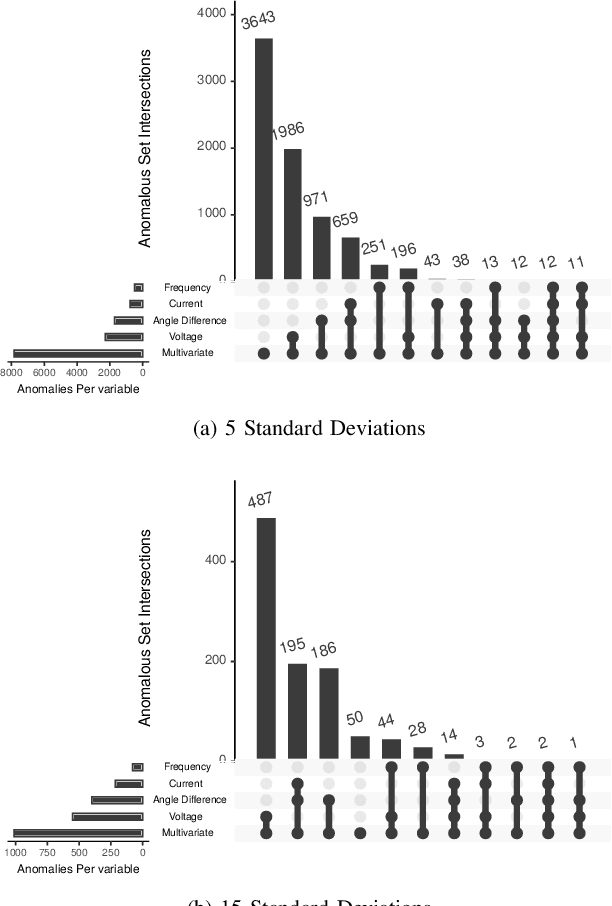

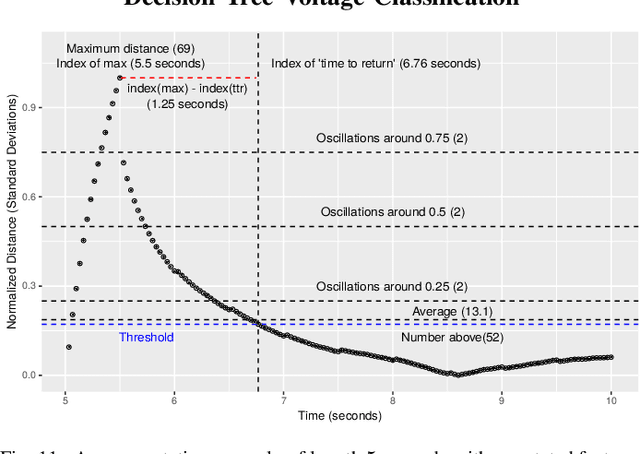

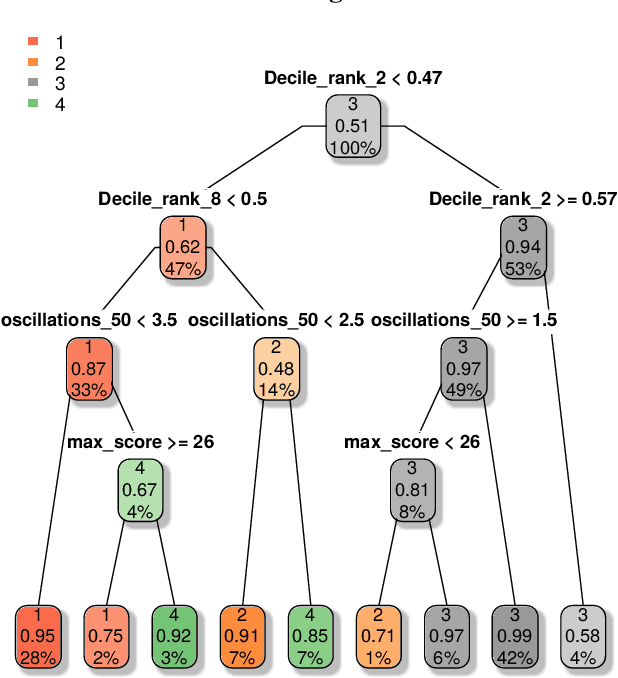

Abstract: Ensuring secure and reliable operation of the power grid is a primary concern of system operators. Phasor measurement units (PMUs) are rapidly being deployed in the grid to provide fast-sampled operational data that should enable quicker decision-making. This work presents a general interpretable framework for analyzing real-time PMU data, enabling grid operators to understand the current state and to identify anomalies on the fly. Applying statistical learning tools to the streaming data, we first learn an effective dynamical model to describe the current behavior of the system. Next, we use the probabilistic predictions of our learned model to define an efficient anomaly detection tool in a principled way. Finally, the last module of our framework classifies the detected anomalies on the fly into common occurrence classes, using features that grid operators are familiar with. We demonstrate the efficacy of our interpretable approach through extensive numerical experiments on real PMU data collected from a transmission operator in the USA.
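The first two stages described in this abstract (learn a dynamical model, then flag samples its predictions consider unlikely) can be sketched with a deliberately simple stand-in. The example below assumes a scalar first-order autoregressive model with Gaussian residuals and a z-score threshold. The model choice, the function names `fit_ar1` and `detect_anomalies`, and the threshold value are all assumptions made for illustration and are not taken from the paper.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple


def fit_ar1(series: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares fit of x[t] = a * x[t-1] + b, plus the residual std."""
    x_prev, x_next = series[:-1], series[1:]
    mx, my = mean(x_prev), mean(x_next)
    var = sum((x - mx) ** 2 for x in x_prev)
    cov = sum((x - mx) * (y - my) for x, y in zip(x_prev, x_next))
    a = cov / var if var > 0 else 0.0
    b = my - a * mx
    residuals = [y - (a * x + b) for x, y in zip(x_prev, x_next)]
    return a, b, pstdev(residuals)


def detect_anomalies(
    train: Sequence[float], stream: Sequence[float], threshold: float = 4.0
) -> List[int]:
    """Return indices in `stream` whose one-step prediction error exceeds
    `threshold` residual standard deviations under the fitted model."""
    a, b, sigma = fit_ar1(train)
    sigma = max(sigma, 1e-9)  # guard against a perfectly predictable series
    flagged = []
    prev = train[-1]
    for t, x in enumerate(stream):
        z = abs(x - (a * prev + b)) / sigma
        if z > threshold:
            flagged.append(t)
        prev = x
    return flagged
```

Because each prediction conditions on the previous sample, the point immediately after a spike may also be flagged. A production detector would typically handle this, and would also refit the model as the stream evolves.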

Learning to Navigate in Indoor Environments: from Memorizing to Reasoning

Apr 21, 2019

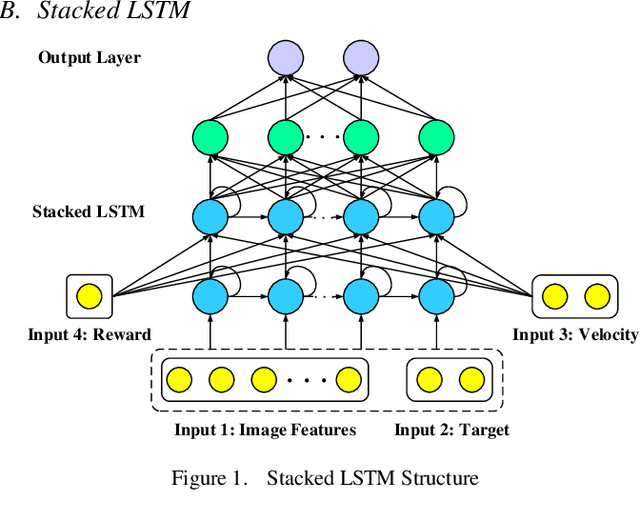

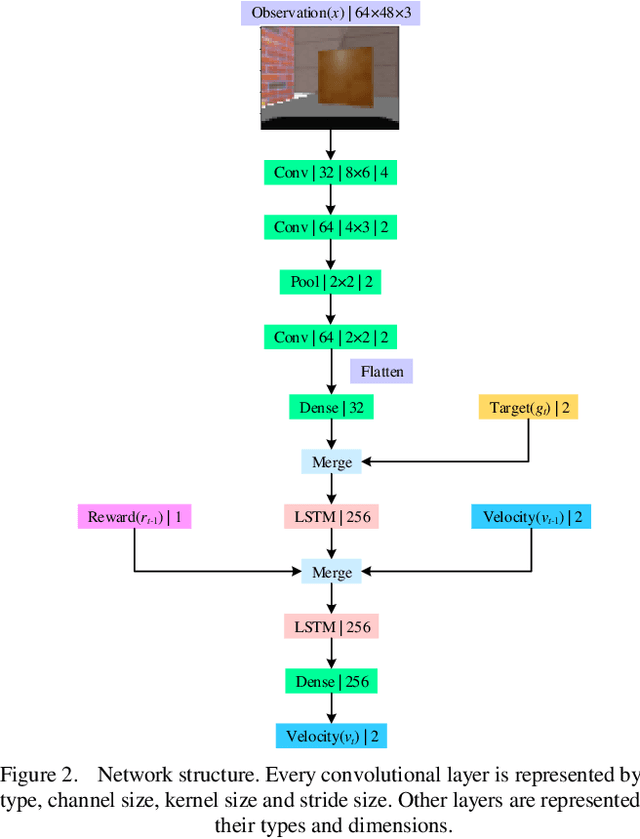

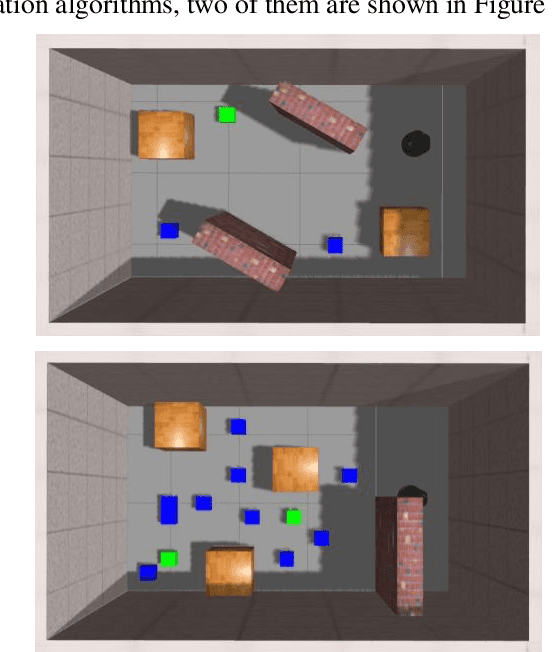

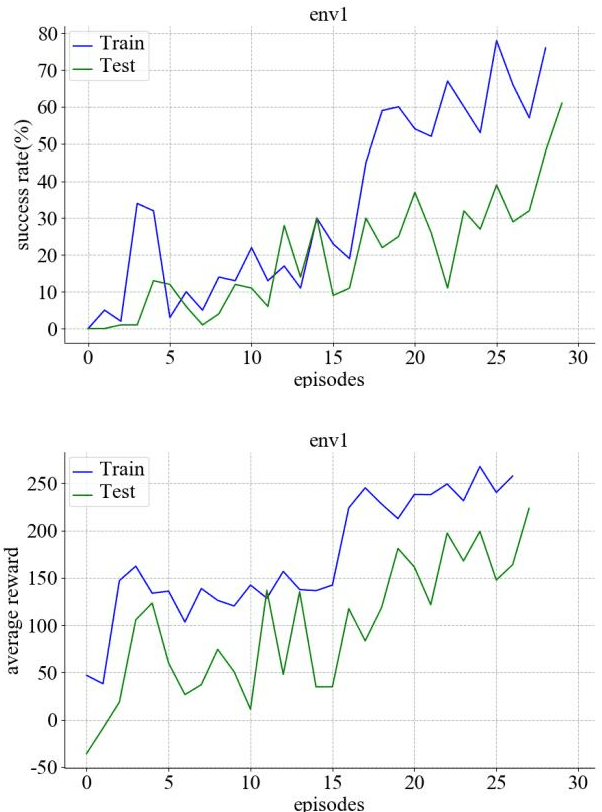

Abstract: Autonomous navigation is an essential smart-mobility capability for mobile robots. Traditional methods require a map of the environment to plan a collision-free path in the workspace. Deep reinforcement learning (DRL) is a promising technique for performing autonomous navigation without a map: a deep neural network learns the mapping from observations to reasonable actions through exploration. The planner should not only memorize the targets seen during training but, more importantly, reason its way to unseen goals. We propose a new DRL-based motion planner that can reach targets not seen during training in indoor environments, using only RGB images and odometry. The model uses a stacked Long Short-Term Memory (LSTM) structure. Experiments were conducted in both simulated and real environments. The source code is available at https://github.com/marooncn/navbot.
