Dominic Kafka

GOALS: Gradient-Only Approximations for Line Searches Towards Robust and Consistent Training of Deep Neural Networks

May 23, 2021

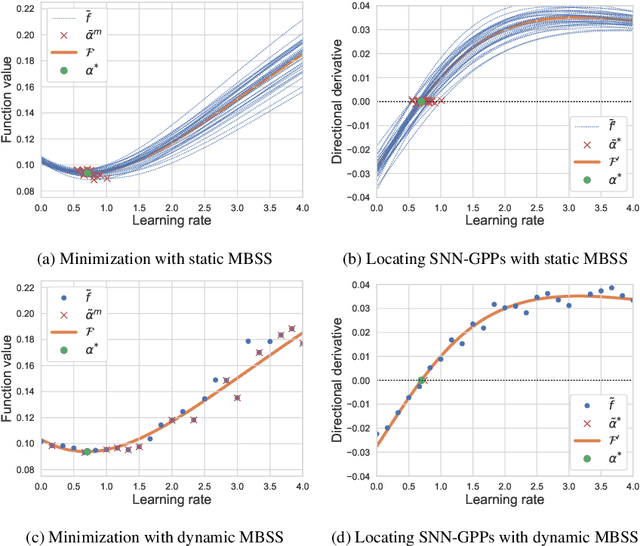

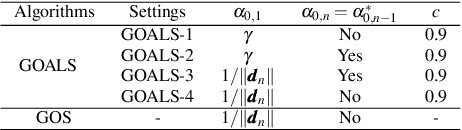

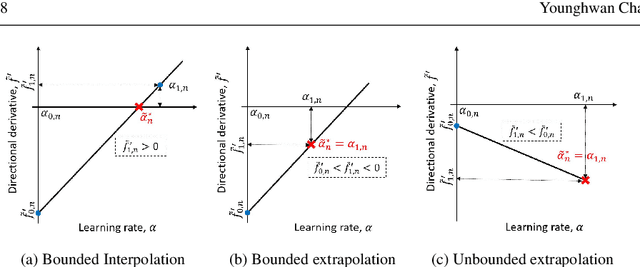

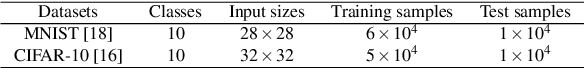

Abstract: Mini-batch sub-sampling (MBSS) is favored in deep neural network training to reduce the computational cost. Still, it introduces an inherent sampling error, making the selection of appropriate learning rates challenging. The sampling errors can manifest as either bias or variance in a line search. Dynamic MBSS re-samples a mini-batch at every function evaluation. Hence, dynamic MBSS results in point-wise discontinuous loss functions with smaller bias but larger variance than statically sampled loss functions. Dynamic MBSS has the advantage of larger data throughput during training, but requires the complexity introduced by the discontinuities to be resolved. This study extends the gradient-only surrogate (GOS), a line search method using quadratic approximation models built with only directional derivative information, to dynamic MBSS loss functions. We propose a gradient-only approximation line search (GOALS) with strong convergence characteristics and a defined optimality criterion. We investigate GOALS's performance by applying it to various optimizers, including SGD, RMSprop and Adam, on ResNet-18 and EfficientNetB0. We also compare GOALS against other existing learning rate methods. We quantify both the best performing and most robust algorithms. For the latter, we introduce a relative robust criterion that allows us to quantify the difference between an algorithm and the best performing algorithm for a given problem. The results show that training a model with the recommended learning rate for a class of search directions helps to reduce the model errors in multimodal cases.
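The abstract above mentions quadratic approximation models built from directional derivative information only. The following is a minimal sketch of that general idea, not the GOALS algorithm itself: it assumes two directional derivatives are available along a search direction, one at step 0 and one at a trial step, and it interpolates them linearly. The function name `quadratic_step_from_derivatives` and its arguments are hypothetical.

```python
def quadratic_step_from_derivatives(d0, d1, alpha1):
    """Step size at which a linearly interpolated directional derivative vanishes.

    d0     -- directional derivative at step 0 (assumed negative, i.e. descent)
    d1     -- directional derivative at the trial step alpha1
    alpha1 -- trial step size (> 0)

    A quadratic model has a linear derivative, so two derivative samples
    define it up to a constant offset. No function values are needed.
    Returns None when the model has no minimum ahead of the starting point.
    """
    curvature = (d1 - d0) / alpha1  # slope of the derivative model
    if d0 >= 0 or curvature <= 0:
        return None
    return -d0 / curvature  # root of d0 + curvature * alpha


# Illustration on f(alpha) = (alpha - 2)**2, whose derivative is 2 * (alpha - 2).
step = quadratic_step_from_derivatives(d0=-4.0, d1=-2.0, alpha1=1.0)
```

Because only derivatives enter the model, a jump in the function value between the two evaluations does not affect the estimated step.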

Gradient-only line searches to automatically determine learning rates for a variety of stochastic training algorithms

Jun 29, 2020

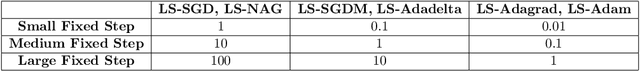

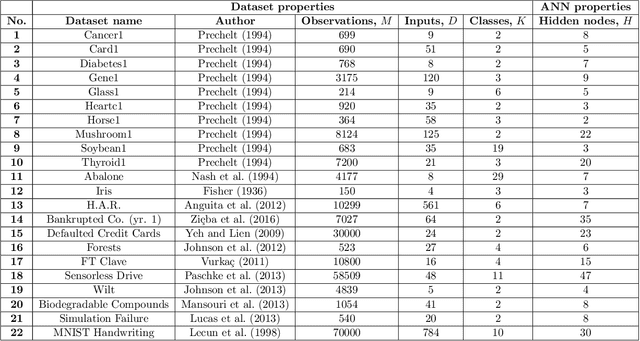

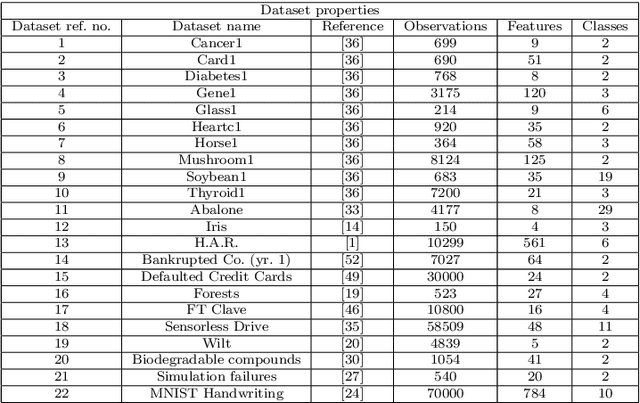

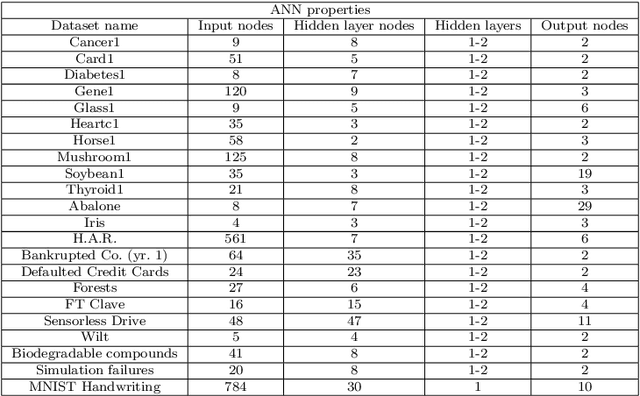

Abstract: Gradient-only and probabilistic line searches have recently reintroduced the ability to adaptively determine learning rates in dynamic mini-batch sub-sampled neural network training. However, stochastic line searches are still in their infancy and thus call for ongoing investigation. We study the application of the Gradient-Only Line Search that is Inexact (GOLS-I) to automatically determine the learning rate schedule for a selection of popular neural network training algorithms, including NAG, Adagrad, Adadelta, Adam and LBFGS, with numerous shallow, deep and convolutional neural network architectures trained on different datasets with various loss functions. We find that GOLS-I's learning rate schedules are competitive with manually tuned learning rates over seven optimization algorithms, three types of neural network architecture, 23 datasets and two loss functions. We demonstrate that algorithms with dominant momentum characteristics are not well suited to be used with GOLS-I. However, we find GOLS-I to be effective in automatically determining learning rate schedules over 15 orders of magnitude for most popular neural network training algorithms, effectively removing the need to tune the sensitive hyperparameters of learning rate schedules in neural network training.

Resolving learning rates adaptively by locating Stochastic Non-Negative Associated Gradient Projection Points using line searches

Jan 15, 2020

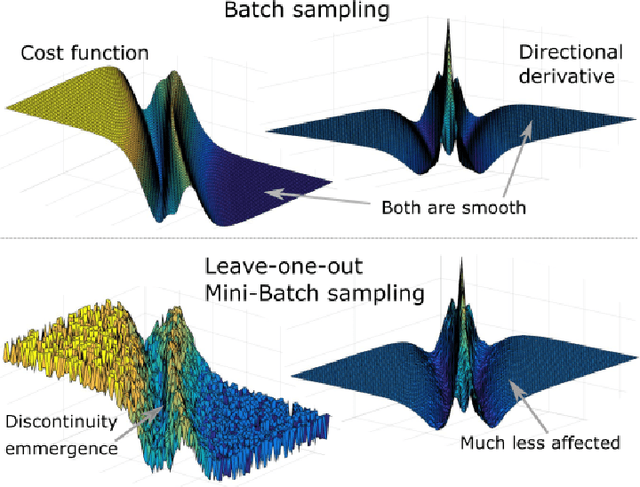

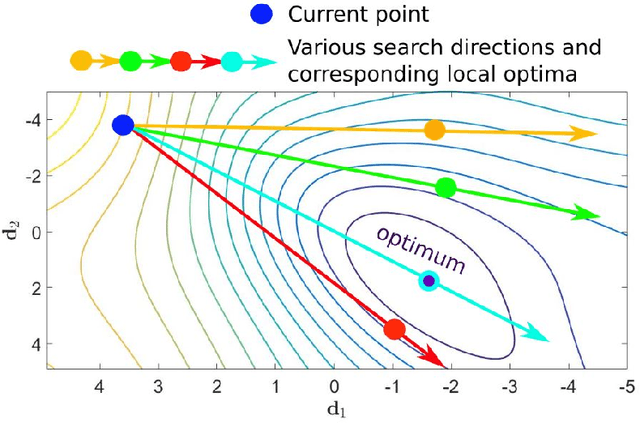

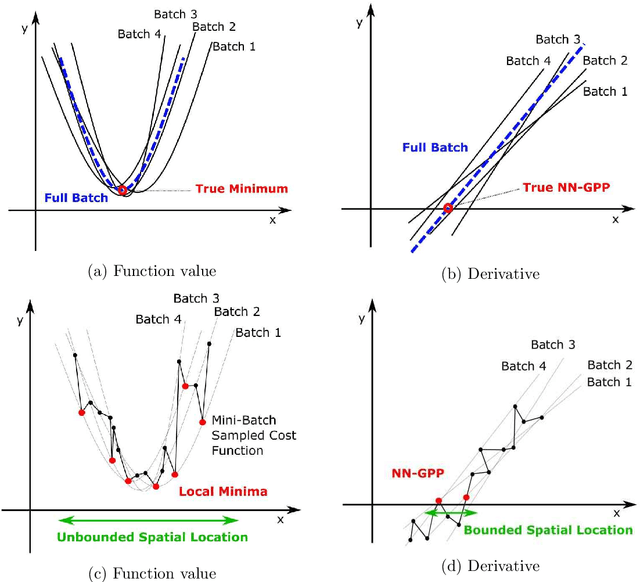

Abstract: Learning rates in stochastic neural network training are currently determined prior to training, using expensive manual or automated iterative tuning. This study proposes gradient-only line searches to resolve the learning rate for neural network training algorithms. Stochastic sub-sampling during training decreases computational cost and allows the optimization algorithms to progress over local minima. However, it also results in discontinuous cost functions. Minimization line searches are not effective in this context, as they rely on a vanishing derivative (first order optimality condition), which often does not exist in a discontinuous cost function; they therefore converge to discontinuities as opposed to minima from the data trends. Instead, we base candidate solutions along a search direction purely on gradient information, in particular on a directional derivative sign change from negative to positive (a Non-negative Associative Gradient Projection Point (NN-GPP)). A sign change from negative to positive always indicates a minimum, thus NN-GPPs contain second order information. Conversely, a vanishing gradient is purely a first order condition, which may indicate a minimum, maximum or saddle point. This insight allows the learning rate of an algorithm to be reliably resolved as the step size along a search direction, increasing convergence performance and eliminating an otherwise expensive hyperparameter.
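The contrast drawn above between function minimizers and negative-to-positive sign changes of the directional derivative can be illustrated with a small deterministic sketch. The toy function, the jump location and the helper names below are assumptions chosen for illustration; they do not come from the paper. Note that in the stochastic setting a sign change is typically located within a region rather than at an exact point, which this toy example does not capture.

```python
def f(alpha):
    """Toy 1-D loss along a search direction with an artificial upward jump."""
    jump = 0.5 if alpha >= 0.4 else 0.0
    return (alpha - 1.0) ** 2 + jump


def directional_derivative(alpha):
    """Derivative of the smooth part of f; the jump does not contribute."""
    return 2.0 * (alpha - 1.0)


def locate_sign_change(dd, lo, hi, tol=1e-8):
    """Bisect for a negative-to-positive sign change of dd on [lo, hi]."""
    if not (dd(lo) < 0 < dd(hi)):
        raise ValueError("bracket must go from negative to positive derivative")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if dd(mid) < 0:
            lo = mid  # still descending, sign change lies to the right
        else:
            hi = mid  # derivative non-negative, sign change lies to the left
    return 0.5 * (lo + hi)


# Minimizing function values on a grid is drawn to the discontinuity ...
grid = [i * 0.01 for i in range(301)]
grid_minimizer = min(grid, key=f)

# ... whereas the derivative sign change sits at the minimum of the trend.
sign_change = locate_sign_change(directional_derivative, 0.0, 3.0)
```

Here the grid minimizer lands just before the jump at 0.4, while the sign change is found near 1.0, the minimum of the underlying quadratic trend.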

Gradient-only line searches: An Alternative to Probabilistic Line Searches

Mar 22, 2019

Abstract: Step sizes in neural network training are largely determined using predetermined rules such as fixed learning rates and learning rate schedules, which require user input to determine their functional form and associated hyperparameters. Global optimization strategies to resolve these hyperparameters are computationally expensive. Line searches are capable of adaptively resolving learning rate schedules. However, due to discontinuities induced by mini-batch sampling, they have largely fallen out of favor. Notwithstanding, probabilistic line searches have recently demonstrated viability in resolving learning rates for stochastic loss functions. This method creates surrogates with confidence intervals, where restrictions are placed on the rate at which the search domain can grow along a search direction. This paper introduces an alternative paradigm, Gradient-Only Line Searches that are inexact (GOLS-I), to automatically resolve learning rates in stochastic cost functions over a range of 15 orders of magnitude without the use of surrogates. We show that GOLS-I is a competitive strategy to reliably resolve step sizes, adding high value in terms of performance, while being easy to implement. Considering mini-batch sampling, we open the discussion on how to split the effort to resolve quality search directions from quality step size estimates along a search direction.
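To make the notion of an inexact, surrogate-free gradient-only search more concrete, here is a minimal sketch of one plausible scheme: grow the step while the directional derivative is negative, or shrink it after an overshoot, and stop at the first sign flip. This is an assumption-laden illustration and not the published GOLS-I procedure; the growth factor, the step bounds (picked only so that they span 15 orders of magnitude) and the name `inexact_sign_search` are all hypothetical.

```python
def inexact_sign_search(dd, alpha, factor=2.0, alpha_min=1e-8, alpha_max=1e7):
    """Return a step size adjacent to a sign change of the directional derivative.

    dd        -- callable returning the directional derivative at a step size
    alpha     -- initial step size guess (e.g. the previous iteration's step)
    factor    -- multiplicative growth/shrink factor (hypothetical default)
    alpha_min, alpha_max -- step bounds (hypothetical defaults)
    """
    alpha = min(max(alpha, alpha_min), alpha_max)
    if dd(alpha) < 0:
        # Still descending: grow until the derivative becomes non-negative.
        while alpha * factor <= alpha_max:
            alpha *= factor
            if dd(alpha) >= 0:
                break
    else:
        # Overshot: shrink until the derivative becomes negative again.
        while alpha / factor >= alpha_min:
            alpha /= factor
            if dd(alpha) < 0:
                break
    return alpha


# Illustration with a derivative whose sign change lies at alpha = 1.
dd = lambda a: 2.0 * (a - 1.0)
grown = inexact_sign_search(dd, alpha=0.1)
shrunk = inexact_sign_search(dd, alpha=10.0)
```

The search only ever compares the sign of the derivative, so it never needs the (discontinuous) function values and it returns a step within one factor of the sign change rather than an exact root.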

Visual interpretation of the robustness of Non-Negative Associative Gradient Projection Points over function minimizers in mini-batch sampled loss functions

Mar 20, 2019

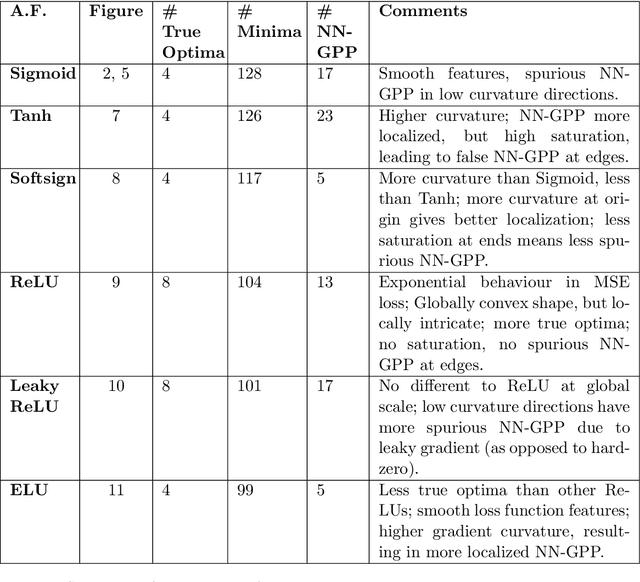

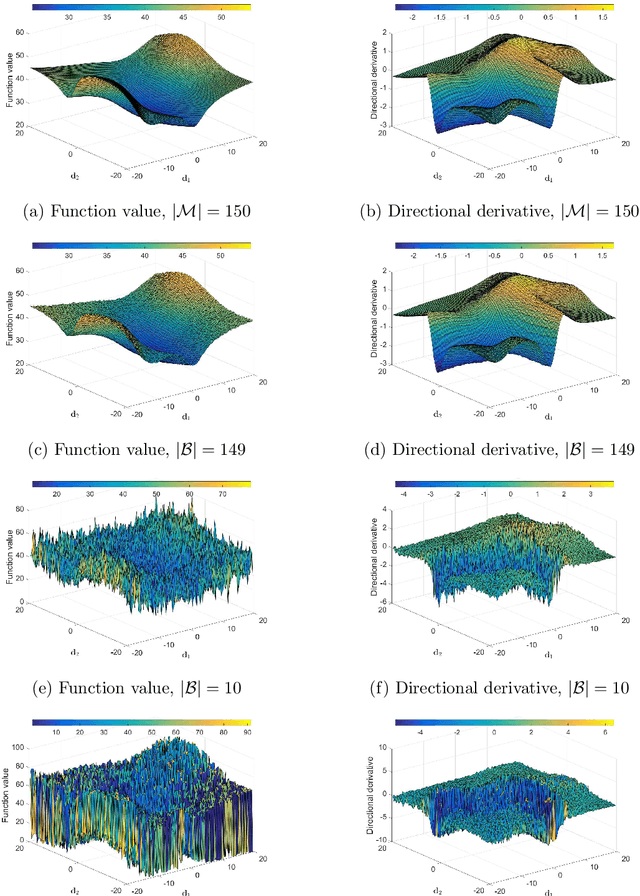

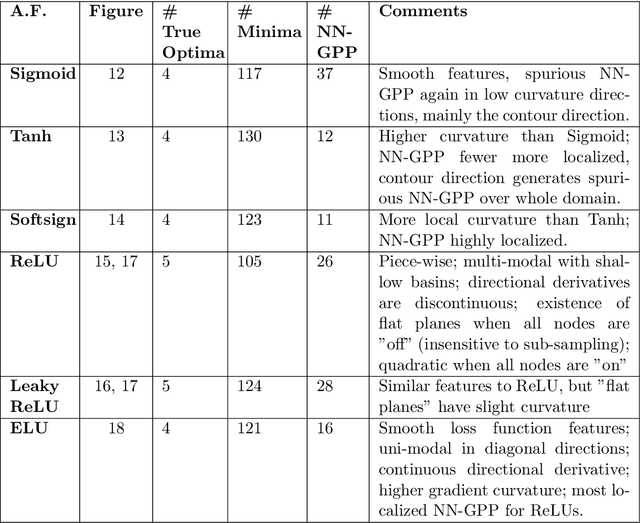

Abstract: Mini-batch sub-sampling is likely here to stay, due to growing data demands, memory-limited computational resources such as graphical processing units (GPUs), and the dynamics of on-line learning. Sampling a new mini-batch at every loss evaluation brings a number of benefits, but also one significant drawback: the loss function becomes discontinuous. These discontinuities are generally not problematic when using fixed learning rates or learning rate schedules typical of subgradient methods. However, they hinder attempts to directly minimize the loss function by solving for critical points, since function minimizers find spurious minima induced by discontinuities, while critical points may not even exist. Therefore, finding function minimizers and critical points in stochastic optimization is ineffective. As a result, attention has been given to reducing the effect of these discontinuities by means such as gradient averaging or adaptive and dynamic sampling. This paper offers an alternative paradigm: recasting the optimization problem to instead find Non-Negative Associated Gradient Projection Points (NN-GPPs). In this paper, we demonstrate that the NN-GPP interpretation of gradient information is more robust than critical points or minimizers, being less susceptible to sub-sampling induced variance and eliminating spurious function minimizers. We conduct a visual investigation, where we compare function value and gradient information for a variety of popular activation functions as applied to a simple neural network training problem. Based on the improved description offered by NN-GPPs over minimizers to identify true optima, in particular when using smooth activation functions with high curvature characteristics, we postulate that locating NN-GPPs can contribute significantly to automating neural network training.
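The claim that re-sampling a mini-batch at every loss evaluation makes the loss discontinuous can be seen in a small sketch. The one-parameter least-squares problem, the synthetic data, the batch size and the helper names below are assumptions made for illustration only.

```python
import random


def batch_loss(w, batch):
    """Mean squared error of a single scalar parameter w on a batch."""
    return sum((w - y) ** 2 for y in batch) / len(batch)


rng = random.Random(0)  # seeded so the sketch is reproducible
data = [rng.gauss(0.0, 1.0) for _ in range(1000)]

# Static sampling: one mini-batch is drawn once and reused.
static_batch = rng.sample(data, 10)


def static_loss(w):
    return batch_loss(w, static_batch)


def dynamic_loss(w):
    # Dynamic sampling: a fresh mini-batch for every single evaluation.
    return batch_loss(w, rng.sample(data, 10))


# Repeated evaluations at the same parameter value.
static_values = [static_loss(0.5) for _ in range(5)]
dynamic_values = [dynamic_loss(0.5) for _ in range(5)]
```

The static loss returns the same value each time at a fixed `w`, so it is a smooth function of `w`. The dynamic loss returns a different value on each call even at the same `w`, which is what appears as point-wise discontinuities when it is traced along a search direction.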
