Daniel Nicolas Wilke

Gradient-only line searches to automatically determine learning rates for a variety of stochastic training algorithms

Jun 29, 2020

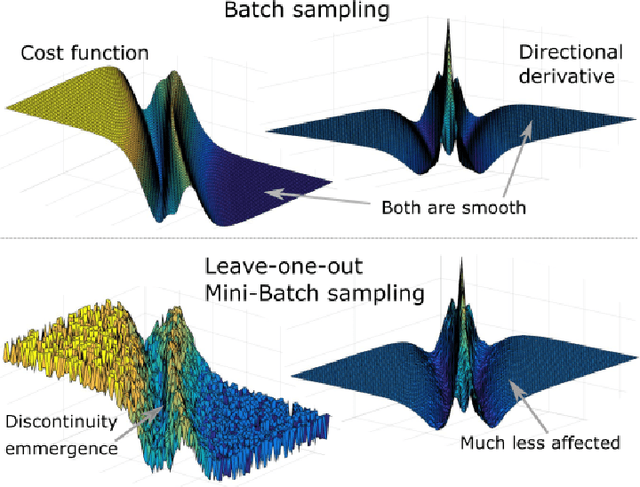

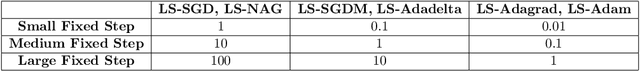

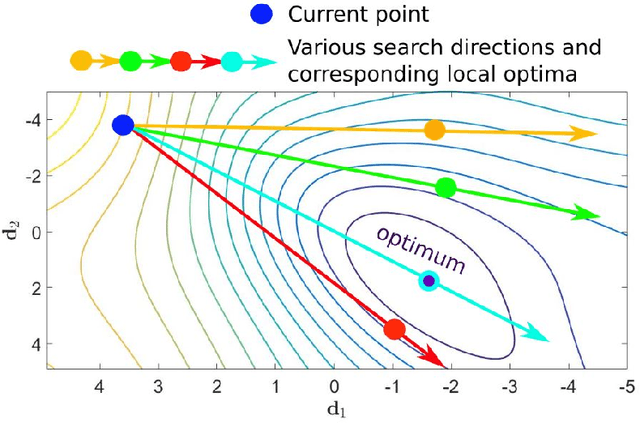

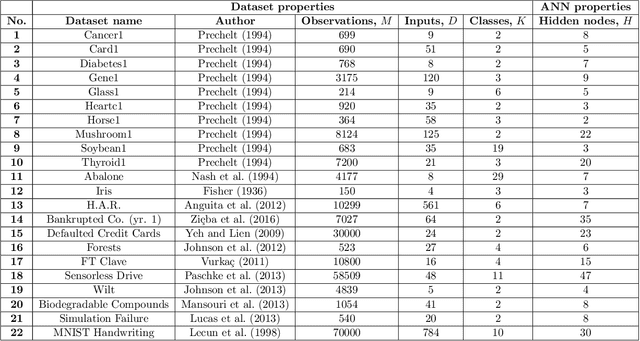

Abstract: Gradient-only and probabilistic line searches have recently reintroduced the ability to adaptively determine learning rates in dynamic mini-batch sub-sampled neural network training. However, stochastic line searches are still in their infancy and thus call for ongoing investigation. We study the application of the Gradient-Only Line Search that is Inexact (GOLS-I) to automatically determine the learning rate schedule for a selection of popular neural network training algorithms, including NAG, Adagrad, Adadelta, Adam and LBFGS, with numerous shallow, deep and convolutional neural network architectures trained on different datasets with various loss functions. We find that GOLS-I's learning rate schedules are competitive with manually tuned learning rates across seven optimization algorithms, three types of neural network architecture, 23 datasets and two loss functions. We demonstrate that algorithms with dominant momentum characteristics are not well suited to use with GOLS-I. However, we find GOLS-I to be effective in automatically determining learning rate schedules over 15 orders of magnitude for most popular neural network training algorithms, effectively removing the need to tune the sensitive hyperparameters of learning rate schedules in neural network training.
