Doğa Yılmaz

Learned Single-Pass Multitasking Perceptual Graphics for Immersive Displays

Jul 31, 2024

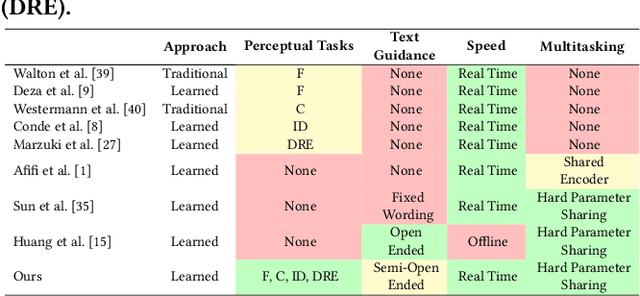

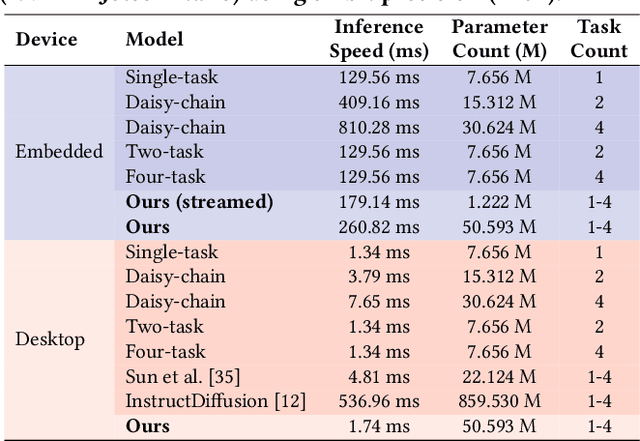

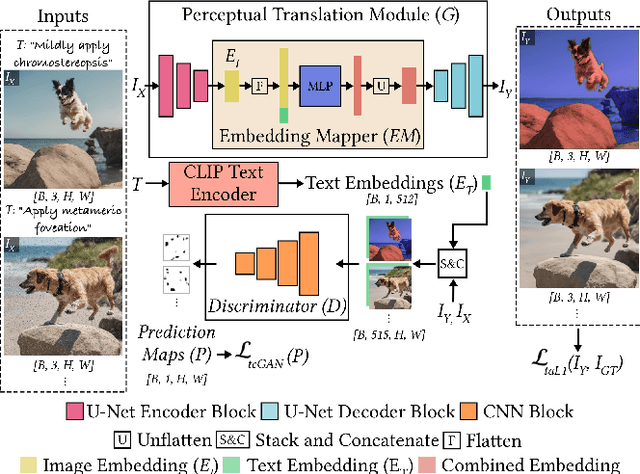

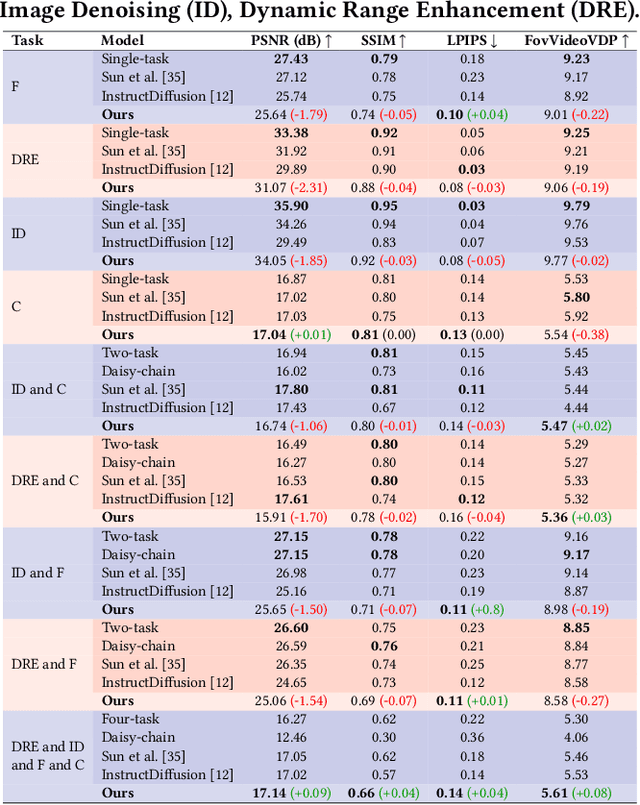

Abstract: Immersive displays are advancing rapidly in terms of delivering perceptually realistic images by utilizing emerging perceptual graphics methods such as foveated rendering. In practice, multiple such methods need to be performed sequentially for enhanced perceived quality. However, the limited power and computational resources of the devices that drive immersive displays make it challenging to deploy multiple perceptual models simultaneously. We address this challenge by proposing a computationally lightweight, text-guided, learned multitasking perceptual graphics model. Given RGB input images, our model outputs perceptually enhanced images by performing one or more perceptual tasks described by the provided text prompts. Our model supports a variety of perceptual tasks, including foveated rendering, dynamic range enhancement, image denoising, and chromostereopsis, through multitask learning. Uniquely, a single inference step of our model supports different permutations of these perceptual tasks at different prompted rates (e.g., mildly, lightly), eliminating the need for daisy-chaining multiple models to get the desired perceptual effect. We train our model on our new dataset of source and perceptually enhanced images, and their corresponding text prompts. We evaluate our model's performance on embedded platforms and validate the perceptual quality of our model through a user study. Our method achieves quality on par with state-of-the-art task-specific methods using a single inference step, while offering faster inference speeds and the flexibility to blend effects at various intensities.

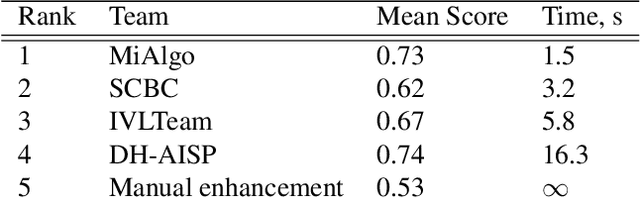

NTIRE 2024 Challenge on Night Photography Rendering

Jun 18, 2024

Abstract: This paper presents a review of the NTIRE 2024 challenge on night photography rendering. The goal of the challenge was to find solutions that process raw camera images taken in nighttime conditions and thereby produce photo-quality output images in the standard RGB (sRGB) space. Unlike the previous year's competition, the challenge images were collected with a mobile phone, and the speed of the algorithms was measured alongside the quality of their output. To evaluate the results, a sufficient number of viewers were asked to assess the visual quality of the proposed solutions, considering the subjective nature of the task. There were 2 nominations: quality and efficiency. The top 5 solutions in terms of output quality were sorted by evaluation time (see Fig. 1). The top-ranking participants' solutions effectively represent the state of the art in nighttime photography rendering. More results can be found at https://nightimaging.org.

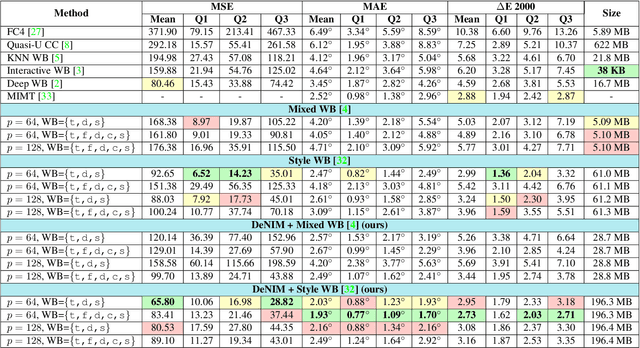

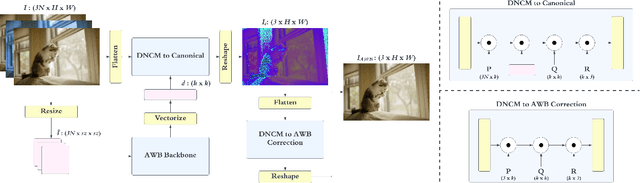

Deterministic Neural Illumination Mapping for Efficient Auto-White Balance Correction

Aug 07, 2023

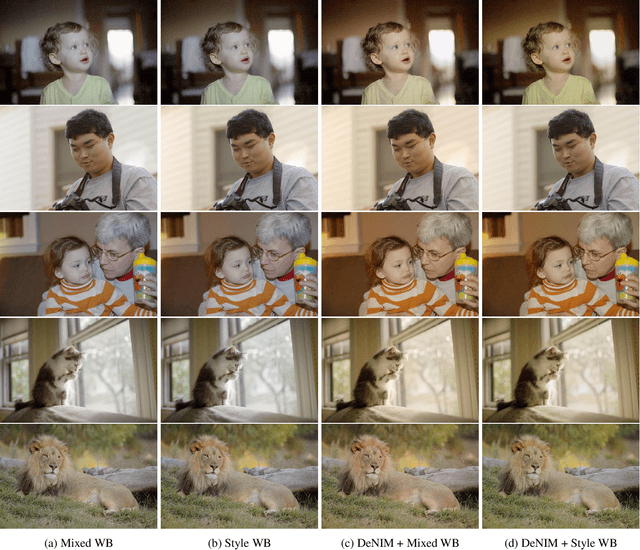

Abstract: Auto-white balance (AWB) correction is a critical operation in image signal processors for accurate and consistent color correction across various illumination scenarios. This paper presents a novel and efficient AWB correction method that runs at least 35 times faster than current state-of-the-art methods on high-resolution images while achieving equivalent or superior performance. Inspired by deterministic color style transfer, our approach introduces deterministic illumination color mapping, leveraging learnable projection matrices for both the canonical illumination form and the AWB-corrected output. It involves feeding high-resolution images and their corresponding latent representations into a mapping module to derive a canonical form, followed by another mapping module that maps the pixel values to those of the corrected version. This strategy is designed to be resolution-agnostic and also enables seamless integration of any pre-trained AWB network as the backbone. Experimental results confirm the effectiveness of our approach, revealing significant performance improvements and reduced time complexity compared to state-of-the-art methods. Our method provides an efficient deep learning-based AWB correction solution, promising real-time, high-quality color correction for digital imaging applications. Source code is available at https://github.com/birdortyedi/DeNIM/
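The two-stage mapping described in this abstract can be illustrated with a minimal NumPy sketch. It is only one possible reading of the abstract, not the authors' implementation: the function name `apply_color_mapping`, the matrix names `P`, `T`, `Q`, and their shapes are all assumptions made for illustration, and the network that would predict these matrices is left out entirely. The point of the sketch is that a per-pixel matrix mapping does not depend on image size, which is one way a method can be resolution-agnostic.

```python
import numpy as np

def apply_color_mapping(image, P, T, Q):
    """Map every pixel through two matrix stages (hypothetical sketch).

    image: (H, W, 3) array of RGB values.
    P:     (3, k) projection into a k-dimensional space (assumed learnable).
    T:     (k, k) image-specific transform (assumed to come from a backbone).
    Q:     (k, 3) projection back to corrected RGB (assumed learnable).
    """
    image = np.asarray(image, dtype=np.float64)
    h, w, c = image.shape
    pixels = image.reshape(-1, c)      # flatten to (H*W, 3)
    canonical = pixels @ P @ T         # stage 1: assumed canonical form
    corrected = canonical @ Q          # stage 2: map to corrected colors
    return corrected.reshape(h, w, -1)

# The same matrices apply unchanged to any resolution.
k = 8
rng = np.random.default_rng(0)
P, T, Q = rng.normal(size=(3, k)), rng.normal(size=(k, k)), rng.normal(size=(k, 3))
small = apply_color_mapping(rng.random((4, 4, 3)), P, T, Q)
large = apply_color_mapping(rng.random((64, 48, 3)), P, T, Q)
```

Because the cost of the matrix products grows only with the number of pixels, the expensive network in such a design could run on a downsampled copy while the mapping is applied at full resolution. Whether the released code is organized this way should be checked against the linked repository.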

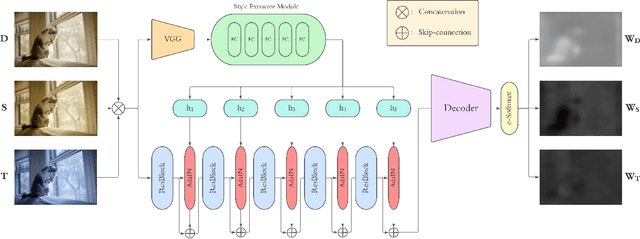

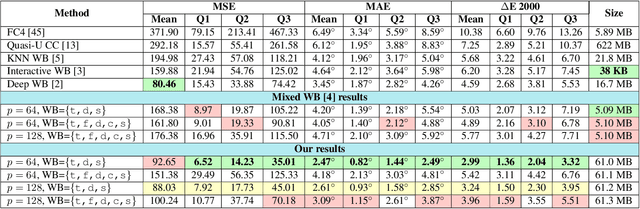

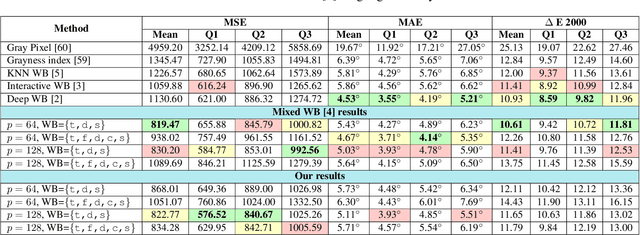

Modeling the Lighting in Scenes as Style for Auto White-Balance Correction

Oct 17, 2022

Abstract: Style may refer to different concepts (e.g., painting style, hairstyle, texture, color, filter) depending on how the feature space is formed. In this work, we propose the novel idea of interpreting the lighting in single- and multi-illuminant scenes as the concept of style. To verify this idea, we introduce an enhanced auto white-balance (AWB) method that models the lighting in single- and mixed-illuminant scenes as the style factor. Our AWB method does not require any illumination estimation step; instead, it contains a network that learns to generate the weighting maps of the images with different WB settings. The proposed network utilizes the style information extracted from the scene by a multi-head style extraction module. AWB correction is completed after blending these weighting maps and the scene. Experiments on single- and mixed-illuminant datasets demonstrate that our proposed method achieves promising correction results compared to recent works. This shows that the lighting in scenes with multiple illuminations can be modeled by the concept of style. Source code and trained models are available at https://github.com/birdortyedi/lighting-as-style-awb-correction.
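The blending step mentioned in this abstract can be sketched as a per-pixel weighted sum over renderings of the same scene under different WB settings. This is a minimal illustration under stated assumptions, not the authors' code: the function name `blend_wb_renderings` is hypothetical, and normalizing the weighting maps with a softmax across renderings is an assumption (the abstract does not say how the maps are normalized). In the actual method the weighting maps would come from the trained network.

```python
import numpy as np

def blend_wb_renderings(renderings, weight_logits):
    """Blend K white-balance renderings with per-pixel weighting maps.

    renderings:    (K, H, W, 3) array, the scene under K WB settings.
    weight_logits: (K, H, W) array of unnormalized weighting maps
                   (assumed here to be produced by a network).
    Returns an (H, W, 3) blended image.
    """
    renderings = np.asarray(renderings, dtype=np.float64)
    logits = np.asarray(weight_logits, dtype=np.float64)
    if renderings.ndim != 4 or logits.shape != renderings.shape[:3]:
        raise ValueError("expected (K, H, W, 3) renderings and (K, H, W) maps")
    # Softmax across the K renderings so the weights at each pixel sum to 1
    # (an assumption made for this sketch).
    logits = logits - logits.max(axis=0, keepdims=True)
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)
    # Weighted sum over the K renderings, broadcast across color channels.
    return (weights[..., None] * renderings).sum(axis=0)
```

With equal logits at every pixel the result is the plain average of the renderings; spatially varying maps let different regions of a mixed-illuminant scene favor different WB settings.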
