Dingqi Ye

SFNet: Fusion of Spatial and Frequency-Domain Features for Remote Sensing Image Forgery Detection

Jun 25, 2025

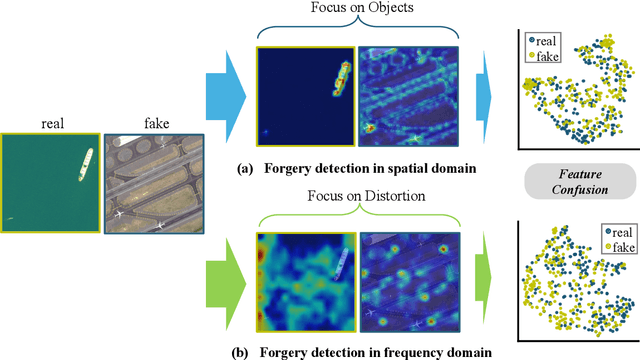

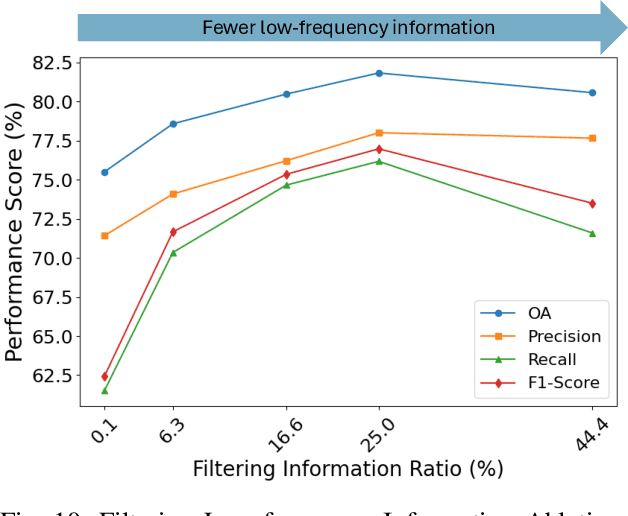

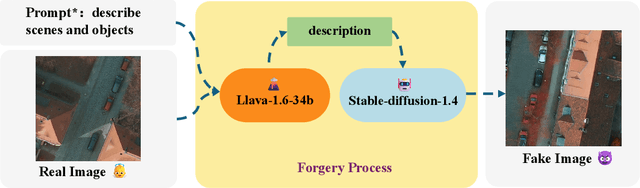

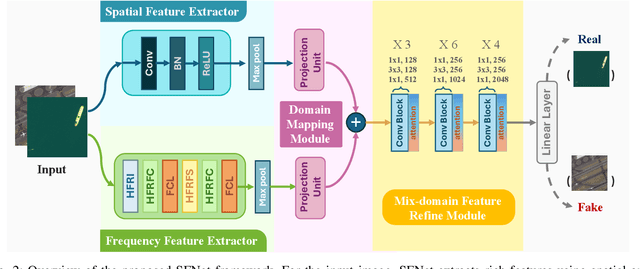

Abstract: The rapid advancement of generative artificial intelligence is producing fake remote sensing imagery (RSI) that is increasingly difficult to detect, potentially leading to erroneous intelligence, fake news, and even conspiracy theories. Existing forgery detection methods typically rely on a single type of visual feature to capture predefined artifacts, such as spatial-domain cues to detect forged objects like roads or buildings in RSI, or frequency-domain features to identify artifacts from up-sampling operations in generative adversarial networks (GANs). However, the nature of artifacts can differ significantly depending on geographic terrain, land cover types, or specific features within the RSI. Moreover, these complex artifacts evolve as generative models become more sophisticated. In short, over-reliance on a single visual cue makes existing forgery detectors struggle to generalize across diverse remote sensing data. This paper proposes a novel forgery detection framework called SFNet, designed to identify fake images in diverse remote sensing data by leveraging spatial and frequency-domain features. Specifically, to obtain rich and comprehensive visual information, SFNet employs two independent feature extractors to capture spatial and frequency-domain features from input RSIs. To fully utilize the complementary domain features, the domain feature mapping module and the hybrid domain feature refinement module (CBAM attention) of SFNet are designed to successively align and fuse the multi-domain features while suppressing redundant information. Experiments on three datasets show that SFNet achieves an accuracy improvement of 4%-15.18% over state-of-the-art RS forgery detection methods and exhibits robust generalization capabilities. The code is available at https://github.com/GeoX-Lab/RSTI/tree/main/SFNet.
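As a rough illustration of the two-view idea described in this abstract, the following minimal sketch computes a frequency-domain view of an image next to its spatial view. It is not the SFNet implementation: the function names `log_amplitude_spectrum` and `two_view_input` are hypothetical, the log-amplitude FFT spectrum is only one common choice of frequency representation, and the learned feature extractors, the domain feature mapping module, and the CBAM-based refinement are not shown.

```python
import numpy as np


def log_amplitude_spectrum(image: np.ndarray) -> np.ndarray:
    """Centered log-amplitude spectrum of a 2-D grayscale image.

    Assumption: a plain FFT magnitude stands in for whatever frequency
    representation a real detector would learn from.
    """
    spectrum = np.fft.fftshift(np.fft.fft2(image))  # move the DC term to the center
    return np.log1p(np.abs(spectrum))               # compress the dynamic range


def two_view_input(image: np.ndarray) -> np.ndarray:
    """Stack the spatial view and the frequency view as two channels.

    Hypothetical helper: a two-branch model would feed each channel to
    its own feature extractor before fusing the results.
    """
    return np.stack([image, log_amplitude_spectrum(image)], axis=0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tile = rng.random((64, 64))       # placeholder for a grayscale image tile
    views = two_view_input(tile)
    print(views.shape)                # (2, 64, 64)
```

Up-sampling artifacts of the kind mentioned in the abstract tend to show up as regular peaks in such a spectrum, which is why a frequency view can complement spatial cues.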

OBSUM: An object-based spatial unmixing model for spatiotemporal fusion of remote sensing images

Oct 14, 2023

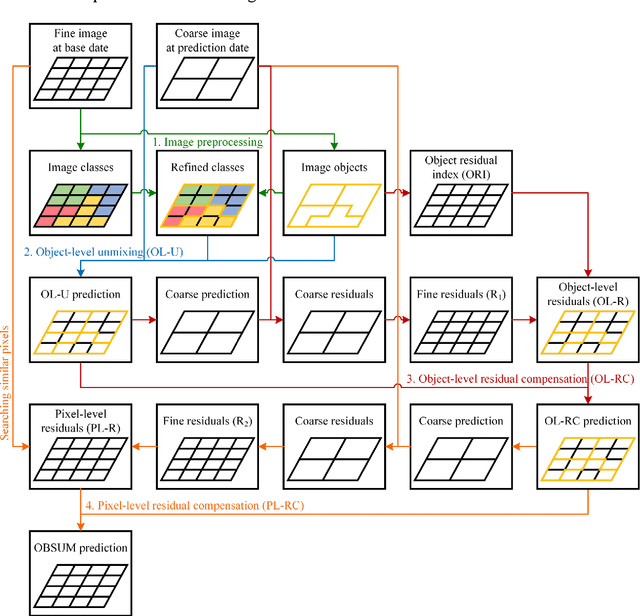

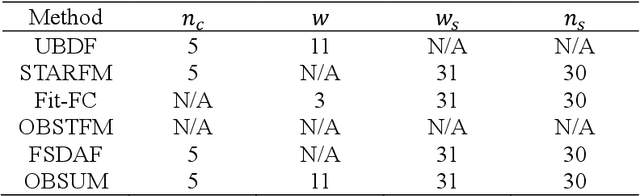

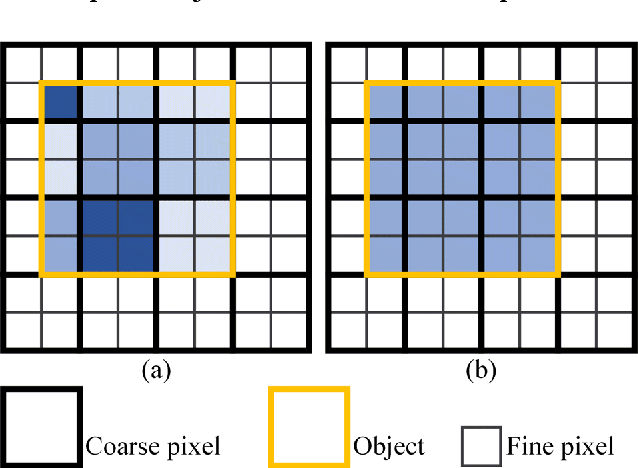

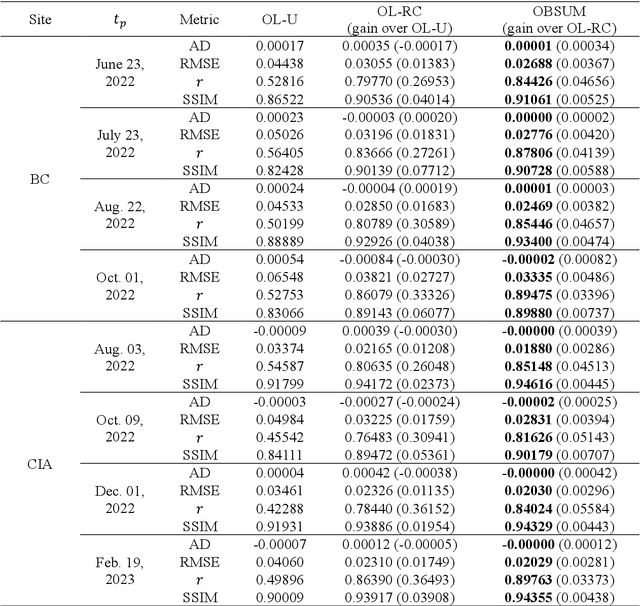

Abstract: Spatiotemporal fusion aims to improve both the spatial and temporal resolution of remote sensing images, thus facilitating time-series analysis at a fine spatial scale. However, several important issues limit the application of current spatiotemporal fusion methods. First, most spatiotemporal fusion methods are based on pixel-level computation, which neglects the valuable object-level information of the land surface. Moreover, many existing methods cannot accurately retrieve strong temporal changes between the available high-resolution image at the base date and the predicted one. This study proposes an Object-Based Spatial Unmixing Model (OBSUM), which incorporates object-based image analysis and spatial unmixing, to overcome these two problems. OBSUM consists of one preprocessing step and three fusion steps, i.e., object-level unmixing, object-level residual compensation, and pixel-level residual compensation. OBSUM can be applied using only one fine image at the base date and one coarse image at the prediction date, without the need for a coarse image at the base date. The performance of OBSUM was compared with five representative spatiotemporal fusion methods. The experimental results demonstrated that OBSUM outperformed the other methods in terms of both accuracy indices and visual effects over time series. Furthermore, OBSUM also achieved satisfactory results in two typical remote sensing applications. Therefore, it has great potential to generate accurate and high-resolution time-series observations for supporting various remote sensing applications.
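The spatial unmixing idea that this abstract builds on can be sketched as a small least-squares problem: each coarse pixel is modeled as the area-weighted mix of the classes (or objects) it covers. The sketch below is a generic, global version of that idea under stated assumptions, not the OBSUM algorithm: the function name `spatial_unmixing`, the integer label map, and the single global solve are illustrative choices, and the object-level and pixel-level residual compensation steps are not shown.

```python
import numpy as np


def spatial_unmixing(labels: np.ndarray, coarse: np.ndarray, scale: int) -> np.ndarray:
    """Estimate a fine-resolution image from a coarse image and a fine label map.

    labels: integer class/object ids at fine resolution, shape (H, W)
    coarse: coarse-resolution values, shape (H // scale, W // scale)
    scale:  number of fine pixels per coarse pixel along each axis
    """
    n_classes = int(labels.max()) + 1
    rows, cols = coarse.shape

    # Fraction of each class inside every coarse pixel.
    fractions = np.zeros((rows * cols, n_classes))
    for r in range(rows):
        for c in range(cols):
            block = labels[r * scale:(r + 1) * scale, c * scale:(c + 1) * scale]
            counts = np.bincount(block.ravel(), minlength=n_classes)
            fractions[r * cols + c] = counts / block.size

    # Solve fractions @ class_values ~= coarse in the least-squares sense.
    class_values, *_ = np.linalg.lstsq(fractions, coarse.ravel(), rcond=None)

    # Assign each fine pixel the value estimated for its class.
    return class_values[labels]


if __name__ == "__main__":
    labels = np.array([[0, 0, 0, 1],
                       [0, 0, 1, 1],
                       [0, 1, 1, 1],
                       [1, 1, 1, 1]])
    true_fine = np.array([0.1, 0.5])[labels]
    coarse = true_fine.reshape(2, 2, 2, 2).mean(axis=(1, 3))  # 2x2 block means
    print(spatial_unmixing(labels, coarse, scale=2))
```

In this toy case the coarse image is an exact block average of a piecewise-constant fine image, so the solve recovers the fine values; real data would leave residuals, which is the part the residual compensation steps named in the abstract are meant to handle.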

Overcome Anterograde Forgetting with Cycled Memory Networks

Dec 04, 2021

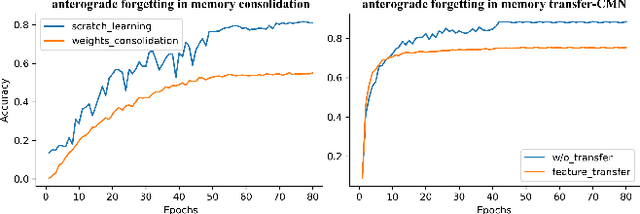

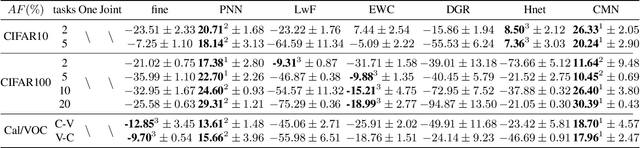

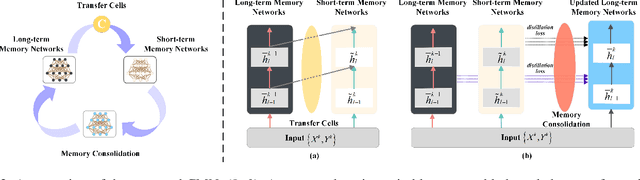

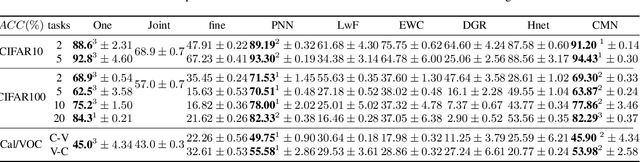

Abstract: Learning from a sequence of tasks over a lifetime is essential for an agent on the path toward artificial general intelligence. This requires the agent to continuously learn and memorize new knowledge without interference. This paper first demonstrates a fundamental issue of lifelong learning using neural networks, named anterograde forgetting, i.e., preserving and transferring memory may inhibit the learning of new knowledge. This is attributed to two facts: the learning capacity of a neural network is reduced as it keeps memorizing historical knowledge, and conceptual confusion may occur as it transfers irrelevant old knowledge to the current task. This work proposes a general framework named Cycled Memory Networks (CMN) to address anterograde forgetting in neural networks for lifelong learning. The CMN consists of two individual memory networks that store short-term and long-term memories to avoid capacity shrinkage. A transfer cell is designed to connect these two memory networks, enabling knowledge transfer from the long-term memory network to the short-term memory network to mitigate conceptual confusion, and a memory consolidation mechanism is developed to integrate short-term knowledge into the long-term memory network for knowledge accumulation. Experimental results demonstrate that the CMN can effectively address anterograde forgetting on several task-related, task-conflict, class-incremental, and cross-domain benchmarks.
