Dieter Kranzlmüller

4D-based Robot Navigation Using Relativistic Image Processing

Oct 29, 2024

Abstract: Machine perception is an important prerequisite for safe interaction and locomotion in dynamic environments. This requires not only the timely perception of surrounding geometries and distances but also the ability to react to changing situations through predefined, learned but also reusable skill endings of a robot so that physical damage or bodily harm can be avoided. In this context, 4D perception offers the possibility of predicting one's own position and changes in the environment over time. In this paper, we present a 4D-based approach to robot navigation using relativistic image processing. Relativistic image processing handles the temporal-related sensor information in a tensor model within a constructive 4D space. 4D-based navigation expands the causal understanding and the resulting interaction radius of a robot through the use of visual and sensory 4D information.

AI-based Density Recognition

Jul 24, 2024

Abstract: Learning-based analysis of images is commonly used in the fields of mobility and robotics for safe environmental motion and interaction. This requires not only object recognition but also the assignment of certain properties to them. With the help of this information, causally related actions can be adapted to different circumstances. Such logical interactions can be optimized by recognizing object-assigned properties. Density as a physical property offers the possibility to recognize how heavy an object is, which material it is made of, which forces are at work, and consequently which influence it has on its environment. Our approach introduces an AI-based concept for assigning physical properties to objects through the use of associated images. Based on synthesized data, we derive specific patterns from 2D images using a neural network to extract further information such as volume, material, or density. Accordingly, we discuss the possibilities of property-based feature extraction to improve causally related logics.

Towards Confidential Computing: A Secure Cloud Architecture for Big Data Analytics and AI

May 28, 2023

Abstract: Cloud computing provisions computing resources on demand in a cost-effective way. It has therefore become a viable solution for big data analytics and artificial intelligence, which have been widely adopted in various scientific domains. Data security remains a major concern in fields such as biomedical research when workflows are moved to the cloud, because cloud environments are generally outsourced and therefore more exposed to risks. We present a secure cloud architecture and describe how it enables workflow packaging and scheduling while keeping its data, logic and computation secure in transit, in use and at rest.

Quantum-inspired tensor network for Earth science

Jan 15, 2023

Abstract: Deep Learning (DL) is one of many successful methodologies to extract informative patterns and insights from ever-increasing, noisy, large-scale datasets (in our case, satellite images). However, DL models consist of a few thousand to millions of trainable parameters, and training these parameters requires a tremendous amount of electrical power to extract informative patterns from noisy large-scale datasets (i.e., it is computationally expensive). Hence, we employ a quantum-inspired tensor network for compressing the trainable parameters of physics-informed neural networks (PINNs) in Earth science. PINNs are DL models penalized by enforcing the laws of physics; in particular, the laws of physics are embedded in the DL models. In addition, we apply tensor decomposition to HyperSpectral Images (HSIs) to improve their spectral resolution. A quantum-inspired tensor network is also the native formulation to efficiently represent and train quantum machine learning models on big datasets on GPU tensor cores. The key contribution of this paper is twofold: (I) we reduced the number of trainable parameters of PINNs by using a quantum-inspired tensor network, and (II) we improved the spectral resolution of remotely sensed images by employing tensor decomposition. As a benchmark PDE, we solved Burgers' equation. As practical satellite data, we employed HSIs of Indian Pines, USA and of Pavia University, Italy.

* This article has been submitted to the IGARSS 2023 conference
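To give a feel for why a tensor network can shrink the number of trainable parameters, the following is a minimal sketch that counts the entries of a dense tensor against the entries of its tensor-train (matrix product state) cores. The tensor-train format, the mode sizes, and the ranks are assumptions chosen for illustration; the abstract does not state which tensor network layout or ranks the paper uses.

```python
from math import prod


def dense_parameter_count(mode_sizes):
    """Number of entries in a dense tensor with the given mode sizes."""
    return prod(mode_sizes)


def tensor_train_parameter_count(mode_sizes, ranks):
    """Number of entries in the tensor-train cores of the same tensor.

    Core k has shape (r_{k-1}, n_k, r_k), with boundary ranks r_0 = r_d = 1.
    `ranks` holds the d - 1 internal ranks (hypothetical values here).
    """
    if len(ranks) != len(mode_sizes) - 1:
        raise ValueError("need exactly len(mode_sizes) - 1 internal ranks")
    full_ranks = [1, *ranks, 1]
    return sum(
        full_ranks[k] * n * full_ranks[k + 1]
        for k, n in enumerate(mode_sizes)
    )


# Illustrative numbers only: a 32 x 32 x 32 x 32 tensor with internal ranks 8.
modes = [32, 32, 32, 32]
internal_ranks = [8, 8, 8]
dense = dense_parameter_count(modes)
compressed = tensor_train_parameter_count(modes, internal_ranks)
print(f"dense: {dense}, tensor-train: {compressed}")
```

The saving depends entirely on how small the internal ranks can be kept without hurting accuracy, which is an empirical question this sketch does not address.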

Dynamic Sensor Matching based on Geomagnetic Inertial Navigation

Aug 12, 2022

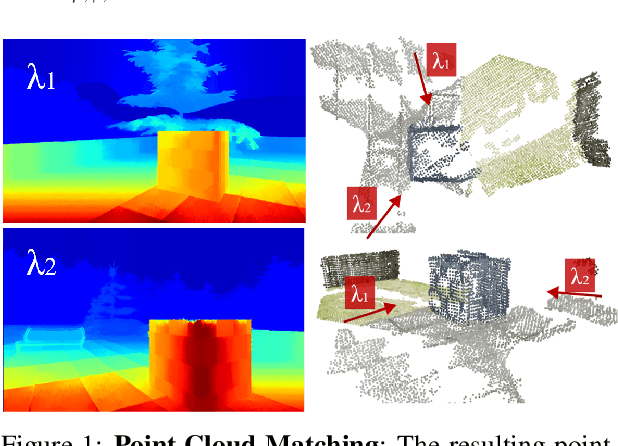

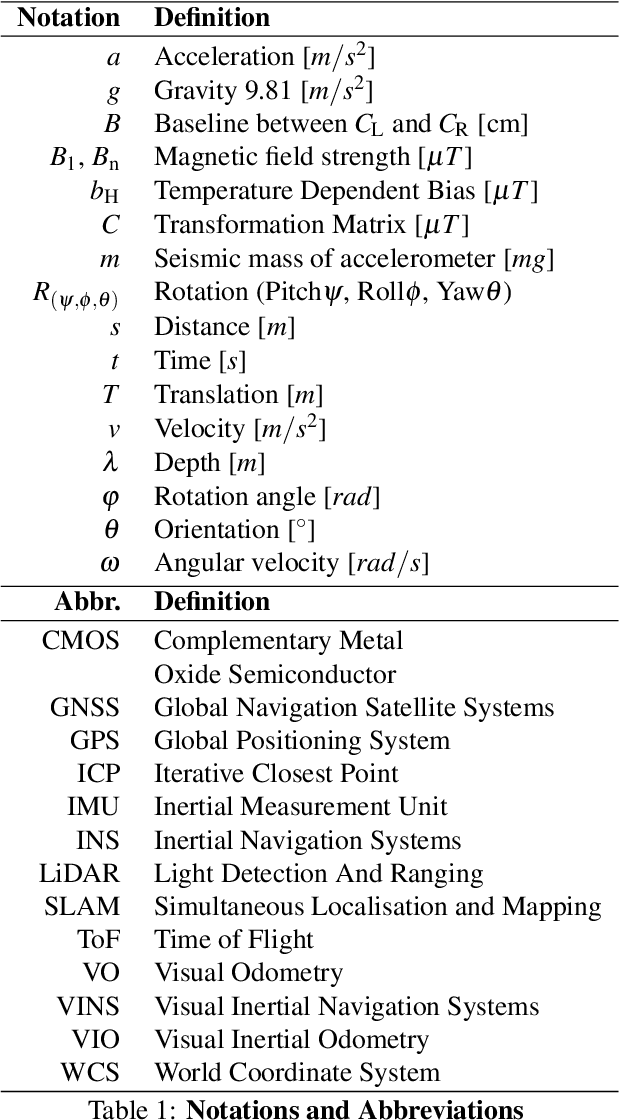

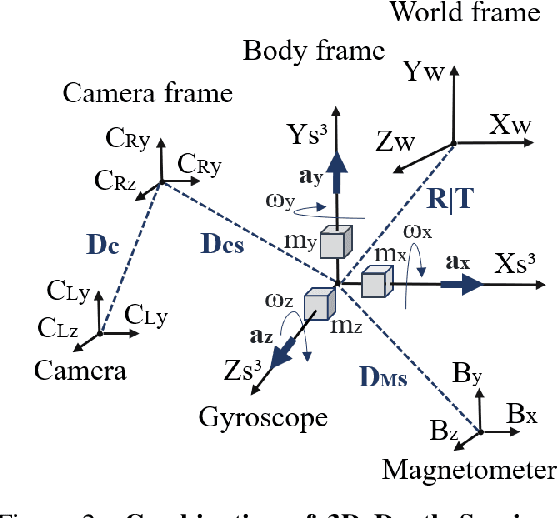

Abstract: Optical sensors can capture dynamic environments and derive depth information in near real-time. The quality of these digital reconstructions is determined by factors like illumination, surface and texture conditions, sensing speed and other sensor characteristics as well as the sensor-object relations. Improvements can be obtained by using dynamically collected data from multiple sensors. However, matching the data from multiple sensors requires a shared world coordinate system. We present a concept for transferring multi-sensor data into a commonly referenced world coordinate system: the Earth's magnetic field. The steady presence of our planetary magnetic field provides a reliable world coordinate system, which can serve as a reference for a position-defined reconstruction of dynamic environments. Our approach is evaluated using the magnetic field sensors of the ZED 2 stereo camera from Stereolabs, which provide orientation relative to the North Pole, similar to a compass. With the help of inertial measurement unit information, each camera's position data can be transferred into the unified world coordinate system. Our evaluation reveals the level of quality possible using the Earth's magnetic field and provides a basis for dynamic and real-time-based applications of optical multi-sensors for environment detection.

* Page 16-25
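The idea of using magnetic north as a shared reference can be sketched in two dimensions as follows. This is a simplified illustration, not the paper's method: it assumes a level sensor, ignores tilt compensation and magnetic declination, does not use the ZED SDK, and all function names and frame conventions (sensor x forward, y left; world x north, y west) are hypothetical.

```python
import math


def north_angle_in_sensor_frame(mag_x, mag_y):
    """Angle of magnetic north in the sensor frame, counterclockwise from x."""
    return math.atan2(mag_y, mag_x)


def sensor_to_world(point, mag_xy, sensor_origin_world=(0.0, 0.0)):
    """Map a 2D point from a sensor frame into a north-aligned world frame.

    Rotating by the negative north angle turns the measured north direction
    onto the world x-axis; the sensor's world position is then added.
    """
    alpha = north_angle_in_sensor_frame(*mag_xy)
    c, s = math.cos(-alpha), math.sin(-alpha)
    x, y = point
    ox, oy = sensor_origin_world
    return (c * x - s * y + ox, s * x + c * y + oy)


# Two sensors at the same position observe the same physical point.
# Sensor A faces north; sensor B is turned 90 degrees to the left (facing west),
# so it measures north along its negative y-axis.
point_from_a = sensor_to_world((2.0, 0.0), mag_xy=(1.0, 0.0))
point_from_b = sensor_to_world((0.0, -2.0), mag_xy=(0.0, -1.0))
print(point_from_a, point_from_b)
```

Both observations land on the same world coordinates up to floating-point rounding, which is the property that makes data from differently oriented sensors comparable.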

Pandemic Drugs at Pandemic Speed: Accelerating COVID-19 Drug Discovery with Hybrid Machine Learning- and Physics-based Simulations on High Performance Computers

Mar 04, 2021

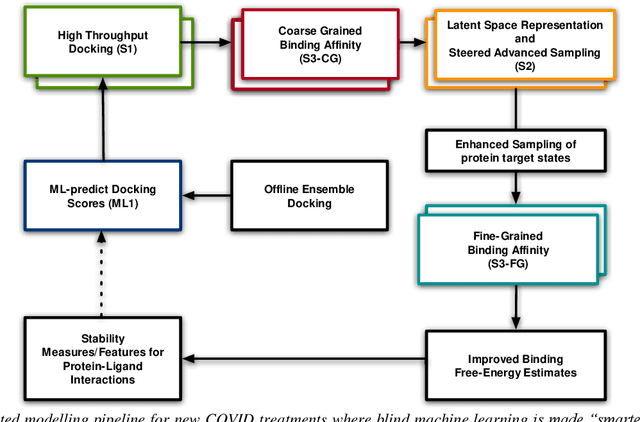

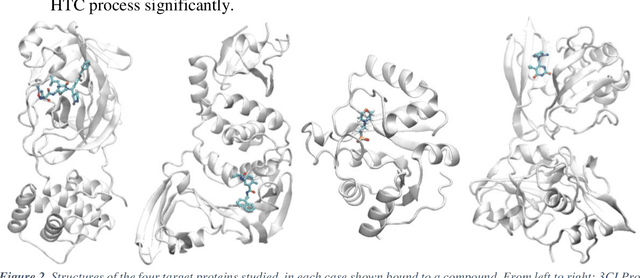

Abstract: The race to meet the challenges of the global pandemic has served as a reminder that the existing drug discovery process is expensive, inefficient and slow. A major bottleneck is screening the vast number of potential small molecules to shortlist lead compounds for antiviral drug development. New opportunities to accelerate drug discovery lie at the interface between machine learning methods, in this case developed for linear accelerators, and physics-based methods. The two in silico methods each have their own advantages and limitations, which, interestingly, complement each other. Here, we present an innovative method that combines both approaches to accelerate drug discovery. The scale of the resulting workflow is such that it is dependent on high performance computing. We have demonstrated the applicability of this workflow on four COVID-19 target proteins and our ability to perform the required large-scale calculations to identify lead compounds on a variety of supercomputers.

Using Supervised Learning to Classify Metadata of Research Data by Discipline of Research

Oct 16, 2019

Abstract: Automated classification of metadata of research data by their discipline(s) of research can be used in scientometric research, by repository service providers, and in the context of research data aggregation services. Openly available metadata of the DataCite index for research data were used to compile a large training and evaluation set comprising 609,524 records, which is published alongside this paper. These data allow classification approaches, such as tree-based models and neural networks, to be assessed reproducibly. According to our experiments with 20 base classes (multi-label classification), multi-layer perceptron models perform best with an f1-macro score of 0.760, closely followed by Long Short-Term Memory models (f1-macro score of 0.755). A possible application of the trained classification models is the quantitative analysis of trends towards interdisciplinarity of digital scholarly output or the characterization of growth patterns of research data, stratified by discipline of research. Both applications perform at scale with the proposed models, which are available for re-use.
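For readers unfamiliar with the f1-macro score quoted above, here is a minimal sketch of how it is commonly computed for multi-label predictions: an F1 score is calculated per class and the per-class scores are averaged with equal weight. The label names and records below are invented toy data, and treating a class with no true or predicted instances as F1 = 0 is an assumption of this sketch, not something stated in the abstract.

```python
def macro_f1(true_label_sets, predicted_label_sets, classes):
    """Unweighted mean of per-class F1 scores for multi-label data."""
    scores = []
    for cls in classes:
        tp = fp = fn = 0
        for truth, predicted in zip(true_label_sets, predicted_label_sets):
            if cls in predicted and cls in truth:
                tp += 1
            elif cls in predicted:
                fp += 1
            elif cls in truth:
                fn += 1
        denominator = 2 * tp + fp + fn
        # Assumption: a class that never occurs contributes a score of 0.
        scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(scores) / len(scores)


# Toy records, each tagged with one or more (hypothetical) disciplines.
y_true = [{"physics"}, {"biology", "chemistry"}, {"chemistry"}, {"physics", "biology"}]
y_pred = [{"physics"}, {"biology"}, {"chemistry", "physics"}, {"biology"}]
score = macro_f1(y_true, y_pred, classes=["physics", "biology", "chemistry"])
print(round(score, 3))
```

Because every class counts equally, a macro average penalizes poor performance on rare disciplines more than a micro average would.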
