Simone Müller

4D-based Robot Navigation Using Relativistic Image Processing

Oct 29, 2024

Abstract: Machine perception is an important prerequisite for safe interaction and locomotion in dynamic environments. This requires not only the timely perception of surrounding geometries and distances but also the ability to react to changing situations through a robot's predefined, learned, and reusable skill endings, so that physical damage or bodily harm can be avoided. In this context, 4D perception makes it possible to predict one's own position and changes in the environment over time. In this paper, we present a 4D-based approach to robot navigation using relativistic image processing. Relativistic image processing handles time-related sensor information in a tensor model within a constructive 4D space. 4D-based navigation expands a robot's causal understanding and the resulting interaction radius through the use of visual and sensory 4D information.

AI-based Density Recognition

Jul 24, 2024

Abstract: Learning-based analysis of images is commonly used in the fields of mobility and robotics for safe environmental motion and interaction. This requires not only recognizing objects but also assigning certain properties to them. With the help of this information, causally related actions can be adapted to different circumstances. Such logical interactions can be optimized by recognizing object-assigned properties. Density as a physical property makes it possible to recognize how heavy an object is, which material it is made of, which forces are at work, and consequently which influence it has on its environment. Our approach introduces an AI-based concept for assigning physical properties to objects through the use of associated images. Based on synthesized data, we derive specific patterns from 2D images using a neural network to extract further information such as volume, material, or density. Accordingly, we discuss the possibilities of property-based feature extraction to improve causally related logic.
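As a rough illustration of why density is a useful property to recognize, the following minimal sketch shows how an estimated density and volume lead to mass and weight force. The function names and the idea that both quantities arrive as plain numbers are assumptions for illustration only; the abstract does not describe the network's output format.

```python
# Hypothetical helpers: not taken from the paper, only the physical relations
# mass = density * volume and weight = mass * g are standard.
STANDARD_GRAVITY = 9.80665  # m/s^2, standard acceleration of gravity


def mass_from_density(density_kg_m3: float, volume_m3: float) -> float:
    """Return the mass in kg for a given density (kg/m^3) and volume (m^3)."""
    if density_kg_m3 < 0 or volume_m3 < 0:
        raise ValueError("density and volume must be non-negative")
    return density_kg_m3 * volume_m3


def weight_force(mass_kg: float) -> float:
    """Return the weight force in newtons acting on a mass at rest."""
    return mass_kg * STANDARD_GRAVITY


if __name__ == "__main__":
    # Assumed example values: a 2-litre object with the density of water.
    mass = mass_from_density(1000.0, 0.002)
    print(f"mass: {mass:.3f} kg, weight: {weight_force(mass):.3f} N")
```

With such values, a planner could, for example, decide whether an object is light enough to be pushed aside or should be avoided.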

Dynamic Sensor Matching based on Geomagnetic Inertial Navigation

Aug 12, 2022

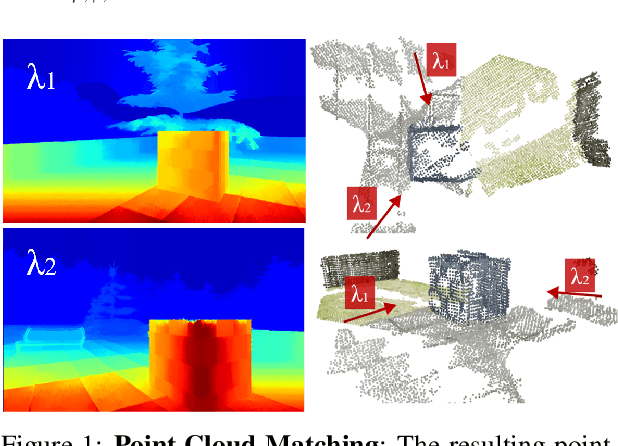

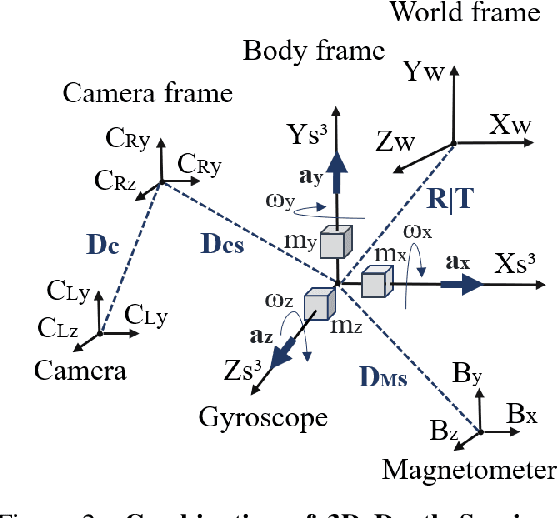

Abstract: Optical sensors can capture dynamic environments and derive depth information in near real-time. The quality of these digital reconstructions is determined by factors such as illumination, surface and texture conditions, sensing speed, and other sensor characteristics, as well as the sensor-object relations. Improvements can be obtained by using dynamically collected data from multiple sensors. However, matching the data from multiple sensors requires a shared world coordinate system. We present a concept for transferring multi-sensor data into a commonly referenced world coordinate system: the Earth's magnetic field. The steady presence of our planetary magnetic field provides a reliable world coordinate system, which can serve as a reference for a position-defined reconstruction of dynamic environments. Our approach is evaluated using the magnetic field sensors of the ZED 2 stereo camera from Stereolabs, which provide orientation relative to the North Pole, similar to a compass. With the help of inertial measurement unit information, each camera's position data can be transferred into the unified world coordinate system. Our evaluation reveals the level of quality achievable using the Earth's magnetic field and provides a basis for dynamic, real-time applications of optical multi-sensor systems for environment detection.
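The idea of a shared, north-referenced coordinate system can be sketched as follows. This is a simplified 2D illustration under stated assumptions, not the authors' method: it assumes each sensor reports a compass heading in degrees clockwise from magnetic north, that its position in the shared frame is already known, and that the local axes are x = right and y = forward. The function name `local_to_world` is hypothetical.

```python
import math


def local_to_world(point_xy, heading_deg, sensor_origin_xy=(0.0, 0.0)):
    """Map a 2D point from a sensor's local frame into a north-aligned frame.

    Assumed conventions (illustrative only):
      local x = right, local y = forward
      world x = east,  world y = north
      heading_deg = clockwise angle from magnetic north to the forward axis
    """
    h = math.radians(heading_deg)
    x, y = point_xy
    # Rotate the local vector by the heading into east/north components.
    east = x * math.cos(h) + y * math.sin(h)
    north = -x * math.sin(h) + y * math.cos(h)
    # Translate by the sensor's position in the shared frame.
    return (sensor_origin_xy[0] + east, sensor_origin_xy[1] + north)


if __name__ == "__main__":
    # Two sensors with different headings observe the same physical point.
    a = local_to_world((1.0, 2.0), heading_deg=0.0)
    b = local_to_world((0.0, -2.0), heading_deg=90.0, sensor_origin_xy=(3.0, 2.0))
    print(a, b)  # both should land on approximately the same world point
```

Because both observations are expressed relative to the same magnetic reference, they can be compared or merged without a calibration step between the sensors. A full 3D treatment would also need pitch and roll from the inertial measurement unit.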

* Page 16-25
