Devanathan Thiruvenkatachari

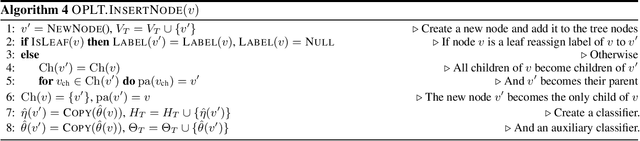

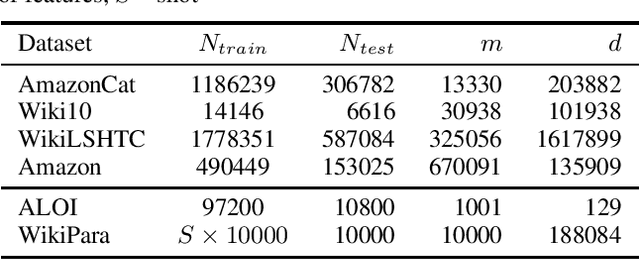

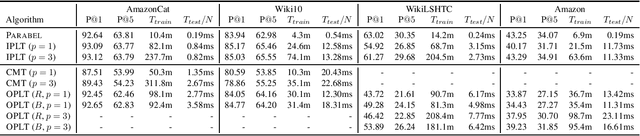

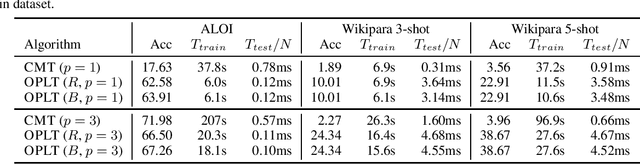

Online probabilistic label trees

Jul 08, 2020

Abstract: We introduce online probabilistic label trees (OPLTs), an algorithm that trains a label tree classifier in a fully online manner, without any prior knowledge about the number of training instances, their features and labels. OPLTs are characterized by low time and space complexity as well as strong theoretical guarantees. They can be used for online multi-label and multi-class classification, including the very challenging scenarios of one- or few-shot learning. We demonstrate the attractiveness of OPLTs in a wide empirical study on several instances of the tasks mentioned above.

Bandit Multiclass Linear Classification: Efficient Algorithms for the Separable Case

Feb 06, 2019

Abstract: We study the problem of efficient online multiclass linear classification with bandit feedback, where all examples belong to one of $K$ classes and lie in the $d$-dimensional Euclidean space. Previous works have left open the challenge of designing efficient algorithms with finite mistake bounds when the data is linearly separable by a margin $\gamma$. In this work, we take a first step towards this problem. We consider two notions of linear separability, *strong* and *weak*. 1. Under the strong linear separability condition, we design an efficient algorithm that achieves a near-optimal mistake bound of $O\left( K/\gamma^2 \right)$. 2. Under the more challenging weak linear separability condition, we design an efficient algorithm with a mistake bound of $\min (2^{\widetilde{O}(K \log^2 (1/\gamma))}, 2^{\widetilde{O}(\sqrt{1/\gamma} \log K)})$. Our algorithm is based on kernel Perceptron, which is inspired by the work of Klivans and Servedio (2008) on improperly learning intersection of halfspaces.
