Debayan Gupta

ObjMST: An Object-Focused Multimodal Style Transfer Framework

Mar 06, 2025

Abstract:We propose ObjMST, an object-focused multimodal style transfer framework that provides separate style supervision for salient objects and surrounding elements while addressing alignment issues in multimodal representation learning. Existing image-text multimodal style transfer methods face the following challenges: (1) generating non-aligned and inconsistent multimodal style representations; and (2) content mismatch, where identical style patterns are applied to both salient objects and their surrounding elements. Our approach mitigates these issues by: (1) introducing a Style-Specific Masked Directional CLIP Loss, which ensures consistent and aligned style representations for both salient objects and their surroundings; and (2) incorporating a salient-to-key mapping mechanism for stylizing salient objects, followed by image harmonization to seamlessly blend the stylized objects with their environment. We validate the effectiveness of ObjMST through experiments, using both quantitative metrics and qualitative visual evaluations of the stylized outputs. Our code is available at: https://github.com/chandagrover/ObjMST.

* 8 pages, 8 Figures, 3 Tables

Improving text-conditioned latent diffusion for cancer pathology

Dec 09, 2024

Abstract:The development of generative models in the past decade has allowed for hyperrealistic data synthesis. While potentially beneficial, this synthetic data generation process has been relatively underexplored in cancer histopathology. One algorithm for synthesising a realistic image is diffusion; it iteratively converts an image to noise and learns the recovery process from this noise [Wang and Vastola, 2023]. While effective, it is highly computationally expensive for high-resolution images, rendering it infeasible for histopathology. The development of Variational Autoencoders (VAEs) has allowed us to learn the representation of complex high-resolution images in a latent space. A vital by-product of this is the ability to compress high-resolution images into this latent space and recover them losslessly. The marriage of diffusion and VAEs allows us to carry out diffusion in the latent space of an autoencoder, enabling us to leverage the realistic generative capabilities of diffusion while maintaining reasonable computational requirements. Rombach et al. [2021b] and Yellapragada et al. [2023] build foundational models for this task, paving the way to generate realistic histopathology images. In this paper, we discuss the pitfalls of current methods, namely [Yellapragada et al., 2023], and resolve critical errors while proposing improvements along the way. Our methods achieve an FID score of 21.11, beating their SOTA counterparts in [Yellapragada et al., 2023] by 1.2 FID, while reducing train-time GPU memory usage by 7%.

Visual Concept Networks: A Graph-Based Approach to Detecting Anomalous Data in Deep Neural Networks

Sep 26, 2024

Abstract:Deep neural networks (DNNs), while increasingly deployed in many applications, struggle with robustness against anomalous and out-of-distribution (OOD) data. Current OOD benchmarks often oversimplify, focusing on single-object tasks and not fully representing complex real-world anomalies. This paper introduces a new, straightforward method employing graph structures and topological features to effectively detect both far-OOD and near-OOD data. We convert images into networks of interconnected, human-understandable features or visual concepts. Through extensive testing on two novel tasks, including ablation studies with large vocabularies and diverse tasks, we demonstrate the method's effectiveness. This approach enhances DNN resilience to OOD data and promises improved performance in various applications.

SimSAM: Simple Siamese Representations Based Semantic Affinity Matrix for Unsupervised Image Segmentation

Jun 12, 2024

Abstract:Recent developments in self-supervised learning (SSL) have made it possible to learn data representations without the need for annotations. Inspired by the non-contrastive SSL approach (SimSiam), we introduce a novel framework SIMSAM to compute the Semantic Affinity Matrix, which is significant for unsupervised image segmentation. Given an image, SIMSAM first extracts features using pre-trained DINO-ViT, then projects the features to predict the correlations of dense features in a non-contrastive way. We show applications of the Semantic Affinity Matrix in object segmentation and semantic segmentation tasks. Our code is available at https://github.com/chandagrover/SimSAM.

* 6 pages (main paper), 6 figures, 6 tables (main paper), ICIP 2024, 8 pages (supplementary)

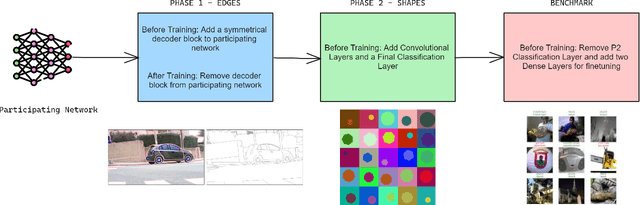

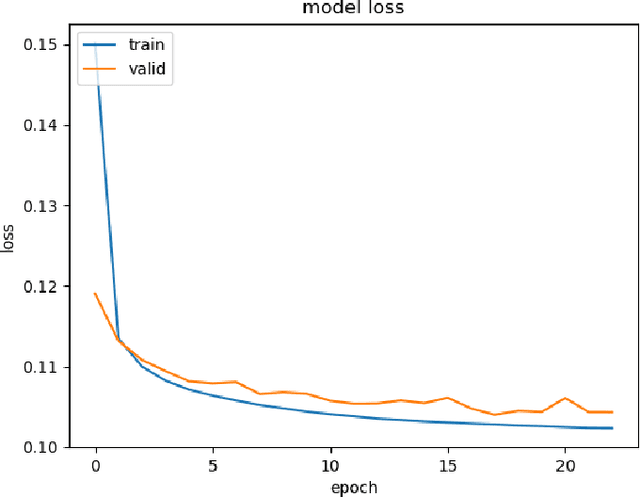

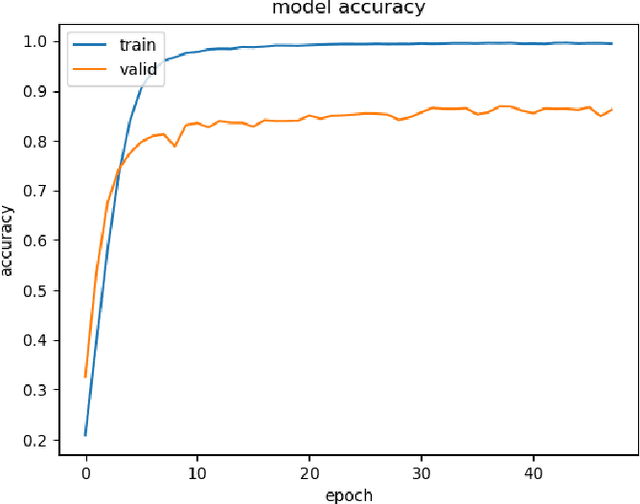

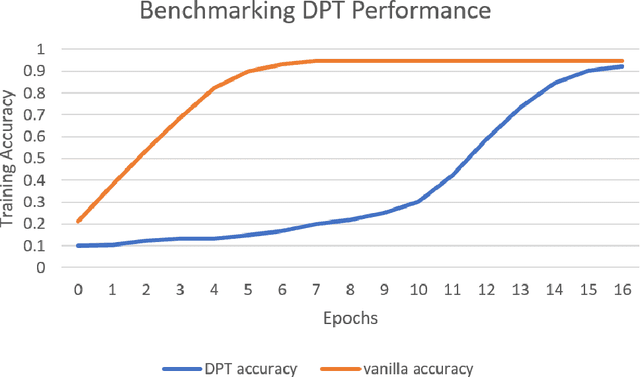

Developmental Pretraining (DPT) for Image Classification Networks

Dec 01, 2023

Abstract:Against the backdrop of the increasing data requirements of Deep Neural Networks for object recognition, which grow more untenable by the day, we present Developmental PreTraining (DPT) as a possible solution. DPT is a curriculum-based pre-training approach designed to rival traditional pre-training techniques that are data-hungry. These traditional approaches also introduce unnecessary features that could be misleading when the network is employed in a downstream classification task where the data is scarce and sufficiently different from the pre-training data. We design the curriculum for DPT by drawing inspiration from human infant visual development. DPT employs a phased approach in which carefully selected primitive and universal features, like edges and shapes, are taught to the network participating in our pre-training regime. A model that underwent the DPT regime is tested against models with randomised weights to evaluate the viability of DPT.

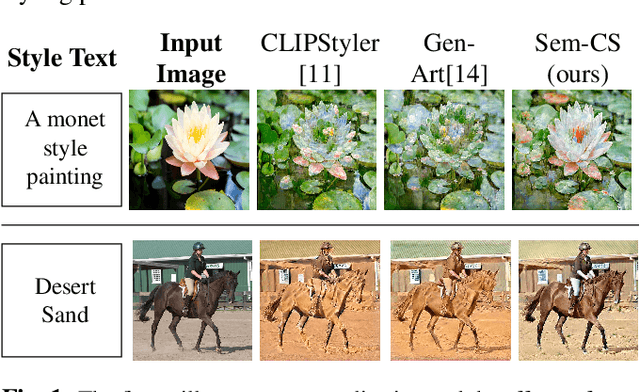

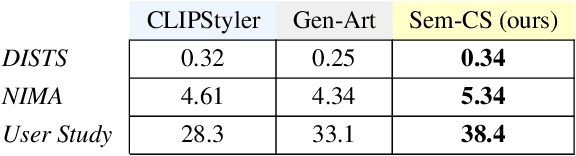

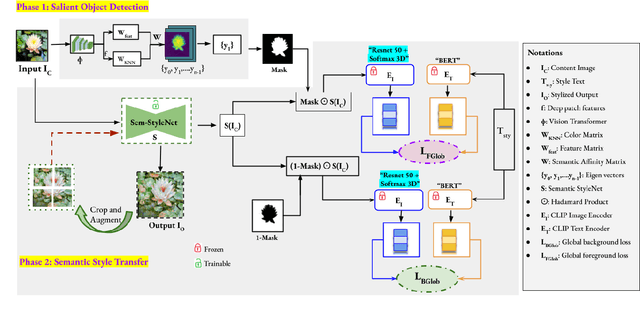

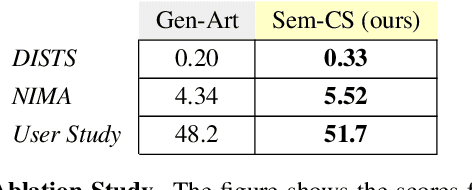

Sem-CS: Semantic CLIPStyler for Text-Based Image Style Transfer

Jul 12, 2023

Abstract:CLIPStyler demonstrated image style transfer with realistic textures using only a style text description (instead of requiring a reference style image). However, the ground semantics of objects in the style transfer output are lost due to style spill-over on salient and background objects (content mismatch) or over-stylization. To solve this, we propose Semantic CLIPStyler (Sem-CS), which performs semantic style transfer. Sem-CS first segments the content image into salient and non-salient objects and then transfers artistic style based on a given style text description. The semantic style transfer is achieved using a global foreground loss (for salient objects) and a global background loss (for non-salient objects). Our empirical results, including DISTS, NIMA and user study scores, show that our proposed framework yields superior qualitative and quantitative performance. Our code is available at github.com/chandagrover/sem-cs.

* 5 pages, 4 Figures, 2 Tables. arXiv admin note: substantial text overlap with arXiv:2303.06334

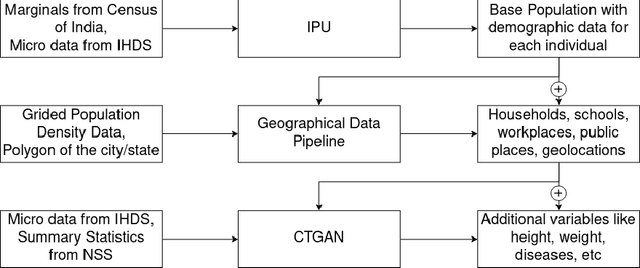

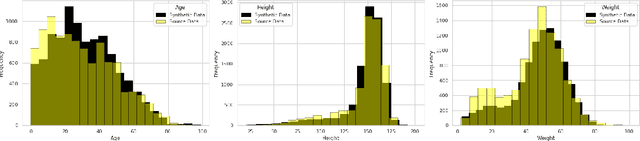

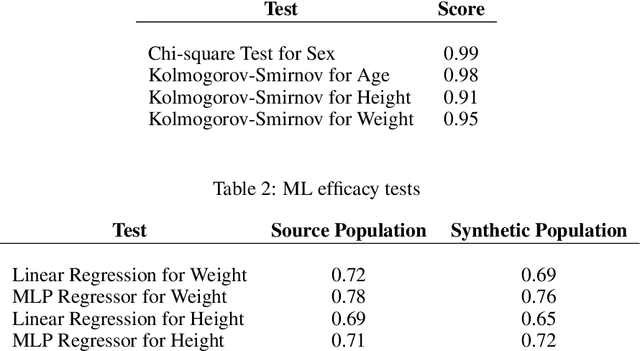

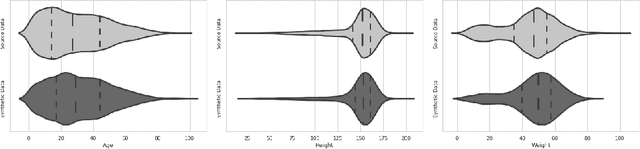

Synthpop++: A Hybrid Framework for Generating A Country-scale Synthetic Population

Apr 24, 2023

Abstract:Population censuses are vital to public policy decision-making. They provide insight into human resources, demography, culture, and economic structure at local, regional, and national levels. However, such surveys are very expensive (especially for low and middle-income countries with high populations, such as India), time-consuming, and may also raise privacy concerns, depending upon the kinds of data collected. In light of these issues, we introduce SynthPop++, a novel hybrid framework, which can combine data from multiple real-world surveys (with different, partially overlapping sets of attributes) to produce a real-scale synthetic population of humans. Critically, our population maintains family structures comprising individuals with demographic, socioeconomic, health, and geolocation attributes: this means that our "fake" people live in realistic locations, have realistic families, etc. Such data can be used for a variety of purposes: we explore one such use case, agent-based modelling of infectious disease in India. To gauge the quality of our synthetic population, we use both machine learning and statistical metrics. Our experimental results show that our synthetic population can realistically simulate the population for various administrative units of India, producing real-scale, detailed data at the desired level of zoom, from cities, to districts, to states, eventually combining to form a country-scale synthetic population.

ContextCLIP: Contextual Alignment of Image-Text pairs on CLIP visual representations

Nov 14, 2022

Abstract:State-of-the-art empirical work has shown that visual representations learned by deep neural networks are robust in nature and capable of performing classification tasks on diverse datasets. For example, CLIP demonstrated zero-shot transfer performance on multiple datasets for classification tasks in a joint embedding space of image and text pairs. However, it showed negative transfer performance on standard datasets, e.g., Birdsnap, RESISC45, and MNIST. In this paper, we propose ContextCLIP, a contextual and contrastive learning framework for the contextual alignment of image-text pairs by learning robust visual representations on the Conceptual Captions dataset. Our framework was observed to improve the image-text alignment by aligning text and image representations contextually in the joint embedding space. ContextCLIP showed good qualitative performance for text-to-image retrieval tasks and enhanced classification accuracy. We evaluated our model quantitatively with zero-shot transfer and fine-tuning experiments on the CIFAR-10, CIFAR-100, Birdsnap, RESISC45, and MNIST datasets for classification tasks.

BEAS: Blockchain Enabled Asynchronous & Secure Federated Machine Learning

Feb 06, 2022

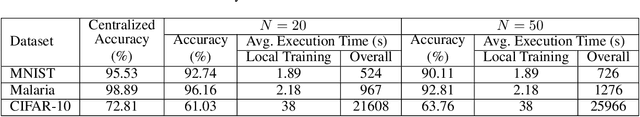

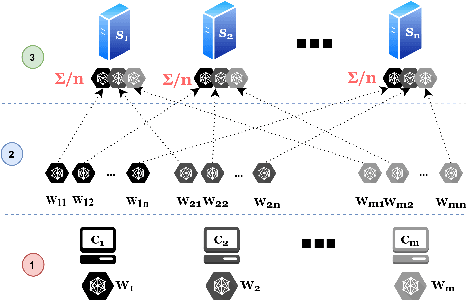

Abstract:Federated Learning (FL) enables multiple parties to distributively train an ML model without revealing their private datasets. However, it assumes trust in the centralized aggregator which stores and aggregates model updates. This makes it prone to gradient tampering and privacy leakage by a malicious aggregator. Malicious parties can also introduce backdoors into the joint model by poisoning the training data or model gradients. To address these issues, we present BEAS, the first blockchain-based framework for N-party FL that provides strict privacy guarantees of training data using gradient pruning (showing improved differential privacy compared to existing noise and clipping based techniques). Anomaly detection protocols are used to minimize the risk of data-poisoning attacks, along with gradient pruning that is further used to limit the efficacy of model-poisoning attacks. We also define a novel protocol to prevent premature convergence in heterogeneous learning environments. We perform extensive experiments on multiple datasets with promising results: BEAS successfully prevents privacy leakage from dataset reconstruction attacks, and minimizes the efficacy of poisoning attacks. Moreover, it achieves an accuracy similar to centralized frameworks, and its communication and computation overheads scale linearly with the number of participants.

Scotch: An Efficient Secure Computation Framework for Secure Aggregation

Jan 19, 2022

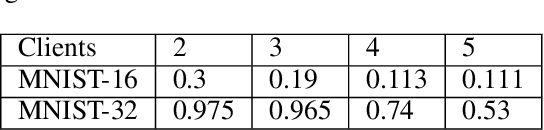

Abstract:Federated learning enables multiple data owners to jointly train a machine learning model without revealing their private datasets. However, a malicious aggregation server might use the model parameters to derive sensitive information about the training dataset used. To address such leakage, differential privacy and cryptographic techniques have been investigated in prior work, but these often result in large communication overheads or impact model performance. To mitigate this centralization of power, we propose Scotch, a decentralized m-party secure-computation framework for federated aggregation that deploys MPC primitives, such as secret sharing. Our protocol is simple, efficient, and provides strict privacy guarantees against curious aggregators or colluding data-owners with minimal communication overheads compared to other existing state-of-the-art privacy-preserving federated learning frameworks. We evaluate our framework by performing extensive experiments on multiple datasets with promising results. Scotch can train the standard MLP NN with the training dataset split amongst 3 participating users and 3 aggregating servers with 96.57% accuracy on MNIST, and 98.40% accuracy on the Extended MNIST (digits) dataset, while providing various optimizations.
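
To make the idea of aggregation via secret sharing concrete, the following is a minimal sketch of generic additive secret sharing over a prime field, with 3 users and 3 aggregating servers as in the setup mentioned above. It is not the Scotch protocol itself: the modulus `PRIME`, the fixed-point factor `SCALE`, and the helper names `encode`, `decode`, `share`, and `secure_mean` are all assumptions introduced for illustration.

```python
import secrets

PRIME = 2**61 - 1   # assumed field modulus (illustrative choice)
SCALE = 10**6       # assumed fixed-point scale for encoding floats


def encode(x: float) -> int:
    """Map a float to a field element using fixed-point encoding."""
    return round(x * SCALE) % PRIME


def decode(v: int) -> float:
    """Map a field element back to a float (upper half = negatives)."""
    if v > PRIME // 2:
        v -= PRIME
    return v / SCALE


def share(value: int, n_servers: int) -> list:
    """Split a field element into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_servers - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def secure_mean(updates: list, n_servers: int = 3) -> list:
    """Average user updates; each server only ever sees random-looking shares."""
    dim = len(updates[0])
    server_sums = [[0] * dim for _ in range(n_servers)]
    for update in updates:                      # one update vector per user
        for j, x in enumerate(update):
            for s, sh in enumerate(share(encode(x), n_servers)):
                server_sums[s][j] = (server_sums[s][j] + sh) % PRIME
    # Combining the per-server partial sums reveals only the aggregate.
    totals = [sum(srv[j] for srv in server_sums) % PRIME for j in range(dim)]
    return [decode(t) / len(updates) for t in totals]


if __name__ == "__main__":
    user_updates = [[0.5, -1.0], [1.5, 2.0], [1.0, 2.0]]
    print(secure_mean(user_updates))
```

In this sketch no single server can recover an individual user's update from its shares alone; only the sum across all servers is meaningful. A real deployment would also need authenticated channels and a defined threat model, which are outside the scope of this illustration.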
