David Gotz

Risks and Opportunities in Human-Machine Teaming in Operationalizing Machine Learning Target Variables

Oct 29, 2025

Abstract: Predictive modeling has the potential to enhance human decision-making. However, many predictive models fail in practice due to problematic problem formulation in cases where the prediction target is an abstract concept or construct and practitioners need to define an appropriate target variable as a proxy to operationalize the construct of interest. The choice of an appropriate proxy target variable is rarely self-evident in practice, requiring both domain knowledge and iterative data modeling. This process is inherently collaborative, involving both domain experts and data scientists. In this work, we explore how human-machine teaming can support this process by accelerating iterations while preserving human judgment. We study the impact of two human-machine teaming strategies on proxy construction: 1) relevance-first: humans leading the process by selecting relevant proxies, and 2) performance-first: machines leading the process by recommending proxies based on predictive performance. Based on a controlled user study of a proxy construction task (N = 20), we show that the performance-first strategy facilitated faster iterations and decision-making, but also biased users towards well-performing proxies that are misaligned with the application goal. Our study highlights the opportunities and risks of human-machine teaming in operationalizing machine learning target variables, yielding insights for future research to explore the opportunities and mitigate the risks.

How Does Imperfect Automatic Indexing Affect Semantic Search Performance?

Apr 08, 2023

Abstract: Documents in the health domain are often annotated with semantic concepts (i.e., terms) from controlled vocabularies. As the volume of these documents grows, the annotation work is increasingly done by algorithms. Compared to humans, automatic indexing algorithms are imperfect and may assign wrong terms to documents, which affects subsequent search tasks where queries contain these terms. In this work, we aim to understand the performance impact of using imperfectly assigned terms in Boolean semantic searches. We used MeSH terms and biomedical literature search as a case study. We implemented multiple automatic indexing algorithms on real-world Boolean queries that consist of MeSH terms, and found that (1) probabilistic logic can handle inaccurately assigned terms better than traditional Boolean logic, (2) query-level performance is mostly limited by the lowest-performing terms in a query, and (3) mixing a small amount of human indexing with automatic indexing can regain excellent query-level performance. These findings provide important implications for future work on automatic indexing.
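The contrast between probabilistic and traditional Boolean logic in finding (1) can be illustrated with a small sketch. The abstract does not give the paper's actual formulation, so this is only one common way to score a Boolean query under uncertain term assignments: it assumes term assignments are independent, and the function names, the nested-tuple query format, the 0.5 threshold, and the example probabilities are all hypothetical.

```python
from math import prod

def prob_score(query, term_probs):
    """Probability that a document satisfies a Boolean query.

    query is either a term string or a nested tuple such as
    ("AND", q1, q2), ("OR", q1, q2), or ("NOT", q).
    term_probs maps a term to the indexer's confidence that the
    term applies to the document (assumed independent per term).
    """
    if isinstance(query, str):
        return term_probs.get(query, 0.0)
    op, *args = query
    if op == "NOT":
        return 1.0 - prob_score(args[0], term_probs)
    children = [prob_score(a, term_probs) for a in args]
    if op == "AND":
        return prod(children)
    if op == "OR":
        return 1.0 - prod(1.0 - c for c in children)
    raise ValueError(f"unknown operator: {op}")

def boolean_match(query, term_probs, threshold=0.5):
    """Traditional Boolean matching: harden each term to 0 or 1 first."""
    hard = {t: 1.0 if p >= threshold else 0.0 for t, p in term_probs.items()}
    return prob_score(query, hard) == 1.0

# Hypothetical indexer output for one document.
doc = {"Neoplasms": 0.9, "Child": 0.45}
query = ("AND", "Neoplasms", "Child")

soft = prob_score(query, doc)      # graded score, usable for ranking
hard = boolean_match(query, doc)   # all-or-nothing decision
```

In this sketch, a term assigned with confidence just below the threshold makes the hard Boolean match fail outright, while the probabilistic score still gives the document a nonzero score that can be ranked against others.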

GRAFS: Graphical Faceted Search System to Support Conceptual Understanding in Exploratory Search

Feb 19, 2023

Abstract: When people search for information about a new topic within large document collections, they implicitly construct a mental model of the unfamiliar information space to represent what they currently know and guide their exploration into the unknown. Building this mental model can be challenging as it requires not only finding relevant documents, but also synthesizing important concepts and the relationships that connect those concepts both within and across documents. This paper describes a novel interactive approach designed to help users construct a mental model of an unfamiliar information space during exploratory search. We propose a new semantic search system to organize and visualize important concepts and their relations for a set of search results. A user study ($n=20$) was conducted to compare the proposed approach against a baseline faceted search system on exploratory literature search tasks. Experimental results show that the proposed approach is more effective in helping users recognize relationships between key concepts, leading to a more sophisticated understanding of the search topic while maintaining similar functionality and usability as a faceted search system.

Visual Causality Analysis of Event Sequence Data

Sep 01, 2020

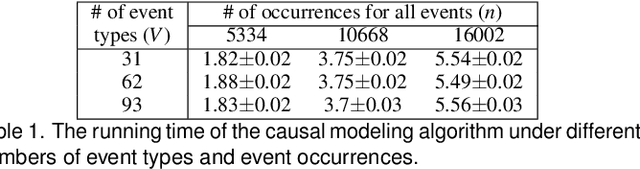

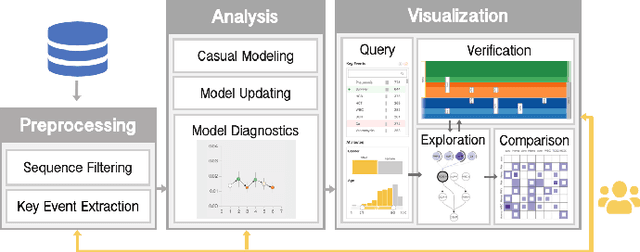

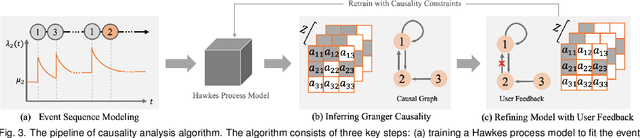

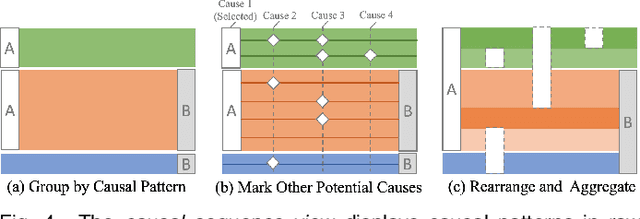

Abstract: Causality is crucial to understanding the mechanisms behind complex systems and making decisions that lead to intended outcomes. Event sequence data is widely collected from many real-world processes, such as electronic health records, web clickstreams, and financial transactions, which transmit a great deal of information reflecting the causal relations among event types. Unfortunately, recovering causalities from observational event sequences is challenging, as the heterogeneous and high-dimensional event variables are often connected to rather complex underlying event excitation mechanisms that are hard to infer from limited observations. Many existing automated causal analysis techniques suffer from poor explainability and fail to include an adequate amount of human knowledge. In this paper, we introduce a visual analytics method for recovering causalities in event sequence data. We extend the Granger causality analysis algorithm on Hawkes processes to incorporate user feedback into causal model refinement. The visualization system includes an interactive causal analysis framework that supports bottom-up causal exploration, iterative causal verification and refinement, and causal comparison through a set of novel visualizations and interactions. We report two forms of evaluation: a quantitative evaluation of the model improvements resulting from the user-feedback mechanism, and a qualitative evaluation through case studies in different application domains to demonstrate the usefulness of the system.
