David Delgado

The RoSiD Tool: Empowering Users to Design Multimodal Signals for Human-Robot Collaboration

Jan 05, 2024

Abstract: Robots that cooperate with humans must be effective at communicating with them. However, people have varied preferences for communication based on many contextual factors, such as culture, environment, and past experience. To communicate effectively, robots must take those factors into consideration. In this work, we present the Robot Signal Design (RoSiD) tool to empower people to easily self-specify communicative preferences for collaborative robots. We show through a participatory design study that the RoSiD tool enables users to create signals that align with their communicative preferences, and we illuminate how this tool can be further improved.

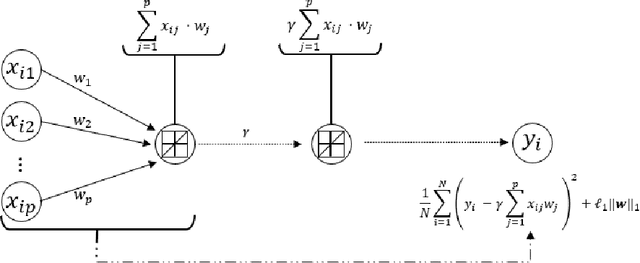

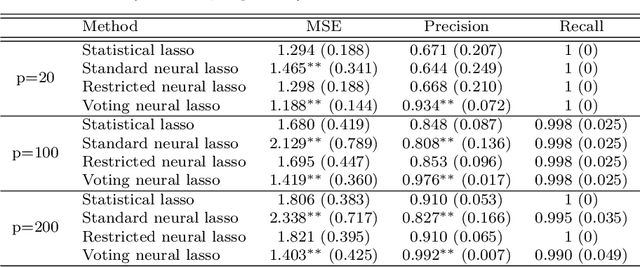

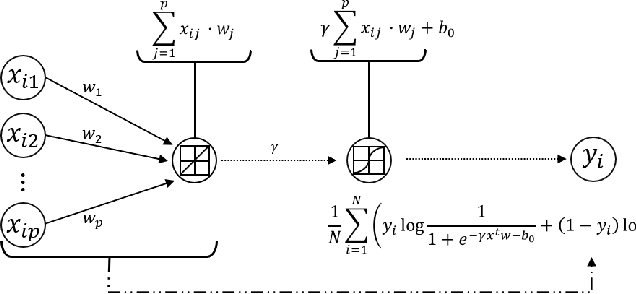

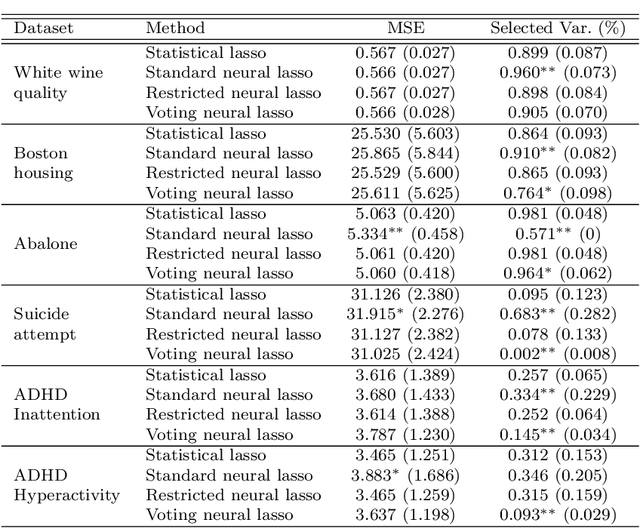

Neural lasso: a unifying approach of lasso and neural networks

Sep 07, 2023

Abstract: In recent years, there has been growing interest in combining techniques from Statistics and Machine Learning in order to obtain the benefits of both approaches. In this article, the statistical technique lasso for variable selection is represented through a neural network. It is observed that, although the statistical approach and its neural version have the same objective function, they differ in how they are optimized. In particular, the neural version is usually optimized in one step using a single validation set, while the statistical counterpart uses a two-step optimization based on cross-validation. The more elaborate optimization of the statistical method results in more accurate parameter estimation, especially when the training set is small. For this reason, a modification of the standard approach for training neural networks that mimics the statistical framework is proposed. During the development of this modification, a new optimization algorithm for identifying the significant variables emerged. Experimental results, using synthetic and real data sets, show that this new optimization algorithm achieves better performance than any of the three previous optimization approaches.
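For orientation, the shared objective function mentioned in the abstract can be written in the textbook lasso form below. This is a sketch of the standard formulation, not necessarily the exact scaling or notation used in the article; the symbols $X$ (design matrix with $n$ rows), $y$ (response), $\beta$ (coefficients), and $\lambda$ (penalty weight) are assumed here for illustration.

$$
\hat{\beta}(\lambda) = \arg\min_{\beta} \; \frac{1}{2n} \lVert y - X\beta \rVert_2^2 + \lambda \lVert \beta \rVert_1
$$

In this form, the one-step versus two-step distinction concerns how $\lambda$ and $\beta$ are chosen (a single validation set versus cross-validation), not the objective itself.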

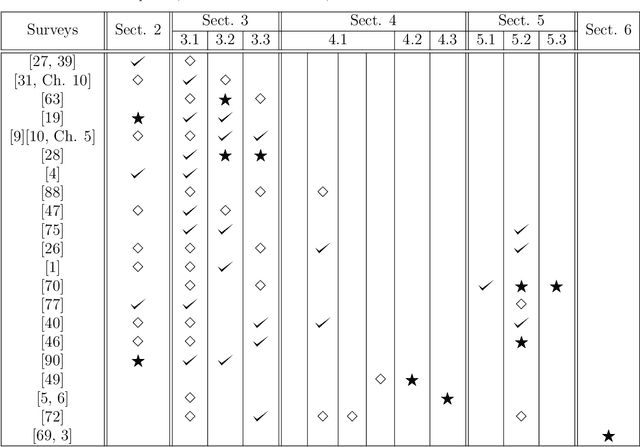

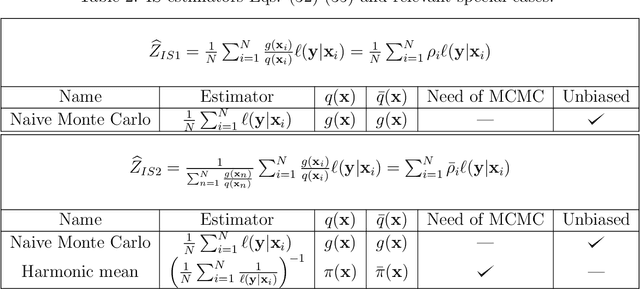

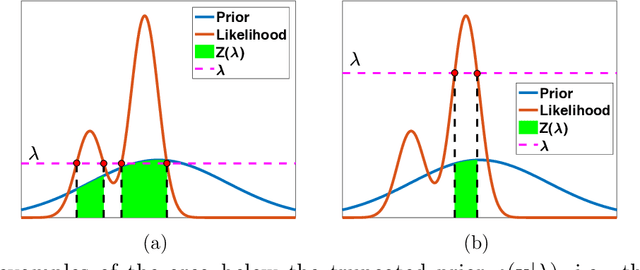

Marginal likelihood computation for model selection and hypothesis testing: an extensive review

May 17, 2020

Abstract: This is an up-to-date introduction to, and overview of, marginal likelihood computation for model selection and hypothesis testing. Computing normalizing constants of probability models (or ratios of constants) is a fundamental issue in many applications in statistics, applied mathematics, signal processing and machine learning. This article provides a comprehensive study of the state of the art of the topic. We highlight limitations, benefits, connections and differences among the different techniques. Problems and possible solutions with the use of improper priors are also described. Some of the most relevant methodologies are compared through theoretical comparisons and numerical experiments.
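As a small illustration of what "computing a normalizing constant" means, the sketch below estimates a marginal likelihood with the simplest possible estimator: averaging the likelihood over draws from the prior. The toy model (prior $\theta \sim N(0, 1)$, likelihood $y \mid \theta \sim N(\theta, 1)$) and all function names are assumptions chosen for this example because the exact answer, $y \sim N(0, 2)$, is known in closed form. It is not taken from the article and is not one of the methods it recommends.

```python
import math
import random


def normal_pdf(x, mean, var):
    """Density of a univariate normal with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def naive_mc_marginal_likelihood(y, num_samples=200_000, seed=0):
    """Estimate Z = integral of p(y | theta) p(theta) d(theta) by prior sampling.

    Assumed toy model: theta ~ N(0, 1), y | theta ~ N(theta, 1).
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        theta = rng.gauss(0.0, 1.0)          # draw from the prior
        total += normal_pdf(y, theta, 1.0)   # evaluate the likelihood at that draw
    return total / num_samples


def exact_marginal_likelihood(y):
    """Closed form for the toy model: marginally, y ~ N(0, 2)."""
    return normal_pdf(y, 0.0, 2.0)


if __name__ == "__main__":
    y_obs = 0.5
    print("naive Monte Carlo estimate:", naive_mc_marginal_likelihood(y_obs))
    print("exact value:               ", exact_marginal_likelihood(y_obs))
```

Prior sampling works here because the prior and posterior overlap heavily. When the posterior is much more concentrated than the prior, this estimator tends to have high variance, which is one reason more elaborate techniques exist.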
