David Bierbach

Socially competent robots: adaptation improves leadership performance in groups of live fish

Sep 14, 2020

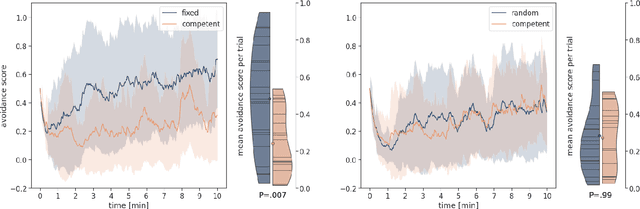

Abstract: Collective motion is commonly modeled with simple interaction rules between agents. Yet in nature, numerous observables vary within and between individuals, and it remains largely unknown how animals respond to this variability and how much of it may be the result of social responses. Here, we hypothesize that guppies (*Poecilia reticulata*) respond to avoidance behaviors of their shoal mates and that "socially competent" responses allow them to be more effective leaders. We test this hypothesis in an experimental setting in which a robotic guppy, called RoboFish, is programmed to adapt to avoidance reactions of its live interaction partner. We compare the leadership performance between socially competent robots and two non-competent control behaviors and find that 1) behavioral variability itself appears attractive and that socially competent robots are better leaders that 2) require fewer approach attempts to 3) elicit longer average following behavior than non-competent agents. This work provides evidence that social responsiveness to avoidance reactions plays a role in the social dynamics of guppies. We showcase how social responsiveness can be modeled and tested directly embedded in a living animal model using adaptive, interactive robots.

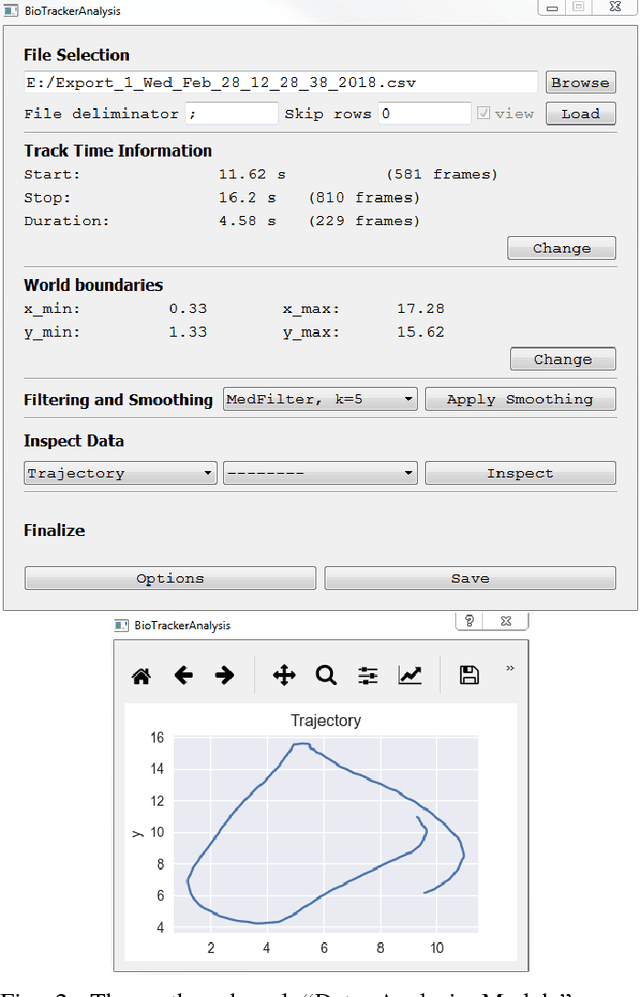

BioTracker: An Open-Source Computer Vision Framework for Visual Animal Tracking

Mar 21, 2018

Abstract: The study of animal behavior increasingly relies on (semi-)automatic methods for the extraction of relevant behavioral features from video or picture data. To date, several specialized software products exist to detect and track animals' positions in simple (laboratory) environments. Tracking animals in their natural environments, however, often requires substantial customization of the image processing algorithms to the problem-specific image characteristics. Here we introduce BioTracker, an open-source computer vision framework that provides programmers with core functionalities that are essential parts of tracking software, such as video I/O, graphics overlays, and mouse and keyboard interfaces. BioTracker additionally provides a number of different tracking algorithms suitable for a variety of image recording conditions. The main feature of BioTracker, however, is the straightforward implementation of new problem-specific tracking modules and vision algorithms that can build upon BioTracker's core functionalities. With this open-source framework, the scientific community can accelerate its research and focus on the development of new vision algorithms.

Dancing Honey bee Robot Elicits Dance-Following and Recruits Foragers

Mar 19, 2018

Abstract: The honey bee dance communication system is one of the most popular examples of animal communication. Forager bees communicate the flight vector towards food, water, or resin sources to nestmates by performing a stereotypical motion pattern on the comb surface in the darkness of the hive. Bees that actively follow the circles of the dancer, so-called dance-followers, may decode the message and fly according to the indicated vector that refers to the sun compass and their visual odometer. We investigated the dance communication system with a honey bee robot that reproduced the waggle dance pattern for a flight vector chosen by the experimenter. The dancing robot, called RoboBee, generated multiple cues contained in the biological dance pattern and elicited natural dance-following behavior in live bees. By tracking the flight trajectory of departing bees after following the dancing robot via harmonic radar, we confirmed that bees used information obtained from the robotic dance to adjust their flight path. This is the first report on successful dance following and subsequent flight performance of bees recruited by a biomimetic robot.
