David Dormagen

BioTracker: An Open-Source Computer Vision Framework for Visual Animal Tracking

Mar 21, 2018

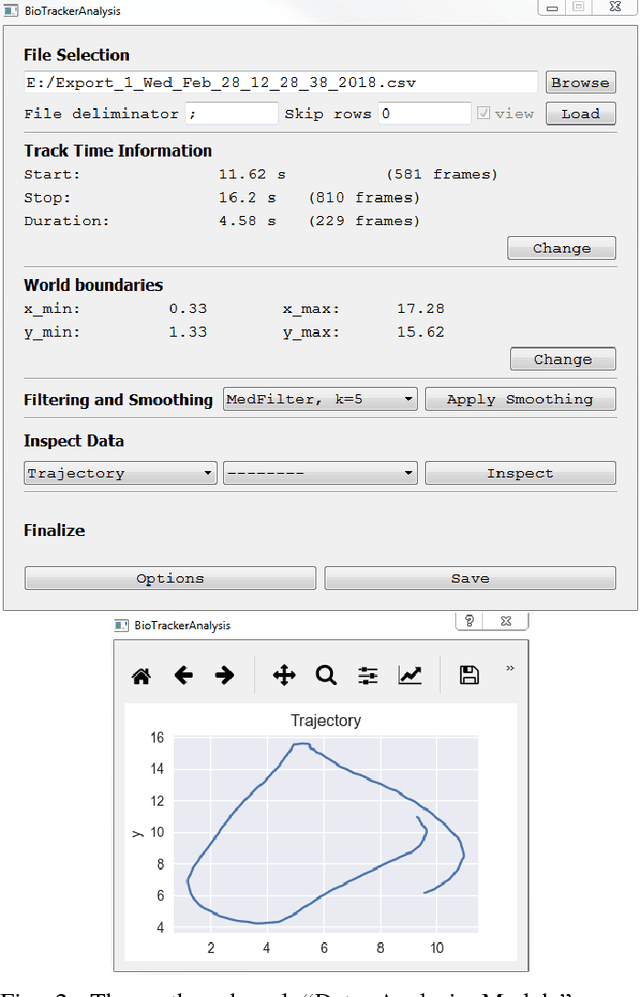

Abstract: The study of animal behavior increasingly relies on (semi-)automatic methods for the extraction of relevant behavioral features from video or picture data. To date, several specialized software products exist to detect and track animals' positions in simple (laboratory) environments. Tracking animals in their natural environments, however, often requires substantial customization of the image processing algorithms to the problem-specific image characteristics. Here we introduce BioTracker, an open-source computer vision framework that provides programmers with the core functionalities essential to tracking software, such as video I/O, graphics overlays, and mouse and keyboard interfaces. BioTracker additionally provides a number of different tracking algorithms suitable for a variety of image recording conditions. The main feature of BioTracker, however, is the straightforward implementation of new problem-specific tracking modules and vision algorithms that can build upon BioTracker's core functionalities. With this open-source framework, the scientific community can accelerate its research and focus on the development of new vision algorithms.

Tracking all members of a honey bee colony over their lifetime

Mar 15, 2018

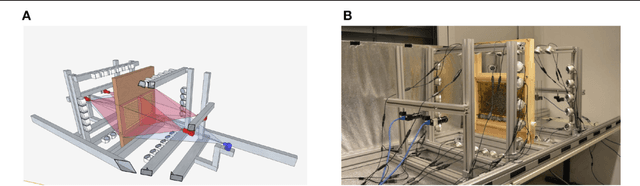

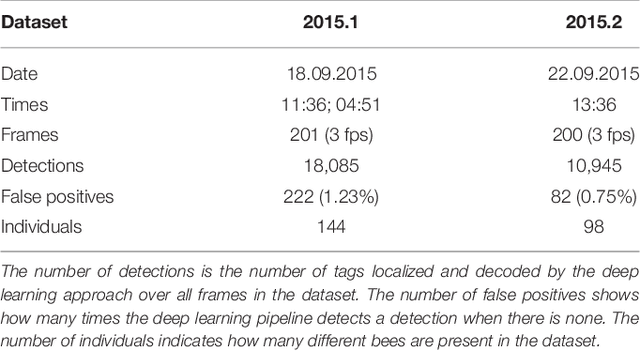

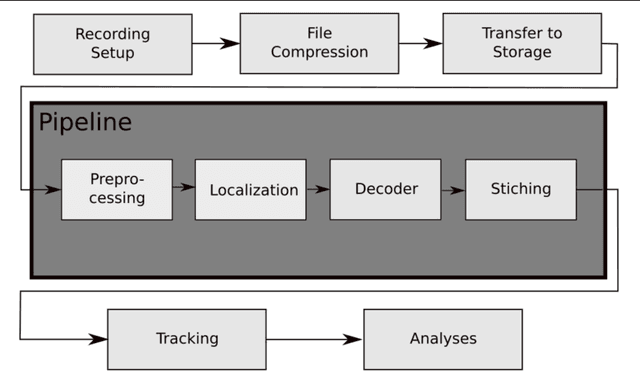

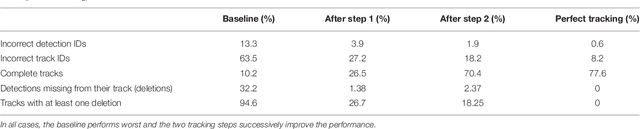

Abstract: Computational approaches to the analysis of collective behavior in social insects increasingly rely on motion paths as an intermediate data layer from which one can infer individual behaviors or social interactions. Honey bees are a popular model for learning and memory. Previous experience has been shown to affect and modulate future social interactions. So far, no lifetime history observations have been reported for all bees of a colony. In previous work we introduced a tracking system customized to track up to $4000$ bees over several weeks. In this contribution we present an in-depth description of the underlying multi-step algorithm, which both produces the motion paths and significantly improves the marker decoding accuracy. We automatically tracked ${\sim}2000$ marked honey bees over 10 weeks with inexpensive recording hardware using markers without any error correction bits. We found that the proposed two-step tracking reduced incorrect ID decodings from initially ${\sim}13\%$ to around $2\%$ post-tracking. Alongside this paper, we publish the first trajectory dataset for all bees in a colony, extracted from ${\sim}4$ million images. We invite researchers to join the collective scientific effort to investigate this intriguing animal system. All components of our system are open-source.
