Daniel Seifert

Deep Learning-based Codes for Wiretap Fading Channels

Sep 13, 2024

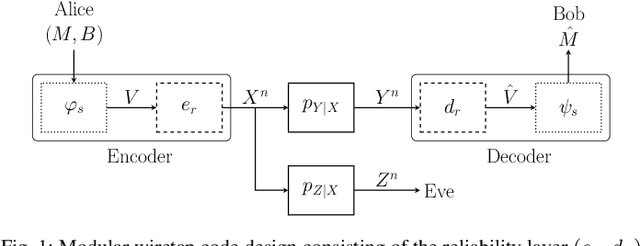

Abstract: The wiretap channel is a well-studied problem in the physical layer security (PLS) literature. Although it is proven that the decoding error probability and information leakage can be made arbitrarily small in the asymptotic regime, further research on finite-blocklength codes is required on the path towards practical, secure communications systems. This work provides the first experimental characterization of a deep learning-based, finite-blocklength code construction for multi-tap fading wiretap channels without channel state information (CSI). In addition to the evaluation of the average probability of error and information leakage, we illustrate the influence of (i) the number of fading taps, (ii) differing variances of the fading coefficients, and (iii) the seed selection for the hash function-based security layer.

Operationalizing Assurance Cases for Data Scientists: A Showcase of Concepts and Tooling in the Context of Test Data Quality for Machine Learning

Dec 08, 2023

Abstract: Assurance Cases (ACs) are an established approach in safety engineering to argue quality claims in a structured way. In the context of quality assurance for Machine Learning (ML)-based software components, ACs are also being discussed and appear promising. Tools for operationalizing ACs do exist, yet mainly focus on supporting safety engineers on the system level. However, assuring the quality of an ML component within the system is commonly the responsibility of data scientists, who are usually less familiar with these tools. To address this gap, we propose a framework to support the operationalization of ACs for ML components based on technologies that data scientists use on a daily basis: Python and Jupyter Notebook. Our aim is to make the process of creating ML-related evidence in ACs more effective. Results from the application of the framework, documented through notebooks, can be integrated into existing AC tools. We illustrate the application of the framework on an example excerpt concerned with the quality of the test data.

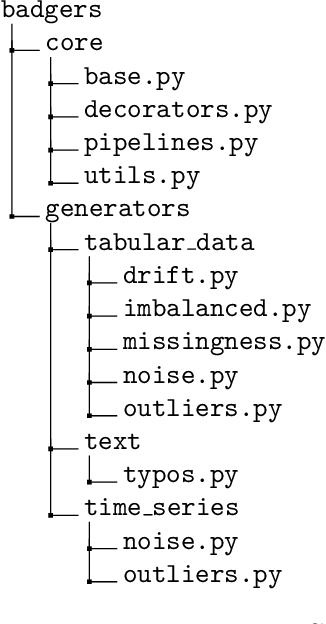

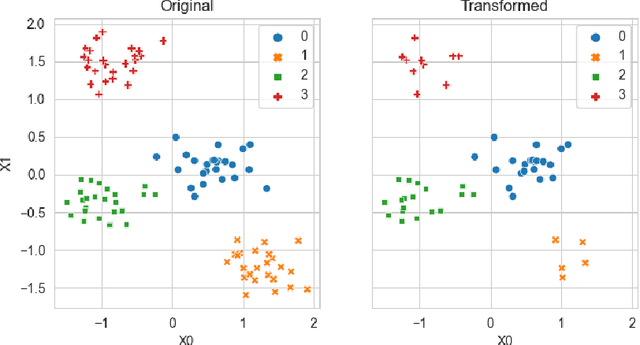

Badgers: generating data quality deficits with Python

Jul 10, 2023

Abstract: Generating context-specific data quality deficits is necessary to experimentally assess the data quality of data-driven (artificial intelligence (AI) or machine learning (ML)) applications. In this paper we present badgers, an extensible open-source Python library to generate data quality deficits (outliers, imbalanced data, drift, etc.) for different modalities (tabular data, time-series, text, etc.). The documentation is accessible at https://fraunhofer-iese.github.io/badgers/ and the source code at https://github.com/Fraunhofer-IESE/badgers
