Daniel Liu

A Large-Scale Study on the Development and Issues of Multi-Agent AI Systems

Jan 12, 2026

Abstract:The rapid emergence of multi-agent AI systems (MAS), including LangChain, CrewAI, and AutoGen, has shaped how large language model (LLM) applications are developed and orchestrated. However, little is known about how these systems evolve and are maintained in practice. This paper presents the first large-scale empirical study of open-source MAS, analyzing over 42K unique commits and over 4.7K resolved issues across eight leading systems. Our analysis identifies three distinct development profiles: sustained, steady, and burst-driven. These profiles reflect substantial variation in ecosystem maturity. Perfective commits constitute 40.8% of all changes, suggesting that feature enhancement is prioritized over corrective maintenance (27.4%) and adaptive updates (24.3%). Data about issues shows that the most frequent concerns involve bugs (22%), infrastructure (14%), and agent coordination challenges (10%). Issue reporting also increased sharply across all frameworks starting in 2023. Median resolution times range from under one day to about two weeks, with distributions skewed toward fast responses but a minority of issues requiring extended attention. These results highlight both the momentum and the fragility of the current ecosystem, emphasizing the need for improved testing infrastructure, documentation quality, and maintenance practices to ensure long-term reliability and sustainability.

Knowledge distillation for fast and accurate DNA sequence correction

Nov 17, 2022

Abstract:Accurate genome sequencing can improve our understanding of biology and the genetic basis of disease. The standard approach for generating DNA sequences from PacBio instruments relies on HMM-based models. Here, we introduce Distilled DeepConsensus, a distilled transformer-encoder model for sequence correction, which improves upon the HMM-based methods with runtime constraints in mind. Distilled DeepConsensus is 1.3x faster and 1.5x smaller than its larger counterpart while improving the yield of high quality reads (Q30) over the HMM-based method by 1.69x (vs. 1.73x for larger model). With improved accuracy of genomic sequences, Distilled DeepConsensus improves downstream applications of genomic sequence analysis such as reducing variant calling errors by 39% (34% for larger model) and improving genome assembly quality by 3.8% (4.2% for larger model). We show that the representations learned by Distilled DeepConsensus are similar between faster and slower models.

Asymmetric Private Set Intersection with Applications to Contact Tracing and Private Vertical Federated Machine Learning

Nov 18, 2020

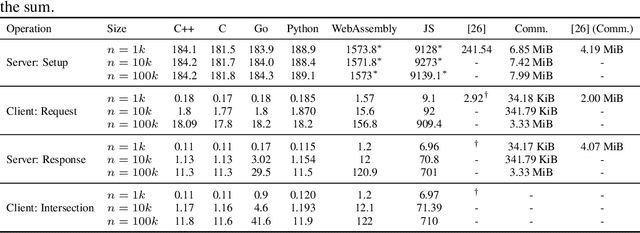

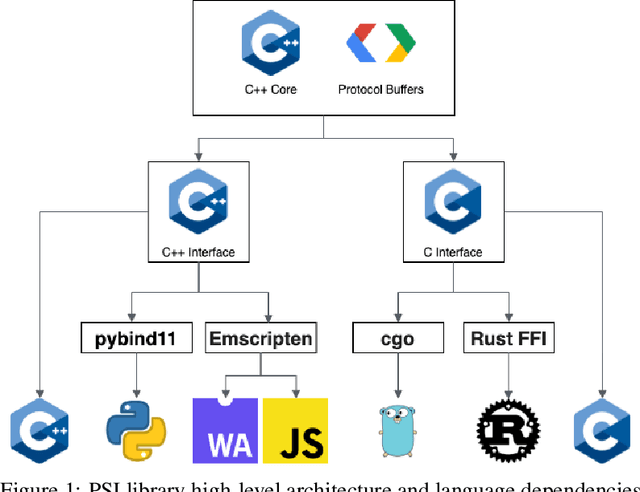

Abstract:We present a multi-language, cross-platform, open-source library for asymmetric private set intersection (PSI) and PSI-Cardinality (PSI-C). Our protocol combines traditional DDH-based PSI and PSI-C protocols with compression based on Bloom filters that helps reduce communication in the asymmetric setting. Currently, our library supports C++, C, Go, WebAssembly, JavaScript, Python, and Rust, and runs on both traditional hardware (x86) and browser targets. We further apply our library to two use cases: (i) a privacy-preserving contact tracing protocol that is compatible with existing approaches, but improves their privacy guarantees, and (ii) privacy-preserving machine learning on vertically partitioned data.

Adversarial point perturbations on 3D objects

Aug 16, 2019

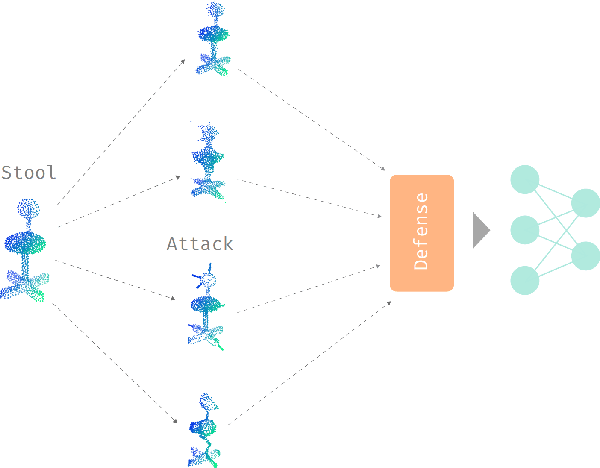

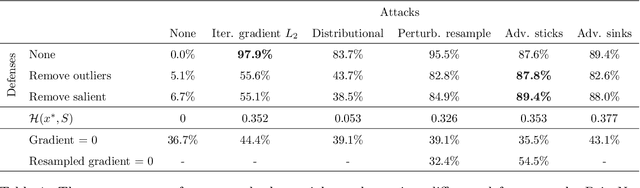

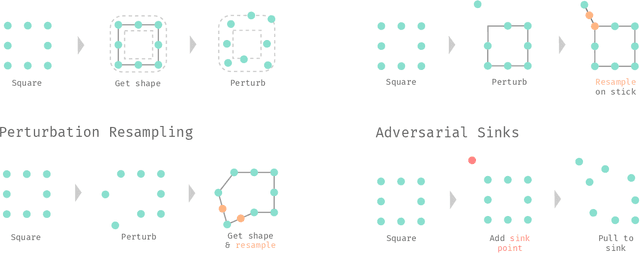

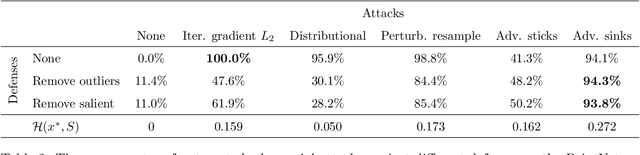

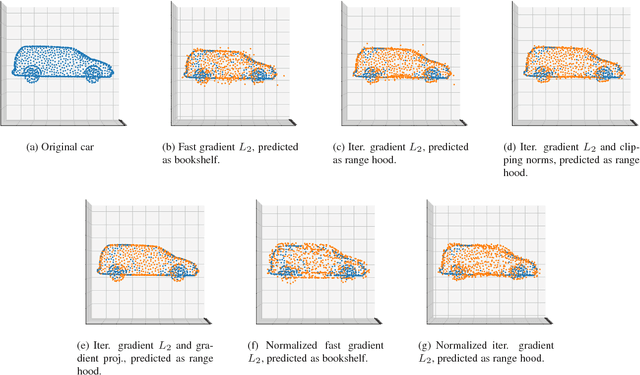

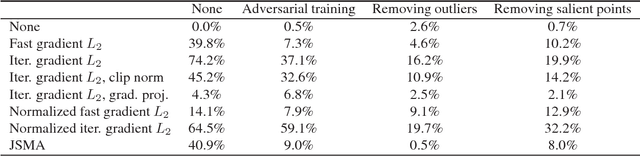

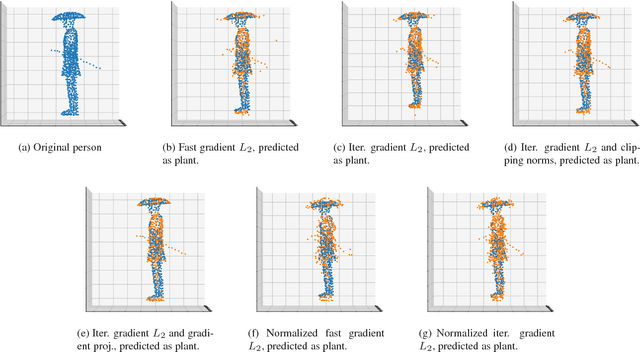

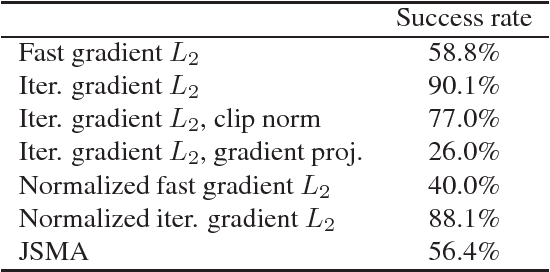

Abstract:The importance of training robust neural networks grows as 3D data is increasingly utilized in deep learning for vision tasks, like autonomous driving. We examine this problem from the perspective of the attacker, which is necessary in understanding how neural networks can be exploited, and thus defended. More specifically, we propose adversarial attacks based on solving different optimization problems, like minimizing the perceptibility of our generated adversarial examples, or maintaining a uniform density distribution of points across the adversarial object surfaces. Our four proposed algorithms for attacking 3D point cloud classification are all highly successful on existing neural networks, and we find that some of them are even effective against previously proposed point removal defenses.

Extending Adversarial Attacks and Defenses to Deep 3D Point Cloud Classifiers

Jan 10, 2019

Abstract:3D object classification and segmentation using deep neural networks has been extremely successful. As the problem of identifying 3D objects has many safety-critical applications, the neural networks have to be robust against adversarial changes to the input data set. There is a growing body of research on generating human-imperceptible adversarial attacks and defenses against them in the 2D image classification domain. However, 3D objects have various differences with 2D images, and this specific domain has not been rigorously studied so far. We present a preliminary evaluation of adversarial attacks on deep 3D point cloud classifiers, namely PointNet and PointNet++, by evaluating both white-box and black-box adversarial attacks that were proposed for 2D images and extending those attacks to reduce the perceptibility of the perturbations in 3D space. We also show the high effectiveness of simple defenses against those attacks by proposing new defenses that exploit the unique structure of 3D point clouds. Finally, we attempt to explain the effectiveness of the defenses through the intrinsic structures of both the point clouds and the neural network architectures. Overall, we find that networks that process 3D point cloud data are weak to adversarial attacks, but they are also more easily defensible compared to 2D image classifiers. Our investigation will provide the groundwork for future studies on improving the robustness of deep neural networks that handle 3D data.
