Daniel J. B. Harrold

An Empirical Game-Theoretic Analysis of Autonomous Cyber-Defence Agents

Jan 31, 2025Abstract:The recent rise in increasingly sophisticated cyber-attacks raises the need for robust and resilient autonomous cyber-defence (ACD) agents. Given the variety of cyber-attack tactics, techniques and procedures (TTPs) employed, learning approaches that can return generalisable policies are desirable. Meanwhile, the assurance of ACD agents remains an open challenge. We address both challenges via an empirical game-theoretic analysis of deep reinforcement learning (DRL) approaches for ACD using the principled double oracle (DO) algorithm. This algorithm relies on adversaries iteratively learning (approximate) best responses against each others' policies; a computationally expensive endeavour for autonomous cyber operations agents. In this work we introduce and evaluate a theoretically-sound, potential-based reward shaping approach to expedite this process. In addition, given the increasing number of open-source ACD-DRL approaches, we extend the DO formulation to allow for multiple response oracles (MRO), providing a framework for a holistic evaluation of ACD approaches.

Deep Reinforcement Learning for Autonomous Cyber Operations: A Survey

Oct 11, 2023

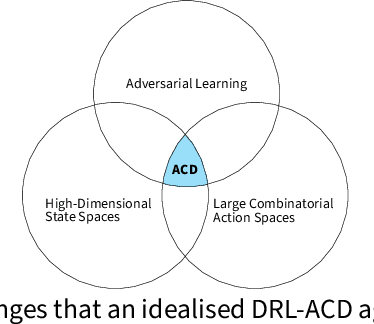

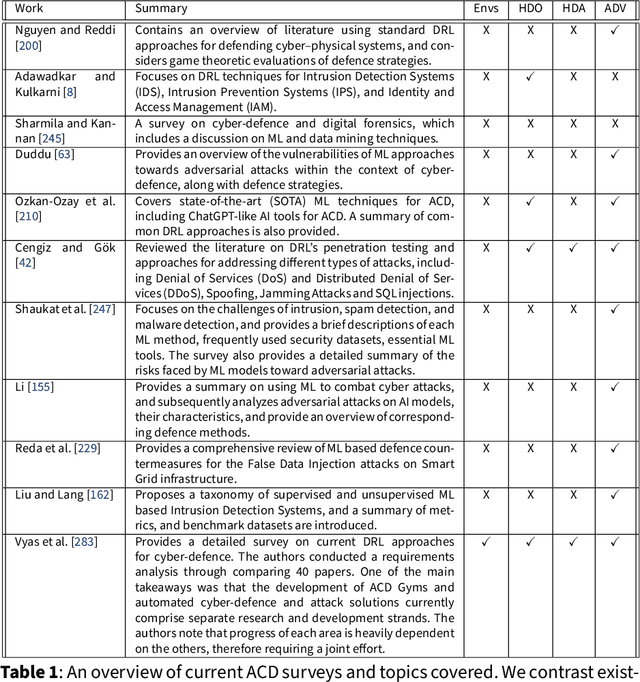

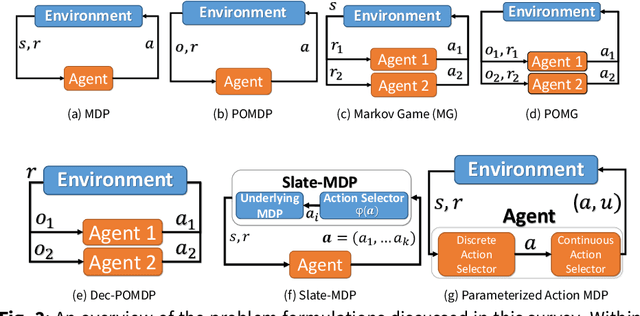

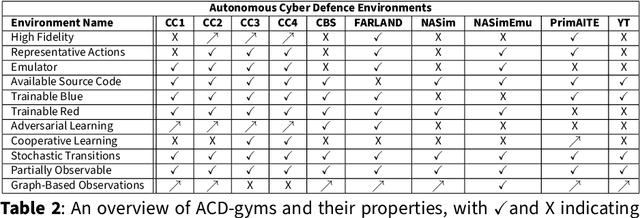

Abstract:The rapid increase in the number of cyber-attacks in recent years raises the need for principled methods for defending networks against malicious actors. Deep reinforcement learning (DRL) has emerged as a promising approach for mitigating these attacks. However, while DRL has shown much potential for cyber-defence, numerous challenges must be overcome before DRL can be applied to autonomous cyber-operations (ACO) at scale. Principled methods are required for environments that confront learners with very high-dimensional state spaces, large multi-discrete action spaces, and adversarial learning. Recent works have reported success in solving these problems individually. There have also been impressive engineering efforts towards solving all three for real-time strategy games. However, applying DRL to the full ACO problem remains an open challenge. Here, we survey the relevant DRL literature and conceptualize an idealised ACO-DRL agent. We provide: i.) A summary of the domain properties that define the ACO problem; ii.) A comprehensive evaluation of the extent to which domains used for benchmarking DRL approaches are comparable to ACO; iii.) An overview of state-of-the-art approaches for scaling DRL to domains that confront learners with the curse of dimensionality, and; iv.) A survey and critique of current methods for limiting the exploitability of agents within adversarial settings from the perspective of ACO. We conclude with open research questions that we hope will motivate future directions for researchers and practitioners working on ACO.

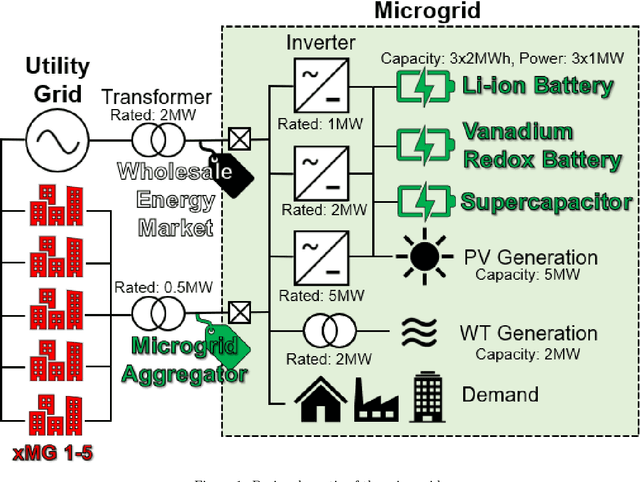

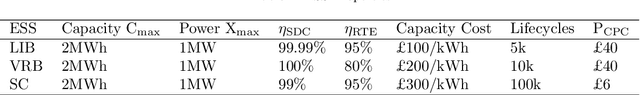

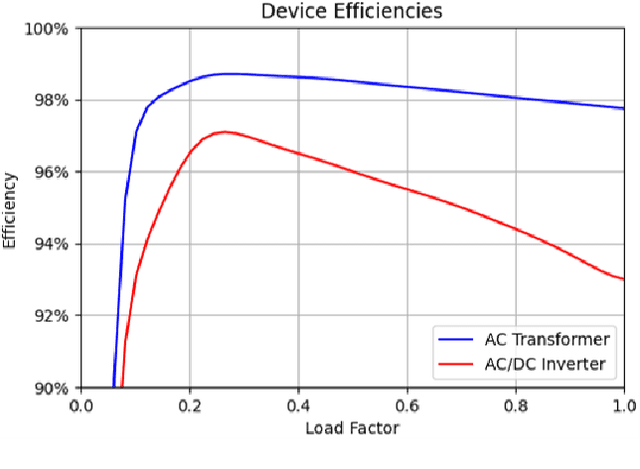

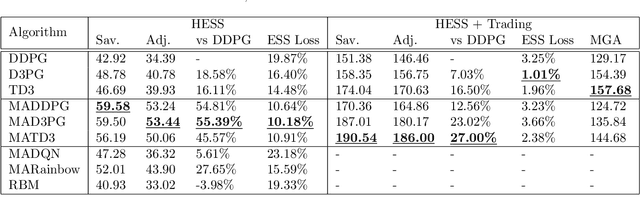

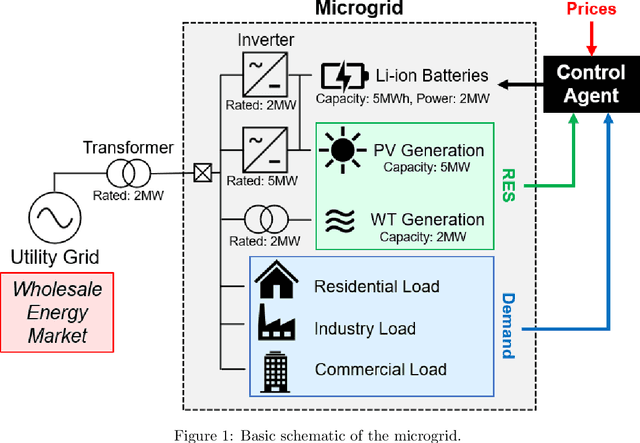

Renewable energy integration and microgrid energy trading using multi-agent deep reinforcement learning

Dec 05, 2021

Abstract:In this paper, multi-agent reinforcement learning is used to control a hybrid energy storage system working collaboratively to reduce the energy costs of a microgrid through maximising the value of renewable energy and trading. The agents must learn to control three different types of energy storage system suited for short, medium, and long-term storage under fluctuating demand, dynamic wholesale energy prices, and unpredictable renewable energy generation. Two case studies are considered: the first looking at how the energy storage systems can better integrate renewable energy generation under dynamic pricing, and the second with how those same agents can be used alongside an aggregator agent to sell energy to self-interested external microgrids looking to reduce their own energy bills. This work found that the centralised learning with decentralised execution of the multi-agent deep deterministic policy gradient and its state-of-the-art variants allowed the multi-agent methods to perform significantly better than the control from a single global agent. It was also found that using separate reward functions in the multi-agent approach performed much better than using a single control agent. Being able to trade with the other microgrids, rather than just selling back to the utility grid, also was found to greatly increase the grid's savings.

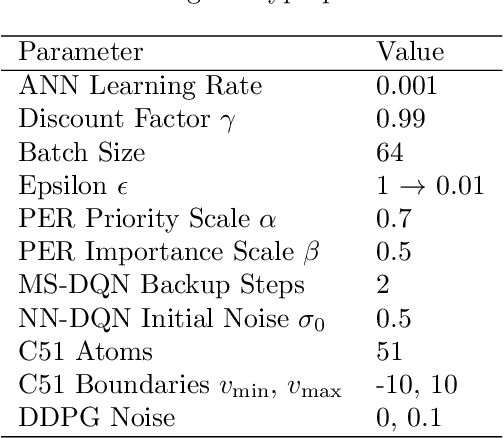

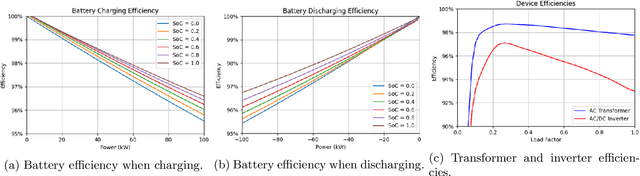

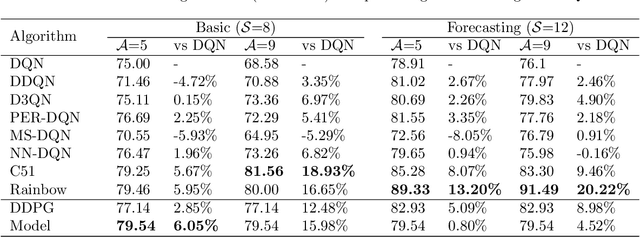

Data-driven battery operation for energy arbitrage using rainbow deep reinforcement learning

Jun 10, 2021

Abstract:As the world seeks to become more sustainable, intelligent solutions are needed to increase the penetration of renewable energy. In this paper, the model-free deep reinforcement learning algorithm Rainbow Deep Q-Networks is used to control a battery in a small microgrid to perform energy arbitrage and more efficiently utilise solar and wind energy sources. The grid operates with its own demand and renewable generation based on a dataset collected at Keele University, as well as using dynamic energy pricing from a real wholesale energy market. Four scenarios are tested including using demand and price forecasting produced with local weather data. The algorithm and its subcomponents are evaluated against two continuous control benchmarks with Rainbow able to outperform all other method. This research shows the importance of using the distributional approach for reinforcement learning when working with complex environments and reward functions, as well as how it can be used to visualise and contextualise the agent's behaviour for real-world applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge