Data-driven battery operation for energy arbitrage using rainbow deep reinforcement learning


Jun 10, 2021

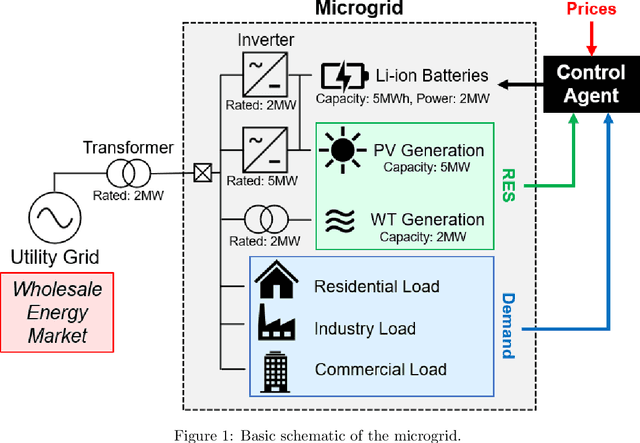

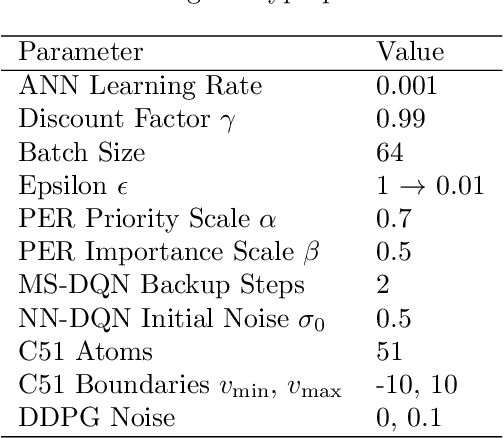

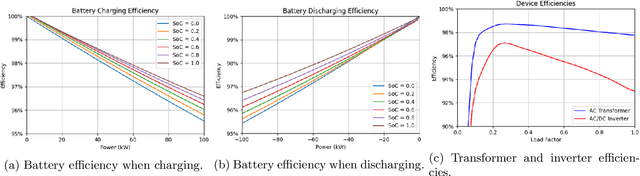

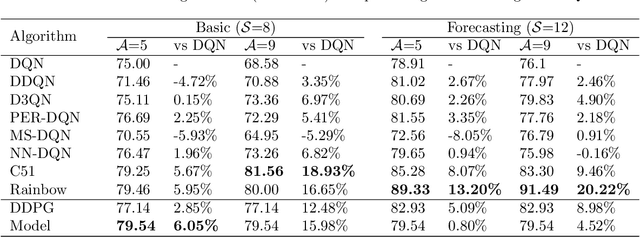

As the world seeks to become more sustainable, intelligent solutions are needed to increase the penetration of renewable energy. In this paper, the model-free deep reinforcement learning algorithm Rainbow Deep Q-Networks is used to control a battery in a small microgrid to perform energy arbitrage and utilise solar and wind energy sources more efficiently. The grid operates with its own demand and renewable generation, based on a dataset collected at Keele University, and uses dynamic energy pricing from a real wholesale energy market. Four scenarios are tested, including ones that use demand and price forecasts produced with local weather data. The algorithm and its subcomponents are evaluated against two continuous control benchmarks, and Rainbow outperforms all other methods. This research shows the importance of using the distributional approach for reinforcement learning when working with complex environments and reward functions, as well as how it can be used to visualise and contextualise the agent's behaviour for real-world applications.
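To make the arbitrage setting concrete, the following is a minimal sketch of a single environment step for a battery in a microgrid. It is not the paper's environment: the `Battery` class, the `step` function, the three discrete actions, the parameter values, the one-way efficiency model, and the assumption that surplus energy earns no revenue are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    # All default values are illustrative assumptions, not taken from the paper.
    capacity_kwh: float = 10.0
    max_power_kw: float = 5.0
    efficiency: float = 0.9  # assumed one-way charge/discharge efficiency
    soc_kwh: float = 0.0     # stored energy (state of charge)


def step(battery, action, demand_kwh, renewable_kwh, price, dt_h=1.0):
    """Apply one discrete action and return the reward (negative energy cost).

    action: 1 = charge, 0 = idle, -1 = discharge (hypothetical action set).
    price:  energy price per kWh for this time step.
    """
    max_energy = battery.max_power_kw * dt_h
    if action == 1:
        # Energy drawn on the grid side, limited by the remaining headroom.
        headroom = (battery.capacity_kwh - battery.soc_kwh) / battery.efficiency
        drawn = min(max_energy, headroom)
        battery.soc_kwh += drawn * battery.efficiency
        battery_flow = drawn
    elif action == -1:
        # Energy taken out of storage, limited by what is currently stored.
        released = min(max_energy, battery.soc_kwh)
        battery.soc_kwh -= released
        battery_flow = -released * battery.efficiency
    else:
        battery_flow = 0.0

    # Net energy the microgrid must import after renewables and the battery.
    net_import = demand_kwh - renewable_kwh + battery_flow
    # Assumption: surplus energy is not sold, so only imports incur a cost.
    cost = max(net_import, 0.0) * price
    return -cost


# Usage: charge while the price is low, discharge while it is high.
battery = Battery()
cheap_reward = step(battery, 1, demand_kwh=1.0, renewable_kwh=0.0, price=0.1)
peak_reward = step(battery, -1, demand_kwh=5.0, renewable_kwh=0.0, price=0.5)
print(cheap_reward, peak_reward, battery.soc_kwh)
```

Under these assumptions, an agent is rewarded for shifting grid imports from expensive periods to cheap ones, which is the behaviour a reinforcement learning controller would need to discover from price, demand, and generation data.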
