Damith Ranasinghe

LAVAPilot: Lightweight UAV Trajectory Planner with Situational Awareness for Embedded Autonomy to Track and Locate Radio-tags

Jul 31, 2020

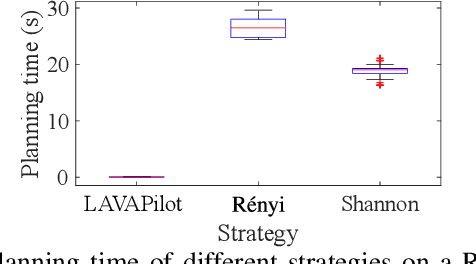

Abstract: Tracking and locating radio-tagged wildlife is a labor-intensive and time-consuming task necessary in wildlife conservation. In this article, we focus on the problem of achieving embedded autonomy for a resource-limited aerial robot that performs this task while avoiding undesirable disturbances to wildlife. We employ a lightweight sensor system capable of simultaneous (noisy) measurements of radio signal strength information from multiple tags for estimating object locations. We formulate a new lightweight task-based trajectory planning method, LAVAPilot, with a greedy evaluation strategy and a void functional formulation to achieve situational awareness and maintain a safe distance from objects of interest. Conceptually, we embed our intuition of moving closer to reduce the uncertainty of measurements into LAVAPilot instead of employing a computationally intensive information gain based planning strategy. We employ LAVAPilot and the sensor to build a lightweight aerial robot platform with fully embedded autonomy that jointly plans and tracks to locate multiple VHF radio collar tags used by conservation biologists. We evaluate our joint planning and tracking algorithms using extensive Monte Carlo simulation-based experiments, implementations on a single board compute module, and field experiments using an aerial robot platform with multiple VHF radio collar tags. Further, we compare our method with other information-based planning methods with and without situational awareness to demonstrate the effectiveness of our robot executing LAVAPilot. Our experiments demonstrate that LAVAPilot significantly reduces (by 98.5%) the computational cost of planning, enabling real-time planning decisions while achieving localization accuracy similar to that of information gain based planning methods, albeit taking slightly longer to complete a mission.

Deep Auto-Set: A Deep Auto-Encoder-Set Network for Activity Recognition Using Wearables

Nov 20, 2018

Abstract: Automatic recognition of human activities from time-series sensor data (referred to as HAR) is a growing area of research in ubiquitous computing. Most recent research in the field adopts supervised deep learning paradigms to automate extraction of intrinsic features from raw signal inputs and addresses HAR as a multi-class classification problem where detecting a single activity class within the duration of a sensory data segment suffices. However, due to the innate diversity of human activities and their corresponding duration, no data segment is guaranteed to contain sensor recordings of a single activity type. In this paper, we express HAR more naturally as a set prediction problem where the predictions are sets of ongoing activity elements with unfixed and unknown cardinality. For the first time, we address this problem by presenting a novel HAR approach that learns to output activity sets using deep neural networks. Moreover, motivated by the limited availability of annotated HAR datasets as well as the unfortunate immaturity of existing unsupervised systems, we complement our supervised set learning scheme with a prior unsupervised feature learning process that adopts convolutional auto-encoders to exploit unlabeled data. The empirical experiments on two widely adopted HAR datasets demonstrate the substantial improvement of our proposed methodology over the baseline models.

Efficient Dense Labeling of Human Activity Sequences from Wearables using Fully Convolutional Networks

Feb 20, 2017

Abstract: Recognizing human activities in a sequence is a challenging area of research in ubiquitous computing. Most approaches use a fixed-size sliding window over consecutive samples to extract features (either handcrafted or learned) and predict a single label for all samples in the window. Two key problems emanate from this approach: i) the samples in one window may not always share the same label, so using one label for all samples within a window inevitably leads to loss of information; ii) the testing phase is constrained by the window size selected during training, while the best window size is difficult to tune in practice. We propose an efficient algorithm that can predict the label of each sample, which we call dense labeling, in a sequence of human activities of arbitrary length using a fully convolutional network. In particular, our approach overcomes the problems posed by the sliding window step. Additionally, our algorithm learns both the features and classifier automatically. We release a new daily activity dataset based on a wearable sensor with hospitalized patients. We conduct extensive experiments and demonstrate that our proposed approach is able to outperform the state of the art in terms of classification and label misalignment measures on three challenging datasets: Opportunity, Hand Gesture, and our new dataset.
