Cori Tymoszek

DashCam Pay: A System for In-vehicle Payments Using Face and Voice

Apr 08, 2020

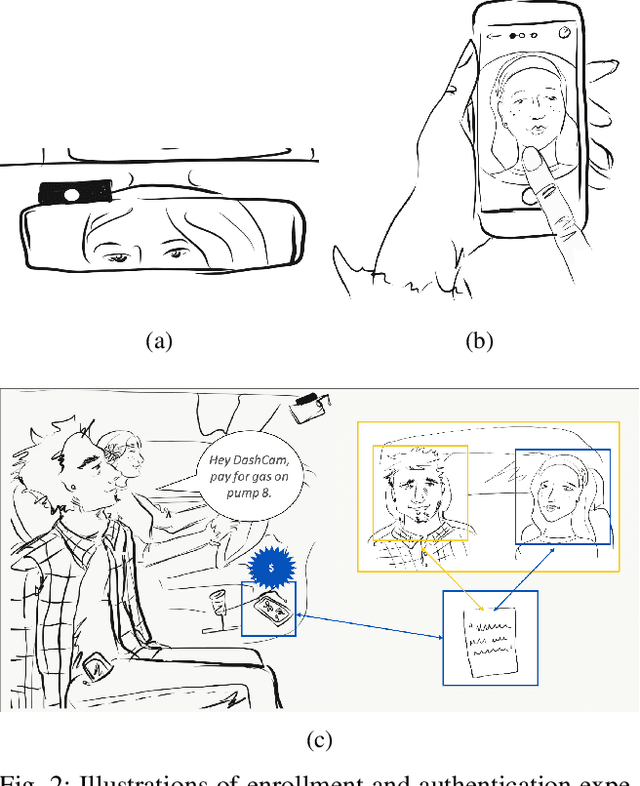

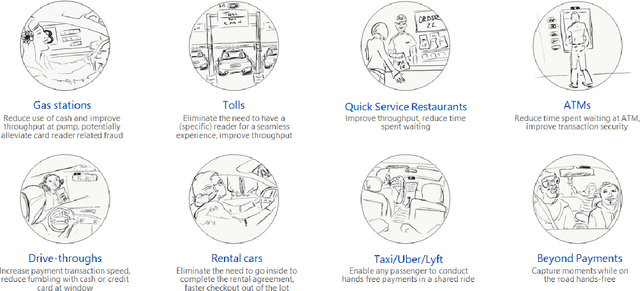

Abstract: We present an open-loop system, called DashCam Pay, that enables in-vehicle payments using face and voice biometrics. The system uses a plug-and-play device (dashcam) mounted in the vehicle to capture face images and voice commands of passengers. The dashcam is connected to the mobile devices of the passengers sitting in the vehicle, and uses privacy-preserving biometric comparison techniques to compare the biometric data it captures with the biometric data enrolled on the users' mobile devices to determine the payer. Once the payer is verified, payment is initiated via the payer's mobile device. For an initial feasibility analysis, we collected data from 20 different subjects at two different sites using a commercially available dashcam, and evaluated open-source biometric algorithms on the collected data. Subsequently, we built an Android prototype of the proposed system using open-source software packages to demonstrate its utility in facilitating secure in-vehicle payments. DashCam Pay can be integrated by either dashcam or vehicle manufacturers to enable open-loop in-vehicle payments. We also discuss the applicability of the system to other payment scenarios, such as in-store payments.

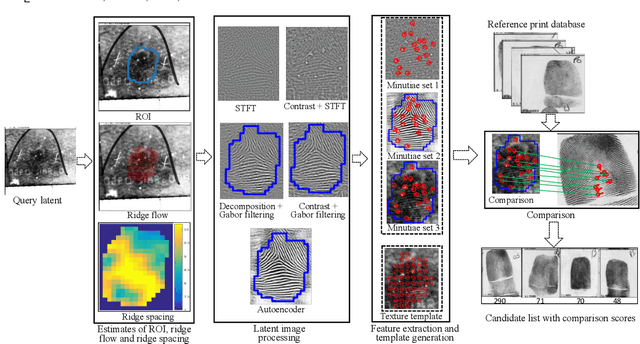

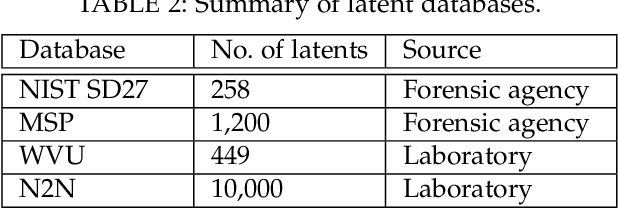

End-to-End Latent Fingerprint Search

Dec 26, 2018

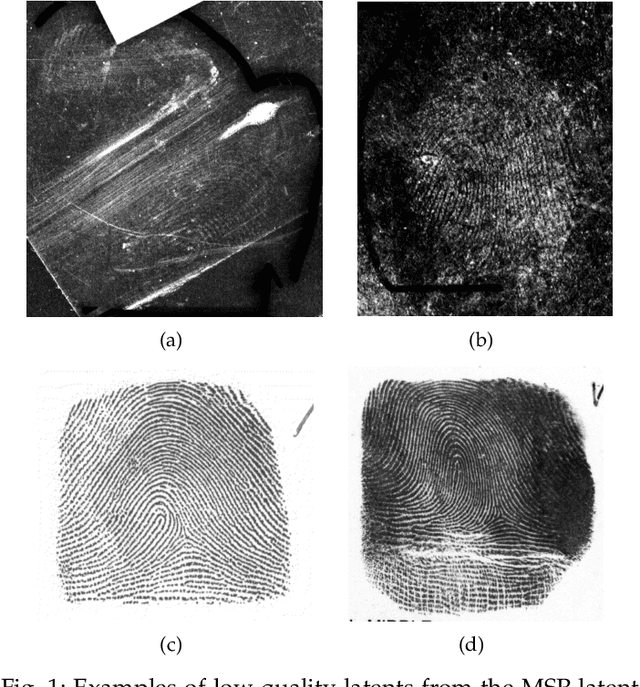

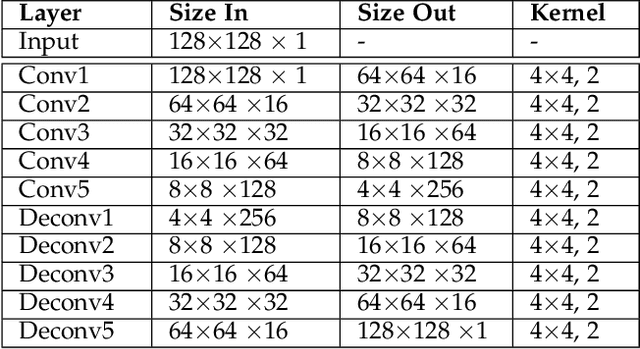

Abstract: Latent fingerprints are one of the most important and widely used sources of evidence in law enforcement and forensic agencies. Yet the performance of state-of-the-art latent recognition systems is far from satisfactory, and they often require manual markups to boost latent search performance. Further, commercial off-the-shelf (COTS) systems are proprietary and do not output the true comparison scores between a latent and reference prints, which are needed to conduct quantitative evidential analysis. We present an end-to-end latent fingerprint search system that includes automated region of interest (ROI) cropping, latent image preprocessing, feature extraction, and feature comparison, and outputs a candidate list. Two separate minutiae extraction models provide complementary minutiae templates. To compensate for the small number of minutiae in small-area and poor-quality latents, a virtual minutiae set is generated to construct a texture template. A 96-dimensional descriptor is extracted for each minutia from its neighborhood. For computational efficiency, the descriptor length for virtual minutiae is further reduced to 16 using product quantization. Our end-to-end system is evaluated on four latent databases: NIST SD27 (258 latents), MSP (1,200 latents), WVU (449 latents), and N2N (10,000 latents), against a background set of 100K rolled prints that includes the true rolled mates of the latents, with rank-1 retrieval rates of 65.7%, 69.4%, 65.5%, and 7.6%, respectively. A multi-core solution implemented on 24 cores achieves 1 ms per latent-to-rolled comparison.
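To make the product quantization step concrete, the following is a minimal sketch, not the authors' implementation. It assumes the 96-dimensional descriptor is split into 16 contiguous sub-vectors of 6 dimensions, each replaced by the index of its nearest centroid in a per-subspace codebook, so that 16 small integers stand in for 96 floats. The abstract does not state the sub-vector layout or the codebook size; the values `M = 16` and `K = 16`, the random training data, and all function names are illustrative assumptions.

```python
import random

def split(vec, m):
    """Split a vector into m equal-length contiguous sub-vectors."""
    d = len(vec) // m
    return [vec[i * d:(i + 1) * d] for i in range(m)]

def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(sub, centroids):
    """Index of the centroid closest to the sub-vector."""
    return min(range(len(centroids)), key=lambda c: sq_dist(sub, centroids[c]))

def train_codebooks(train, m, k, iters=5, seed=0):
    """Run a few k-means iterations independently in each of the m subspaces."""
    rng = random.Random(seed)
    codebooks = []
    for s in range(m):
        subs = [split(v, m)[s] for v in train]
        centroids = [list(c) for c in rng.sample(subs, k)]
        for _ in range(iters):
            buckets = [[] for _ in range(k)]
            for sv in subs:
                buckets[nearest(sv, centroids)].append(sv)
            for j, bucket in enumerate(buckets):
                if bucket:  # keep the old centroid if nothing was assigned to it
                    centroids[j] = [sum(col) / len(bucket) for col in zip(*bucket)]
        codebooks.append(centroids)
    return codebooks

def encode(vec, codebooks):
    """Replace each sub-vector by the index of its nearest centroid."""
    subs = split(vec, len(codebooks))
    return [nearest(sv, cb) for sv, cb in zip(subs, codebooks)]

def decode(codes, codebooks):
    """Approximate the original vector by concatenating the chosen centroids."""
    out = []
    for c, cb in zip(codes, codebooks):
        out.extend(cb[c])
    return out

# Illustrative parameters (assumptions, not taken from the abstract).
D, M, K = 96, 16, 16
rng = random.Random(1)
train = [[rng.gauss(0.0, 1.0) for _ in range(D)] for _ in range(200)]

codebooks = train_codebooks(train, M, K)
codes = encode(train[0], codebooks)   # 16 integers, one per sub-vector
approx = decode(codes, codebooks)     # 96-dimensional reconstruction
```

With a codebook of 256 centroids per subspace, each code would fit in one byte, so a descriptor would occupy 16 bytes. Comparisons can then be run on the compact codes through precomputed centroid distance tables rather than on the full descriptors.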

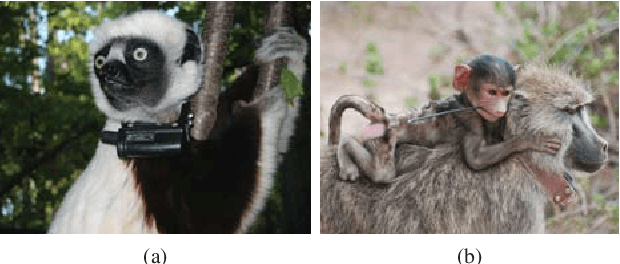

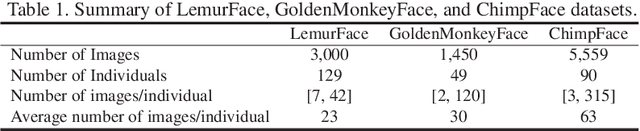

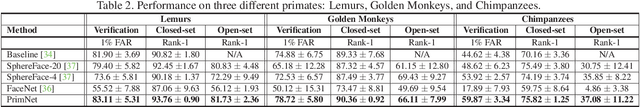

Face Recognition: Primates in the Wild

Apr 24, 2018

Abstract: We present a new method of primate face recognition, and evaluate this method on several endangered primates, including golden monkeys, lemurs, and chimpanzees. The three datasets contain a total of 11,637 images of 280 individual primates from 14 species. Primate face recognition performance is evaluated using four systems: two existing state-of-the-art open-source systems, (i) FaceNet and (ii) SphereFace; (iii) a lemur face recognition system from the literature; and (iv) our new convolutional neural network (CNN) architecture called PrimNet. Three recognition scenarios are considered: verification (1:1 comparison), and both open-set and closed-set identification (1:N search). We demonstrate that PrimNet outperforms all of the other systems in all three scenarios for all primate species tested. Finally, we implement this recognition system as an Android application to assist primate researchers and conservationists with individual recognition of primates in the wild.
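The three recognition scenarios named in the abstract differ only in how comparison scores are used, which a short sketch can illustrate. This is not PrimNet: it assumes each face has already been mapped to an embedding vector, and it uses cosine similarity with a fixed threshold as the comparison rule. The toy three-dimensional embeddings, the gallery names, and the threshold value are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def verify(probe, claimed, threshold):
    """Verification (1:1): does the probe match one claimed identity?"""
    return cosine(probe, claimed) >= threshold

def identify_closed(probe, gallery):
    """Closed-set identification (1:N): the probe is assumed to be enrolled,
    so the best-scoring gallery identity is always returned."""
    return max(gallery, key=lambda name: cosine(probe, gallery[name]))

def identify_open(probe, gallery, threshold):
    """Open-set identification (1:N): the probe may be unenrolled, so the
    best match is rejected (None) when its score falls below the threshold."""
    best = identify_closed(probe, gallery)
    return best if cosine(probe, gallery[best]) >= threshold else None

# Hypothetical gallery of enrolled individuals with toy embeddings.
gallery = {
    "lemur_a": [1.0, 0.0, 0.0],
    "chimp_b": [0.0, 1.0, 0.0],
}
known_probe = [0.9, 0.1, 0.0]    # close to lemur_a
unknown_probe = [0.1, 0.2, 1.0]  # not close to anyone enrolled
THRESHOLD = 0.8                  # illustrative value

is_match = verify(known_probe, gallery["lemur_a"], THRESHOLD)
closed_known = identify_closed(known_probe, gallery)
closed_unknown = identify_closed(unknown_probe, gallery)
open_unknown = identify_open(unknown_probe, gallery, THRESHOLD)
```

In this sketch, closed-set search still names an enrolled individual for the unknown probe, while open-set search returns `None`. That difference is why open-set identification is the harder and more realistic setting for field use, where unenrolled animals are likely to appear.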
