Constantine Nakos

Interactively Diagnosing Errors in a Semantic Parser

Jul 08, 2024

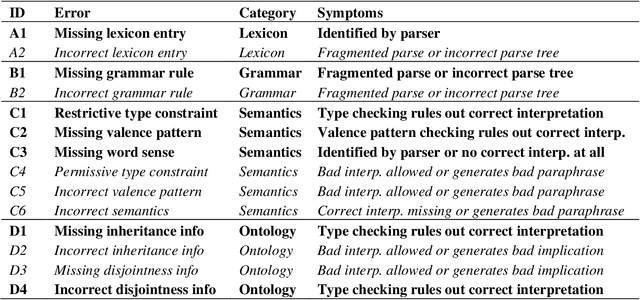

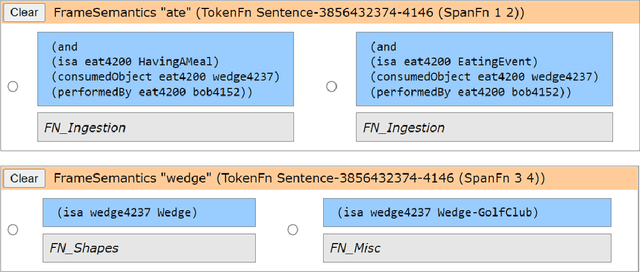

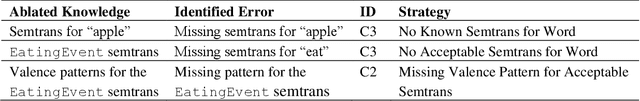

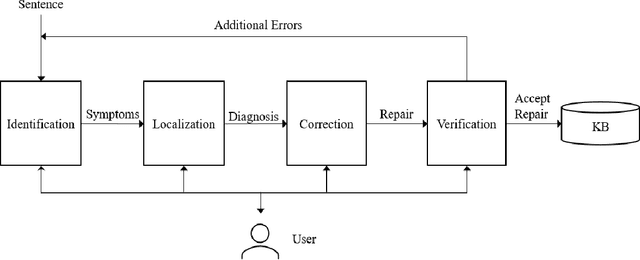

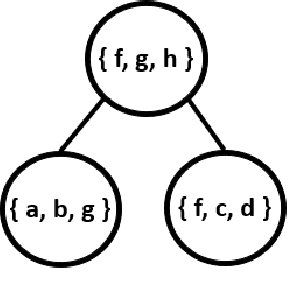

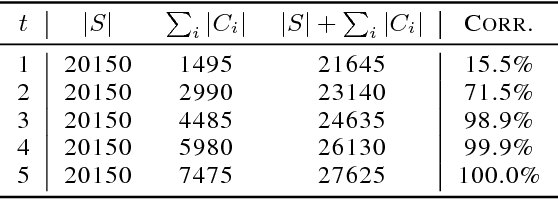

Abstract: Hand-curated natural language systems provide an inspectable, correctable alternative to language systems based on machine learning, but maintaining them requires considerable effort and expertise. Interactive Natural Language Debugging (INLD) aims to lessen this burden by casting debugging as a reasoning problem, asking the user a series of questions to diagnose and correct errors in the system's knowledge. In this paper, we present work in progress on an interactive error diagnosis system for the CNLU semantic parser. We show how the first two stages of the INLD pipeline (symptom identification and error localization) can be cast as a model-based diagnosis problem, demonstrate our system's ability to diagnose semantic errors on synthetic examples, and discuss design challenges and frontiers for future work.

Knowledge Management in the Companion Cognitive Architecture

Jul 08, 2024

Abstract: One of the fundamental aspects of cognitive architectures is their ability to encode and manipulate knowledge. Without a consistent, well-designed, and scalable knowledge management scheme, an architecture will be unable to move past toy problems and tackle the broader problems of cognition. In this paper, we document some of the challenges we have faced in developing the knowledge stack for the Companion cognitive architecture and discuss the tools, representations, and practices we have developed to overcome them. We also lay out a series of potential next steps that will allow Companion agents to play a greater role in managing their own knowledge. It is our hope that these observations will prove useful to other cognitive architecture developers facing similar challenges.

Neural Analogical Matching

Apr 27, 2020

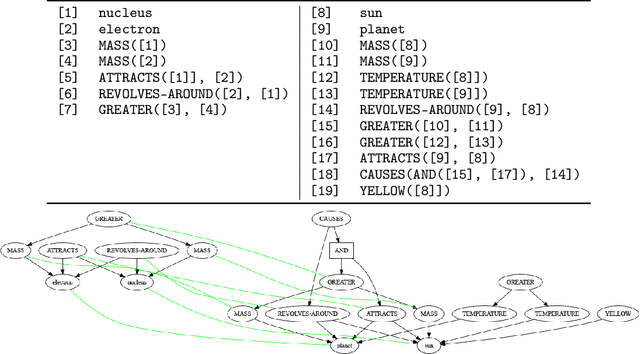

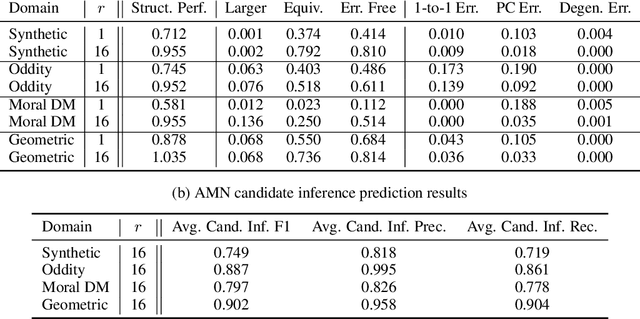

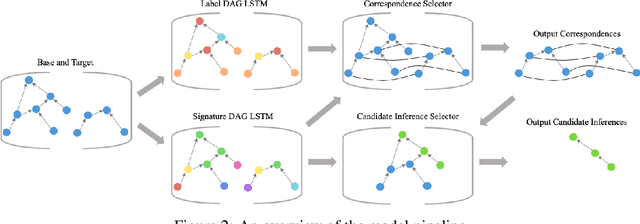

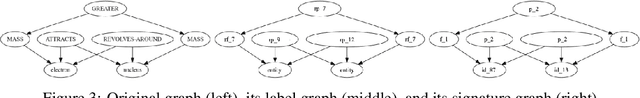

Abstract: Analogy is core to human cognition. It allows us to solve problems based on prior experience, it governs the way we conceptualize new information, and it even influences our visual perception. The importance of analogy to humans has made it an active area of research in the broader field of artificial intelligence, resulting in data-efficient models that learn and reason in human-like ways. While analogy and deep learning have generally been studied independently of one another, the integration of the two lines of research seems like a promising step towards more robust and efficient learning techniques. As part of the first steps towards such an integration, we introduce the Analogical Matching Network: a neural architecture that learns to produce analogies between structured, symbolic representations that are largely consistent with the principles of Structure-Mapping Theory.

High-Fidelity Vector Space Models of Structured Data

Jan 15, 2019

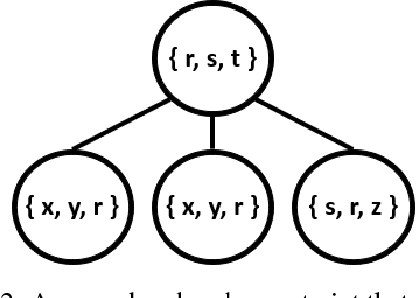

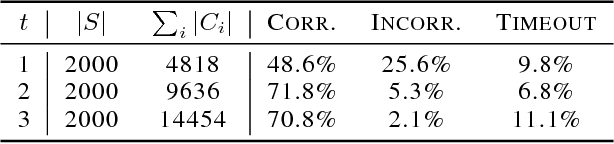

Abstract: Machine learning systems regularly deal with structured data in real-world applications. Unfortunately, such data has been difficult to faithfully represent in a way that most machine learning techniques would expect, i.e. as a real-valued vector of a fixed, pre-specified size. In this work, we introduce a novel approach that compiles structured data into a satisfiability problem which has in its set of solutions at least (and often only) the input data. The satisfiability problem is constructed from constraints which are generated automatically a priori from a given signature, thus trivially allowing for a bag-of-words-esque vector representation of the input to be constructed. The method is demonstrated in two areas, automated reasoning and natural language processing, where it is shown to produce vector representations of natural-language sentences and first-order logic clauses that can be precisely translated back to their original, structured input forms.
