Interactively Diagnosing Errors in a Semantic Parser


Jul 08, 2024

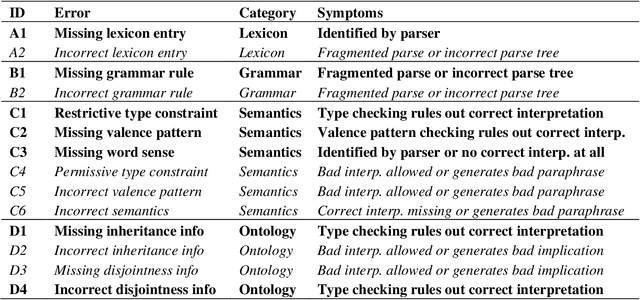

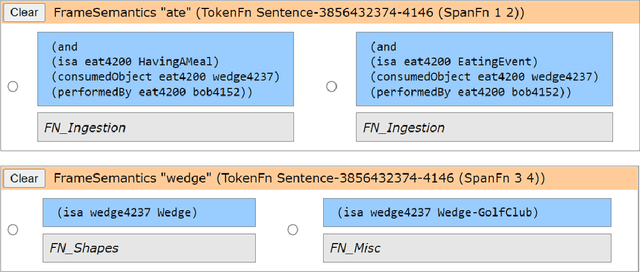

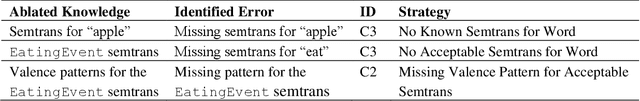

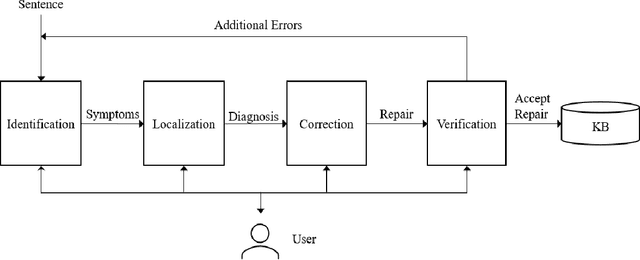

Hand-curated natural language systems provide an inspectable, correctable alternative to language systems based on machine learning, but maintaining them requires considerable effort and expertise. Interactive Natural Language Debugging (INLD) aims to lessen this burden by casting debugging as a reasoning problem, asking the user a series of questions to diagnose and correct errors in the system's knowledge. In this paper, we present work in progress on an interactive error diagnosis system for the CNLU semantic parser. We show how the first two stages of the INLD pipeline (symptom identification and error localization) can be cast as a model-based diagnosis problem, demonstrate our system's ability to diagnose semantic errors on synthetic examples, and discuss design challenges and frontiers for future work.
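The abstract does not describe how CNLU's knowledge is represented or which diagnosis algorithm the system uses. As a rough illustration of what "model-based diagnosis" means for error localization, here is a minimal sketch of the classic consistency-based formulation (Reiter-style): each piece of parser knowledge is treated as a component that may be faulty, each observed symptom yields a conflict set (components that cannot all be correct), and the candidate diagnoses are the minimal sets of components that intersect every conflict. The function name `minimal_diagnoses` and the component labels (`lex:bank/finance`, `rule:pp-attach`, etc.) are hypothetical, and the brute-force search is exponential; it is not the authors' implementation.

```python
from itertools import combinations


def minimal_diagnoses(components, conflicts):
    """Return all minimal hitting sets of `conflicts` (consistency-based diagnoses).

    components: iterable of (hypothetical) knowledge-base items, e.g. lexicon entries.
    conflicts: list of sets; each set holds components that cannot all be correct,
               because together they produced an observed wrong parse (a symptom).
    """
    comps = sorted(components)
    diagnoses = []
    # Enumerate candidates smallest-first so the first hits found are minimal.
    for size in range(len(comps) + 1):
        for candidate in combinations(comps, size):
            cand = set(candidate)
            # Skip supersets of an already-found diagnosis: they are not minimal.
            if any(d <= cand for d in diagnoses):
                continue
            # A diagnosis must blame at least one component in every conflict.
            if all(cand & set(conflict) for conflict in conflicts):
                diagnoses.append(cand)
    return diagnoses


if __name__ == "__main__":
    # Hypothetical example: two symptoms implicate overlapping knowledge items.
    components = {"lex:bank/river", "lex:bank/finance", "rule:np-attach", "rule:pp-attach"}
    conflicts = [
        {"lex:bank/finance", "rule:pp-attach"},
        {"lex:bank/finance", "rule:np-attach"},
    ]
    for diagnosis in minimal_diagnoses(components, conflicts):
        print(sorted(diagnosis))
```

In this toy example there are two minimal diagnoses: the single lexicon entry `lex:bank/finance`, or both attachment rules together. An interactive debugger in the INLD spirit could then choose questions for the user that best discriminate between such competing diagnoses.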
