Connor Basich

University of Massachusetts Amherst

Competence-Aware Path Planning via Introspective Perception

Sep 28, 2021

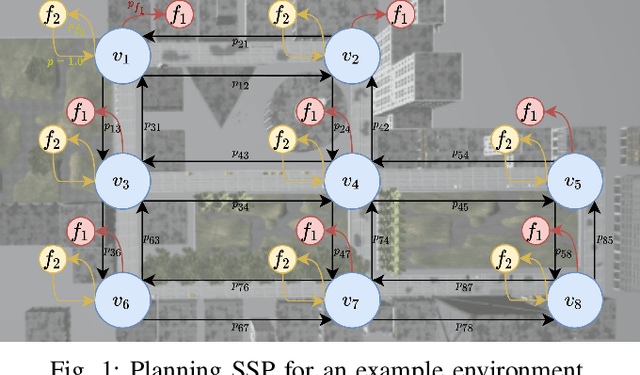

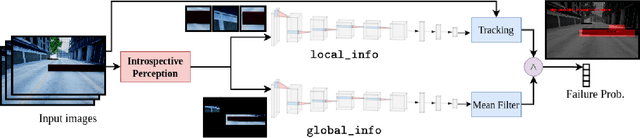

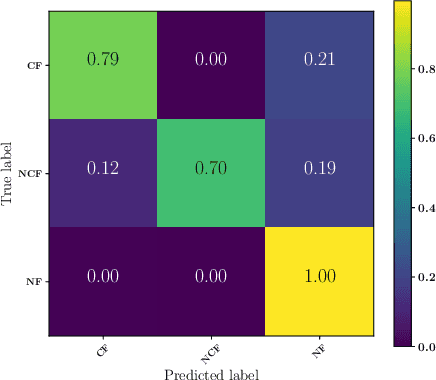

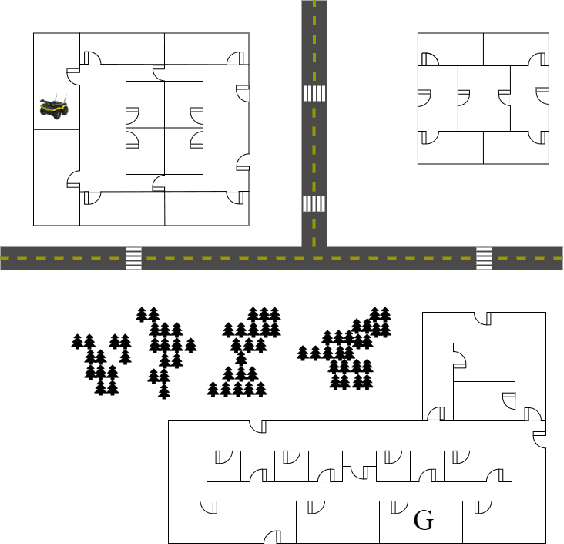

Abstract: Robots deployed in the real world over extended periods of time need to reason about unexpected failures, learn to predict them, and proactively take actions to avoid future failures. Existing approaches for competence-aware planning are either model-based, requiring explicit enumeration of known failure modes, or purely statistical, using state- and location-specific failure statistics to infer competence. We instead propose a structured model-free approach to competence-aware planning by reasoning about plan execution failures due to errors in perception, without requiring a priori enumeration of failure modes or location-specific failure statistics. We introduce competence-aware path planning via introspective perception (CPIP), a Bayesian framework to iteratively learn and exploit task-level competence in novel deployment environments. CPIP factorizes the competence-aware planning problem into two components. First, perception errors are learned in a model-free and location-agnostic setting via introspective perception prior to deployment in novel environments. Second, during actual deployments, the prediction of task-level failures is learned in a context-aware setting. Experiments in simulation show that the proposed CPIP approach outperforms the frequentist baseline in multiple mobile robot tasks, and the approach is further validated via real robot experiments in an environment with perceptually challenging obstacles and terrain.
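The contrast between a Bayesian estimate and a frequentist baseline can be illustrated with a minimal sketch. This is not the CPIP method itself: it is a generic Beta-Bernoulli update, where the class `BetaFailureModel`, the prior pseudo-counts, and the observed outcomes are all hypothetical values chosen for illustration. The prior stands in for knowledge acquired before deployment, and the updates stand in for failures observed during deployment.

```python
from dataclasses import dataclass


@dataclass
class BetaFailureModel:
    """Beta-Bernoulli estimate of a failure probability (illustrative only)."""
    alpha: float  # prior pseudo-count of failures (assumed value)
    beta: float   # prior pseudo-count of successes (assumed value)

    def update(self, failed: bool) -> None:
        # Each observed traversal adds one count to the matching side.
        if failed:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    def mean(self) -> float:
        # Posterior mean of the failure probability.
        return self.alpha / (self.alpha + self.beta)


def frequentist_estimate(failures: int, trials: int) -> float:
    """Plain failure frequency; falls back to 0.0 when nothing was observed."""
    return failures / trials if trials else 0.0


# Hypothetical prior: roughly a 20% failure rate, worth 10 pseudo-observations.
model = BetaFailureModel(alpha=2.0, beta=8.0)

# Hypothetical deployment outcomes: one failure in three traversals.
outcomes = [True, False, False]
for failed in outcomes:
    model.update(failed)

bayes = model.mean()
freq = frequentist_estimate(sum(outcomes), len(outcomes))
print(f"Bayesian posterior mean: {bayes:.3f}")
print(f"Frequentist estimate:    {freq:.3f}")
```

With only a few observations the frequency estimate swings sharply on a single failure, while the posterior mean stays closer to the prior and moves gradually as evidence accumulates.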

Improving Competence for Reliable Autonomy

Jul 23, 2020

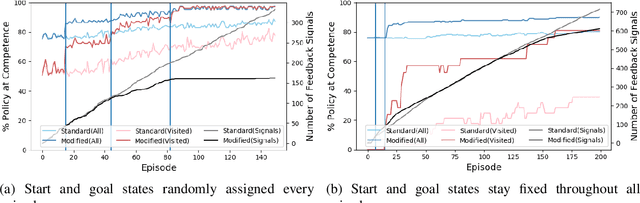

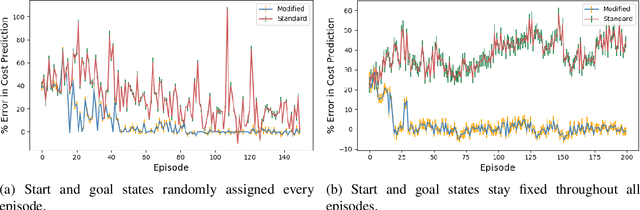

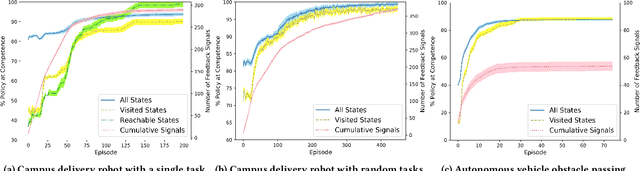

Abstract: Given the complexity of real-world, unstructured domains, it is often impossible or impractical to design models that include every feature needed to handle all possible scenarios that an autonomous system may encounter. For an autonomous system to be reliable in such domains, it should have the ability to improve its competence online. In this paper, we propose a method for improving the competence of a system over the course of its deployment. We specifically focus on a class of semi-autonomous systems known as competence-aware systems that model their own competence -- the optimal extent of autonomy to use in any given situation -- and learn this competence over time from feedback received through interactions with a human authority. Our method exploits such feedback to identify important state features missing from the system's initial model, and incorporates them into its state representation. The result is an agent that better predicts human involvement, leading to improvements in its competence and reliability, and as a result, its overall performance.

* In Proceedings AREA 2020, arXiv:2007.11260

Learning to Optimize Autonomy in Competence-Aware Systems

Mar 17, 2020

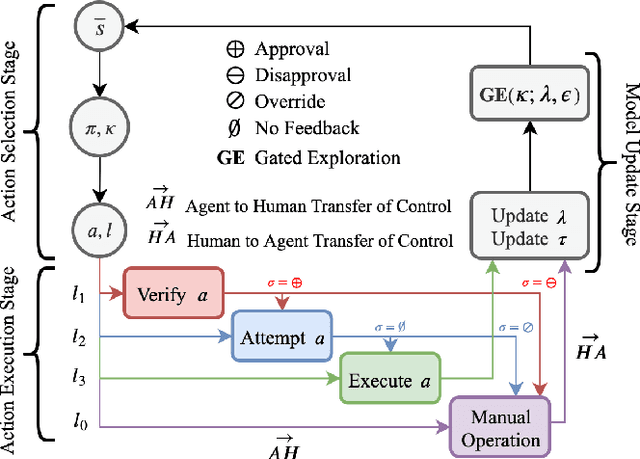

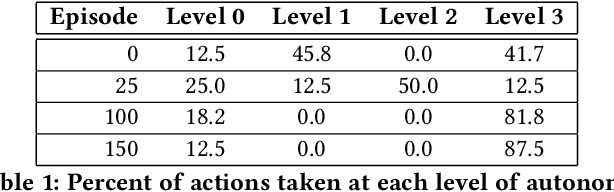

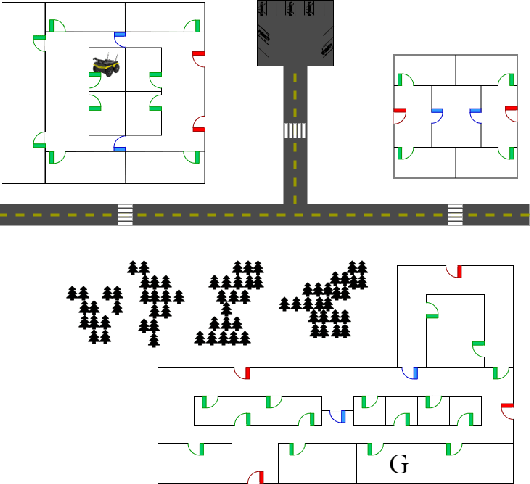

Abstract: Interest in semi-autonomous systems (SAS) is growing rapidly as a paradigm to deploy autonomous systems in domains that require occasional reliance on humans. This paradigm allows service robots or autonomous vehicles to operate at varying levels of autonomy and offer safety in situations that require human judgment. We propose an introspective model of autonomy that is learned and updated online through experience and dictates the extent to which the agent can act autonomously in any given situation. We define a competence-aware system (CAS) that explicitly models its own proficiency at different levels of autonomy and the available human feedback. A CAS learns to adjust its level of autonomy based on experience to maximize overall efficiency, factoring in the cost of human assistance. We analyze the convergence properties of CAS and provide experimental results for robot delivery and autonomous driving domains that demonstrate the benefits of the approach.
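The trade-off between autonomy and the cost of human assistance can be sketched as a simple expected-cost comparison. This is an assumption-laden illustration, not the CAS model from the paper: the level names, success probabilities, and cost values below are hypothetical, and the function `best_level` is introduced only for this example.

```python
from typing import Dict


def expected_cost(p_success: float, action_cost: float,
                  human_cost: float, failure_cost: float) -> float:
    """Expected cost of acting at one autonomy level (illustrative formula)."""
    return action_cost + human_cost + (1.0 - p_success) * failure_cost


def best_level(levels: Dict[str, Dict[str, float]], failure_cost: float) -> str:
    """Return the autonomy level with the lowest expected cost."""
    return min(
        levels,
        key=lambda name: expected_cost(failure_cost=failure_cost, **levels[name]),
    )


# Hypothetical autonomy levels; p_success would be learned from human feedback.
levels = {
    "human_control": {"p_success": 1.00, "action_cost": 1.0, "human_cost": 5.0},
    "supervised":    {"p_success": 0.95, "action_cost": 1.0, "human_cost": 2.0},
    "unsupervised":  {"p_success": 0.70, "action_cost": 1.0, "human_cost": 0.0},
}

before = best_level(levels, failure_cost=10.0)

# After more experience, suppose the estimated proficiency without
# supervision improves (assumed value), which shifts the best choice.
levels["unsupervised"]["p_success"] = 0.90
after = best_level(levels, failure_cost=10.0)

print(before, "->", after)
```

In this toy setting the system prefers supervision while its estimated unsupervised proficiency is low, and moves to full autonomy once that estimate is high enough to outweigh the saved human cost.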
