Claudionor Coelho

PSL is Dead. Long Live PSL

May 27, 2022

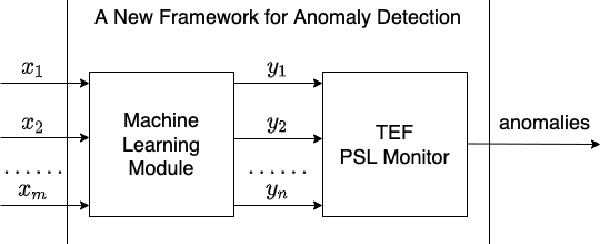

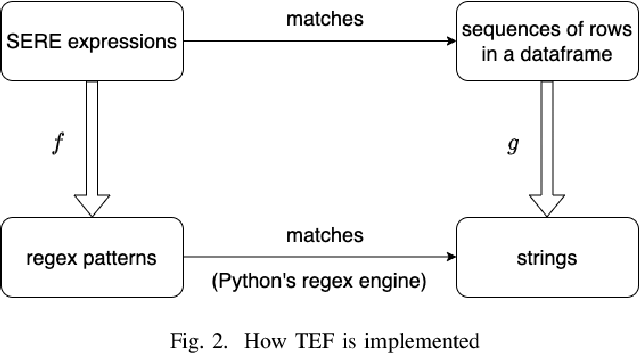

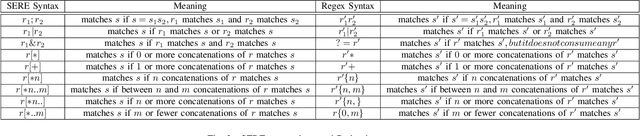

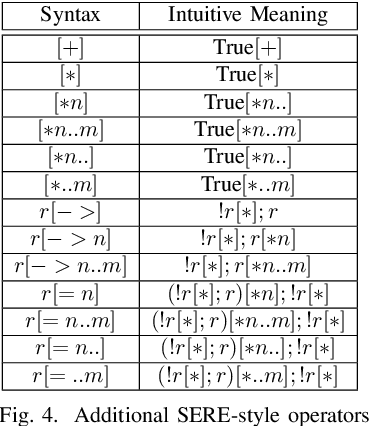

Abstract:Property Specification Language (PSL) is a form of temporal logic that has been mainly used in discrete domains (e.g. formal hardware verification). In this paper, we show that by merging machine learning techniques with PSL monitors, we can extend PSL to work on continuous domains. We apply this technique in machine learning-based anomaly detection to analyze scenarios of real-time streaming events from continuous variables in order to detect abnormal behaviors of a system. By using machine learning with formal models, we leverage the strengths of both machine learning methods and formal semantics of time. On one hand, machine learning techniques can produce distributions on continuous variables, where abnormalities can be captured as deviations from the distributions. On the other hand, formal methods can characterize discrete temporal behaviors and relations that cannot be easily learned by machine learning techniques. Interestingly, the anomalies detected by machine learning and the underlying time representation used are discrete events. We implemented a temporal monitoring package (TEF) that operates in conjunction with normal data science packages for anomaly detection machine learning systems, and we show that TEF can be used to perform accurate interpretation of temporal correlation between events.

Enabling Binary Neural Network Training on the Edge

Feb 10, 2021

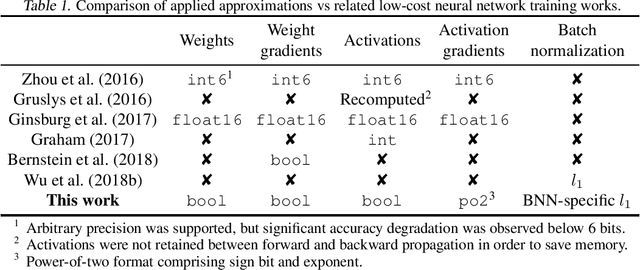

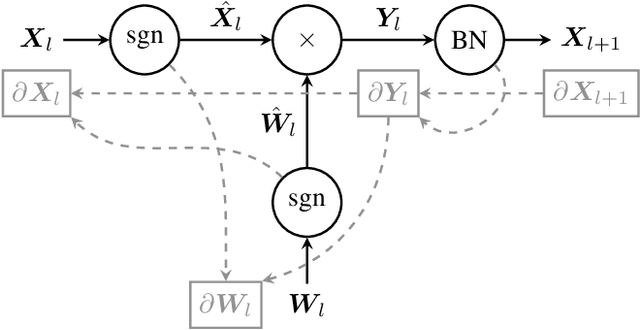

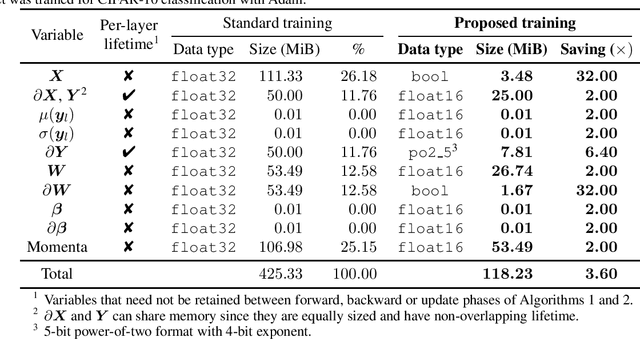

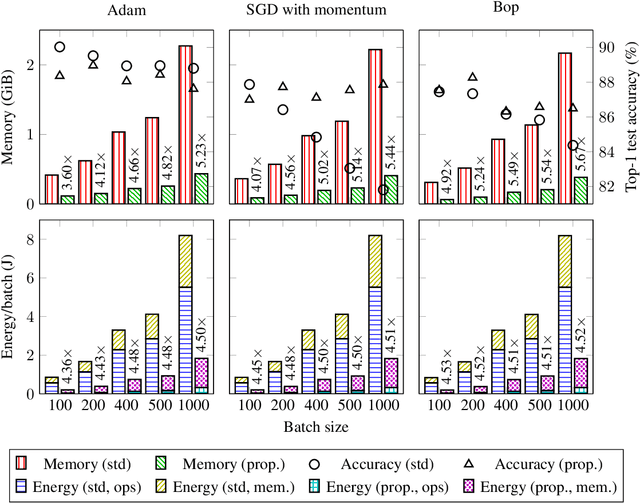

Abstract:The ever-growing computational demands of increasingly complex machine learning models frequently necessitate the use of powerful cloud-based infrastructure for their training. Binary neural networks are known to be promising candidates for on-device inference due to their extreme compute and memory savings over higher-precision alternatives. In this paper, we demonstrate that they are also strongly robust to gradient quantization, thereby making the training of modern models on the edge a practical reality. We introduce a low-cost binary neural network training strategy exhibiting sizable memory footprint reductions and energy savings vs Courbariaux & Bengio's standard approach. Against the latter, we see coincident memory requirement and energy consumption drops of 2--6$\times$, while reaching similar test accuracy in comparable time, across a range of small-scale models trained to classify popular datasets. We also showcase ImageNet training of ResNetE-18, achieving a 3.12$\times$ memory reduction over the aforementioned standard. Such savings will allow for unnecessary cloud offloading to be avoided, reducing latency, increasing energy efficiency and safeguarding privacy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge