Marjorie Sayer

PSL is Dead. Long Live PSL

May 27, 2022

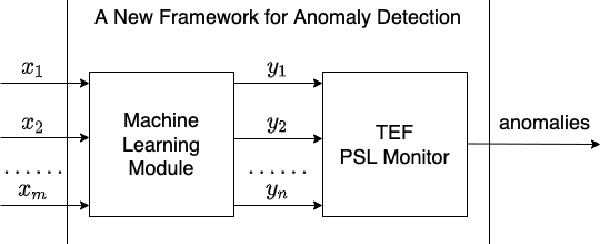

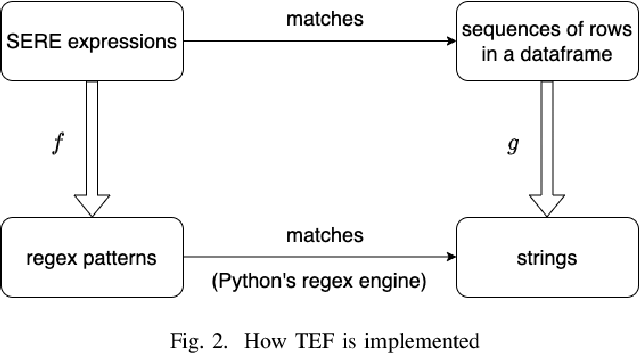

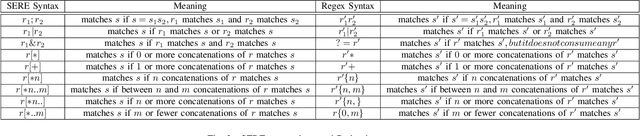

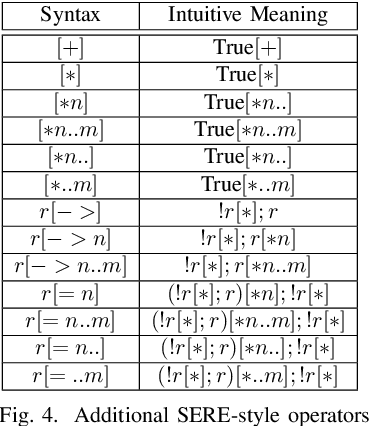

Abstract:Property Specification Language (PSL) is a form of temporal logic that has been mainly used in discrete domains (e.g. formal hardware verification). In this paper, we show that by merging machine learning techniques with PSL monitors, we can extend PSL to work on continuous domains. We apply this technique in machine learning-based anomaly detection to analyze scenarios of real-time streaming events from continuous variables in order to detect abnormal behaviors of a system. By using machine learning with formal models, we leverage the strengths of both machine learning methods and formal semantics of time. On one hand, machine learning techniques can produce distributions on continuous variables, where abnormalities can be captured as deviations from the distributions. On the other hand, formal methods can characterize discrete temporal behaviors and relations that cannot be easily learned by machine learning techniques. Interestingly, the anomalies detected by machine learning and the underlying time representation used are discrete events. We implemented a temporal monitoring package (TEF) that operates in conjunction with normal data science packages for anomaly detection machine learning systems, and we show that TEF can be used to perform accurate interpretation of temporal correlation between events.

Real-time Drift Detection on Time-series Data

Oct 12, 2021

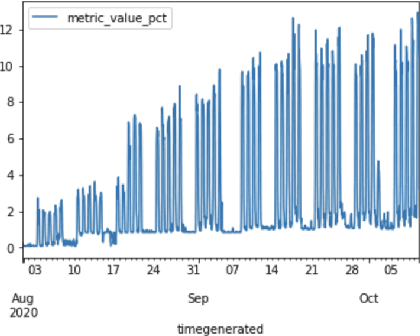

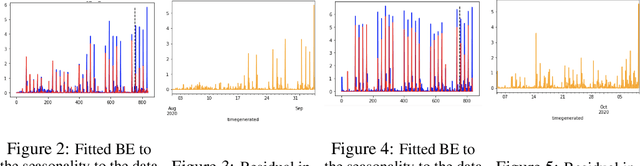

Abstract:Practical machine learning applications involving time series data, such as firewall log analysis to proactively detect anomalous behavior, are concerned with real time analysis of streaming data. Consequently, we need to update the ML models as the statistical characteristics of such data may shift frequently with time. One alternative explored in the literature is to retrain models with updated data whenever the models accuracy is observed to degrade. However, these methods rely on near real time availability of ground truth, which is rarely fulfilled. Further, in applications with seasonal data, temporal concept drift is confounded by seasonal variation. In this work, we propose an approach called Unsupervised Temporal Drift Detector or UTDD to flexibly account for seasonal variation, efficiently detect temporal concept drift in time series data in the absence of ground truth, and subsequently adapt our ML models to concept drift for better generalization.

Log2NS: Enhancing Deep Learning Based Analysis of Logs With Formal to Prevent Survivorship Bias

May 29, 2021

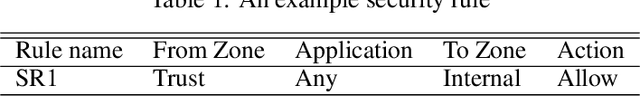

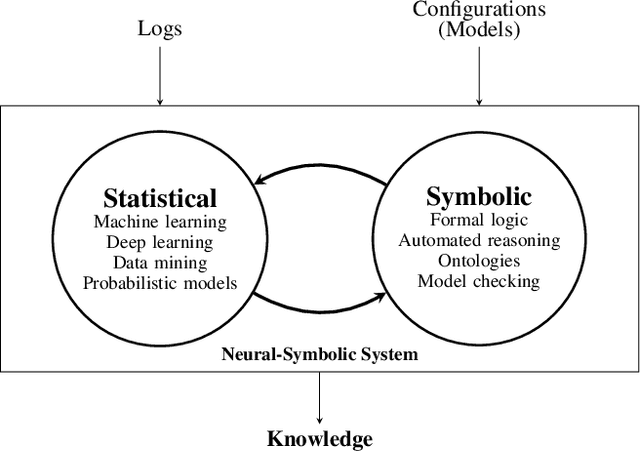

Abstract:Analysis of large observational data sets generated by a reactive system is a common challenge in debugging system failures and determining their root cause. One of the major problems is that these observational data suffer from survivorship bias. Examples include analyzing traffic logs from networks, and simulation logs from circuit design. In such applications, users want to detect non-spurious correlations from observational data and obtain actionable insights about them. In this paper, we introduce log to Neuro-symbolic (Log2NS), a framework that combines probabilistic analysis from machine learning (ML) techniques on observational data with certainties derived from symbolic reasoning on an underlying formal model. We apply the proposed framework to network traffic debugging by employing the following steps. To detect patterns in network logs, we first generate global embedding vector representations of entities such as IP addresses, ports, and applications. Next, we represent large log flow entries as clusters that make it easier for the user to visualize and detect interesting scenarios that will be further analyzed. To generalize these patterns, Log2NS provides an ability to query from static logs and correlation engines for positive instances, as well as formal reasoning for negative and unseen instances. By combining the strengths of deep learning and symbolic methods, Log2NS provides a very powerful reasoning and debugging tool for log-based data. Empirical evaluations on a real internal data set demonstrate the capabilities of Log2NS.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge