Chunmei Ma

Filter Pruning For CNN With Enhanced Linear Representation Redundancy

Oct 10, 2023

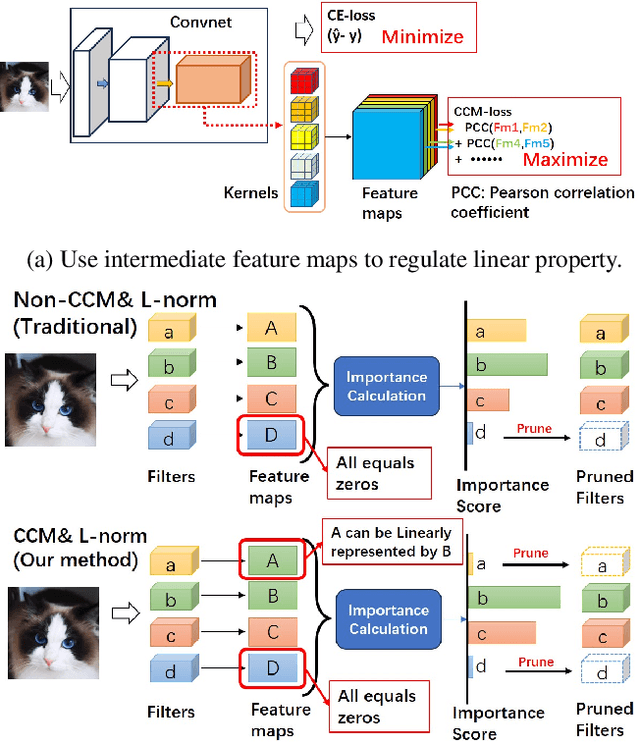

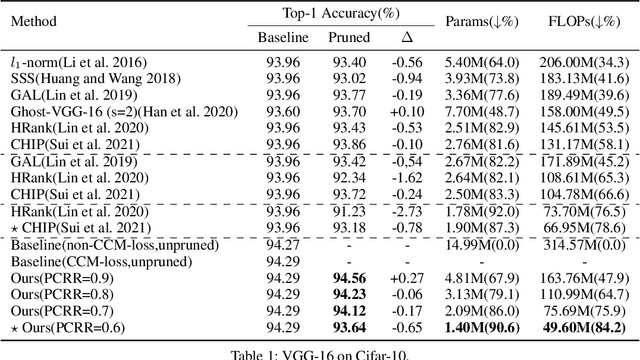

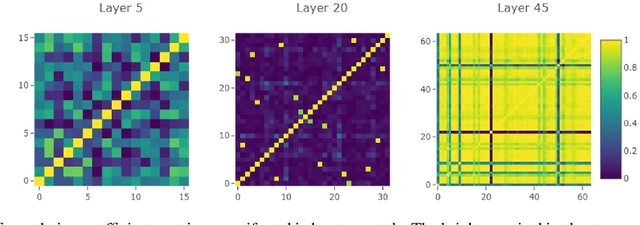

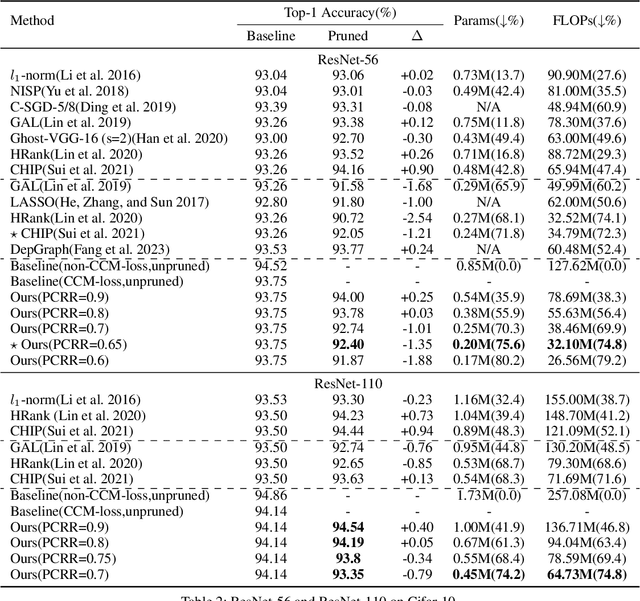

Abstract: Structured network pruning outperforms non-structured methods because it can take advantage of well-developed parallel computing techniques. In this paper, we propose a new structured pruning method. First, to create more structured redundancy, we present a data-driven loss term, named CCM-loss, calculated from the correlation coefficient matrix of different feature maps in the same layer. This loss term encourages the neural network to learn stronger linear representation relations between feature maps during training from scratch, so that more homogeneous parts can be removed later during pruning. CCM-loss provides another general mathematical tool, besides L*-norm regularization (which concentrates on generating zeros), for generating redundancy, but of a different kind. Furthermore, we design a matching channel selection strategy based on principal component analysis to exploit the full potential of CCM-loss. Our new strategy focuses mainly on the consistency and integrity of the information flow in the network. Instead of empirically hard-coding the retain ratio for each layer, our channel selection strategy dynamically adjusts each layer's retain ratio according to the specific circumstances of a pre-trained model, pushing the prune ratio to the limit. Notably, on the CIFAR-10 dataset, our method achieves 93.64% accuracy for pruned VGG-16 with only 1.40M parameters and 49.60M FLOPs; the pruned ratios for parameters and FLOPs are 90.6% and 84.2%, respectively. For ResNet-50 trained on the ImageNet dataset, our approach achieves 42.8% and 47.3% storage and computation reductions, respectively, with an accuracy of 76.23%. Our code is available at https://github.com/Bojue-Wang/CCM-LRR.
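As a rough illustration of the two ingredients, the sketch below computes a layer's correlation coefficient matrix from flattened feature maps, a CCM-style penalty, and a PCA-style count of channels to retain from that matrix's eigenvalues. The abstract does not give the exact form of CCM-loss or of the channel selection rule, so `ccm_style_loss` (1 minus the mean absolute off-diagonal correlation) and the `energy` threshold in `retain_count` are hypothetical; a real training loop would compute the loss on framework tensors so that it can be back-propagated.

```python
import math


def correlation_matrix(channels):
    """Pearson correlation between the C feature maps of one layer.

    `channels` is a list of C equal-length lists, each a flattened feature map
    (for example, H*W activations concatenated over a mini-batch).
    """
    n = len(channels[0])
    centered = []
    for ch in channels:
        mean = sum(ch) / n
        centered.append([v - mean for v in ch])
    norms = [math.sqrt(sum(v * v for v in ch)) for ch in centered]
    c = len(channels)
    corr = [[0.0] * c for _ in range(c)]
    for i in range(c):
        corr[i][i] = 1.0
        for j in range(i + 1, c):
            denom = norms[i] * norms[j]
            dot = sum(a * b for a, b in zip(centered[i], centered[j]))
            # A constant channel has no defined correlation; treat it as 0.
            corr[i][j] = corr[j][i] = dot / denom if denom > 0 else 0.0
    return corr


def ccm_style_loss(corr):
    """Hypothetical CCM-style penalty: 1 - mean |r_ij| over i != j.

    Minimizing it pushes feature maps toward stronger linear relations.
    """
    c = len(corr)
    if c < 2:
        return 0.0
    off = [abs(corr[i][j]) for i in range(c) for j in range(c) if i != j]
    return 1.0 - sum(off) / len(off)


def symmetric_eigenvalues(a, sweeps=50, tol=1e-12):
    """Eigenvalues of a symmetric matrix via cyclic Jacobi rotations, descending."""
    a = [row[:] for row in a]
    n = len(a)
    for _ in range(sweeps):
        if sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j) < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if abs(a[p][q]) < 1e-15:
                    continue
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.sqrt(theta * theta + 1.0))
                cs = 1.0 / math.sqrt(t * t + 1.0)
                sn = t * cs
                for k in range(n):  # A <- A J (columns p, q)
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = cs * akp - sn * akq
                    a[k][q] = sn * akp + cs * akq
                for k in range(n):  # A <- J^T A (rows p, q)
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = cs * apk - sn * aqk
                    a[q][k] = sn * apk + cs * aqk
    return sorted((a[i][i] for i in range(n)), reverse=True)


def retain_count(eigenvalues, energy=0.99):
    """Smallest number of principal components whose variance share reaches `energy`."""
    positive = [max(ev, 0.0) for ev in eigenvalues]
    total = sum(positive)
    if total <= 0.0:
        return 0
    acc = 0.0
    for k, ev in enumerate(positive, start=1):
        acc += ev
        if acc / total >= energy:
            return k
    return len(positive)


if __name__ == "__main__":
    # Channel 3 is an exact linear function of channel 1 (ch3 = 2 * ch1 + 1).
    layer = [[1, 2, 3, 4], [1, -1, -1, 1], [3, 5, 7, 9]]
    corr = correlation_matrix(layer)
    print(round(ccm_style_loss(corr), 4))  # 0.6667
    print(retain_count(symmetric_eigenvalues(corr), energy=0.99))  # 2
```

In the usage example, one of the three channels is linearly redundant, so the correlation matrix has one near-zero eigenvalue and only two components are needed to reach the threshold. This is the kind of linear redundancy the loss term is meant to amplify before pruning.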

Boundary-to-Solution Mapping for Groundwater Flows in a Toth Basin

Mar 28, 2023

Abstract: In this paper, the authors propose a new approach that uses deep learning to solve the groundwater flow equation in a Toth basin with arbitrary top and bottom topographies. Instead of traditional numerical solvers, they use a DeepONet to learn the boundary-to-solution mapping. This mapping takes the geometry of the physical domain and the boundary conditions as inputs and outputs the steady-state solution of the groundwater flow equation. To implement the DeepONet, the authors approximate the top and bottom boundaries using truncated Fourier series or piecewise linear representations. They present two implementations of the DeepONet: one in which the Toth basin is embedded in a rectangular computational domain, and another in which the Toth basin with arbitrary top and bottom boundaries is mapped into a rectangular computational domain via a nonlinear transformation. They implement the DeepONet with either a Dirichlet or a Robin boundary condition at the top and a Neumann boundary condition at the impervious bottom boundary. Using this deep-learning-enabled tool, the authors investigate how the geometries of both the top surface and the bottom impervious boundary affect the flow pattern. They find that the average slope of the top surface promotes long-distance transport, while the local curvature controls localized circulations. They also find that the slope of the bottom impervious boundary can significantly affect the long-distance transport of groundwater flows. Overall, the paper presents a new deep-learning approach to solving the groundwater flow equation that makes it possible to study how surface topography shapes groundwater flow patterns.
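A DeepONet factors an operator into a branch network, which encodes the input function sampled at fixed sensor points (here, the top and bottom boundary shapes), and a trunk network, which encodes the query coordinate; the two outputs are combined by a dot product. The pure-Python sketch below shows that forward pass with random, untrained weights, a truncated-Fourier top boundary, and one possible map of the basin onto a rectangle. The sigma-type transformation xi = (z - b(x)) / (t(x) - b(x)), the network sizes, and all helper names are assumptions for illustration, not the paper's implementation; training on numerical solutions and enforcing the Dirichlet, Robin, or Neumann conditions are not shown.

```python
import math
import random


def fourier_boundary(x, a0, coeffs, length):
    """Truncated Fourier series z(x) = a0 + sum_k a_k cos(2*pi*k*x/L) + b_k sin(2*pi*k*x/L)."""
    z = a0
    for k, (ak, bk) in enumerate(coeffs, start=1):
        arg = 2.0 * math.pi * k * x / length
        z += ak * math.cos(arg) + bk * math.sin(arg)
    return z


def to_reference(x, z, top, bottom):
    """Assumed sigma-type map from the physical basin to the unit-height rectangle."""
    zb, zt = bottom(x), top(x)
    if zt <= zb:
        raise ValueError("top boundary must lie above the bottom boundary")
    return x, (z - zb) / (zt - zb)


def init_mlp(sizes, rng):
    """Random weights (scaled by 1/sqrt(fan_in)) and zero biases for each layer."""
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        scale = 1.0 / math.sqrt(n_in)
        weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def mlp(layers, x):
    """Fully connected network with tanh on every hidden layer, linear output."""
    for idx, (weights, bias) in enumerate(layers):
        x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
        if idx < len(layers) - 1:
            x = [math.tanh(v) for v in x]
    return x


class TinyDeepONet:
    """Untrained DeepONet: u(y) ~ sum_k branch_k(boundary sensors) * trunk_k(y) + bias."""

    def __init__(self, n_sensors, width=16, p=8, seed=0):
        rng = random.Random(seed)
        self.branch = init_mlp([n_sensors, width, p], rng)
        self.trunk = init_mlp([2, width, p], rng)
        self.bias = 0.0

    def __call__(self, sensor_values, point):
        b = mlp(self.branch, sensor_values)
        t = mlp(self.trunk, list(point))
        return sum(bk * tk for bk, tk in zip(b, t)) + self.bias


if __name__ == "__main__":
    L, m = 1.0, 20
    xs = [i * L / (m - 1) for i in range(m)]

    def top(x):
        return fourier_boundary(x, 1.0, [(0.0, 0.05), (0.02, 0.0)], L)

    def bottom(x):
        return -0.1 * x  # hypothetical sloped impervious bottom

    sensors = [top(x) for x in xs] + [bottom(x) for x in xs]
    net = TinyDeepONet(n_sensors=len(sensors))
    point = to_reference(0.4, 0.5, top, bottom)
    print(point, net(sensors, point))
```

Because the branch input is the sampled boundary, a trained network of this shape can be queried for a new basin geometry without re-solving the PDE, which is what makes the parameter study of top and bottom topographies cheap.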

Robust Recommendation with Implicit Feedback via Eliminating the Effects of Unexpected Behaviors

Dec 21, 2021

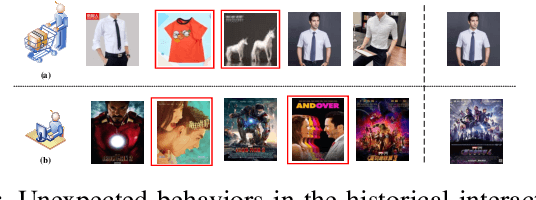

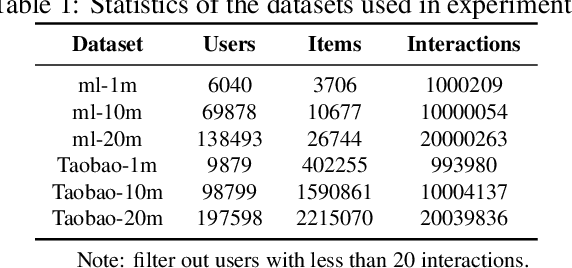

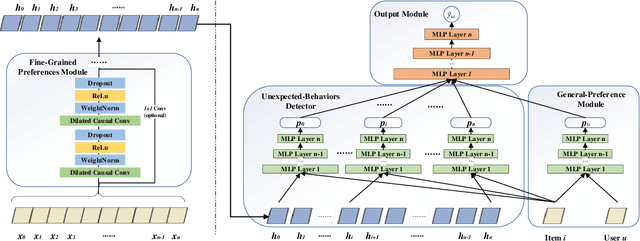

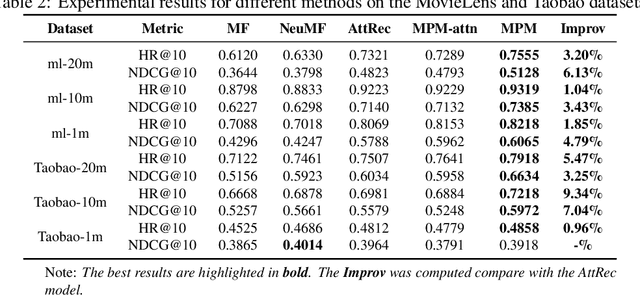

Abstract: In implicit-feedback recommendation, incorporating short-term preferences into recommender systems has attracted increasing attention in recent years. However, unexpected behaviors in historical interactions, such as clicking on items by accident, do not reflect users' inherent preferences well. Existing studies fail to model the effects of unexpected behaviors and thus achieve inferior recommendation performance. In this paper, we propose a Multi-Preferences Model (MPM) to eliminate the effects of unexpected behaviors. MPM first extracts users' instant preferences from their recent historical interactions with a fine-grained preference module. Then an unexpected-behaviors detector is trained to judge whether these instant preferences are biased by unexpected behaviors. We also integrate the user's general preference into MPM. Finally, an output module eliminates the effects of unexpected behaviors and integrates all the information to make the final recommendation. We conduct extensive experiments on two datasets, one from the movie domain and one from e-retailing, demonstrating significant improvements of our model over state-of-the-art methods. The experimental results show that MPM improves HR@10 and NDCG@10 by 3.643% and 4.107%, respectively, relative to the AttRec model on average. We publish our code at https://github.com/chenjie04/MPM/.
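HR@10 and NDCG@10 are standard top-K ranking metrics. Under the common leave-one-out protocol (assumed here, since the abstract does not state it), each user has one held-out item: HR@K is 1 when that item appears in the top K, and NDCG@K is 1/log2(rank + 1) for its 1-based rank. The sketch below computes both, plus the relative improvement used to compare against a baseline. The `fuse_preferences` gate, which down-weights the instant preference by a linear detector's probability of bias, is a hypothetical stand-in for MPM's detector and output module, not the model described in the paper.

```python
import math


def hit_ratio_at_k(ranked_items, target, k=10):
    """HR@K for leave-one-out evaluation: 1 if the held-out item is in the top K."""
    return 1.0 if target in ranked_items[:k] else 0.0


def ndcg_at_k(ranked_items, target, k=10):
    """NDCG@K with a single relevant item: 1 / log2(rank + 1), rank being 1-based."""
    top = ranked_items[:k]
    if target not in top:
        return 0.0
    return 1.0 / math.log2(top.index(target) + 2)


def relative_improvement(new, baseline):
    """Relative gain in percent of a model's metric over a baseline's."""
    return 100.0 * (new - baseline) / baseline


def sigmoid(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def fuse_preferences(instant, general, detector_w):
    """Hypothetical gate: down-weight the instant preference when a linear
    detector judges it likely to be biased by unexpected behaviors."""
    p_biased = sigmoid(sum(w * v for w, v in zip(detector_w, instant)))
    return [g + (1.0 - p_biased) * i for g, i in zip(general, instant)]


def rank_items(user_vec, item_vecs):
    """Rank item ids by dot-product score, highest first."""
    scores = {item: sum(u * e for u, e in zip(user_vec, vec)) for item, vec in item_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    items = {"film_a": [1.0, 0.0], "film_b": [0.0, 1.0], "film_c": [0.5, 0.5]}
    # The detector flags the instant preference (toward film_a) as likely accidental.
    user = fuse_preferences(instant=[1.0, 0.0], general=[0.0, 1.0], detector_w=[4.0, 0.0])
    ranking = rank_items(user, items)
    print(ranking, hit_ratio_at_k(ranking, "film_b"), ndcg_at_k(ranking, "film_b"))
    # ['film_b', 'film_c', 'film_a'] 1.0 1.0
```

In the usage example, the detector assigns a high bias probability to the instant preference, so the general preference dominates and the item the user actually favors is ranked first.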
