Chunli Peng

Guided Combinatorial Algorithms for Submodular Maximization

May 08, 2024

Abstract: For constrained, not necessarily monotone submodular maximization, guiding the measured continuous greedy algorithm with a local search algorithm currently obtains the state-of-the-art approximation factor of 0.401 \citep{buchbinder2023constrained}. These algorithms rely upon the multilinear extension and the Lovász extension of a submodular set function. However, the state-of-the-art approximation factor of combinatorial algorithms has remained $1/e \approx 0.367$ \citep{buchbinder2014submodular}. In this work, we develop combinatorial analogues of the guided measured continuous greedy algorithm and obtain an approximation ratio of $0.385$ in $\mathcal{O}(kn)$ queries to the submodular set function for a size constraint, and $0.305$ for a general matroid constraint. Further, we derandomize these algorithms, maintaining the same ratio and asymptotic time complexity. Finally, we develop a deterministic, nearly linear time algorithm with ratio $0.377$.
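For context, the $1/e$ combinatorial baseline cited above is commonly associated with the random greedy algorithm for a size constraint. The sketch below is an illustration of that baseline only, not the guided algorithm of this paper; the function name `random_greedy`, its parameters, and the toy directed-cut objective are assumptions made for the example. Each of the $k$ rounds evaluates the marginal gain of every remaining element, so the query count is on the order of $kn$.

```python
import random

def random_greedy(ground_set, f, k, rng=None):
    """Illustrative random greedy for max f(S) subject to |S| <= k.

    f is assumed to be a non-negative submodular set function that
    accepts a Python set. Names here are hypothetical.
    """
    rng = rng or random.Random()
    S = set()
    for _ in range(k):
        base = f(S)
        # Marginal gain of every element not yet selected (about n queries).
        gains = [(f(S | {e}) - base, e) for e in ground_set if e not in S]
        gains.sort(key=lambda t: t[0], reverse=True)
        # Keep the k best candidates; non-positive gains act as dummy
        # elements that contribute nothing when drawn.
        top = [e for g, e in gains[:k] if g > 0]
        idx = rng.randrange(k)  # uniform choice among k slots
        if idx < len(top):
            S.add(top[idx])
    return S

# Toy non-monotone submodular objective: directed cut of a small graph.
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 0)]

def cut(S):
    return sum(1 for u, v in edges if u in S and v not in S)

solution = random_greedy(range(4), cut, k=2, rng=random.Random(0))
print(solution, cut(solution))
```

Padding the candidate list with zero-gain dummy slots is what lets the algorithm decline to add an element in a round, which matters when the objective is not monotone.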

ReWiTe: Realistic Wide-angle and Telephoto Dual Camera Fusion Dataset via Beam Splitter Camera Rig

Apr 16, 2024

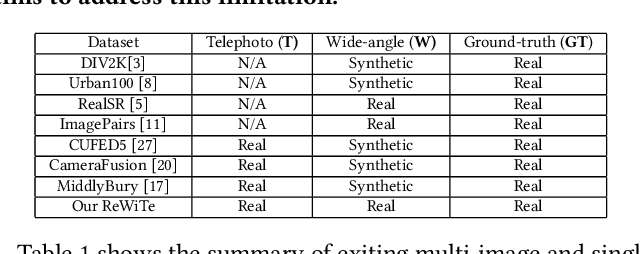

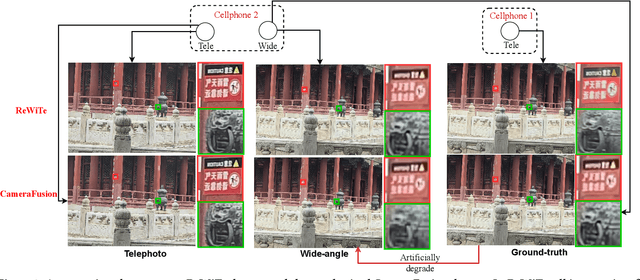

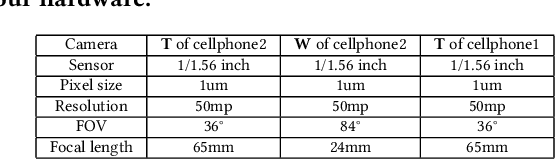

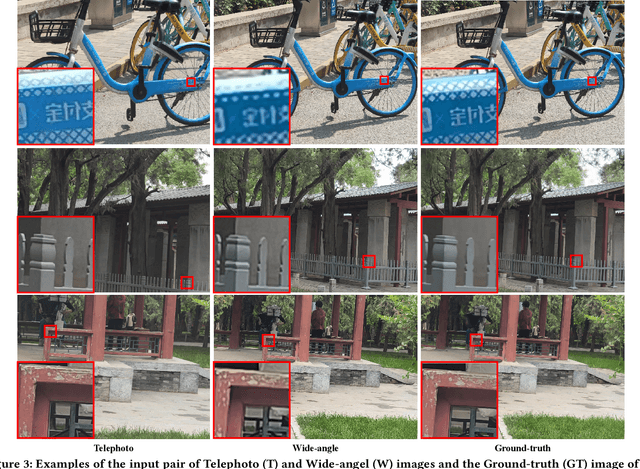

Abstract: The fusion of images from dual camera systems featuring a wide-angle and a telephoto camera has recently become a hot research topic. By integrating simultaneously captured wide-angle and telephoto images from these systems, the resulting fused image achieves a wide field of view (FOV) coupled with high-definition quality. Existing approaches are mostly deep learning methods and predominantly rely on supervised learning, where the training dataset plays a pivotal role. However, current datasets typically adopt a data synthesis approach to generate input pairs of wide-angle and telephoto images alongside ground-truth images. Notably, the wide-angle inputs are synthesized rather than captured using real wide-angle cameras, and the ground-truth image is captured by a wide-angle camera whose quality is substantially lower than that of the input telephoto images captured by telephoto cameras. To address these limitations, we introduce a novel hardware setup utilizing a beam splitter to simultaneously capture three images, i.e., the input pair and the ground-truth image, from two authentic cellphones equipped with wide-angle and telephoto dual cameras. Specifically, the wide-angle and telephoto images captured by cellphone 2 serve as the input pair, while the telephoto image captured by cellphone 1, which is calibrated to match the optical path of the wide-angle image from cellphone 2, serves as the ground-truth image, maintaining quality on par with the input telephoto image. Experiments validate that our newly introduced dataset, named ReWiTe, significantly enhances the performance of various existing methods for real-world wide-angle and telephoto dual image fusion tasks.

View Transition based Dual Camera Image Fusion

Dec 18, 2023

Abstract: The dual camera system of wide-angle ($\bf{W}$) and telephoto ($\bf{T}$) cameras has been widely adopted by popular phones. In the overlap region, fusing the $\bf{W}$ and $\bf{T}$ images can generate a higher quality image. Related works perform pixel-level motion alignment or high-dimensional feature alignment of the $\bf{T}$ image to the view of the $\bf{W}$ image and then perform image/feature fusion, but the enhancement in the occlusion area is ill-posed and can hardly utilize data from $\bf{T}$ images. Our insight is to minimize the occlusion area and thus maximize the use of pixels from $\bf{T}$ images. Instead of insisting on placing the output in the $\bf{W}$ view, we propose a view transition method to transform both $\bf{W}$ and $\bf{T}$ images into a mixed view and then blend them into the output. The transformation ratio is kept small and not apparent to users, and the center area of the output, which has accumulated a sufficient amount of transformation, can directly use the contents from the $\bf{T}$ view to minimize occlusions. Experimental results show that, in comparison with the SOTA methods, the occlusion area is largely reduced by our method and thus more pixels of the $\bf{T}$ image can be used for improving the quality of the output image.
